Correction for Camera Roll in a Perspectively Distorted Image: Cases for 2 and 3 Point Perspectives

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Still Photography

Still Photography Soumik Mitra, Published by - Jharkhand Rai University Subject: STILL PHOTOGRAPHY Credits: 4 SYLLABUS Introduction to Photography Beginning of Photography; People who shaped up Photography. Camera; Lenses & Accessories - I What a Camera; Types of Camera; TLR; APS & Digital Cameras; Single-Lens Reflex Cameras. Camera; Lenses & Accessories - II Photographic Lenses; Using Different Lenses; Filters. Exposure & Light Understanding Exposure; Exposure in Practical Use. Photogram Introduction; Making Photogram. Darkroom Practice Introduction to Basic Printing; Photographic Papers; Chemicals for Printing. Suggested Readings: 1. Still Photography: the Problematic Model, Lew Thomas, Peter D'Agostino, NFS Press. 2. Images of Information: Still Photography in the Social Sciences, Jon Wagner. 3. Photographic Tools for Teachers: Still Photography, Roy A. Frye. Introduction to Photography STILL PHOTOGRAPHY Course Descriptions The department of Photography at the IFT offers a provocative and experimental curriculum in the setting of a large, diversified university. As one of the pioneer programs of graduate and undergraduate study in photography in India, we aim at providing the best to our students to help them relate practical studies in art & craft in a professional context. The Photography program combines the teaching of craft, history, and contemporary ideas with the critical examination of conventional forms of art making. The curriculum at IFT is designed to give students the technical training and aesthetic awareness to develop a strong individual expression as an artist. The faculty represents a broad range of interests and aesthetics, with course offerings often reflecting their individual passions and concerns. In this fundamental course, students will identify basic photographic tools and their intended purposes, including the proper use of various camera systems, light meters and film selection.

Minoru Photo Club the Art of Panning (By Natalie Norton ) What Is

Minoru Photo Club July 24, 2018 There are different ways of creating a sense of movement in photography. In Photography Basics, John Hedgecoe lists four ways: slow shutter, panning, diagonal movement and zoom movement. For today, I will share with you what I have researched on the art of panning using a slow shutter speed. There are many unwritten rules in photography. Keeping your camera steady is one of them. Well, for this artistic technique, you will need to forget all you have learned about the importance of shooting with a rock-solid camera. The creative result will be many cool motion-blur images. The Art Of Panning (by Natalie Norton) What is Panning? Panning is one of many artistic techniques for more creative photographs. It is the deliberate horizontal movement of a camera as it scans a moving subject. The camera is swung steadily to follow a passing subject, which can keep a moving image in one place on the film. Panning is another effective way of instilling a sense of motion in a picture with a moving subject. The result is a fairly sharp subject set against a blurred and streaked background. This gives the shot a real feeling of motion, speed and action. SOME TIPS & GUIDELINES: Subject/What should I Photograph? – since you want to create a sense of motion, your obvious subject choices include cars, racing cars, joggers, cyclists, etc. But do try this technique when capturing pets, horses, people running or even someone on a swing.

Video Tripod Head

Thank you for choosing Magnus. Congratulations on your purchase of the VPH-20 Video Pan Head by Magnus. All Magnus Video Heads are designed to balance features professionals want with the affordability they need. They’re durable enough to provide many years of trouble-free service and enjoyment. Please carefully read these instructions before setting up and using your Video Pan Head. VPH-20 Box Contents • VPH-20 Video Pan Head • 3/8” and ¼”-20 reducing bushing • Owner’s manual • Quick-release plate Key Features Tilt-Tension Adjustment Knob Tilt Lock One (1) year limited warranty This MAGNUS product is warranted to the original purchaser to be free from defects in materials and workmanship under normal consumer use for a period of one (1) year from the original purchase date or thirty (30) days after replacement, whichever occurs later. The warranty provider’s responsibility with respect to this limited warranty shall be limited solely to repair or replacement, at the provider’s discretion, of any product that fails during normal use of this product in its intended manner and in its intended environment. Inoperability of the product or part(s) shall be determined by the warranty provider. If the product has been discontinued, the warranty provider reserves the right to replace it with a model of equivalent quality and function. This warranty does not cover damage or defect caused by misuse, neglect, accident, alteration, abuse, improper installation or maintenance. EXCEPT AS PROVIDED HEREIN, THE WARRANTY PROVIDER MAKES NEITHER ANY EXPRESS WARRANTIES NOR ANY IMPLIED WARRANTIES, INCLUDING BUT NOT LIMITED TO ANY IMPLIED WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE.

Special Techniques to Make Your Image Different

Special techniques to make your image different 1. Timelapse in one image During a photo tour in Yunnan province, China, we went to shoot at a busy local market. I, however, like to experiment with something new, presenting the hustle and bustle of the market photographically in a unique way: a time-lapse compressed into one image. To achieve the result, two approaches came to mind: Option 1. Using the in-camera Multiple exposure mode: Nikon cameras have had multiple exposure functions for a long time. After years of being ignored, the feature finally came to Canon cameras starting with the 5D III, which I happen to use. This one was shot using "Average" mode in multiple exposure. I set a total of 9 exposures, taking one shot every couple of seconds. The exposure is automatically reduced, proportional to the number of shots taken, to avoid over-exposing common areas or overlapping bright areas. Where overlapping areas do occur, the final brightness level will be the same as it would have been in a single, properly exposed shot, and any non-overlapping areas will be darkened. The final combined image is automatically generated by the camera. The pro is that this technique is simple and fast, so you can experiment a lot with it. The con, however, is that you have no control over the resulting image at all. The camera has full control here. Option 2. Taking a lot of shots and manipulating them in post processing: This is the hard way, but you have absolute control of the final image. It is, however, time-consuming and requires lots of fiddling with Photoshop.

Visual Homing with a Pan-Tilt Based Stereo Camera Paramesh Nirmal Fordham University

Fordham University Masthead Logo DigitalResearch@Fordham Faculty Publications Robotics and Computer Vision Laboratory 2-2013 Visual homing with a pan-tilt based stereo camera Paramesh Nirmal Fordham University Damian M. Lyons Fordham University Follow this and additional works at: https://fordham.bepress.com/frcv_facultypubs Part of the Robotics Commons Recommended Citation Nirmal, Paramesh and Lyons, Damian M., "Visual homing with a pan-tilt based stereo camera" (2013). Faculty Publications. 15. https://fordham.bepress.com/frcv_facultypubs/15 This Article is brought to you for free and open access by the Robotics and Computer Vision Laboratory at DigitalResearch@Fordham. It has been accepted for inclusion in Faculty Publications by an authorized administrator of DigitalResearch@Fordham. For more information, please contact [email protected]. Visual homing with a pan-tilt based stereo camera Paramesh Nirmal and Damian M. Lyons Department of Computer Science, Fordham University, Bronx, NY 10458 ABSTRACT Visual homing is a navigation method based on comparing a stored image of the goal location and the current image (current view) to determine how to navigate to the goal location. It is theorized that insects, such as ants and bees, employ visual homing methods to return to their nest [1]. Visual homing has been applied to autonomous robot platforms using two main approaches: holistic and feature-based. Both methods aim at determining distance and direction to the goal location. Navigational algorithms using Scale Invariant Feature Transforms (SIFT) have gained great popularity in the recent years due to the robustness of the feature operator. Churchill and Vardy [2] have developed a visual homing method using scale change information (Homing in Scale Space, HiSS) from SIFT. -

E-PL7 Instruction Manual

DIGITAL CAMERA Instruction Manual Table of Contents Quick task index 1. Preparing the camera and flow of operations 2. Shooting 3. Viewing photographs and movies 4. Basic operations 5. Using shooting options 6. Menu functions 7. Connecting the camera to a smartphone 8. Connecting the camera to a computer and a printer 9. Battery, battery charger, and card 10. Interchangeable lenses 11. Using separately sold accessories 12. Information 13. SAFETY PRECAUTIONS Thank you for purchasing an Olympus digital camera. Before you start to use your new camera, please read these instructions carefully to enjoy optimum performance and a longer service life. Keep this manual in a safe place for future reference. We recommend that you take test shots to get accustomed to your camera before taking important photographs. The screen and camera illustrations shown in this manual were produced during the development stages and may differ from the actual product. If there are additions and/or modifications of functions due to firmware update for the camera, the contents will differ. For the latest information, please visit the Olympus website. This notice concerns the supplied flash unit and is chiefly directed to users in North America. Information for Your Safety IMPORTANT SAFETY INSTRUCTIONS When using your photographic equipment, basic safety precautions should always be followed, including the following: • Read and understand all instructions before using. • Close supervision is necessary when any flash is used by or near children. Do not leave flash unattended while in use. • Care must be taken as burns can occur from touching hot parts.

Accurate Control of a Pan-Tilt System Based on Parameterization of Rotational Motion

EUROGRAPHICS 2018 / O. Diamanti and A. Vaxman Short Paper Accurate control of a pan-tilt system based on parameterization of rotational motion JungHyun Byun, SeungHo Chae, and TackDon Han Media System Lab, Department of Computer Science, Yonsei University, Republic of Korea Abstract A pan-tilt camera system has been adopted by a variety of fields since it can cover a wide range of region compared to a single fixated camera setup. Yet many studies rely on factory-assembled and calibrated platforms and assume an ideal rotation where rotation axes are perfectly aligned with the optical axis of the local camera. However, in a user-created setup where a pan-tilting mechanism is arbitrarily assembled, the kinematic configurations may be inaccurate or unknown, violating ideal rotation. These discrepancies in the model with the real physics result in erroneous servo manipulation of the pan-tilting system. In this paper, we propose an accurate control mechanism for arbitrarily-assembled pan-tilt camera systems. The proposed method formulates pan-tilt rotations as motion along great circle trajectories and calibrates its model parameters, such as positions and vectors of rotation axes, in 3D space. Then, one can accurately servo pan-tilt rotations with pose estimation from inverse kinematics of their transformation. The comparative experiment demonstrates out-performance of the proposed method, in terms of accurately localizing target points in world coordinates, after being rotated from their captured camera frames. CCS Concepts • Computing methodologies → Computer vision; Vision for robotics; Camera calibration; Active vision; 1. Introduction fabricated and assembled in a do-it-yourself or arbitrary manner.

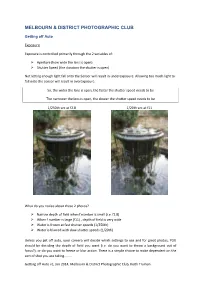

Getting Off the Auto Setting on Your Camera

MELBOURN & DISTRICT PHOTOGRAPHIC CLUB Getting off Auto Exposure Exposure is controlled primarily through 2 variables: Aperture (how wide the lens is open) and Shutter Speed (the duration the shutter is open). Not letting enough light fall onto the sensor will result in underexposure. Allowing too much light to fall onto the sensor will result in overexposure. So, the wider the lens is open, the faster the shutter speed needs to be. The narrower the lens is open, the slower the shutter speed needs to be. 1/250th sec at f2.8 1/20th sec at f11 What do you notice about these 2 photos? Depth of field is narrow when the f number is small (i.e. f2.8). When the f number is large (f11), depth of field is very wide. Water is frozen at fast shutter speeds (1/250th). Water is blurred with slow shutter speeds (1/20th). Unless you get off auto, your camera will decide which settings to use, and for great photos, YOU should be deciding the depth of field you want (i.e. do you want to throw a background out of focus?), or whether you want to freeze or blur action. There is a simple choice to make, dependent on the sort of shot you are taking. Getting off Auto v1, Jan 2014, Melbourn & District Photographic Club, Keith Truman Is controlling depth of field more important than shutter speed (if it is, you should use Aperture Priority)? Is freezing or blurring action more important than controlling depth of field (if it is, you should use Shutter Priority)?

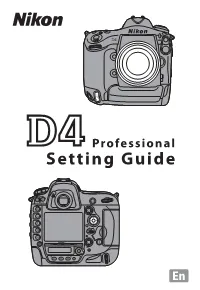

Nikon D4 Technical Guide

Professional Setting Guide Table of Contents Taking Photographs: Improving Camera Response; Settings by Subject; Matching Settings to Your Goal • Reducing Camera Blur: Vibration Reduction • Preserving Natural Contrast: Active D-Lighting • Quick Setting Selection: Shooting Menu Banks • Finding Controls in the Dark: Button Backlights • Reducing Noise at High ISO Sensitivities • Reducing Noise and Blur: Auto ISO Sensitivity Control • Reducing Shutter Noise: Quiet and Silent Release • Optimizing White Balance • Varying White Balance: White Balance Bracketing • Copying White Balance from a Photograph • Creating a Multiple Exposure • Choosing a Memory Card for Playback • Copying Pictures Between Memory Cards • Copying Settings to Other D4 Cameras • Keeping the Camera Level: Virtual Horizon • Composing Photographs: The Framing Grid • Resizing Photographs for Upload: Resize; Autofocus Tips • Focusing with the AF-ON

Panning and Zooming High-Resolution Panoramas in Virtual Reality Devices Huiwen Chang Michael F

Panning and Zooming High-Resolution Panoramas in Virtual Reality Devices Huiwen Chang (Facebook and Princeton University), Michael F. Cohen (Facebook) Figure 1: We explore four modes for panning high-resolution panoramas in virtual reality devices. Left to right: normal mode, circle mode, transparent circle mode, and zoom circle mode. The latter two provided equally successful interfaces. ABSTRACT Two recent innovations in immersive media include the ability to capture very high resolution panoramic imagery, and the rise of consumer level heads-up displays for virtual reality. Unfortunately, zooming to examine the high resolution in VR breaks the basic contract with the user, that the FOV of the visual field matches the FOV of the imagery. In this paper, we study methods to overcome this restriction to allow high resolution panoramic imagery to be able to be explored in VR. We introduce and test new interface modalities for exploring high resolution panoramic imagery in VR. In particular, we ... clear details of nature or cities. Artists are creating very large format panoramas combining high resolution imagery with AI-based Deep Dream to create new artistic and impressionistic worlds [1]. Scientists use them to examine high resolution microscopic scans and for environmental examination [11]. All interactive mapping applications are essentially pan/zoom interfaces on very large images. The viewer’s ability to explore the panoramic imagery transforms the once static relationship between the viewer and the subject. The viewer is no longer a passive observer, but now determines which parts and what details of the image to view by panning and zooming the imagery.

The Essential Reference Guide for Filmmakers

THE ESSENTIAL REFERENCE GUIDE FOR FILMMAKERS IDEAS AND TECHNOLOGY AN INTRODUCTION TO THE ESSENTIAL REFERENCE GUIDE FOR FILMMAKERS Good films, those that effectively communicate the desired message, are the result of an almost magical blend of ideas and technological ingredients. And with an understanding of the tools and techniques available to the filmmaker, you can truly realize your vision. The “idea” ingredient is well documented, for beginner and professional alike. Books covering virtually all aspects of the aesthetics and mechanics of filmmaking abound: how to choose an appropriate film style, the importance of sound, how to write an effective film script, the basic elements of visual continuity, etc. Although equally important, becoming fluent with the technological aspects of filmmaking can be intimidating. With that in mind, we have produced this book, The Essential Reference Guide for Filmmakers. In it you will find technical information (about light meters, cameras, light, film selection, postproduction, and workflows) in an easy-to-read-and-apply format. Ours is a business that’s more than 100 years old, and from the beginning, Kodak has recognized that cinema is a form of artistic expression. Today’s cinematographers have at their disposal a variety of tools to assist them in manipulating and fine-tuning their images. And with all the changes taking place in film, digital, and hybrid technologies, you are involved with the entertainment industry at one of its most dynamic times. As you enter the exciting world of cinematography, remember that Kodak is an absolute treasure trove of information, and we are here to assist you in your journey.

Camera Movements

Camera Movements Eco-film Carousel CAMERA MOVEMENTS: PAN Pan: • Moving the camera lens to one side or another. Look to your left, then look to your right - that's panning. • It can reveal parts of the scenery not seen previously IMAGE SOURCE: https://www.google.ca/url?sa=i&rct=j&q=&esrc=s&source=images&cd=&ved=0ahUKEwifh43TvafLAhWHtIMKHQTVALsQjxwIAw&url=http%3A%2F%2Fsketch update.blogspot.com.es%2F2011_03_01_archive.html&psig=AFQjCNFKcLCPY9g2NIMzX98h7F4T4JQVaA&ust=1457196366225384 CAMERA MOVEMENTS: DOLLY Dolly: • Motion towards or motion from. • Dolly-in means step towards the subject with the camera • Dolly-out means to step backwards with the camera, keeping the zoom the same. • The direction of the dolly draws different types of attention from the viewer. When the dolly moves toward the subject, the viewer’s interest is increased. • Zooming the camera changes the focal length of the lens, which can introduce wide-angle distortion IMAGE SOURCE: https://www.google.ca/url?sa=i&rct=j&q=&esrc=s&source=images&cd=&ved=0ahUKEwifh43TvafLAhWHtIMKHQTVALsQjxwIAw&url=http%3A%2F%2Fsketch update.blogspot.com.es%2F2011_03_01_archive.html&psig=AFQjCNFKcLCPY9g2NIMzX98h7F4T4JQVaA&ust=1457196366225384 CAMERA MOVEMENTS: ARC Arc: • An arc shot is the movement of the camera in a full or semi-circle around an object or character. • An arc shot is used to add drama to a film sequence and increases the intensity of the narrative. • They are known to be greatly effective when filming a moving object, although a complicated shot, it holds the audience's attention. IMAGE SOURCE: http://chammondg321.blogspot.ca/2012/10/camera-movements.html CAMERA MOVEMENTS: ZOOM Zoom: • Zooming involves changing the focal length of the lens to make the subject appear closer or further away in the frame.