In-Datacenter Performance Analysis of a Tensor Processing Unit

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril

Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. Michael Matheny, Sonoo Thadaney Israni, Mahnoor Ahmed, and Danielle Whicher, Editors. WASHINGTON, DC. NAM.EDU. PREPUBLICATION COPY - Uncorrected Proofs. NATIONAL ACADEMY OF MEDICINE • 500 Fifth Street, NW • WASHINGTON, DC 20001. NOTICE: This publication has undergone peer review according to procedures established by the National Academy of Medicine (NAM). Publication by the NAM signifies that it is the product of a carefully considered process and is a contribution worthy of public attention, but does not constitute endorsement of conclusions and recommendations by the NAM. The views presented in this publication are those of individual contributors and do not represent formal consensus positions of the authors’ organizations; the NAM; or the National Academies of Sciences, Engineering, and Medicine. Library of Congress Cataloging-in-Publication Data to Come. Copyright 2019 by the National Academy of Sciences. All rights reserved. Printed in the United States of America. Suggested citation: Matheny, M., S. Thadaney Israni, M. Ahmed, and D. Whicher, Editors. 2019. Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. NAM Special Publication. Washington, DC: National Academy of Medicine. “Knowing is not enough; we must apply. Willing is not enough; we must do.” --GOETHE. ABOUT THE NATIONAL ACADEMY OF MEDICINE: The National Academy of Medicine is one of three Academies constituting the National Academies of Sciences, Engineering, and Medicine (the National Academies). The National Academies provide independent, objective analysis and advice to the nation and conduct other activities to solve complex problems and inform public policy decisions.

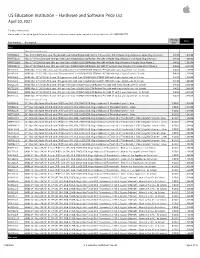

Apple US Education Price List

US Education Institution – Hardware and Software Price List, April 30, 2021. For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775.

iMac: iMac with Intel processor

| Part Number | Description | Pricing Date | Price |
|---|---|---|---|
| MHK03LL/A | iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 | 8/4/20 | 1,049.00 |
| MXWT2LL/A | iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 | 8/4/20 | 1,699.00 |
| MXWU2LL/A | iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 | 8/4/20 | 1,899.00 |
| MXWV2LL/A | iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 | 8/4/20 | 2,099.00 |
| BR332LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools | 8/4/20 | 1,168.00 |
| BR342LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools | 8/4/20 | 1,218.00 |
| BR2P2LL/A | BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 3-year AppleCare+ for Schools | 8/4/20 | 1,818.00 |
| BR2S2LL/A | BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 4-year AppleCare+ for Schools | 8/4/20 | 1,868.00 |

BR2Q2LL/A BNDL iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB

AI Computer Wraps up 4-1 Victory Against Human Champion: Nature Reports from AlphaGo's Victory in Seoul

The Go Files: AI computer wraps up 4-1 victory against human champion Nature reports from AlphaGo's victory in Seoul. Tanguy Chouard 15 March 2016 SEOUL, SOUTH KOREA Google DeepMind Lee Sedol, who has lost 4-1 to AlphaGo. Tanguy Chouard, an editor with Nature, saw Google-DeepMind’s AI system AlphaGo defeat a human professional for the first time last year at the ancient board game Go. This week, he is watching top professional Lee Sedol take on AlphaGo, in Seoul, for a $1 million prize. It’s all over at the Four Seasons Hotel in Seoul, where this morning AlphaGo wrapped up a 4-1 victory over Lee Sedol — incidentally, earning itself and its creators an honorary '9-dan professional' degree from the Korean Baduk Association. After winning the first three games, Google-DeepMind's computer looked impregnable. But the last two games may have revealed some weaknesses in its makeup. Game four totally changed the Go world’s view on AlphaGo’s dominance because it made it clear that the computer can 'bug' — or at least play very poor moves when on the losing side. It was obvious that Lee felt under much less pressure than in game three. And he adopted a different style, one based on taking large amounts of territory early on rather than immediately going for ‘street fighting’ such as making threats to capture stones. This style – called ‘amashi’ – seems to have paid off, because on move 78, Lee produced a play that somehow slipped under AlphaGo’s radar. David Silver, a scientist at DeepMind who's been leading the development of AlphaGo, said the program estimated its probability as 1 in 10,000. -

Holiday Catalog

Brilliant for what’s next. With the power to achieve anything.

AirPods Pro. AppleCare+ Protection Plan†* $29

Key Features
• Active Noise Cancellation for immersive sound
• Transparency mode for hearing and connecting with the world around you
• Three sizes of soft, tapered silicone tips for a customizable fit
• Sweat and water resistant [1]
• Adaptive EQ automatically tunes music to the shape of your ear
• Easy setup for all your Apple devices [2]
• Quick access to Siri by saying “Hey Siri” [3]
• The Wireless Charging Case delivers more than 24 hours of battery life [4]

AirPods Pro. Magic amplified. Noise nullified. Active Noise Cancellation for immersive sound. Transparency mode for hearing what’s happening around you. Sweat and water resistant. [1] And a more customizable fit for all-day comfort.

AirPods® with Charging Case: $159
AirPods with Wireless Charging Case: $199
AirPods Pro with Wireless Charging Case: $249

[1] AirPods Pro are sweat and water resistant for non-water sports and exercise and are IPX4 rated. Sweat and water resistance are not permanent conditions. The charging case is not sweat or water resistant.
[2] Requires an iCloud account and macOS 10.14.4, iOS 12.2, iPadOS, watchOS 5.2, or tvOS 13.2 or later.
[3] Siri may not be available in all languages or in all areas, and features may vary by area.
[4] Battery life varies by use and configuration. See apple.com/batteries for details.

Our business is part of a select group of independent Apple® Resellers and Service Providers who have a strong commitment to Apple’s Mac® and iOS platforms and have met or exceeded Apple’s highest training and sales certifications.

Apple Accessories & Prices

Apple Accessories & Prices. iMac, Mac Mini and Accessories.

Input Devices
• Apple Magic Mouse 2 (APPX333) £65.50
• Magic Mouse 2 - Space Grey (APPX015) £82.50
• Magic Trackpad 2 (APPX335) £107.50
• Magic Trackpad 2 - Space Grey (APPX016) £124.00
• Magic Keyboard (APPK005) £79.95
• Magic Keyboard - Numeric (APPK006) £105.00
• Magic Keyboard - Numeric - Space Grey (APPK007) £124.00

Adapters and Network
• Mini DisplayPort to DVI Adapter (APPX117) £23.95
• Mini DisplayPort to VGA Adapter (APPX142) £23.95
• USB-C to USB-A (APPX281) £14.95
• USB-C to Digital AV (APPX099) £62.50

Misc. Accessories
• USB Retina Superdrive (APPX228) £65.50
• HomePod Mini Smart Speakers - Available in Space Grey and Silver (APPX137/138) £82.50
• HomePod Smart Speakers - Available in Space Grey and Silver (APPX013/014) £232.50

Many other adapters and accessories also available!

Guernsey Computers Ltd, 33 Commercial Arcade, St. Peter Port. Tel 01481-728738. E. & O. E. 18th August 2021.

Mac Products & Specifications
• Mac Mini - i5 2.6GHz (APPC022) £915.00: 3.0GHz 6-Core i5 Processor w/ 9MB shared L3 cache - Turbo Boost up to 4.1GHz, 8GB DDR4 RAM, 256GB Solid State Drive, Intel UHD Graphics 630, 802.11ac Wi-Fi (802.11 a/b/g/n compatible) & Bluetooth 5.0
• iMac 27” i5 3.1GHz - 5K Retina Display (APPC028) £1499.00: 3.1GHz 6-Core i5 Processor (Turbo Boost up to 4.5GHz), 8GB DDR4 RAM, 256GB Solid State Drive, Radeon Pro 5300 Graphics (4GB), 802.11ac Wi-Fi (802.11 a/b/g/n compatible) & Bluetooth 5.0
• Mac Mini - M1 8-Core Processor (APPC031) £582.00
• iMac 27” i5 3.3GHz - 5K Retina Display

Fault Tolerance and Re-Training Analysis on Neural Networks

FAULT TOLERANCE AND RE-TRAINING ANALYSIS ON NEURAL NETWORKS by ABHINAV KURIAN GEORGE B.Tech Electronics and Communication Engineering Amrita Vishwa Vidhyapeetham, Kerala, 2012 A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science, Computer Engineering, College of Engineering and Applied Science, University of Cincinnati, Ohio 2019 Thesis Committee: Chair: Wen-Ben Jone, Ph.D. Member: Carla Purdy, Ph.D. Member: Ranganadha Vemuri, Ph.D. ABSTRACT In the current age of big data, artificial intelligence and machine learning technologies have gained much popularity. Due to the increasing demand for such applications, neural networks are being targeted toward hardware solutions. Owing to the shrinking feature size, number of physical defects are on the rise. These growing number of defects are preventing designers from realizing the full potential of the on-chip design. The challenge now is not only to find solutions that balance high-performance and energy-efficiency but also, to achieve fault-tolerance of a computational model. Neural computing, due to its inherent fault tolerant capabilities, can provide promising solutions to this issue. The primary focus of this thesis is to gain deeper understanding of fault tolerance in neural network hardware. As a part of this work, we present a comprehensive analysis of fault tolerance by exploring effects of faults on popular neural models: multi-layer perceptron model and convolution neural network. We built the models based on conventional 64-bit floating point representation. In addition to this, we also explore the recent 8-bit integer quantized representation. A fault injector model is designed to inject stuck-at faults at random locations in the network. -

Abstractions for Programming Graphics Processors in High-Level Programming Languages

Abstracties voor het programmeren van grafische processoren in hoogniveau-programmeertalen. Abstractions for Programming Graphics Processors in High-Level Programming Languages. Tim Besard. Supervisor: prof. dr. ir. B. De Sutter. Dissertation submitted to obtain the degree of Doctor of Engineering: Computer Science. Department of Electronics and Information Systems. Chair: prof. dr. ir. K. De Bosschere. Faculty of Engineering and Architecture. Academic year 2018 - 2019. ISBN 978-94-6355-244-8. NUR 980. Legal deposit: D/2019/10.500/52.

Examination Committee: Prof. Filip De Turck, chair, Department of Information Technology, Faculty of Engineering and Architecture, Ghent University. Prof. Koen De Bosschere, secretary, Department of Electronics and Information Systems, Faculty of Engineering and Architecture, Ghent University. Prof. Bjorn De Sutter, supervisor, Department of Electronics and Information Systems, Faculty of Engineering and Architecture, Ghent University. Prof. Jutho Haegeman, Department of Physics and Astronomy, Faculty of Sciences, Ghent University. Prof. Jan Lemeire, Department of Electronics and Informatics, Faculty of Engineering, Vrije Universiteit Brussel. Prof. Christophe Dubach, School of Informatics, College of Science & Engineering, The University of Edinburgh. Prof. Alan Edelman, Computer Science & Artificial Intelligence Laboratory, Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology.

Acknowledgements: I did not really know what I was getting into when, in 2012, I went for an interview about a PhD in the catacombs of the Technicum. Whether I had ever worked with LLVM. Many years have passed since then, I now work in an office that does get daylight, and the end of this study is actually in sight. About time, I am told, after 7 years.

P1360R0: Towards Machine Learning for C++: Study Group 19

P1360R0: Towards Machine Learning for C++: Study Group 19. Date: 2018-11-26 (Post-SAN mailing): 10 AM ET. Project: ISO JTC1/SC22/WG21: Programming Language C++. Audience: SG19, WG21. Authors: Michael Wong (Codeplay), Vincent Reverdy (University of Illinois at Urbana-Champaign, Paris Observatory), Robert Douglas (Epsilon), Emad Barsoum (Microsoft), Sarthak Pati (University of Pennsylvania), Peter Goldsborough (Facebook), Franke Seide (MS). Contributors Emails: [email protected] Reply to: [email protected]

Contents: Introduction; Motivation; Scope; Meeting frequency and means; Outreach to ML/AI/Data Science community; Liaison with other groups; Future meetings; Conclusion; Acknowledgements; References.

Introduction: This paper proposes a WG21 SG for Machine Learning with the goal of:
● Making Machine Learning a first-class citizen in ISO C++
It is the collaboration of a number of key industry, academic, and research groups, through several connections in CPPCON BoF[reference], LLVM 2018 discussions, and C++ San Diego meeting. The intention is to support such an SG, and describe the scope of such an SG. This is in terms of potential work resulting in papers submitted for future C++ Standards, or collaboration with other SGs. We will also propose ongoing teleconferences, meeting frequency and locations, as well as outreach to ML data scientists, conferences, and liaison with other Machine Learning groups such as at Khronos, and ISO. As of the SAN meeting, this group has been officially created as SG19, and we will begin teleconferences immediately, after the US Thanksgiving, and after NIPS.

Understanding & Generalizing AlphaGo Zero

Under review as a conference paper at ICLR 2019. UNDERSTANDING & GENERALIZING ALPHAGO ZERO. Anonymous authors. Paper under double-blind review. ABSTRACT: AlphaGo Zero (AGZ) (Silver et al., 2017b) introduced a new tabula rasa reinforcement learning algorithm that has achieved superhuman performance in the games of Go, Chess, and Shogi with no prior knowledge other than the rules of the game. This success naturally begs the question whether it is possible to develop similar high-performance reinforcement learning algorithms for generic sequential decision-making problems (beyond two-player games), using only the constraints of the environment as the “rules.” To address this challenge, we start by taking steps towards developing a formal understanding of AGZ. AGZ includes two key innovations: (1) it learns a policy (represented as a neural network) using supervised learning with cross-entropy loss from samples generated via Monte-Carlo Tree Search (MCTS); (2) it uses self-play to learn without training data. We argue that the self-play in AGZ corresponds to learning a Nash equilibrium for the two-player game; and the supervised learning with MCTS is attempting to learn the policy corresponding to the Nash equilibrium, by establishing a novel bound on the difference between the expected return achieved by two policies in terms of the expected KL divergence (cross-entropy) of their induced distributions. To extend AGZ to generic sequential decision-making problems, we introduce a robust MDP framework, in which the agent and nature effectively play a zero-sum game: the agent aims to take actions to maximize reward while nature seeks state transitions, subject to the constraints of that environment, that minimize the agent’s reward.

AI for Broadcasters, Future Has Already Begun…

AI for Broadcasters, future has already begun… By Dr. Veysel Binbay, Specialist Engineer @ ABU Technology & Innovation Department.

Dr. Veysel Binbay: I have been working as a Specialist Engineer at the ABU Technology and Innovation Department for one year. Before that, I worked at TRT (Turkish Radio and Television Corporation) for more than 20 years as a broadcast engineer, and also as an IT Director. I have wide experience in radio and TV broadcasting technologies, including IT systems. My experience includes designing, setting up, and operating analogue/hybrid/digital radio and TV broadcast systems. I also have experience with IT networks.

What is Artificial Intelligence?
• Programs that behave externally like humans?
• Programs that operate internally as humans do?
• Computational systems that behave intelligently?

Some Definitions Trials for AI:
The exciting new effort to make computers think … machines with minds, in the full literal sense. Haugeland, 1985
The study of mental faculties through the use of computational models. Charniak and McDermott, 1985
A field of study that seeks to explain and emulate intelligent behavior in terms of computational processes. Schalkoff, 1990
The study of how to make computers do things at which, at the moment, people are better. Rich & Knight, 1991

It’s obviously hard to define… (since we don’t have a commonly agreed definition of intelligence itself yet)… Let’s try to focus on the benefits, and solve the definition problem later…

Brief history of AI: The history of AI begins with the following article:

Efficiently Mastering the Game of NoGo with Deep Reinforcement

electronics. Article. Efficiently Mastering the Game of NoGo with Deep Reinforcement Learning Supported by Domain Knowledge. Yifan Gao 1,*,† and Lezhou Wu 2,†. 1 College of Medicine and Biological Information Engineering, Northeastern University, Liaoning 110819, China. 2 College of Information Science and Engineering, Northeastern University, Liaoning 110819, China; [email protected] * Correspondence: [email protected] † These authors contributed equally to this work.

Abstract: Computer games have been regarded as an important field of artificial intelligence (AI) for a long time. The AlphaZero structure has been successful in the game of Go, beating the top professional human players and becoming the baseline method in computer games. However, the AlphaZero training process requires tremendous computing resources, imposing additional difficulties for the AlphaZero-based AI. In this paper, we propose NoGoZero+ to improve the AlphaZero process and apply it to a game similar to Go, NoGo. NoGoZero+ employs several innovative features to improve training speed and performance, and most improvement strategies can be transferred to other nonspecific areas. This paper compares it with the original AlphaZero process, and results show that NoGoZero+ increases the training speed to about six times that of the original AlphaZero process. Moreover, in the experiment, our agent beat the original AlphaZero agent with a score of 81:19 after only being trained by 20,000 self-play games’ data (small in quantity compared with 120,000 self-play games’ data consumed by the original AlphaZero). The NoGo game program based on NoGoZero+ was the runner-up in the 2020 China Computer Game Championship (CCGC) with limited resources, defeating many AlphaZero-based programs.

Citation: Gao, Y.; Wu, L. Efficiently Mastering the Game of NoGo with Deep Reinforcement Learning

AI Chips: What They Are and Why They Matter

APRIL 2020. AI Chips: What They Are and Why They Matter. An AI Chips Reference. AUTHORS: Saif M. Khan, Alexander Mann. Center for Security and Emerging Technology.

Table of Contents: Introduction and Summary; The Laws of Chip Innovation; Transistor Shrinkage: Moore’s Law; Efficiency and Speed Improvements; Increasing Transistor Density Unlocks Improved Designs for Efficiency and Speed; Transistor Design is Reaching Fundamental Size Limits; The Slowing of Moore’s Law and the Decline of General-Purpose Chips; The Economies of Scale of General-Purpose Chips; Costs are Increasing Faster than the Semiconductor Market; The Semiconductor Industry’s Growth Rate is Unlikely to Increase; Chip Improvements as Moore’s Law Slows; Transistor Improvements Continue, but are Slowing; Improved Transistor Density Enables Specialization; The AI Chip Zoo; AI Chip Types; AI Chip Benchmarks; The Value of State-of-the-Art AI Chips; The Efficiency of State-of-the-Art AI Chips Translates into Cost-Effectiveness; Compute-Intensive AI Algorithms are Bottlenecked by Chip Costs and Speed; U.S. and Chinese AI Chips and Implications for National Competitiveness; Appendix A: Basics of Semiconductors and Chips; Appendix B: How AI Chips Work; Parallel Computing; Low-Precision Computing; Memory Optimization; Domain-Specific Languages; Appendix C: AI Chip Benchmarking Studies; Appendix D: Chip Economics Model; Chip Transistor Density, Design Costs, and Energy Costs; Foundry, Assembly, Test and Packaging Costs; Acknowledgments.

Introduction and Summary: Artificial intelligence will play an important role in national and international security in the years to come.