Learning of Cooperative Actions in Multi-Agent Systems: a Case Study of Pass Play in Soccer

Hitoshi Matsubara, Itsuki Noda and Kazuo Hiraki

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Influence of Physical Properties of Hockey Stick Blade on Shots

Influence of Physical Properties of Hockey Stick Blade on Shots Martin Plesch1, 2, Samuel Ján Plesník3, and Natália Ružičková4, * 1Institute of Physics, Slovak Academy of Sciences, Bratislava, Slovak Republic 2Institute of Computer Science, Masaryk University, Brno, Czech Republic 3Prvé Súkromné Gymnázium, Bratislava, Slovak Republic 4Institute of Science and Technology, Klosterneuburg, Austria *[email protected] ABSTRACT Parameters of a shot of an ice hockey player are mostly determined by the capabilities of the player and the physical properties of the stick used. To reach better performance, every hockey player also uses hockey tape to adjust the stick blade, which changes both the damping properties and the friction coefficient of the blade surface. To show the unexpected extent to which these physical properties of the blade affect the shot, we compared two types of blade cover: traditional tape (rolled onto the blade) and a blade sticker that adheres to both sides of the hockey stick blade. We analysed high-speed recordings of two types of shots by 13 players, ranging from amateurs and junior players to NHL superstars. The two covers differ greatly in friction coefficient and stiffness, which results in significantly (more than 99% confidence) greater speed, rotation and energy when using the stiffer and rougher sticker. 1 Introduction Ice hockey sticks have experienced a gradual development in recent decades. Progress in material science allowed the replacement of widely used wood by composite materials that nowadays represent the standard for hockey players worldwide. Broad usage of new materials like aluminium and composites has become an interesting topic for research as well.

Stick Making Guide

How To Make a Broomball Stick: An Introductory Guide Created by: Justin Hicks, Ben Hoover, Michael W. Johnson, Tyler Schwartz Introduction Broomball has become a tradition for many Michigan Tech students during the long winter months in Houghton, Michigan. The following guide provides step-by-step instructions on how to make a broomball stick for IRHC Broomball at Michigan Technological University. It is best suited for individuals who have never constructed a broomball stick before. It can also be used as an instructional resource for those teaching others how a broomball stick is constructed. The guide will lay out the general procedure for constructing a broomball stick. The guide will also discuss different styles of broomball sticks that can be made and the IRHC rules and regulations that pertain to the construction of broomball sticks. Remember that this guide is intended only for those individuals who are going to be competing in IRHC Broomball at Michigan Tech. Broom Diagram (Yes, this is a diagram of a broom!) This diagram points out some important parts of the broom, but more importantly, gives them a standard name that will be used throughout the rest of the guide. Diagram labels: Broom Handle, Metal Coil, Metal Ring, Broom Head, Strings, Bristles. IRHC Rules and Regulations Page 6 of the IRHC Broomball Rules and Details packet states the following: - Only the broom’s original handle may be attached to the original broom head (i.e. no homemade brooms). - Brooms must have a wooden handle. - No official Broomball brooms will be allowed (hard plastic brooms). - A minimum of six (6) inches of bristle must extend below the broom’s handle.

A Type System for Format Strings E

A Type System for Format Strings Konstantin Weitz, Gene Kim, Siwakorn Srisakaokul, Michael D. Ernst University of Washington, USA {weitzkon,genelkim,ping128,mernst}@cs.uw.edu ABSTRACT Most programming languages support format strings, but their use is error-prone. Using the wrong format string syntax, or passing the wrong number or type of arguments, leads to unintelligible text output, program crashes, or security vulnerabilities. This paper presents a type system that guarantees that calls to format string APIs will never fail. In Java, this means that the API will not throw exceptions. In C, this means that the API will not return negative values, corrupt memory, etc. We instantiated this type system for Java’s Formatter API, and evaluated it on 6 large and well-maintained open-source projects. Format string bugs are common in practice (our type system found 104 bugs), and the annotation burden on the user of our type system

    // Untested code (Hadoop)
    Resource r = ...
    format("Insufficient memory %d", r);

    // Unchecked input (FindBugs)
    String urlRewriteFormat = read();
    format(urlRewriteFormat, url);

    // User unaware log is a format routine (Daikon)
    log("Exception " + e);

    // Invalid syntax for Formatter API (ping-gcal)
    format("Unable to reach {0}", server);

Listing 1: Real-world code examples of common programmer mistakes that lead to format routine call failures.
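The failure modes named in this abstract (wrong argument type, wrong number of arguments, wrong format syntax) can be reproduced with the standard library alone. The following is a minimal sketch, assuming the `format` calls in the snippet behave like Java's `String.format`; the class name `FormatFailures` and the helper `tryFormat` are hypothetical names introduced for illustration and do not come from the paper.

```java
import java.util.IllegalFormatException;

public class FormatFailures {

    /**
     * Hypothetical helper: returns the formatted text, or the simple name of
     * the exception class if the format call fails at run time.
     */
    static String tryFormat(String fmt, Object... args) {
        try {
            return String.format(fmt, args);
        } catch (IllegalFormatException e) {
            // All java.util.Formatter failures used here extend IllegalFormatException.
            return e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        // Wrong argument type: %d requires an integral value, not an arbitrary object.
        System.out.println(tryFormat("Insufficient memory %d", new Object()));

        // Wrong number of arguments: the second %s has nothing to consume.
        System.out.println(tryFormat("%s and %s", "one"));

        // Wrong syntax: {0} is MessageFormat syntax, so the Formatter API treats it
        // as literal text and silently ignores the extra argument.
        System.out.println(tryFormat("Unable to reach {0}", "example.org"));

        // A well-typed call, for comparison.
        System.out.println(tryFormat("Insufficient memory %d", 42));
    }
}
```

The first two calls fail only at run time, and the third does not fail at all but produces misleading output, which is the kind of mistake a compile-time check on format strings is meant to catch.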

National Federation Track and Field Rules Committee Places a High Priority on Risk Minimization for Pole Vault Competitors

NFHS RULES CHANGES AFFECTING RISK (1982-2017) Baseball 1982 Batting helmets meeting NOCSAE Standard to be required in 1985. Slippery tape on bat handles is illegal. 1983 Any player warming up a pitcher at any location shall wear a protective cup and a mask with a throat protector. Casts, splints or braces must be padded and there shall be no exposed metal or any other hard material. Prosthesis that is not dangerous will be permitted. 1984 Any on-deck batter is required to wear a batting helmet. Protective devices attached to a batting helmet must be approved by the state association. Nontraditional playing equipment must be reviewed by the NFHS Baseball Rules Committee before it will be permitted. A player who maliciously runs into a fielder is to be ejected and shall also be called out. Metal cleats were prohibited. 1985 Devices or attachments that cause bat handles to become flush with the knob are illegal. Only bats may be used in loosening up. 1986 Exterior warning label is mandatory on batting helmets. Players in coaches’ boxes required to wear a head protector. Jewelry is prohibited. Medical-alert bracelets or medical-alert necklaces are legal if taped to the body. 1987 Bases with tapered edges are permitted. On-deck circles should be placed 37’ away from home plate if space permits. Otherwise, they should be placed a safe distance to the side and away from home plate. 1988 Bases designed to disengage their anchor systems for safety purposes are legal. 1989 Metal cleats are permitted. By state association adoption, a double first base is permitted.

2021-2022 Oregon School Activities Association

2021-2022 Oregon School Activities Association Handbook Peter Weber, Publisher / Editor Published by OREGON SCHOOL ACTIVITIES ASSOCIATION 25200 SW Parkway, Suite 1 Wilsonville, OR 97070 Phone: 503.682.6722 Fax: 503.682.0960 How to find information in the OSAA Handbook The first section of the OSAA Handbook includes a Table of Contents to the entire publication. The Cheerleading, Dance and Drill, Music and Speech Handbooks have been removed from this publication. These handbooks can be found on their respective activities pages of the OSAA website. Wording that has been changed from previous years is indicated by bold italic lettering. Linked references to other sections are shaded and Questions and Answers are shaded. OSAA Mission Statement The mission of the OSAA is to serve member schools by providing leadership and state coordination for the conduct of interscholastic activities, which will enrich the educational experiences of high school students. The OSAA will work to promote interscholastic activities that provide equitable participation opportunities, positive recognition and learning experiences to students, while enhancing the achievement of educational goals. Non-Discrimination Policy (Executive Board Policies, Revised July 2019) A. The Oregon School Activities Association does not discriminate on the basis of race, color, religion, sex, sexual orientation, national origin, marital status, age or disability in the performance of its authorized functions, and encourages its member schools, school personnel, participants and spectators to adopt and follow the same policy. B. A claim of discrimination against a member school shall be brought directly to the member school of concern. C. Any party that believes he/she has been subjected to an incident involving discrimination or discriminatory harassment at an OSAA sanctioned event may submit a written complaint through the online complaint process provided on the OSAA website (Complaint Form).

Harmonized Tariff Schedule of the United States (2020) Revision 23 Annotated for Statistical Reporting Purposes

Harmonized Tariff Schedule of the United States (2020) Revision 23 Annotated for Statistical Reporting Purposes CHAPTER 95 TOYS, GAMES AND SPORTS EQUIPMENT; PARTS AND ACCESSORIES THEREOF XX 95-1 Notes 1. This chapter does not cover: (a) Candles (heading 3406); (b) Fireworks or other pyrotechnic articles of heading 3604; (c) Yarns, monofilament, cords or gut or the like for fishing, cut to length but not made up into fishing lines, of chapter 39, heading 4206 or section XI; (d) Sports bags or other containers of heading 4202, 4303 or 4304; (e) Fancy dress of textiles, of chapter 61 or 62; sports clothing and special articles of apparel of textiles, of chapter 61 or 62, whether or not incorporating incidentally protective components such as pads or padding in the elbow, knee or groin areas (for example, fencing clothing or soccer goalkeeper jerseys); (f) Textile flags or bunting, or sails for boats, sailboards or land craft, of chapter 63; (g) Sports footwear (other than skating boots with ice or roller skates attached) of chapter 64, or sports headgear of chapter 65; (h) Walking-sticks, whips, riding-crops or the like (heading 6602), or parts thereof (heading 6603); (ij) Unmounted glass eyes for dolls or other toys, of heading 7018; (k) Parts of general use, as defined in note 2 to section XV, of base metal (section XV), or similar goods of plastics (chapter 39); (l) Bells, gongs or the like of heading 8306; (m) Pumps for liquids (heading 8413), filtering or purifying machinery and apparatus for liquids or gases (heading 8421), electric -

Capturing Interpersonal Coordination Processes in Association Football: from Dyads to Collectives

UNIVERSIDADE TÉCNICA DE LISBOA FACULDADE DE MOTRICIDADE HUMANA Capturing Interpersonal Coordination Processes in Association Football: from Dyads to Collectives Dissertation submitted in order to obtain the degree of Ph.D. in the branch of Human Kinetics, specialty in Sport Sciences Advisor: Professor Doutor Duarte Fernando Rosa Belo Patronilho Araújo Jury Chair: Rector of the Universidade Técnica de Lisboa Jury Members: Professor Doutor João Manuel Pardal Barreiros, Professor Doutor Carlos Lago Peñas, Professor Doutor António Jaime da Eira Sampaio, Professor Doutor Duarte Fernando Rosa Belo Patronilho Araújo, Professor Doutor António Paulo Pereira Ferreira RICARDO FILIPE LIMA DUARTE 2012 The work presented in this dissertation was supported by the Foundation for Science and Technology (Portugal), grant SFRH/BD/43994/2008 awarded to Ricardo Duarte. All the effort devoted to this work is dedicated to my ultimate little treasure, Madalena. Acknowledgements Throughout the development of this work, countless interpersonal relationships formed and decisively constrained its course of action. The fractal nature of the human relationship with time and space creates in me, at this moment, the need to go back in that time and that space and begin by acknowledging the family support that I always received and without which nothing would have been possible. To my Father, my Mother, my tireless sisters and my Godmother, who was always available, I will be eternally grateful. But these things are not thanked; they are shared and repaid. Thank you, Gé, for feeding my scientific curiosity and for giving me Lovelock's book on Gaia. I now realize that chapter 2 of this thesis began to be built many years ago.

A Review of Men's Underwear Styles and Its Various Fabrics

Latest Trends in Textile and Fashion Designing (Lupine Publishers, Open Access) DOI: 10.32474/LTTFD.2018.02.000134 ISSN: 2637-4595 Research Article A Review of Men’s Underwear Styles and Its Various Fabrics Dibyendu Bikash Datta* Department of Fashion Management Studies, National Institute of Fashion Technology, Kolkata, India Received: June 07, 2018; Published: June 13, 2018 *Corresponding author: Dibyendu Bikash Datta, Department of Fashion Management Studies, National Institute of Fashion Technology, Kolkata, India, Email: Abstract Underwear is an innerwear, normally worn next to the skin or under other clothing. The innerwear is also called second skin because it is in direct contact with the skin. Owing to the importance of this aspect, the fabric of the innerwear is the next important criterion that is considered while buying innerwear. Innovations and technology are constantly evolving for the men’s underwear industry. Underwear is essential for hygiene and some styles provide support in regulating the body’s shape. Social situations, weather conditions, and the apparel can affect the style of undergarment men wear. Different types of men’s underwear and the variations on each of the styles are widely available. The availability of products related to intimate apparels for men available online is expected to drive the growth of online shopping for underwear worldwide. This paper describes the various styles of underwear garments and the fabric they are made from. Keywords: Underwear; Innerwear; Comfort; Intimate; Briefs Introduction Men are no longer living in an age where their undies have to be [...] boxer shorts to the radically aesthetic men’s thongs, G-strings, and even C-string.

Water Polo Uniform Rules

2020‐22 NFHS Water Polo Uniform Rules Rule 1‐20 Cap Colors ART. 1 . Each team shall have two sets of caps manufactured for water polo, one white and the other a dark contrasting color. The caps of the home team may be any dark color that contrasts with the color of the ball and with the color of the numbers. Dark caps may not be yellow, gold, orange, powder blue, light gray, pale green or similar light colors. The caps of the home team field players may be divided into thirds or alternating‐colored panels, consisting of two colors, a solid dark color such as navy blue or black, and a solid color such as powder blue, medium blue, orange or green (this panel shall not be white). These caps must be clearly distinguishable from the caps of the goalkeepers (the caps of the field players may not be divided into red and white panels or into red and dark panels). The numbers on the caps shall be white, yellow or gold, clearly visible, of the correct size and placed on the darker portion of the cap. No logos or other designs may be in the panels with the cap numbers. The middle panel of the dark caps may contain, with no restriction on the size or number, the competitor’s name, school name, school nickname, and/or the school logo, provided the background remains a contrasting color. ART. 2 . Caps for the visiting team field players shall be solid white. The middle panel(s) (if in quarters) of these caps may contain, with no restriction on the size or number, competitor’s name, school name, school nickname, and/or the school logo, provided that the background remains white. -

Seattle Tennis Center Strings, Grips and Accessories

Amy Yee Tennis Center Strings, Grips and Accessories

STRING* (prices include string and labor)

Wilson:

NXT 16 & 17 (17 is thinner) gauge, $40: A multifilament string bonded with polyurethane for excellent playability and shock absorption. If you have arm or shoulder injuries this string is an excellent choice. Ideal for 2.5 and above.

Luxilon 4G 125, $40: A softer polyester than ALU Power for good playability and is very durable, but takes it a step further by holding tension longer. Popular among tour players. Ideal for 3.5 players and above.

AYTC’s Ultimate Duo, $42: A hybrid that gives players the advantages of a Luxilon 4G string paired with the playability of NXT. Ideal for 3.5 players and above.

Gamma:

TNT 16 & 17 (17 thinner) gauge, $35: A high performance string with controllable power, very good playability and durability. Pearl nylon coating gives it a crisp feel. Ideal for players 3.0 and above.

Green Moto, $32: This monofilament (solid core) has 7 shaped edges to allow massive spin potential for an affordable price. The string plays well at lower tensions as well. Ideal for 3.0 and above players. (Juniors will love the color.)

Revelation, $32: This live wire is an engineered and activated string that is designed to be easy on the arm, although has great elasticity to create a mini trampoline effect and thus a little more power. Ideal for players 3.0 and above.

iOsoft 16, $32: The freedom to attack the ball and not worry about over hitting. A top level control string. 3.0+

Synthetic with Wearguard 16 & 17 gauge, $27: A good all-around string w/wear resistant coating for long life.

BABOLAT, “The” Brand of Tennis Lovers

BABOLAT, “the” brand of tennis lovers “We are the brand of all tennis lovers all around the world!”: this is how Eric Babolat summarizes in a few words the activity of the eponymous company that he has been managing for more than 15 years. The tennis gear of the greatest players in the world has been invented, tested and developed behind the walls of the family company in the heart of Gerland, a neighborhood of Lyon, France. For nearly 140 years, Babolat has lived only for tennis. The true DNA of the brand, tennis is present in the genes of the Lyon-based company. A real success story, in the French way: a very unique style, a world-recognized expertise and innovation as a core value. As much as 90% of its revenue comes from tennis equipment, but Babolat also provides equipment for other racquet sports, such as badminton, padel tennis and fronton. Babolat, in a few key figures (June 2013): 339 employees worldwide (France: 227, USA: 55, Europe: 52, Others: 5) • Present in 155 countries, • Double-digit sales growth for more than 5 years, • 15,000 authorized dealers, • 20,000 tennis clubs in the world using Babolat, • and products present in tournaments all around the globe thanks to its iconic team of champions. 2013 Global Sales Revenue: 147 M€ (growth +6% vs. 2012) Breakdown by geography - European subsidiaries: 61.9 M€ - USA: 34.8 M€ - Other countries: 50.4 M€ Breakdown by products - Rackets: 48%, 70.6 M€ - Strings: 12%, 17.6 M€ - Balls: 8%, 11.4 M€ - Shoes/Apparel: 20%, 29.6 M€ - Other: 12%, 17.6 M€ Since 1875, and after many years focused on innovation, Babolat has become the reference brand for tennis equipment: strings, rackets, shoes, balls, apparel, accessories and bags, for players of all levels.

Nfhs Rules Changes Affecting Risk (1982-2018)

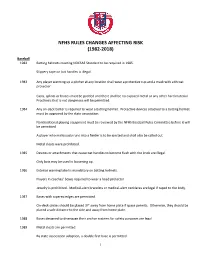

NFHS RULES CHANGES AFFECTING RISK (1982-2018) Baseball 1982 Batting helmets meeting NOCSAE Standard to be required in 1985. Slippery tape on bat handles is illegal. 1983 Any player warming up a pitcher at any location shall wear a protective cup and a mask with a throat protector. Casts, splints or braces must be padded and there shall be no exposed metal or any other hard material. Prosthesis that is not dangerous will be permitted. 1984 Any on-deck batter is required to wear a batting helmet. Protective devices attached to a batting helmet must be approved by the state association. Nontraditional playing equipment must be reviewed by the NFHS Baseball Rules Committee before it will be permitted. A player who maliciously runs into a fielder is to be ejected and shall also be called out. Metal cleats were prohibited. 1985 Devices or attachments that cause bat handles to become flush with the knob are illegal. Only bats may be used in loosening up. 1986 Exterior warning label is mandatory on batting helmets. Players in coaches’ boxes required to wear a head protector. Jewelry is prohibited. Medical-alert bracelets or medical-alert necklaces are legal if taped to the body. 1987 Bases with tapered edges are permitted. On-deck circles should be placed 37’ away from home plate if space permits. Otherwise, they should be placed a safe distance to the side and away from home plate. 1988 Bases designed to disengage their anchor systems for safety purposes are legal. 1989 Metal cleats are permitted. By state association adoption, a double first base is permitted.