Engineering Elegant Systems: Theory of Systems Engineering

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


2020 Aerospace Engineering Major

Major Map: Aerospace Engineering Bachelor of Science in Engineering (B.S.E.) College of Engineering and Computing Department of Mechanical Engineering Bulletin Year: 2020-2021 This course plan is a recommended sequence for this major. Courses designated as critical (!) may have a deadline for completion and/or affect time to graduation. Please see the Program Notes section for details regarding “critical courses” for this particular Program of Study. Credit Min. Major ! Course Subject and Title Hours Grade1 GPA2 Code Prerequisites Notes Semester One (17 Credit Hours) ENGL 101 Critical Reading and Composition 3 C CC-CMW ! MATH 141 Calculus 13 4 C CC-ARP C or better in MATH 112/115/116 or Math placement test score CHEM 111 & CHEM 111L – General Chemistry I 4 C CC-SCI C or better in MATH 111/115/122/141 or higher math or Math placement test AESP 101 Intro. to Aerospace Engineering 3 * PR Carolina Core AIU4 3 CC-AIU Semester Two (18 Credit Hours) ENGL 102 Rhetoric and Composition 3 CC-CMW C or better in ENGL 101 CC-INF ! MATH 142 Calculus II 4 C CC-ARP C or better in MATH 141 CHEM 112 & CHEM 112L – General Chemistry II 4 PR C or better in CHEM 111, MATH 111/115/122/141 or higher math ! PHYS 211 & PHYS 211L – Essentials of Physics I 4 C CC-SCI C or better in MATH 141 EMCH 111 Intro. to Computer-Aided Design 3 * PR Semester Three (15 Credit Hours) ! EMCH 200 Statics 3 C * PR C or better in MATH 141 ! EMCH 201 Intro. -

Moving Towards a New Urban Systems Science

Ecosystems DOI: 10.1007/s10021-016-0053-4 © 2016 Springer Science+Business Media New York 20TH ANNIVERSARY PAPER Moving Towards a New Urban Systems Science Peter M. Groffman,1* Mary L. Cadenasso,2 Jeannine Cavender-Bares,3 Daniel L. Childers,4 Nancy B. Grimm,5 J. Morgan Grove,6 Sarah E. Hobbie,3 Lucy R. Hutyra,7 G. Darrel Jenerette,8 Timon McPhearson,9 Diane E. Pataki,10 Steward T. A. Pickett,11 Richard V. Pouyat,12 Emma Rosi-Marshall,11 and Benjamin L. Ruddell13 1Department of Earth and Environmental Sciences, City University of New York Advanced Science Research Center and Brooklyn College, New York, New York 10031, USA; 2Department of Plant Sciences, University of California, Davis, California 95616, USA; 3Department of Ecology, Evolution and Behavior, University of Minnesota, Saint Paul, Minnesota 55108, USA; 4School of Sustainability, Arizona State University, Tempe, Arizona 85287, USA; 5School of Life Sciences and Global Institute of Sustainability, Arizona State University, Tempe, Arizona 85287, USA; 6USDA Forest Service, Baltimore Field Station, Baltimore, Maryland 21228, USA; 7Department of Earth and Environment, Boston University, Boston, Massachusetts 02215, USA; 8Department of Botany and Plant Sciences, University of California Riverside, Riverside, California 92521, USA; 9Urban Ecology Lab, Environmental Studies Program, The New School, New York, New York 10003, USA; 10Department of Biology, University of Utah, Salt Lake City, Utah 84112, USA; 11Cary Institute of Ecosystem Studies, Millbrook, New York 12545, USA; 12USDA Forest Service, Research and Development, District of Columbia, Washington 20502, USA; 13School of Informatics, Computing, and Cyber Systems, Northern Arizona University, Flagstaff, Arizona 86001, USA ABSTRACT Research on urban ecosystems rapidly expanded in the 1990s and is now a central topic in ecosystem science. [...] linchpins in the further development of sustainability science and argue that there is a strong need -

Warren Mcculloch and the British Cyberneticians

Warren McCulloch and the British cyberneticians Article (Accepted Version) Husbands, Phil and Holland, Owen (2012) Warren McCulloch and the British cyberneticians. Interdisciplinary Science Reviews, 37 (3). pp. 237-253. ISSN 0308-0188 This version is available from Sussex Research Online: http://sro.sussex.ac.uk/id/eprint/43089/ This document is made available in accordance with publisher policies and may differ from the published version or from the version of record. If you wish to cite this item you are advised to consult the publisher’s version. Please see the URL above for details on accessing the published version. Copyright and reuse: Sussex Research Online is a digital repository of the research output of the University. Copyright and all moral rights to the version of the paper presented here belong to the individual author(s) and/or other copyright owners. To the extent reasonable and practicable, the material made available in SRO has been checked for eligibility before being made available. Copies of full text items generally can be reproduced, displayed or performed and given to third parties in any format or medium for personal research or study, educational, or not-for-profit purposes without prior permission or charge, provided that the authors, title and full bibliographic details are credited, a hyperlink and/or URL is given for the original metadata page and the content is not changed in any way. http://sro.sussex.ac.uk Warren McCulloch and the British Cyberneticians1 Phil Husbands and Owen Holland Dept. Informatics, University of Sussex Abstract Warren McCulloch was a significant influence on a number of British cyberneticians, as some British pioneers in this area were on him. -

Systems Engineering Essentials (In Aerospace)

Systems Engineering Essentials (in Aerospace) March 2, 1998 Matt Sexstone Aerospace Engineer NASA Langley Research Center currently a graduate student at the University of Virginia NASA Intercenter Systems Analysis Team Executive Summary • “Boeing wants Systems Engineers . .” • Systems Engineering (SE) is not new and integrates all of the issues in engineering • SE involves a life-cycle balanced perspective to engineering design and problem solving • An SE approach is especially useful when there is no single “correct” answer • ALL successful project team leaders and management employ SE concepts NASA Intercenter Systems Analysis Team Overview • My background • Review: definition of a system • Systems Engineering – What is it? – What isn’t it? – Why implement it? • Ten essentials in Systems Engineering • Boeing wants Systems Engineers. WHY? • Summary and Conclusions NASA Intercenter Systems Analysis Team My Background • BS Aerospace Engineering, Virginia Tech, 1990 • ME Mechanical & Aerospace Engineering, Manufacturing Systems Engineering, University of Virginia, 1997 • NASA B737 High-Lift Flight Experiment • NASA Intercenter Systems Analysis Team – Conceptual Design and Mission Analysis – Technology and Systems Analysis • I am not the “Swami” of Systems Engineering NASA Intercenter Systems Analysis Team Review: Definition of System • “A set of elements so interconnected as to aid in driving toward a defined goal.” (Gibson) • Generalized elements: – Environment – Sub-systems with related functions or processes – Inputs and outputs • Large-scale systems – Typically include a policy component (“beyond Pareto”) – Are high order (large number of sub-systems) – Usually complex and possibly unique NASA Intercenter Systems Analysis Team Systems Engineering is . 
• An interdisciplinary collaborative approach to derive, evolve, and verify a life-cycle balanced system solution that satisfies customer expectations and meets public acceptability (IEEE-STD-1220, 1994) • the absence of stupidity • i.e. -

Systems Theory

1 Systems Theory BRUCE D. FRIEDMAN AND KAREN NEUMAN ALLEN Biopsychosocial assessment and the development of appropriate intervention strategies for a particular client require consideration of the individual in relation to a larger social context. To accomplish this, we use principles and concepts derived from systems theory. Systems theory is a way of elaborating increasingly complex systems across a continuum that encompasses the person-in-environment (Anderson, Carter, & Lowe, 1999). Systems theory also enables us to understand the components and dynamics of client systems in order to interpret problems and develop balanced intervention strategies, with the goal of enhancing the “goodness of fit” between individuals and their environments. Systems theory does not specify particular theoretical frameworks for understanding problems, and it does not direct the social worker to specific intervention strategies. [...] nature of the clinical enterprise, others have challenged the suitability of systems theory as an organizing framework for clinical practice (Fook, Ryan, & Hawkins, 1997; Wakefield, 1996a, 1996b). The term system emerged from Émile Durkheim’s early study of social systems (Robbins, Chatterjee, & Canda, 2006), as well as from the work of Talcott Parsons. However, within social work, systems thinking has been more heavily influenced by the work of the biologist Ludwig von Bertalanffy and later adaptations by the social psychologist Uri Bronfenbrenner, who examined human biological systems within an ecological environment. With its roots in von Bertalanffy’s systems theory and Bronfenbrenner’s ecological environment, the ecosystems perspective provides a framework that permits users to draw on theories from different dis-

1 Umpleby Stuart Reconsidering Cybernetics

UMPLEBY STUART RECONSIDERING CYBERNETICS The field of cybernetics attracted great attention in the 1950s and 1960s with its prediction of a Second Industrial Revolution due to computer technology. In recent years few people in the US have heard of cybernetics (Umpleby, 2015a, see Figures 1 and 2 at the end of Paper). But a wave of recent books suggests that interest in cybernetics is returning (Umpleby and Hughes, 2016, see Figure at the end of Paper). This white paper reviews some basic ideas in cybernetics. I recommend these and other ideas as a resource for better understanding and modeling of social systems, including threat and response dynamics. Some may claim that whatever was useful has already been incorporated in current work generally falling under the complexity label, but that is not the case. Work in cybernetics has continued with notable contributions in recent years. Systems science, complex systems, and cybernetics are three largely independent fields with their own associations, journals and conferences (Umpleby, 2017). Four types of descriptions used in social science After working in social science and systems science for many years I realized that different academic disciplines use different basic elements. Economists use measurable variables such as price, savings, GDP, imports and exports. Psychologists focus on ideas, concepts and attitudes. Sociologists and political scientists focus on groups, organizations, and coalitions. Historians and legal scholars emphasize events and procedures. People trained in different disciplines construct different narratives using these basic elements. One way to reveal more of the variety in a social system is to create at least four descriptions – one each using variables, ideas, groups, and events (See the figures and tables in Medvedeva & Umpleby, 2015). -

The Origins of Aerospace Engineering Degree Courses

Contributed paper The origins of aerospace engineering degree courses E.C.P. Ransom and A.W. Self The authors E.C.P. Ransom and A.W. Self are at Kingston University, London, UK Keywords Higher education, Aerospace engineering Abstract The development of degree courses specifically designed for aerospace engineers is described in relation to the change in needs of the industry since the demonstration of powered flight. The impact of two world wars and political decisions on the way universities have been able to meet the demand for graduates is discussed. Introduction The origins of the aerospace industry go back many centuries. Everyone is familiar with the story of Icarus who, having designed a pair of wings, attempted to fly. He was successful but flew too close to the sun, whereupon the adhesive used as wing fastening melted due to thermal radiation and his flight ended in disaster. At the time this would have been regarded as science fiction, but clearly there was some awareness of aerodynamics (for wing design), adhesives and thermal radiation. In retrospect, it is apparent that before successful man carrying powered flight could be demonstrated, there had been a period of intense study including experimental and theoretical analysis. The Royal Aeronautical Society, formed in 1866, preceded the first flight by some 37 years. As a learned society it encouraged the discovery and exchange of knowledge necessary for successful heavier than air flight. Orville and Wilbur Wright, contrary to popular understanding, were extremely talented research workers as well as competent designers. To improve their -

Two Canadian Case Studies Alex Ryan and Mark Leung

RSD2 Relating Systems Thinking and Design 2013 working paper. www.systemic-design.net Systemic Design: Two Canadian Case Studies Alex Ryan and Mark Leung Always design a thing by considering it in its next larger context – a chair in a room, a room in a house, a house in an environment, an environment in a city plan —Eliel Saarinen1 A systems approach begins when first you see the world through the eyes of another —C. West Churchman2 Design is the future of systems methodology —Russ Ackoff3 Design is about unlocking the possibilities that lie within multiple perspectives. That design is about solving a complex problem with multiple constraints – John Maeda4 Introduction The currently fragmented state of ‘systems + design’ praxis is curious in light of the affinities between the two interdisciplines, as emphasized in the quotations above. To explain why designers and systems thinkers have not been talking to each other, we may look to their differences. Design as evolution of craft has been characterized as “thinking with your hands” and as such is rooted in an epistemology of practice.5 In contrast, the systems movement began with Ludwig von Bertalanffy’s General System Theory, which placed systems thinking above the disciplinary sciences, in order to provide a non-reductionist foundation for the unity of science.6 Whereas the designer learns by doing in concrete situations, the systems thinker’s knowledge accrues by abstracting away from the particular details of any specific instance of practice. But if this genealogy is sufficient to account for the lack of dialogue between and synthesis of systems + design, then the two interdisciplines are on a collision course. -

The Applications of Systems Science

The Applications of Systems Science Understanding How the World Works and How You Work In It Motivating Question • What does it mean to UNDERSTAND? – Feeling of understanding – listening to a lecture or reading a book – Using understanding – solving a problem or creating an artifact – Understanding processes – predicting an outcome Understanding • Various categories of what we call knowledge – Knowing What*: facts, explicit, episodic knowledge • Conscious remembering – Knowing How: skills, tacit knowledge • Performance of tasks, reasoning – Knowing That: concepts, relational knowledge • Connecting different concepts – Knowing Why: contexts, understanding • Modeling and explaining • Thinking is the dynamics of the interactions between these various categories • Competencies based on strength of cognitive capabilities * Includes knowing when and where The System of Knowledge and Knowing An example of a “Concept Map” Knowing Why contexts modeling understanding explaining Thinking remembering connecting performing Knowing That concepts relational knowledge Knowing What Knowing How facts skills explicit knowledge tacit knowledge Perceiving, Learning, & Memory recognizing encoding linking recalling Cognitive Capabilities Thinking • Thinking capabilities depend on general cognition models – Critical thinking • Skepticism, recognizing biases, evidence-based, curiosity, reasoning – Scientific thinking • Critical thinking + testing hypotheses, analyzing evidence, formal modeling – Systems thinking • Scientific thinking + holistic conceptualization, -

What Is Systems Theory?

What is Systems Theory? Systems theory is an interdisciplinary theory about the nature of complex systems in nature, society, and science, and is a framework by which one can investigate and/or describe any group of objects that work together to produce some result. This could be a single organism, any organization or society, or any electro-mechanical or informational artifact. As a technical and general academic area of study it predominantly refers to the science of systems that resulted from Bertalanffy's General System Theory (GST), among others, in initiating what became a project of systems research and practice. Systems theoretical approaches were later appropriated in other fields, such as in the structural functionalist sociology of Talcott Parsons and Niklas Luhmann. Contents - 1 Overview - 2 History - 3 Developments in system theories - 3.1 General systems research and systems inquiry - 3.2 Cybernetics - 3.3 Complex adaptive systems - 4 Applications of system theories - 4.1 Living systems theory - 4.2 Organizational theory - 4.3 Software and computing - 4.4 Sociology and Sociocybernetics - 4.5 System dynamics - 4.6 Systems engineering - 4.7 Systems psychology - 5 See also - 6 References - 7 Further reading - 8 External links - 9 Organisations Overview Margaret Mead was an influential figure in systems theory. Contemporary ideas from systems theory have grown with diversified areas, exemplified by the work of Béla H. Bánáthy, ecological systems with Howard T. Odum, Eugene Odum and Fritjof Capra, organizational theory and management with individuals such as Peter Senge, interdisciplinary study with areas like Human Resource Development from the work of Richard A. -

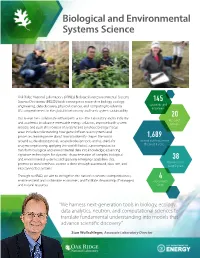

Biological and Environmental Systems Science

Biological and Environmental Systems Science Oak Ridge National Laboratory’s (ORNL’s) Biological and Environmental Systems Science Directorate (BESSD) leads convergence research in biology, ecology, engineering, data discovery, physical sciences, and computing to advance US competitiveness in the global bioeconomy and Earth system sustainability. Our researchers collaborate with experts across the Laboratory and in industry and academia to advance renewable energy solutions, improve Earth system models, and push the frontiers of systems and synthetic biology. Focus areas include understanding how genes influence ecosystem-level processes; learning more about how biodiversity shapes the world around us; developing novel, secure biodesign tools and testbeds for enzyme engineering; applying the world’s fastest supercomputers to transform biological and environmental data into knowledge; advancing signature technologies for dynamic characterization of complex biological and environmental systems; and applying emerging capabilities that promise to transform how science is done through automated, data-rich, and interconnected systems. Through our R&D, we aim to strengthen the nation’s economic competitiveness, enable resilient and sustainable economies, and facilitate stewardship of managed and natural resources. Key figures: 145 scientists and engineers; 20 research groups; 1,689 journal publications in the past 5 years; 38 patents in the past 5 years; 4 Governor’s Chairs. “We harness next-generation tools in biology, ecology, data analytics, neutron, and computational sciences to translate fundamental understanding into models that advance scientific discovery.” Stan Wullschleger, Associate Laboratory Director Our Research Environmental Sciences: Our researchers expand scientific knowledge and develop innovative strategies and technologies that help sustain Earth’s natural resources.
Biosciences: Our scientists advance knowledge discovery and develop technology to characterize and engineer complex biological systems that benefit the environment and our bioeconomy. -

Authenticity, Interdisciplinarity, and Mentoring for STEM Learning Environments

International Journal of Education in Mathematics, Science and Technology Volume 4, Number 1, 2016 DOI:10.18404/ijemst.78411 Lessons Learned: Authenticity, Interdisciplinarity, and Mentoring for STEM Learning Environments Mehmet C. Ayar, Bugrahan Yalvac Article Info Article History Received: 11 July 2015 Accepted: 11 October 2015 Keywords STEM education, STEM learning, Learning environment, School science, Science communities Abstract In this paper, we discuss the individuals’ roles, responsibilities, and routine activities, along with their goals and intentions in two different contexts (a school science context and a university research context) using sociological lenses. We highlight the distinct characteristics of both contexts to suggest new design strategies for STEM learning environments in school science context. We collected our research data through participant observations, field notes, group conversations, and interviews. Our findings indicate that school science practices were limited to memorizing and replicating science content knowledge through lectures and laboratory activities. Simple-structured science activities were a means to engage school science students in practical work and relate the theoretical concepts to such work. Their routine activities were to succeed in schooling objectives. In university research settings, the routine activities had interdisciplinary dimensions representing cognitive, social, and material dimensions of scientific practice. Such routine activities were missing in the practices of school science. We found that the differences between school and university research settings were primarily associated with individuals’ goals and intentions, which resulted in different social structures. In school settings, more authentic social structure can evolve if teachers trust their students and allow them to share the social and epistemic authorities through establishing mentorship.