Advanced Topics in Information Retrieval / Mining & Organization 2 Outline

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

L'équipe Des Scénaristes De Lost Comme Un Auteur Pluriel Ou Quelques Propositions Méthodologiques Pour Analyser L'auctorialité Des Séries Télévisées

Lost in serial television authorship : l’équipe des scénaristes de Lost comme un auteur pluriel ou quelques propositions méthodologiques pour analyser l’auctorialité des séries télévisées. Quentin Fischer. To cite this version: Quentin Fischer. Lost in serial television authorship : l’équipe des scénaristes de Lost comme un auteur pluriel ou quelques propositions méthodologiques pour analyser l’auctorialité des séries télévisées. Sciences de l’Homme et Société. 2017. dumas-02368575. HAL Id: dumas-02368575 https://dumas.ccsd.cnrs.fr/dumas-02368575 Submitted on 18 Nov 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution - NonCommercial - NoDerivatives 4.0 International License. UNIVERSITÉ RENNES 2, Master Recherche ELECTRA – CELLAM. Research thesis. Discipline: Comparative Literature. Presented and defended by Quentin FISCHER in September 2017. Research supervisors: Jean Cléder and Charline Pluvinet. "Creating a series means first imagining its story, getting together with writers, putting it down on paper. Then agreeing to let go, to let it live a second life. -

Bad Cops: a Study of Career-Ending Misconduct Among New York City Police Officers

The author(s) shown below used Federal funds provided by the U.S. Department of Justice and prepared the following final report: Document Title: Bad Cops: A Study of Career-Ending Misconduct Among New York City Police Officers. Author(s): James J. Fyfe; Robert Kane. Document No.: 215795. Date Received: September 2006. Award Number: 96-IJ-CX-0053. This report has not been published by the U.S. Department of Justice. To provide better customer service, NCJRS has made this Federally-funded grant final report available electronically in addition to traditional paper copies. Opinions or points of view expressed are those of the author(s) and do not necessarily reflect the official position or policies of the U.S. Department of Justice. This document is a research report submitted to the U.S. Department of Justice. Bad Cops: A Study of Career-Ending Misconduct Among New York City Police Officers. James J. Fyfe, John Jay College of Criminal Justice and New York City Police Department; Robert Kane, American University. Final Version Submitted to the United States Department of Justice, National Institute of Justice, February 2005. This project was supported by Grant No. 1996-IJ-CX-0053 awarded by the National Institute of Justice, Office of Justice Programs, U.S. Department of Justice. Points of view in this document are those of the authors and do not necessarily represent the official position or policies of the U.S. -

Tom Clancy’s “Patriot Games,” Released in 1992

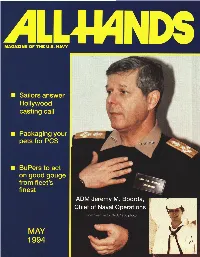

Packaging your ADM Jeremy .. Sa, Chief of Naval Operations From Seaman to CNO, 1956 photo. Any day in the Navy. May 18, 1994, is just like any other day in the Navy, but we want you to photograph it. Both amateur and professional civilian and military photographers are asked to record what's happening on their ship or installation on Wednesday, May 18, 1994, for a special photo feature to appear in the October edition of All Hands magazine. We need photos that tell a story and capture the faces of sailors, Marines, their families and naval employees. We're looking for imagination and creativity - posed shots will be screened out. Shoot what is unique to your ship or installation, something you may see every day but others may never get the opportunity to experience. We're looking for the best photos from the field, for a worldwide representation of what makes the Navy what it is. Be creative. Use different lenses - wide angle and telephoto - to give an ordinary photo a fresh look. Shoot from different angles and don't be afraid to bend those knees. Experiment with silhouettes and time-exposed shots. Accept the challenge! Photos must be shot in the 24-hour period of May ... formation. This includes full name, rank and duty station of the photographer; the names and hometowns of identifiable people in the photos; details on what's happening in the photo; and where the photo was taken. Captions must be attached individually to each photo or slide. Photos must be processed and received by All Hands by June 18, 1994. Photos will not be returned. Our mailing address is: Naval Media Center, Publishing Division, ATTN: All Hands, Naval Station Anacostia, Bldg. -

György Rákosi: Beyond Identity: the Case of a Complex Hungarian

BEYOND IDENTITY: THE CASE OF A COMPLEX HUNGARIAN REFLEXIVE György Rákosi University of Debrecen Proceedings of the LFG09 Conference Miriam Butt and Tracy Holloway King (Editors) 2009 CSLI Publications http://csli-publications.stanford.edu/ Abstract It is a well-known typological universal that long distance reflexives are generally monomorphemic and complex reflexives tend to be licensed only locally. I argue in this paper that the Hungarian body part reflexive maga ‘himself’ and its more complex counterpart önmaga ‘himself, his own self’ represent a non-isolated pattern that adds a new dimension to this typology. Nominal modification of a highly grammaticalized body part reflexive may reactivate the dormant underlying possessive structure, thereby granting the more complex reflexive variant an increased level of referentiality and syntactic freedom. In particular, the reactivation of the possessive structure in önmaga is shown to be concomitant with the possibility of referring to representations of the self, as well as a preference for what appears to be coreferential readings and the loss or dispreference of bound-variable readings. 1. Introduction According to an established typology, complex reflexives are expected to be local and relatively well-behaved from a binding theoretical perspective, whereas long distance reflexives tend to be monomorphemic (see Faltz 1985, Pica 1987 and subsequent work, as well as Dalrymple 1993 and Bresnan 2001 in the LFG literature). Polymorphemic reflexives, however, are not uniform as they may show different types of morphological complexity. In particular, body part reflexives, which owe their complexity to their historical origin as possessive structures, are often grammatical outside of the local domain in which their antecedent is located. -

The Presidents of the United States of America II Mp3, Flac, Wma

Genre: Rock. Album: II. Country: Europe. Released: 1996. Style: Alternative Rock, Pop Rock. MP3 version RAR size: 1993 mb. FLAC version RAR size: 1961 mb. WMA version RAR size: 1792 mb. Rating: 4.1. Votes: 157. Other Formats: RA APE MMF MP2 MP3 DXD AAC

Tracklist

| No. | Title | Length |
|---|---|---|
| 1 | Ladies And Gentlemen Part I | 1:37 |
| 2 | Lunatic To Love | 2:57 |
| 3 | Volcano | 2:58 |
| 4 | Mach 5 | 3:16 |
| 5 | Twig | 2:37 |
| 6 | Bug City | 3:05 |
| 7 | Bath Of Fire | 3:02 |
| 8 | Japan | 2:34 |
| 9 | Tiki God | 2:53 |
| 10 | L.I.P. | 3:20 |
| 11 | Froggie (Guitar – Mark Sandman) | 3:10 |
| 12 | Toob Amplifier | 1:21 |
| 13 | Supermodel | 2:49 |
| 14 | Tremelo Blooz | 2:51 |
| 15 | Mach 5 (Live) | 3:40 |
| 16 | Tiki Lounge God | 3:11 |
| 17 | Back Porch (Live) | 3:34 |
| 18 | Puffy Little Shoes | 5:00 |
| 19 | Ladies And Gentlemen Part II | 3:12 |

Credits: Co-producer – Craig Montgomery. Engineer – Craig Montgomery. Mastered By – Wally Traugott. Mixed By – Jerry Finn.

Notes: Recorded at Bad Animals and Studio Lithio, Seattle. Japanese Edition contains 5 bonus tracks. These are the numbers: 8, 14-17

Other versions

| Category | Artist | Title (Format) | Label | Category | Country | Year |
|---|---|---|---|---|---|---|
| C 67577 | The Presidents Of The United States Of America | II (LP, Album) | Columbia | C 67577 | US | 1996 |
| 485092 9 | The Presidents Of The United States Of America | II (CD, Album + CD + Ltd) | Columbia | 485092 9 | Australia | 1996 |
| CT 67577 | The Presidents Of The United States Of America | II (Cass, Album) | Columbia | CT 67577 | US | 1996 |
| 485092.4 | The Presidents Of The United States Of America | II (Cass, Album) | Columbia | 485092.4 | Malaysia | 1996 |
| CT 67577 | The Presidents Of The United States Of America | II (Cass, Album) | Columbia | CT 67577 | Canada | 1996 |

Related Music albums to II by The Presidents Of The United States Of America 1. -

Love and the Bible Setting the Stage for the Search

Love and the Bible Robin Calamaio - Copyright 2003 - Edit 2019 freelygive-n.com If you think love is an important topic to Christianity, you owe it to yourself, and the world around you, to learn God's teaching on this subject. It is amazing how confused the teaching is on this vital topic. You are about to embark on a fascinating study, and like all correct Bible understandings, it is tremendously liberating. Love. This seems like an important subject in Christianity. One could even contend it is a core element of the Christian faith. After all, the first commandment is the requirement to love God with all of one's heart, mind, soul and strength (Mk 12:30). The second commandment requires us to love our neighbor as our self (Mk 12:31). This “love” requirement covers all our vital relationships - toward self, neighbor and Creator! But the centrality of this “love doctrine” extends even further. The Bible states that love fulfills the Law (Ro 13:8-10 and Gal 5:14). So, all the legal requirements of the Law of God are fulfilled by love? It is evidently made up of something that has the ability to satisfy even the requirements of the entire Old Testament theocracy. This is worth thought, investigation and inquiry. So, ... what is love? If we are to fulfill these commands, we need some kind of definition. How else can we know if we are meeting His requirements? Before proceeding, I want you to write down your definition of “love.” Nobody will ever know what you write down unless you decide to share it. -

Lost Season 6 Episode 6 Online

Lost season 6 episode 6 online. «Lost» – Season 6, Episode 6 watch in HD quality with subtitles in different languages for free and without registration! Lost - Season 6: The survivors must deal with two outcomes of the detonation of a Scroll down and click. Watch Lost Season 6 Episode 6 - Sayid is faced with a difficult decision, and Claire sends a warning to the. Watch Lost Season 6 Episode 6 online via TV Fanatic with over 5 options to watch the Lost S6E6 full episode. Affiliates with free and. Watch Lost - Season 6 in HD quality online for free, putlocker Lost - Season 6. Watch Lost - Season 6, Episode 6 - Sundown: Sayid faces a difficult decision, and. free lost season 6 episode 6 watch online Download Link www.doorway.ru? keyword=free-lost-seasonepisodewatch-online&charset=utf Watch Lost Season 6 Online. The survivors of a plane crash are Watch The latest Lost Season 6 Video: Episode What They Died For · 35 Links, 18 May. Lost - Season 6. Home > Lost - Season 6 > Episode. Episode May 24, Episode May 24, Episode May 24, Episode May www.doorway.ru Watch Lost Season 6 Episode 6 "Sundown" and Season 6 Full Online! "Lost": After the detonation of the nuclear bomb at the end of the previous season, two outcomes occur. In one of the “realities” the plane of. Watch Lost - Season 6, Episode 6 - Sundown: Sayid faces a difficult decision, and Claire sends a Watch Online Watch Full Episodes: Lost. Watch Lost in oz season 1 Episode 6 online full episodes streaming. -

The Construction of Mother Archetypes in Five Novels by Doris Lessing

WARNING. Access to the contents of this doctoral thesis is subject to acceptance of the conditions of use set by the following Creative Commons license: https://creativecommons.org/licenses/?lang=en (Catalan notice: http://cat.creativecommons.org/?page_id=184; Spanish notice: http://es.creativecommons.org/blog/licencias/). Ph.D. Thesis. Closing Circles: The Construction of Mother Archetypes in Five Novels by Doris Lessing. Anna Casablancas i Cervantes. Thesis supervisor: Dr. Andrew Monnickendam. Programa de doctorat en Filologia Anglesa. Departament de Filologia Anglesa i Germanística. Facultat de Filosofia i Lletres. Universitat Autònoma de Barcelona. 2016. To my parents, who deserve to see themselves recognized in all my successes for their example of effort and sacrifice, and for always knowing that I would make it. To my children, Júlia and Bernat, who are the motivation, the strength and the joy in each of the projects I dare to undertake. ACKNOWLEDGEMENTS I would like to thank my thesis supervisor, Dr. Andrew Monnickendam, for his continuous support and guidance throughout my Ph.D. study. His wise advice and encouragement made it possible to finally complete this thesis. My sincere thanks also go to Sara Granja, administrative assistant for the Doctorate programme at the Departament de Filologia Anglesa i Germanística, for her professionalism and efficiency whenever I got lost among the bureaucracy. But the person who unquestionably deserves my deepest gratitude is, for countless reasons, Dr. -

Massachusetts Trial Court Officer Academy Recruit Manual

MASSACHUSETTS TRIAL COURT OFFICER ACADEMY RECRUIT MANUAL, WESTOVER AIR FORCE BASE, August 2020. Table of Contents: FUNDAMENTAL TRAINING VALUES; MASSACHUSETTS TRIAL COURT ACADEMY HONOR CODE; MASSACHUSETTS TRIAL COURT CUSTOMS AND COURTESIES; MASSACHUSETTS TRIAL COURT ACADEMY MISSION STATEMENT; TRAINING PHILOSOPHY; OATH OF OFFICE; MASSACHUSETTS TRIAL COURT MISSION STATEMENT; MASSACHUSETTS TRIAL COURT SECURITY DIVISION MISSION STATEMENT; OUTSIDE CONTACT: Visitation, Mail Call, Telephone Use -

How the Detective Fiction of P.D. James Provokes

THEOLOGY IN SUSPENSE: HOW THE DETECTIVE FICTION OF P.D. JAMES PROVOKES THEOLOGICAL THOUGHT. Jo Ann Sharkey. A Thesis Submitted for the Degree of MPhil at the University of St. Andrews, 2011. Full metadata for this item is available in Research@StAndrews:FullText at: http://research-repository.st-andrews.ac.uk/ Please use this identifier to cite or link to this item: http://hdl.handle.net/10023/3156 This item is protected by original copyright. This item is licensed under a Creative Commons License. THE UNIVERSITY OF ST. ANDREWS, ST. MARY’S COLLEGE. THEOLOGY IN SUSPENSE: HOW THE DETECTIVE FICTION OF P.D. JAMES PROVOKES THEOLOGICAL THOUGHT. A DISSERTATION SUBMITTED TO THE FACULTY OF DIVINITY, INSTITUTE FOR THEOLOGY, IMAGINATION, AND THE ARTS, IN CANDIDACY FOR THE DEGREE OF MASTER OF PHILOSOPHY, BY JO ANN SHARKEY. ST. ANDREWS, SCOTLAND, 15 APRIL 2010. Copyright © 2010 by Jo Ann Sharkey. All Rights Reserved. ABSTRACT The following dissertation argues that the detective fiction of P.D. James provokes her readers to think theologically. I present evidence from the body of James’s work, including her detective fiction that features the Detective Adam Dalgliesh, as well as her other novels, autobiography, and non-fiction work. I also present a brief history of detective fiction. This history provides the reader with a better understanding of how P.D. James is influenced by the detective genre as well as how she stands apart from the genre’s traditions. This dissertation relies on an interview that I conducted with P.D. James in November, 2008. During the interview, I asked James how Christianity has influenced her detective fiction, and her responses greatly contribute to this dissertation. -

Jack's Costume from the Episode, "There's No Place Like H

572: Jack's costume from "There's No Place Like Home". Jack's costume from the episode, "There's No Place Like H... (200 - 300; 850)

573: Jack's suit from "There's No Place Like Home, Part 1". Jack's suit from the episode, "There's No Place Like Home... (200 - 300; 950)

574: Jack's costume from the episode, "Eggtown". Jack's costume from the episode, "Eggtown." Jack's bl... (200 - 300; 800)

575: Jack's Season Four costume. Jack's Season Four costume. Jack's gray pants, stripe... (200 - 300; 850)

576: Jack's Season Four doctor's costume. Jack's Season Four doctor's costume. Jack's white lab... (200 - 300; 1,400)

577: Jack's Season Four DHARMA scrubs. Jack's Season Four DHARMA scrubs. Jack's DHARMA scrub... (200 - 300; 1,300)

578: Kate's costume from "There's No Place Like Home". Kate's costume from the episode, "There's No Place Like H... (200 - 300; 1,100)

579: Kate's costume from "There's No Place Like Home". Kate's costume from the episode, "There's No Place Like H... (200 - 300; 900)

580: Kate's black dress from "There's No Place Like Home". Kate's black dress from the episode, "There's No Place Li... (200 - 300; 950)

581: Kate's Season Four costume. Kate's Season Four costume. Kate's dark gray pants, d... (200 - 300; 950)

582: Kate's prison jumpsuit from the episode, "Eggtown". Kate's prison jumpsuit from the episode, "Eggtown." K... (200 - 300; 900)

583: Kate's costume from the episode, "The Economist. Kate's costume from the episode, "The Economist." Kat.. (200 - ; 5,000) -

Nouveautés - Mai 2019

New arrivals - May 2019. Adult animation. Ile aux chiens (L') (Isle of Dogs). Fiction / Adult animation. Running time: 102 min. Germany - United States / 2018. Screenplay: Wes Anderson. Based on: an original story by Wes Anderson, Roman Coppola, Jason Schwartzman and Kunichi Nomura. Directed by: Wes Anderson. Producers: Wes Anderson, Jeremy Dawson, Scott Rudin. Director of photography: Tristan Oliver. Production designers: Adam Stockhausen, Paul Harrod. Composer: Alexandre Desplat. Languages: French, English. Original languages: English, Japanese. Subtitles: French. Screen: 16/9. Sound: Dolby Digital 5.1. Awards: Jury Prize at the Festival 2 cinéma de Valenciennes, France, 2018; Silver Bear for Best Director at the Berlinale, Germany, 2018. Format: DVD. Summary: Because of an outbreak of canine flu, the mayor of Megasaki orders all the city's dogs into quarantine on an island, which then becomes the Isle of Dogs. Twelve-year-old Atari steals a plane and flies to the island to look for his faithful companion, Spots. Helped by a pack of five intrepid and endearing dogs, he uncovers a conspiracy that threatens the city. Press reviews: "Indeed, this virtuoso stop-motion animated film (...) reflects the director's usual dazzling fastidiousness, but is fleshed out all at once with a poignant picaresque epic, a political broadside, and an antispeciesist manifesto in which the dogs take the lion's share." Libération - La Rédaction. "A tale whose aesthetic splendor and abundance are matched only by its political ferocity." CinemaTeaser - Aurélien Allin. "By its subject, 'Isle of Dogs' promises to be a pocket classic - like a 'bonsai' version of Anderson's art -, a dizzying feat of cinema in miniature, patiently carved.