The Future of Air Force Motion Imagery Exploitation

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

A Producer's Handbook

DEVELOPMENT AND OTHER CHALLENGES A PRODUCER’S HANDBOOK by Kathy Avrich-Johnson Edited by Daphne Park Rehdner Summer 2002 Introduction and Disclaimer This handbook addresses business issues and considerations related to certain aspects of the production process, namely development and the acquisition of rights, producer relationships and low budget production. There is no neat title that encompasses these topics but what ties them together is that they are all areas that present particular challenges to emerging producers. In the course of researching this book, the issues that came up repeatedly are those that arise at the earlier stages of the production process or at the earlier stages of the producer’s career. If not properly addressed these will be certain to bite you in the end. There is more discussion of various considerations than in Canadian Production Finance: A Producer’s Handbook due to the nature of the topics. I have sought not to replicate any of the material covered in that book. What I have sought to provide is practical guidance through some tricky territory. There are often as many different agreements and approaches to many of the topics discussed as there are producers and no two productions are the same. The content of this handbook is designed for informational purposes only. It is by no means a comprehensive statement of available options, information, resources or alternatives related to Canadian development and production. The content does not purport to provide legal or accounting advice and must not be construed as doing so. The information contained in this handbook is not intended to substitute for informed, specific professional advice. -

Freeze Frame by Lydia Rypcinski 8 Victoria Tahmizian Bowling and Other [email protected] Fun at 45 Below Zero

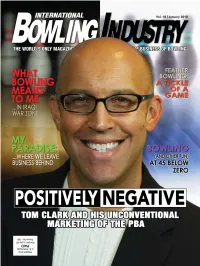

THE WORLD'S ONLY MAGAZINE DEVOTED EXCLUSIVELY TO THE BUSINESS OF BOWLING CONTENTS VOL 18.1 PUBLISHER & EDITOR Scott Frager [email protected] Skype: scottfrager 6 20 MANAGING EDITOR THE ISSUE AT HAND COVER STORY Fred Groh More than business Positively negative [email protected] Take a close look. You want out-of-the-box OFFICE MANAGER This is a brand new IBI. marketing? You want Tom Patty Heath By Scott Frager Clark. How his tactics at [email protected] PBA are changing the CONTRIBUTORS way the media, the public 8 Gregory Keer and the players look Lydia Rypcinski COMPASS POINTS at bowling. ADMINISTRATIVE ASSISTANT Freeze frame By Lydia Rypcinski 8 Victoria Tahmizian Bowling and other [email protected] fun at 45 below zero. By Gregory Keer 28 ART DIRECTION & PRODUCTION THE LIGHTER SIDE Designworks www.dzynwrx.com A feather in your 13 (818) 735-9424 cap–er, lane PORTFOLIO Feather bowling’s the FOUNDER Allen Crown (1933-2002) What was your first game where the balls job, Cathy DeSocio? aren’t really balls, there are no bowling shoes, 13245 Riverside Dr., Suite 501 Sherman Oaks, CA 91423 and the lanes aren’t even 13 (818) 789-2695(BOWL) flat. But people come What was your first Fax (818) 789-2812 from miles around, pay $40 job, John LaSpina? [email protected] an hour, and book weeks in advance. www.BowlingIndustry.com 14 HOTLINE: 888-424-2695 What Bowling 32 Means to Me THE GRAPEVINE SUBSCRIPTION RATES: One copy of Two bowling International Bowling Industry is sent free to A tattoo league? every bowling center, independently owned buddies who built a lane 20 Go ahead and laugh but pro shop and collegiate bowling center in of their own when their the U.S., and every military bowling center it’s a nice chunk of and pro shop worldwide. -

AIR POWER History / WINTER 2015 from the Editor

WINTER 2015 - Volume 62, Number 4 WWW.AFHISTORICALFOUNDATION.ORG The Air Force Historical Foundation Founded on May 27, 1953 by Gen Carl A. “Tooey” Spaatz MEMBERSHIP BENEFITS and other air power pioneers, the Air Force Historical All members receive our exciting and informative Foundation (AFHF) is a nonprofi t tax exempt organization. Air Power History Journal, either electronically or It is dedicated to the preservation, perpetuation and on paper, covering: all aspects of aerospace history appropriate publication of the history and traditions of American aviation, with emphasis on the U.S. Air Force, its • Chronicles the great campaigns and predecessor organizations, and the men and women whose the great leaders lives and dreams were devoted to fl ight. The Foundation • Eyewitness accounts and historical articles serves all components of the United States Air Force— Active, Reserve and Air National Guard. • In depth resources to museums and activities, to keep members connected to the latest and AFHF strives to make available to the public and greatest events. today’s government planners and decision makers information that is relevant and informative about Preserve the legacy, stay connected: all aspects of air and space power. By doing so, the • Membership helps preserve the legacy of current Foundation hopes to assure the nation profi ts from past and future US air force personnel. experiences as it helps keep the U.S. Air Force the most modern and effective military force in the world. • Provides reliable and accurate accounts of historical events. The Foundation’s four primary activities include a quarterly journal Air Power History, a book program, a • Establish connections between generations. -

MARGARET KAMINSKI 2354 Ewing Street, Los Angeles, CA 90039 646.207.8652 • [email protected]

MARGARET KAMINSKI 2354 Ewing Street, Los Angeles, CA 90039 646.207.8652 • [email protected] EXPERIENCE Nickelodeon, Los Angeles, CA 2016 – Present Writer, NiCkelodeon Kids’ ChoiCe Awards (2019) Writer, NiCkelodeon Kids’ ChoiCe Sports Awards (2018) Writer, NiCkelodeon Kids’ ChoiCe Awards (2018) Writers’ Assistant, Nickelodeon Kids’ Choice Awards (2017) Writers’ Assistant, NiCkelodeon Kids’ ChoiCe SPorts Awards (2017) Writers’ Assistant, NiCkelodeon Kids’ ChoiCe SPorts Awards (2016) Above Average Productions, Los Angeles, CA January 2018 – Present Freelance Writer Live From WZRD, Warner Media/VRV, Los Angeles, CA November 2018 – MarCh 2019 Staff Writer Scholastic’s Choices Magazine, New York, New York Video Producer, Story Editor MarCh 2018 – Present Freelance Writer February 2015 – Present Assistant Editor, Managing Editor of Teaching Resources May 2012 – February 2015 SKOOGLE Pilot (All That Reboot), PoCket.WatCh, Los Angeles, CA April 2018 – August 2018 Contributing Writer (punch-up) E! Live from the Red Carpet, Los Angeles, CA August 2017 – November 2018 August 2017 – Present Writer The 2018 PeoPle’s ChoiCe Awards The 2018 Primetime Emmy Awards The 2017 Primetime Emmy Awards The 2017 AmeriCan MusiC Awards The 2018 Golden Globes The 2018 SCreen ACtors Guild Awards The 2018 Grammy Awards MTV Movie + TV Awards Pre-Show, Los Angeles, CA May 2018 – June 2018 Writer PotterCon/Wizard U., National/Touring July 2012 – January 2018 Executive Producer Fallen For You: Pilot, Los Angeles, CA DeCember 2016 Writers’ Assistant Collier.Simon, Los Angeles, CA February 2016 – Present Freelance Writer Masthead Media, New York, NY SePtember 2015 – February 2016 Freelance Writer PMK•BNC (Vowel), New York, NY MarCh 2015 – SePtember 2015 Freelance Writer EDUCATION Barnard College, Columbia University, B.A. -

Rec Center Gets a Makeover Dordt Instructor Challenges Steve King for Congress Concert Choir Tour: Ain't No Sickness Can Hold

Dordt NISO Dordt Changes media concert theatre to DCC truck Piano in ACTF Dreamer page 3 page 3 page 4 page 8 January 31, 2019 Issue 1 Follow us online Concert Choir tour: ain’t no sickness can hold them down Haemi Kim -- Staff Writer could incorporate this beautiful language into our piece. I loved the excitement on people’s During winter break, Dordt College Concert faces in the audience—or fear among the high Choir and its conductor Ryan Smit went on a schoolers—when we did this song and I had tour, sharing their talents to many different people at almost every stop come up to me audiences. It was a week-long tour going afterwards and thank me for it.” around Iowa, Wisconsin, Illinois, and Indiana. Even though the choir tour was a blast to Many of the choir students enjoyed being on many of the choir members, this year, the tour because it was an opportunity to meet other stomach flu spread within the choir, affecting choir members and build relationships with around 14 students, and even the conductor other choir members. during their last concert before coming back. “It’s a unique opportunity to get to know the Because of this, TeBrake and Seaman stepped other choir members outside of the choir room,” up to conduct the last concert, each taking half said senior soprano Kourtney TeBrake. of the performance. “Before tour, you recognize people in the As music education majors, it wasn’t their first choir, maybe know their names, but when you time conducting for a choir. -

Overhead Surveillance

Confrontation or Collaboration? Congress and the Intelligence Community Overhead Surveillance Eric Rosenbach and Aki J. Peritz Overhead Surveillance One of the primary methods the U.S. uses to gather vital national security information is through air- and space-based platforms, collectively known as “overhead surveillance.” This memorandum provides an overview of overhead surveillance systems, the agencies involved in gathering and analyzing overhead surveillance, and the costs and benefits of this form of intelligence collection. What is Overhead Surveillance? “Overhead surveillance” describes a means to gather information about people and places from above the Earth’s surface. These collection systems gather imagery intelligence (IMINT), signals intelligence (SIGINT) and measurement and signature intelligence (MASINT). Today, overhead surveillance includes: • Space-based systems, such as satellites. • Aerial collection platforms that range from large manned aircraft to small unmanned aerial vehicles (UAVs). A Brief History of Overhead Surveillance Intelligence, surveillance and reconnaissance platforms, collectively known as ISR, date back to the 1790s when the French military used observation balloons to oversee battlefields and gain tactical advantage over their adversaries. Almost all WWI and WWII belligerents used aerial surveillance to gain intelligence on enemy lines, fortifications and troop movements. Following WWII, the U.S. further refined airborne and space-based reconnaissance platforms for use against the Soviet Union. Manned reconnaissance missions, however, were risky and could lead to potentially embarrassing outcomes; the 1960 U-2 incident was perhaps the most widely publicized case of the risks associated with this form of airborne surveillance. 
Since the end of the Cold War, overhead surveillance technology has evolved significantly, greatly expanding the amount of information that the policymaker and the warfighter can use to make critical, time-sensitive decisions. -

THE WRITE CHOICE by Spencer Rupert a Thesis Presented to The

THE WRITE CHOICE By Spencer Rupert A thesis presented to the Independent Studies Program of the University of Waterloo in fulfilment of the thesis requirements for the degree Bachelor of Independent Studies (BIS) Waterloo, Canada 2009 1 Table of Contents 1 Abstract...................................................................................................................................................7 2 Summary.................................................................................................................................................8 3 Introduction.............................................................................................................................................9 4 Writing the Story...................................................................................................................................11 4.1 Movies...........................................................................................................................................11 4.1.1 Writing...................................................................................................................................11 4.1.1.1 In the Beginning.............................................................................................................11 4.1.1.2 Structuring the Story......................................................................................................12 4.1.1.3 The Board.......................................................................................................................15 -

Canadian Canada $7 Spring 2020 Vol.22, No.2 Screenwriter Film | Television | Radio | Digital Media

CANADIAN CANADA $7 SPRING 2020 VOL.22, NO.2 SCREENWRITER FILM | TELEVISION | RADIO | DIGITAL MEDIA The Law & Order Issue The Detectives: True Crime Canadian-Style Peter Mitchell on Murdoch’s 200th ep Floyd Kane Delves into class, race & gender in legal PM40011669 drama Diggstown Help Producers Find and Hire You Update your Member Directory profile. It’s easy. Login at www.wgc.ca to get started. Questions? Contact Terry Mark ([email protected]) Member Directory Ad.indd 1 3/6/19 11:25 AM CANADIAN SCREENWRITER The journal of the Writers Guild of Canada Vol. 22 No. 2 Spring 2020 Contents ISSN 1481-6253 Publication Mail Agreement Number 400-11669 Cover Publisher Maureen Parker Diggstown Raises Kane To New Heights 6 Editor Tom Villemaire [email protected] Creator and showrunner Floyd Kane tackles the intersection of class, race, gender and the Canadian legal system as the Director of Communications groundbreaking CBC drama heads into its second season Lana Castleman By Li Robbins Editorial Advisory Board Michael Amo Michael MacLennan Features Susin Nielsen The Detectives: True Crime Canadian-Style 12 Simon Racioppa Rachel Langer With a solid background investigating and writing about true President Dennis Heaton (Pacific) crime, showrunner Petro Duszara and his team tell us why this Councillors series is resonating with viewers and lawmakers alike. Michael Amo (Atlantic) By Matthew Hays Marsha Greene (Central) Alex Levine (Central) Anne-Marie Perrotta (Quebec) Murdoch Mysteries’ Major Milestone 16 Lienne Sawatsky (Central) Andrew Wreggitt (Western) Showrunner Peter Mitchell reflects on the successful marriage Design Studio Ours of writing and crew that has made Murdoch Mysteries an international hit, fuelling 200+ eps. -

Aerial Survellance-Reconnaisance Field Army

<3 # / FM 30-20 DEP/KRTMENT OF THE ARMY FIELD MANUAL SURVEILLANCE NAISSANCE ARMY ^SHINGTONXC K1££ orti HEADQUARTERS, DEPARTMENT DiF THE ARMY APRIL 1969 TAGO 7075A # 9 * *FM 30-20 FIELD MANUAL HEADQUARTERS DEPARTMENT OF THE ARMY No. 30-20 WASHINGTON, D.C., 25 April 1969 AERIAL SURVEILLANCE-RECONNAISSANCE, FIELD ARMY Paragraph Page CHAPTER 1. INTRODUCTION 1-1—1-4 1-1 2. G2 AIR ORGANIZATION AND FUNCTIONS Section I. Organization 2-1 2-1 2-1 II. Functions 2-3, 3-4 2-1 CHAPTER 3. CONCEPT OF AERIAL SURVEILLANCE AND RECONNAISSANCE EMPLOYMENT 3-1—3-6 3-1 4. AERIAL SURVEILLANCE AND RECONNAIS- SANCE MISSIONS Section I. Type missions 4_i 4_g 4-1 II. Collection means 4_7_4_n 4-2 CHAPTER 5. AERIAL SURVEILLANCE AND RECONNAIS- SANCE PLANNING, OPERATIONS, AND COORDINATION Section I. General planning 5-1—5-5 5-1 II. Specific planning 5-6, 5-7 5-4 III. Request prccedures 5-8—5-10 5- 6 IV. Aircraft and sensor capabilities 5-11,5-12 5-10 V. Operational aids 5-13—5-26 5-12 VI. Coordination 5-27—5-30 5-21 CHAPTER 6. COMMUNICATIONS 6-1—6-5 6- 1 7. MILITARY INTELLIGENCE BATTALION, AIR RECONNAISSANCE SUPPORT, FIELD ARMY Section I. Mission, organization, and functions •_ 7-1—7-3 7-1 II. Concept of employment 7_4 7_g 7-3 III. Planning and operations _ 7_9, 7-10 7-5 CHAPTER 8. AVIATION AERIAL SURVEILLANCE COMPANY Section I. Mission, organization, capabilities, and limitations __ g_i g-6 8-1 II. Command, control, and communication g_7 8-10 8-4 III. -

National Reconnaissance Office Review and Redaction Guide

NRO Approved for Release 16 Dec 2010 —Tep-nm.T7ymqtmthitmemf- (u) National Reconnaissance Office Review and Redaction Guide For Automatic Declassification Of 25-Year-Old Information Version 1.0 2008 Edition Approved: Scott F. Large Director DECL ON: 25x1, 20590201 DRV FROM: NRO Classification Guide 6.0, 20 May 2005 NRO Approved for Release 16 Dec 2010 (U) Table of Contents (U) Preface (U) Background 1 (U) General Methodology 2 (U) File Series Exemptions 4 (U) Continued Exemption from Declassification 4 1. (U) Reveal Information that Involves the Application of Intelligence Sources and Methods (25X1) 6 1.1 (U) Document Administration 7 1.2 (U) About the National Reconnaissance Program (NRP) 10 1.2.1 (U) Fact of Satellite Reconnaissance 10 1.2.2 (U) National Reconnaissance Program Information 12 1.2.3 (U) Organizational Relationships 16 1.2.3.1. (U) SAF/SS 16 1.2.3.2. (U) SAF/SP (Program A) 18 1.2.3.3. (U) CIA (Program B) 18 1.2.3.4. (U) Navy (Program C) 19 1.2.3.5. (U) CIA/Air Force (Program D) 19 1.2.3.6. (U) Defense Recon Support Program (DRSP/DSRP) 19 1.3 (U) Satellite Imagery (IMINT) Systems 21 1.3.1 (U) Imagery System Information 21 1.3.2 (U) Non-Operational IMINT Systems 25 1.3.3 (U) Current and Future IMINT Operational Systems 32 1.3.4 (U) Meteorological Forecasting 33 1.3.5 (U) IMINT System Ground Operations 34 1.4 (U) Signals Intelligence (SIGINT) Systems 36 1.4.1 (U) Signals Intelligence System Information 36 1.4.2 (U) Non-Operational SIGINT Systems 38 1.4.3 (U) Current and Future SIGINT Operational Systems 40 1.4.4 (U) SIGINT -

Aerial Reconnaissance (Pennsylvania Military Museum, T

PMM BLOG ARCHIVE December 16, 2020 Aerial Reconnaissance (Pennsylvania Military Museum, T. Gum, Site Admin.) Even though the Korean War had come to a standstill a new form of warfare was being lit off between the United States and the USSR. To remain ahead of the curve, the ability to deliver substantial payloads of ordinance and conduct aerial reconnaissance was critical. The history of the B-47 is relatively well known, and on this day in history (17 DEC 1947) it completed its first flight. The related, and subsequent, iterations of this six-engine subsonic long-range flyer are perhaps lesser known by the general public; the “B” standing for bomber/bombing and others based on this platform carrying various lettering respective to design or purpose. DAYTON, Ohio -- Boeing RB-47H at the National Museum of the United States Air Force. (U.S. Air Force photo) The RB-47H being a (r)econnaissance outfitting of the B-47E platform, was a crucial option when dealing with an adversary well accomplished in deception and misinformation. The role played was quite simple – gather intelligence on the size, location, and capability of the Russian air defense and radar networks. From 1955 to the mid 1960s the RB-47H filled this role until being replaced by the RC-135… arguably a more capable option. The RB-47H was capable of long range missions due to it’s base-design, and successfully operated out of countless airfields and bases. The positioning of these airfields of course played a strategic role in staging and coordinating defensive measures when an RB was intercepted. -

Preserving Ukraine's Independence, Resisting Russian Aggression

Preserving Ukraine’s Independence, Resisting Russian Aggression: What the United States and NATO Must Do Ivo Daalder, Michele Flournoy, John Herbst, Jan Lodal, Steven Pifer, James Stavridis, Strobe Talbott and Charles Wald © 2015 The Atlantic Council of the United States. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means without permission in writing from the Atlantic Council, except in the case of brief quotations in news articles, critical articles, or reviews. Please direct inquiries to: Atlantic Council 1030 15th Street, NW, 12th Floor Washington, DC 20005 ISBN: 978-1-61977-471-1 Publication design: Krystal Ferguson; Cover photo credit: Reuters/David Mdzinarishvili This report is written and published in accordance with the Atlantic Council Policy on Intellectual Independence. The authors are solely responsible for its analysis and recommendations. The Atlantic Council, the Brookings Institution, and the Chicago Council on Global Affairs, and their funders do not determine, nor do they necessarily endorse or advocate for, any of this report’s conclusions. February 2015 PREFACE This report is the result of collaboration among the Donbas provinces of Donetsk and Luhansk. scholars and former practitioners from the A stronger Ukrainian military, with enhanced Atlantic Council, the Brookings Institution, the defensive capabilities, will increase the pros- Center for a New American Security, and the pects for negotiation of a peaceful settlement. Chicago Council on Global Affairs. It is informed When combined with continued robust Western by and reflects mid-January discussions with economic sanctions, significant military assis- senior NATO and U.S.