How Will the Internet Scale?

Recommended publications

The Internet As Emerging Critical Infrastructure: What Needs to Be Measured?

The Internet as emerging critical infrastructure: what needs to be measured? Cooperative Association for Internet Data Analysis, 30 November 2005. Presented to LSN JET @ NSF (http://www.nitrd.gov/subcommittee/lsn/jet/).

Outline of talk
• what is critical infrastructure
• top problems of Internet
• historical context (incongruity)
• what have we learned and how can we apply it? [ case study: scalability (separate talk) ]
• what we (all) can do to help

Critical infrastructure
What is it? How does it get that way? What are common characteristics? Is the Internet one? Or will it be soon? What are the implications for public and private sectors? Underlying goals: innovation, economic strength, democracy, freedom, health, science, arts, society. It really is about living in a better world...

Top Internet problems: 16 operational Internet problems
• security
• authentication
• spam
• scalable configuration management
• robust scalability of routing system
• compromise of e2e principle
• dumb network
• measurement
• patch management
• “normal accidents”
• growth trends in traffic and user expectations
• time management and prioritization of tasks
• stewardship vs governance
• intellectual property and digital rights
• interdomain qos/emergency services
• inter-provider vendor/business coordination
Persistently unsolved problems for 10+ years (see presentations at www.caida.org)

Top Internet problems: why we’re not making progress
• top unsolved problems in internet operations and engineering are rooted in economics, ownership, and trust (EOT). -

Flooding Attacks by Exploiting Persistent Forwarding Loops

Flooding Attacks by Exploiting Persistent Forwarding Loops. Jianhong Xia, Lixin Gao and Teng Fei, University of Massachusetts, Amherst MA 01003, USA. Email: {jxia, lgao, tfei}@ecs.umass.edu

Introduction
• Routing determines forwarding paths
[Figure: example topology with nodes X, A, Y, C, B]

Why Persistent Forwarding Loop Occurs --- Example on Neglecting Pull-up Route
• Announces 18.0.0.0/16 to the Internet
• Router A has default route pointing to B
• Router A uses 18.0.0.0/24 only
• Any traffic to 18.0.1.0~18.0.255.255 will enter a forwarding loop between A and B
[Figure: the Internet, routers B and A, labels 18.0.0.0/16, 18.0.0.0/24, and default route]

Risk of Persistent Forwarding Loops
• Flooding attacks to legitimate hosts
[Figure: routers Ra, Rb, Rc; traffic to X (imperiled addresses) and traffic to Y (shadowed addresses)]
• How many shadowed addresses in the Internet?
• How many imperiled addresses in the Internet?

Measurement Design
• Design
– Balancing granularity and overhead
– Samples 2 addresses in each /24 IP block
• Address space collection
– Addresses covered by RouteView table
– De-aggregate prefixes to /24 prefixes
• Fine-grained prefixes
• Data traces
– Traceroute to 5.5 million fine-grained prefixes
– Measurement lasts for 3 weeks in Sep. 2005

Shadowed vs. Imperiled Addresses
• Shadowed addresses/prefixes
– 135,973 shadowed prefixes
– 2.47% of routable addresses
– Located in 5341 ASes
• Imperiled addresses/prefixes
– 42,887 imperiled prefixes
– 0.78% of routable addresses
– Located in 2117 ASes

Validating Persistent Forwarding Loops
• Validation from various locations
– From Asia, Europe, West and East coast of US -

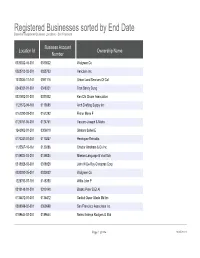

Registered Businesses Sorted by End Date Based on Registered Business Locations - San Francisco

Registered Businesses sorted by End Date Based on Registered Business Locations - San Francisco Business Account Location Id Ownership Name Number 0030032-46-001 0030032 Walgreen Co 0028703-02-001 0028703 Vericlaim Inc 1012834-11-141 0091116 Urban Land Services Of Cal 0348331-01-001 0348331 Tran Sandy Dung 0331802-01-001 0331802 Ken Chi Chuan Association 1121572-09-161 0113585 Arch Drafting Supply Inc 0161292-03-001 0161292 Fisher Marie F 0124761-06-001 0124761 Vaccaro Joseph & Maria 1243902-01-201 0306019 Shatara Suheil E 0170247-01-001 0170247 Henriquez Reinaldo 1125567-10-161 0130286 Chador Abraham & Co Inc 0189884-03-001 0189884 Mission Language & Vocl Sch 0318928-03-001 0318928 John W De Roy Chiroprac Corp 0030032-35-001 0030032 Walgreen Co 1228793-07-191 0148350 Willis John P 0310148-01-001 0310148 Blasko Peter B Et Al 0135472-01-001 0135472 Saddul Oscar Allado Md Inc 0369698-02-001 0369698 San Francisco Associates Inc 0189644-02-001 0189644 Neirro Erainya Rodgers G Etal Page 1 of 984 10/05/2021 Registered Businesses sorted by End Date Based on Registered Business Locations - San Francisco DBA Name Street Address City State Source Zipcode Walgreens #15567 845 Market St San Francisco CA 94103 Vericlaim Inc 500 Sansome St Ste 614 San Francisco CA 94111 Urban Land Services Of Cal 1170 Sacramento St 5d San Francisco CA 94108 Elizabeth Hair Studio 672 Geary St San Francisco CA 94102 Ken Chi Chuan Association 3626 Taraval St Apt 3 San Francisco CA 94116 Arch Drafting Supply Inc 10 Carolina St San Francisco CA 94103 Marie Fisher Interior -

The Great Telecom Meltdown

The Great Telecom Meltdown. For a listing of recent titles in the Artech House Telecommunications Library, turn to the back of this book.

The Great Telecom Meltdown, Fred R. Goldstein. artechhouse.com

Library of Congress Cataloging-in-Publication Data: A catalog record for this book is available from the U.S. Library of Congress.

British Library Cataloguing in Publication Data: Goldstein, Fred R. The great telecom meltdown. (Artech House Telecommunications Library) 1. Telecommunication--History 2. Telecommunication--Technological innovations--History 3. Telecommunication--Finance--History I. Title 384’.09 ISBN 1-58053-939-4

Cover design by Leslie Genser. © 2005 ARTECH HOUSE, INC., 685 Canton Street, Norwood, MA 02062. All rights reserved. Printed and bound in the United States of America. No part of this book may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without permission in writing from the publisher. All terms mentioned in this book that are known to be trademarks or service marks have been appropriately capitalized. Artech House cannot attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark. International Standard Book Number: 1-58053-939-4. 10 9 8 7 6 5 4 3 2 1

Contents: Hybrid Fiber-Coax (HFC) Gave Cable Providers an Advantage on “Triple Play”; RBOCs Took the Threat Seriously; Hybrid Fiber-Coax Is Developed; Cable Modems -

Research Articles in the American Economic Review, the RAND Journal of Economics, The

I. Introduction and Qualifications

1. My name is Nicholas S. Economides. I am a Professor of Economics at the Stern School of Business of New York University, located at 44 West 4th Street, New York, NY 10012.

2. I received a B.Sc. degree in Mathematical Economics (first class honors) from the London School of Economics in 1976, a Masters degree in Economics from the University of California at Berkeley in 1979 and a Ph.D. degree in Economics from Berkeley in 1981, specializing in Industrial Organization.

3. From 1981 to 1988, I was assistant and then associate professor of economics at Columbia University. From 1988 to 1990, I was associate professor of economics at Stanford University. I have taught at the Stern School of Business since 1990. During the academic year 1996-1997, I was visiting professor at Stanford University.

4. I have published more than seventy research papers in the areas of industrial organization, microeconomics, network economics, antitrust, finance, and telecommunications policy, and I have given numerous seminar presentations at academic and government institutions and conferences. I have published academic research articles in the American Economic Review, the RAND Journal of Economics, the International Journal of Industrial Organization, the International Economic Review, the Journal of Economic Theory, and the Journal of Industrial Economics, among others. I am currently editor of the International Journal of Industrial Organization and of Netnomics. I have served as advisor and consultant to major telecommunications companies, a number of the Federal Reserve Banks, the Bank of Greece, and major Financial Exchanges. I teach graduate (MBA and Ph.D.) courses in antitrust, industrial organization, microeconomics, and telecommunications. A copy of my curriculum vitae is attached as Attachment 1. -

Economic Value of the Advertising-Supported Internet Ecosystem

Economic Value of the Advertising-Supported Internet Ecosystem. June 10, 2009. Authored by Hamilton Consultants, Inc., with Dr. John Deighton, Harvard Business School, and Dr. John Quelch, Harvard Business School. Hamilton Consultants, Cambridge, Massachusetts.

Executive Summary

1. Background
1.1 Purpose of the study
1.2 The Internet today
1.3 Structure of the Internet

2. The Advertising-Supported Internet
2.1 Internet advertising segments
2.2 The value of the advertising-supported Internet

3. Internet Companies and Employment by Internet Segment
3.1 Overview of Internet companies
3.2 Summary of employment
3.3 Internet service providers (ISPs) and transport
3.4 Hardware providers
3.5 Information technology consulting and solutions companies
3.6 Software companies
3.7 Web hosting and content management companies
3.8 Search engines/portals
3.9 Content sites: news, entertainment, research, information services
3.10 Software as a service (SaaS)
3.11 Advertising agencies and ad support services
3.12 Ad networks
3.13 E-mail marketing and support
3.14 Enterprise-based Internet marketing, advertising and web design
3.15 E-commerce: e-tailing, e-brokerage, e-travel, and others
3.16 B2B e-commerce

4. Companies and Employment by Geography
4.1 Company headquarters and total employees by geography
4.2 Census data for Internet employees by geography
4.3 Additional company location data by geography

5. Benefits of the Ad-Supported Internet Ecosystem
5.1 Overview of types of benefits
5.2 Providing universal access to unlimited information
5.3 Creating employment
5.4 Providing one of the pillars of economic strength during the 2008-2009 recession
5.5 Fostering further innovation
5.6 Increasing economic productivity
5.7 Making a significant contribution to the U.S. -

Division of Investment Department of the Treasury State of New Jersey Pension Fund June 30, 2009 and 2008 (With Independent Auditors’ Report Thereon)

Financial Statements, Management’s Discussion and Analysis and Supplemental Schedules. Division of Investment, Department of the Treasury, State of New Jersey, Pension Fund. June 30, 2009 and 2008 (With Independent Auditors’ Report Thereon).

Contents
Independent Auditors’ Report
Management’s Discussion and Analysis
Basic Financial Statements:
Statements of Net Assets
Statements of Changes in Net Assets
Notes to Financial Statements
Supplemental Schedules:
Schedule 1 – Combining Schedule of Net Assets
Schedule 2 – Combining Schedule of Changes in Net Assets
Schedule 3 – Portfolio of Investments – Common Fund A
Schedule 4 – Portfolio of Investments – Common Fund B -

Technology Fast 500

2001 Deloitte & Touche Technology Fast 500. www.fast500.com

Leading the Way

It’s been a tough year for the technology sector, and the companies on this year’s Deloitte & Touche Technology Fast 500 are not immune to the effects of the tech correction and the slowing of the U.S. economy. Indeed, some Fast 500 companies have seen their businesses slow significantly in 2001 and are struggling after five years of dramatic growth (the 2001 Fast 500 measures five-year growth through fiscal 2000).

With that said, this year’s Technology Fast 500 companies, as a group, found a way to grow even faster than their predecessors. The 2001 Technology Fast 500 averaged a five-year percentage revenue growth rate of 6,184 percent, compared to 3,956 percent for last year’s list. The top five companies grew an average of 93,496 percent, compared to 59,367 percent for last year’s top five.

Industry segments represented were relatively stable from last year, with software companies again leading the way with 221 companies or 44 percent of the list. Notably, given the malaise in the field, communications companies jumped from nine percent to 13 percent of the list. While Internet companies are prominent at the top of the list, as a group, they slid from 17 percent to 15 percent.

Geographical Shifts

After a one-year hiatus, the West resumed its position at the head of the technology class, accounting for 32 percent of the Fast 500, up from 27 percent. California was, by far, the biggest contributor to the list with 132 companies, including two of the top three companies, calling the Golden State -

Before the FEDERAL COMMUNICATIONS COMMISSION Washington, D.C

Before the FEDERAL COMMUNICATIONS COMMISSION, Washington, D.C. 20554

In the Matter of SBC Communications, Inc. and AT&T Corp., Applications for Approval of Transfer of Control. WC Docket No. 05-65

REPLY COMMENTS OF BT AMERICAS INC. AND BT INFONET USA

BT AMERICAS INC. AND BT INFONET USA

Joel S. Winnik, David J. Saylor, David L. Sieradzki. HOGAN & HARTSON LLP, 555 – 13th St., NW, Washington, D.C. 20004, (202) 637-5600

A. Sheba Chacko, Chief Regulatory Counsel, The Americas. BT AMERICAS INC., 11440 Commerce Park Drive, Reston, VA 20191

Kristen Neller Verderame, VP, U.S. Regulation & Government Relations. BT AMERICAS INC., 2025 M St., NW, Suite 450, Washington, D.C. 20036

Dated: May 10, 2005

TABLE OF CONTENTS

Introduction and Summary

I. The Merger Would Harm Competition For Global Telecommunications Services, To The Detriment Of Business Consumers

A. The Merger Would Increase Concentration in the GTS Market Because it Would Eliminate SBC as a Potentially Powerful New Entrant in the GTS Market

B. The Merger Would Harm GTS Competition By Increasing SBC’s Monopoly Market Power Over Local Special Access Connections For Enterprise Networks, A Critical Wholesale Input to GTS

1. GTS Consumers And Providers Are Highly Dependent on SBC’s Special Access Service for Local Connectivity

2. SBC Already Has Enormous Market Power Over Special Access and Other Local Wholesale Services in Its Region

3. The Merger Would Strengthen SBC’s Special Access Monopoly By Eliminating One of the Few Remaining Serious Special Access Competitors and the Largest Special Access Consumer -

Case No COMP/M.3752 - VERIZON / MCI

EN

Case No COMP/M.3752 - VERIZON / MCI

Only the English text is available and authentic.

REGULATION (EC) No 139/2004 MERGER PROCEDURE

Article 6(1)(b) NON-OPPOSITION. Date: 07/10/2005

In electronic form on the EUR-Lex website under document number 32005M3752

Office for Official Publications of the European Communities, L-2985 Luxembourg

COMMISSION OF THE EUROPEAN COMMUNITIES. Brussels, 07/10/2005. SG-Greffe(2005) D/205408

PUBLIC VERSION. MERGER PROCEDURE ARTICLE 6(1)(b) DECISION

In the published version of this decision, some information has been omitted pursuant to Article 17(2) of Council Regulation (EC) No 139/2004 concerning non-disclosure of business secrets and other confidential information. The omissions are shown thus […]. Where possible the information omitted has been replaced by ranges of figures or a general description.

To the notifying party:

Dear Sir/Madam,

Subject: Case No COMP/M.3752 - Verizon/MCI. Notification of 2 September 2005 pursuant to Article 4 of Council Regulation No 139/2004

1. On 2 September 2005, the Commission received a notification of a proposed concentration pursuant to Article 4 of Council Regulation (EC) No 139/2004 by which the undertaking Verizon Communications Inc. (“Verizon”, USA) acquires within the meaning of Article 3(1)(b) of the Council Regulation control of the whole of the undertaking MCI Inc (“MCI”, USA) by way of purchase of shares.

I. THE PARTIES

2. Verizon provides telecommunications services to residential, small business and some large corporate and government customers in various regions of the U.S. These services include local, domestic long distance and international voice telephony services. -

Is Cybersecurity a Priority in Your Business?

ST. LOUIS BUSINESS JOURNAL. TABLE OF EXPERTS: IS CYBERSECURITY A PRIORITY IN YOUR BUSINESS?

Meet the experts: SEKHAR PRABHAKAR, DAVE HARTLEY, ROB MCCORMICK, THOMAS HAYDE

Sekhar Prabhakar is one of the founders of C-Edge Software Consultants. Prabhakar started his career as a software engineer at Arms Inc. working on a subcontract with Booz Allen Hamilton. He was one of the key members of the supply chain management system development team for Continental Baking Co. (Wonder Bread). Prabhakar then joined Sybase Open Solutions Group and -

David Hartley is a principal with UHY LLP in St. Louis delivering “Virtual CIO” technology guidance to primarily private businesses in the middle market. Hartley assists companies with everything from digital transformation and IT strategy to assessing cyber risks and implementing cybersecurity programs. Hartley has experience in technology, consulting, audit -

Rob McCormick founded Avatara in 2005 with the goal of bringing enterprise level IT solutions to midsized businesses nationwide through CompleteCloud. CompleteCloud is the most secure private cloud environment on the market that leverages virtual desktops to help companies centralize data, increase performance, and rethink the way they pay -

As a privacy professional at Spencer Fane, Thomas Hayde aids clients in developing comprehensive strategies to address information practices and data breach risks, avoid potential claims and liabilities, and ensure compliance with all relevant legal requirements. Hayde also helps clients develop and implement -

The Great Telecom Meltdown

4 The Internet Boom and the Limits to Growth

Nothing says “meltdown” quite like “Internet.” For although the boom and crash cycle had many things feeding it, the Internet was at its heart. The Internet created demand for telecommunications; along the way, it helped create an expectation of demand that did not materialize. The Internet’s commercialization and rapid growth led to a supply of “dotcom” vendors; that led to an expectation of customers that did not materialize. But the Internet itself was not at fault. The Internet, after all, was not one thing at all; as its name implies, it was a concatenation [1] of networks, under separate ownership, connected by an understanding that each was more valuable because it was able to connect to the others. That value was not reduced by the mere fact that many people overestimated it.

The ARPAnet Was a Seminal Research Network

The origins of the Internet are usually traced to the ARPAnet, an experimental network created by the Advanced Research Projects Agency, a unit of the U.S. Department of Defense, in conjunction with academic and commercial contractors. The ARPAnet began as a small research project in the 1960s. It was pioneering packet-switching technology, the sending of blocks of data between computers. The telephone network was well established and improving rapidly, though by today’s standards it was rather primitive: digital transmission and switching were yet to come. But the telephone network was not well suited to the bursty nature of data.

A number of individuals and companies played a crucial role in the ARPAnet’s early days [2].