Contributions to Information Theoretically Secure Communication

Recommended publications

-

L0702108.Pdf

WARNING Caution: To reduce the risk of electric shock, do To reduce the risk of fire or electric shock, do not RISKOFELECTRICSHOCKthe lamp, no user serviceable parts inside. Refer l not remove cover (or back). With the exception of expose this product to rain or DONOTOPEN servicing to qualified service personnel. moisture. The apparatus shall not be exposed to dripping or _t This symbol indicates _]_11 Thissy mbolindicates splashing and no objects filled "dangerous voltage" inside important instructions with liquids, such as vases, shall the product that presents accompanying the product. a risk of electric shock or be placed on the apparatus. personal injury. Special disposal of the lamp for environmental reasons may be Thisrequiredsymbolunderindicatesthe lawsthatapplicablethe lamp toin yourjurisdiction.the HDTV containsFormercury.disposal or recycling information, please contact your local authorities or the Electronic Industries Alliance: www.eiae.org, or call 1-800-338-0376 for more details. Refer to the identification/rating label located on the back panel of your product for its proper operating voltage. FCC Regulations state that unauthorized changes or modifications to this equipment may void the user's authority to operate it. Cable fV Installer: This reminder is provided to call your attention to Article 820-40 of the National Electrical Code (Section 54 of the Canadian Electrical Code, Part 1) which provides guidelines for proper grounding and, in particular, specifies that the cable ground shall be connected to the grounding system of the building as close to the point of cable entry as practical. Important: This television is a table model and is designed to sit on a firm, flat, surface. -

SPECTRUM TV PACKAGES Hillsborough, Pinellas, Pasco & Hernando Counties |

SPECTRUM TV PACKAGES Hillsborough, Pinellas, Pasco & Hernando Counties | Investigation Discovery WFTS - ABC HD GEM Shopping Network Tennis Channel 87 1011 1331 804 TV PACKAGES SEC Extra HD WMOR - IND HD GEM Shopping Network HD FOX Sports 2 88 1012 1331 806 SundanceTV WTVT - FOX HD EWTN CBS Sports Network 89 1013 1340 807 Travel Channel WRMD - Telemundo HD AMC MLB Network SPECTRUM SELECT 90 1014 1355 815 WTAM - Azteca America WVEA - Univisión HD SundanceTV Olympic Channel 93 1015 1356 816 (Includes Spectrum TV Basic Community Programming WEDU - PBS Encore HD IFC NFL Network 95 1016 1363 825 and the following services) ACC Network HD WXPX - ION HD Hallmark Mov. & Myst. ESPN Deportes 99 1017 1374 914 WCLF - CTN HSN WGN America IFC FOX Deportes 2 101 1018 1384 915 WEDU - PBS HSN HD Nickelodeon Hallmark Mov. & Myst. NBC Universo 3 101 1102 1385 929 WTOG - The CW Disney Channel Disney Channel FX Movie Channel El Rey Network 4 105 1105 1389 940 WFTT - UniMás Travel Channel SonLife WVEA - Univisión HD TUDN 5 106 1116 1901 942 WTTA - MyTV EWTN Daystar WFTT - UniMás HD Disney Junior 6 111 1117 1903 1106 WFLA - NBC FOX Sports 1 INSP Galavisión Disney XD 8 112 1119 1917 1107 Bay News 9 IFC Freeform WRMD - Telemundo HD Universal Kids 9 113 1121 1918 1109 WTSP - CBS SundanceTV Hallmark Channel Nick Jr. 
10 117 1122 1110 WFTS - ABC FX Upliftv HD BYUtv 11 119 1123 SPECTRUM TV BRONZE 1118 WMOR - IND FXX ESPN ESPNEWS 12 120 1127 1129 WTVT - FOX Bloomberg Television ESPN2 (Includes Spectrum TV Select ESPNU 13 127 1128 and the following channels) 1131 C-SPAN TBN FS Sun ESPN Deportes 14 131 1148 1132 WVEA - Univisión Investigation Discovery FS Florida FOX Sports 2 15 135 1149 1136 WXPX - ION FOX Business Network SEC Network Digi Tier 1 CBS Sports Network 17 149 1150 LMN 1137 WGN America Galavisión NBC Sports Network 50 NBA TV 18 155 1152 TCM 1140 WRMD - Telemundo SHOPHQ FOX Sports 1 53 MLB Network 19 160 1153 Golf Channel 1141 TBS HSN2 HD SEC Extra HD 67 NFL Network 23 161 1191 BBC World News 1145 OWN QVC2 HD Spectrum Sports Networ. -

SPECTRUM TV PACKAGES Morganfield Sturgis/Uniontown/Waverly | August 2021

SPECTRUM TV PACKAGES Morganfield Sturgis/Uniontown/Waverly | August 2021 TV PACKAGES 55 Oxygen 395 Pets.TV 286 StarzEncore Family - E 860 ESPN College Extra 56 Discovery Channel 396 Recipe.TV 288 StarzEncore Westerns - E 861 ESPN College Extra SPECTRUM BASIC 57 TLC 551 Smithsonian Channel 862 ESPN College Extra 58 The Weather Channel 555 RFD-TV MULTICULTURAL 863 ESPN College Extra (Includes Digital Music channels 60 Freeform 744 Black News Channel CHANNELS 864 ESPN College Extra and the following services) 61 FX 758 Heroes & Icons 865 ESPN College Extra 1 Spectrum News 1 (KY) 63 Cartoon Network 768 Hallmark Drama LATINO VIEW 866 ESPN College Extra Bowling Green 65 FS South 867 ESPN College Extra HBO 93 Univisión 3 WTSN - Antenna TV 70 National Geographic 135 Tr3s OTHER SERVICES 4 WFIE - NBC 74 FX Movie Channel 401 HBO - E 201 NBC Universo 8 WTVW - The CW 76 SEC Network 405 HBO 2 - E 202 Estrella TV 360 The Filipino Channel 9 WNIN - PBS 77 SEC Extra 407 HBO Comedy - E 78 FXX 203 Cinelatino 365 TV Asia 410 HBO Family - E 10 WEVV - CBS 204 CNN en Español 367 TV JAPAN 79 Hallmark Mov. & Myst. 412 HBO Latino - E 11 WEHT - ABC 205 Discovery en Español 368 RTN (Russian) 80 IFC 415 HBO Signature - E 12 WKOH - PBS 206 ESPN Deportes 370 ZTC Chinese 81 Investigation Discovery 417 HBO Zone - E 14 WEVV - FOX/MyTV 207 Hogar de HGTV 371 CCTV-4 98 C-SPAN2 90 SundanceTV 208 FOX Deportes 372 TV5MONDE 99 C-SPAN 9 4 MotorTrend Showtime 209 HISTORY en Español 373 RAI Italia 118 C-SPAN3 96 BBC America 426 Showtime - E 210 íHOLA! TV 374 ART Cable 227 WYYW CD -

Sharp-LC-70LE650U-AQUOS-Smart

SHARP LC-80LE857U LC-80LE650U LC-70LE857U LC-70LE650U LC-60LE857U LC-60LE650U / LC-80LE757U LC-70C7500U AQU OS LC-70LE757U LC-60C7500U LIQUID CRYSTAL TELEVISION LC TI:!:LEVISEU R ACL B TELEVISOR CON PANTALLA DE CRISTAL LiQUIDO L OPERATION MANUAL MODE D'EMPLOI MANUAL DE OPERACION S N0M1245_===--_ NYOE IMPORTANT : Please read this operation manual before starting operating the equipment. IMPORTANT : Veuillez lire ce mode d'emploi avant de commencer & utiliser I'appareil. LC IMPORTANTE : Lea este manual de operaci6n antes de comenzar a operar el equipo. DOLBY H_Frll| TM DIGITAL PLUS NIGN-DEFINITION MULTIMEDIA INTERFACE (Except for LC-90LE657U) (forthe LE857U, LE757U, 2.0+Digital Out LE755U, LE657U and LE655U models) (Except for LC-70LE750U and LC-60LE750U) Downloaded from www.Manualslib.com manuals search engine Skype TM is supported by this TV set. For details, refer to the following: For the operatiorl manual: http://www, sharpusa, corn (USA) http://www.sharp.ca (Canada) http://www.sharp.com.mx (Mexico) For information on the communication camera: http://freetalk.me/product/sharp/ For details on Skype: http://www.skype.com Ce televiseur eet compatible avec Skype TM. Pour lee details, reportez-vous a : Pour le mode d'emploi : http://www.sharpusa.com (Etats-Unis) htt p://w_,_,'v,shar p.ca (Canada) http://www.sharp.com.mx (Mexique) Pour des informations sur la camera de communication : http://freetalk.me/product/sharp/ Pour les d6tails sur Skype : http://www.skype.com Skype TM ee compatible con eete televisor. Para conocer m_s detallee, consulte: -

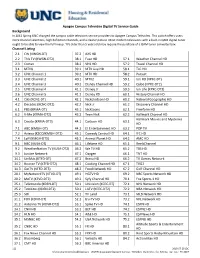

Channel and Setup Guide

Apogee Campus Televideo Digital TV Service Guide Background In 2013 Spring UNC changed the campus cable television service providers to Apogee Campus Televideo. This switch offers users more channel selections, high definition channels, and a clearer picture. Most modern televisions with a built-in QAM digital tuner ought to be able to view the full lineup. TVs older than 5 years old may require the purchase of a QAM tuner converter box. Channel Listing 2.1 CW (KWGN-DT) 37.2 AXS HD 2.2 This TV (KWGN-DT2) 38.1 Fuse HD 57.1 Weather Channel HD 2.3 Comet 38.2 VH1 HD 57.2 Travel Channel HD 3.1 MTVU 39.1 MTV Live HD 58.1 TLC HD 3.2 UNC Channel 1 39.2 MTV HD 58.2 Pursuit 3.3 UNC Channel 2 40.1 MTV2 59.1 Ion HD (KPXC-DT) 3.4 UNC Channel 3 40.2 Disney Channel HD 59.2 Qubo (KPXC-DT2) 3.5 UNC Channel 4 41.1 Disney Jr 59.3 Ion Life (KPXC-DT3) 3.6 UNC Channel 5 41.2 Disney XD 60.1 History Channel HD 4.1 CBS (KCNC-DT) 42.1 Nickelodeon HD 60.2 National Geographic HD 4.2 Decades (KCNC-DT2) 42.2 Nick Jr 61.2 Discovery Channel HD 6.1 PBS (KRMA-DT) 43.1 Nicktoons 62.1 Freeform HD 6.2 V-Me (KRMA-DT2) 43.2 Teen Nick 62.2 Hallmark Channel HD Hallmark Movies and Mysteries 6.3 Create (KRMA-DT3) 44.1 Cartoon HD 63.1 HD 7.1 ABC (KMGH-DT) 44.2 E! Entertainment HD 63.2 POP TV 7.2 Azteca (KZCO/KMGH-DT2) 45.1 Comedy Central HD 64.1 IFC HD 7.4 Laff (KMGH-DT3) 45.2 Animal Planet HD 64.2 AMC HD 9.1 NBC (KUSA-DT) 46.1 Lifetime HD 65.1 ReelzChannel 9.2 WeatherNation TV (KUSA-DT2) 46.2 We TV HD 65.2 TBS HD 9.3 Justice Network 47.1 Oxygen 66.1 TNT HD 14.1 UniMás (KTFD-DT) -

Channel Lineup Alphabetical

CHANNEL LINEUP for BASIC & EXPANDED BASIC CABLE PACKAGES - Listed Alphabetically STB = Set Top Box DTV = Digital TV w/ QAM tuner * = Channel availble in HD, with use of HD STB ** = Channel available only in HD, with use of HD STB STB DTV CHANNEL STB DTV CHANNEL STB DTV CHANNEL 70 70-5 A&E* 19 19-5 Fox - WXIX* (Cincinnati) 96 96-5 Outside TV* 9 9-5 ABC - WCPO* (Cincinnati) 56 56-5 Fox News* 90 90-5 OWN* 36 36-5 ABC - WTVQ* (Lexington) 31 31-5 Fox Sports 1* 87 87-5 Oxygen* 101 101-5 Animal Planet* 29 29-5 Fox Sports Ohio* 48 48-5 PBS - WCET* (Cincinnati) BASIC CABLE 160 160-5 Antenna TV 30 30-5 Fox Sports South* 81 81-5 Pop* 47 47-5 Boomerang 83 83-5 Freeform* 194 194-5 Quest 131 131-5 Bounce (Cincinnati) 75 75-5 FX* 111 111-5 QVC* & 171 171-5 Bounce (Lexington) 76 76-5 FXX* 102 102-5 RFD* 88 88-5 Bravo* 35 35-5 Golf Channel* 32 32-5 SEC Network* 46 46-5 Cartoon Network* 152 152-5 Grit (Cincinnati) 33 33-5 SEC Network Overflow EXPANDED 12 12-5 CBS - WKRC* (Cincinnati) 195 195-5 Grit (Lexington) 77 77-5 Spike* 18 18-5 CBS - WKYT* (Lexington) 2 2-5 Guide 141 141-5 Stadium BASIC CABLE 163 163-5 Charge! 85 85-5 Hallmark* 74 74-5 SyFy* 151 151-5 Circle (Cincinnati) 58 58-5 Headline News* 162 162-5 TBD 181 181-5 Circle (Lexington) 150 150-5 Heroes & Icons 113 113-5 TBN* 110 110-5 CMT* 94 94-5 HGTV* 72 72-5 TBS* 60 60-5 CNBC* 99 99-5 History* 91 91-5 TLC* CHANNEL 57 57-5 CNN* 112 112-5 Home Shopping Network* 73 73-5 TNT* 107 107-5 Comedy Central* 103 103-5 Investigation Discovery* 92 92-5 Travel Channel* 161 161-5 Comet 153 153-5 Ion 104 104-5 -

TV Compatibility

Television Compatibility The University/College digital cable system requires a television, DVR or other tuner device with a QAM tuner. You must check the specifications from your manufacturer to determine if it includes the required tuner. The types of tuners currently in use in the United States are listed here for your reference. NTSC TUNER (NOT COMPATIBLE) NTSC, named for the National Television System Committee, is the analog television system used in the United States from 1941 to 2009. After nearly 70 years of use, the FCC ordered the discontinuation of most over-the-air NTSC transmissions in the United States in 2009. Analog channels are still available on many cable systems to provide basic programming without the use of a cable conversion box. Some manufacturers no longer include this type of tuner in televisions built after 2009. ATSC TUNER (NOT COMPATIBLE) An ATSC (Advanced Television Systems Committee) tuner, often called an ATSC receiver or HDTV tuner is a type of television tuner that allows reception of digital television (DTV) television channels transmitted by television stations in North America. The FCC required all television manufacturers to include an ATSC tuner in all products since 2007, and required television broadcasters to switch in 2009. This type of tuner is currently included in all new televisions, including the inexpensive "digital conversion boxes" that were widely available leading up to the 2009 switchover. QAM (REQUIRED) QAM (quadrature amplitude modulation) is the format by which digital cable channels are encoded and transmitted via cable television providers, including CWRU. A QAM tuner is the cable equivalent of an ATSC tuner which receives over-the-air digital channels broadcast by local television stations. -

Written Direct Statement of Settling Devotional Claimants, in the Matter of Phase II Distribution of the 1998 and 1999 Cable Royalty Funds

Before the COPYRIGHT ROYALTY JUDGES Washington, DC DR I GIMEL In the Matter of ) ) Distribution of the 2004, 2005, ) Docket No. 2012-6 CRB CD 2004-2009 2006, 2007, 2008 and 2009 ) (Phase II) Cable Royalty Funds ) In the Matter of ) ) Distribution of the 1999-2009 ) Docket No. 2012-7 CRB SD 1999-2009 Satellite Royalty Funds ) (Phase II) WRITTEN DIRECT STATEMENT OF THE SETTLING DEVOTIONAL CLAIMANTS Clifford M. Harrington (D.C. Bar No. 218107) Matthew J. MacLean (D.C. Bar No. 479257) Victoria N. Lynch (D.C. Bar No. 1001445) PILLSBURY WINTHROP SHAW PITTMAN LLP Post Office Box 57197 Washington, DC 20036-9997 Telephone: 202-663-8525 Facsimile: 202-663-8007 E-Mail:[email protected] Counselfor Settling Devotional Claimants August 22, 2016 Table of Contents I. Introductory Comments II. Supplemental Testimony of Toby Berlin, School of Toby, Inc. III. Supplemental Testimony of Erkan Erdem, Ph.D., KPMG LLP IV. Supplemental Testimony of John Sanders, Bond k, Pecaro, Inc. V. Declaration of Shirley Mahue, Coral Ridge Ministries Media, Inc. VI. Declaration of Peter T. Vay, Pillsbury Winthrop Shaw Pittman LLP VII. Oral Testimony of Toby Berlin, Dkt. Nos. 2012-6 CRB CD 2004-2009 (Phase II) & 2012-7 CRB SD 1999-2009 (Phase II), Direct and Rebuttal Hearing, Tr. Vol 2 at 60:12-145:4 (Apr. 14, 2015). VIII. Written Direct Testimony of Toby Berlin, Dkt. Nos. 2012-6 CRB CD 2004-2009 (Phase Il) A 2012-7 CRB SD 1999-2009 (Phase Ii), Amended Written Direct Statement of the Settling Devotional Claimants (Jul. 8, 2014). Before the COPYRIGHT ROYALTY JUDGES Washington, DC In the Matter of ) ) Distribution of the 2004, 2005, ) Docket No. -

Cable Technician Pocket Guide Subscriber Access Networks

RD-24 CommScope Cable Technician Pocket Guide Subscriber Access Networks Document MX0398 Revision U © 2021 CommScope, Inc. All rights reserved. Trademarks ARRIS, the ARRIS logo, CommScope, and the CommScope logo are trademarks of CommScope, Inc. and/or its affiliates. All other trademarks are the property of their respective owners. E-2000 is a trademark of Diamond S.A. CommScope is not sponsored, affiliated or endorsed by Diamond S.A. No part of this content may be reproduced in any form or by any means or used to make any derivative work (such as translation, transformation, or adaptation) without written permission from CommScope, Inc and/or its affiliates ("CommScope"). CommScope reserves the right to revise or change this content from time to time without obligation on the part of CommScope to provide notification of such revision or change. CommScope provides this content without warranty of any kind, implied or expressed, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. CommScope may make improvements or changes in the products or services described in this content at any time. The capabilities, system requirements and/or compatibility with third-party products described herein are subject to change without notice. ii CommScope, Inc. CommScope (NASDAQ: COMM) helps design, build and manage wired and wireless networks around the world. As a communications infrastructure leader, we shape the always-on networks of tomor- row. For more than 40 years, our global team of greater than 20,000 employees, innovators and technologists have empowered customers in all regions of the world to anticipate what's next and push the boundaries of what's possible. -

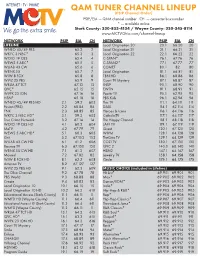

QAM Channel Lineup

QAM TUNER CHANNEL LINEUP (PSIP Channel Order) PSIP/EIA — QAM channel number CH — converter box number * — available online Stark County: 330-833-4134 / Wayne County: 330-345-8114 www.MCTVOhio.com/channel-lineup NETWORK PSIP EIA CH NETWORK PSIP EIA CH LIFELINE Local Origination 20 20.1 66.20 20 WNEO 45/49 PBS 65.2 2 Local Origination 21 21. 1 66.21 21 WKYC 3 NBC* 65.3 3 Local Origination 22 22.1 66.22 22 WOIO 19 CBS 65.4 4 C-SPAN* 76.1 67.76 76 WEWS 5 ABC* 65.5 5 C-SPAN2* 77.1 67.77 77 WUAB 43 CW 65.6 6 COMET 80.1 82 80 WBNX 55 65.7 7 Local Origination 81. 1 66.81 81 WJW 8 FOX 65.8 8 TBN HD 86.1 68.86 86 WVIZ 25 PBS 65.9 9 Court TV Mystery 87.1 68.87 87 WRLM 47 TCT 67. 13 13 INSP 90.1 68.90 90 QVC* 65.15 15 EWTN 91.1 68.91 91 WVPX 23 ION 67.16 16 Positiv TV 95.1 62.95 95 HSN* 65.18 18 PBS Kids 96.1 62.96 96 WNEO 45/49 PBS HD 2.1 59.2 602 This TV 111 . 1 64.111 111 Fusion (PBS) 2.2 68.84 84 DABL 114.1 62.114 114 FNX 2.3 68.85 85 Heroes & Icons 116.1 64.116 116 WKYC 3 NBC HD* 3.1 59.3 603 CatholicTV 117. 1 64.117 117 True Crime Network 3.2 67.14 14 The Happy Channel 118.1 68.118 118 WOIO 19 CBS HD 4.1 60.2 604 LAFF TV 119.1 6 7. -

SPECTRUM TV PACKAGES Desert Cities | August 2018

SPECTRUM TV PACKAGES Desert Cities | August 2018 Hallmark Channel SEC Network Nat Geo Wild Showtime Fam Zn - E 88 384 130 558 TV PACKAGES Hallmark Mov. & Myst. SEC Extra Smithsonian Channel Showtime - E 89 385 131 559 INSP FOX Sports 1 Viceland 90 400 133 OWN Velocity HD fyi, OTHER SERVICES SPECTRUM SELECT 91 403 134 Oxygen EWTN Fusion 92 460 144 (Includes Spectrum TV Basic FXX INSP El Rey Network Music Choice 93 461 145 1901 and the following services) FOX Sports 1 Daystar Heroes & Icons Music Choice 94 463 151 1902 KESQ - CBS FX Movie Channel TBN DIY Network Music Choice 2 95 464 161 1903 KESQ - ABC BBC America SonLife Cooking Channel Music Choice 3 96 470 163 1904 KNBC - NBC Community Bulletin Boa.. Hillsong Channel Fuse Music Choice 4 98 471 169 1905 KCWQ - The CW C-SPAN2 Shop Zeal 6 Lifetime Real Women Music Choice 5 99 476 174 1906 KABC - ABC USA Network Shop Zeal 7 GSN Music Choice 7 101 477 177 1907 PBS SoCal A&E Shop Zeal 8 Discovery Life Channel Music Choice 8 102 478 180 1908 KVCR - PBS TNT QVC TV One Music Choice 9 103 480 184 1909 C-SPAN TBS QVC2 ASPiRE TV Music Choice 10 104 481 185 1910 KDFX - FOX AMC Shop Zeal 1 Ovation Music Choice 11 105 482 187 1911 KVER - Univisión Discovery Channel HSN BBC World News Music Choice 12 106 483 209 1912 KMIR - NBC HISTORY HSN2 i24 Music Choice 13 107 484 210 1913 KMIR - MeTV FX Shop Zeal 3 Newsy Music Choice 14 108 485 224 1914 KUNA - Telemundo FXX Shop Zeal 4 Disney Junior Music Choice 15 109 486 254 1915 KCAL - IND BBC America EVINE Universal Kids Music Choice 16 110 487 255 1916 Government Access SYFY Shop Zeal 5 Baby First TV Music Choice 17 111 488 256 1917 KPSE - MyTV truTV Shop Zeal 2 Nick Jr. -

Pace International TV & Video Catalog

2017 PRODUCT CATALOG Video, Data & Content Leading, Simplifying & Supporting ABOUT PACE History Pace International was incorporated in 1972 by its founder Jim Deutsch. Headquartered in Rochester, MN, Pace catered to an independent base of TV and appliance retailers selling consumer electronics, repair parts, commercial audio and video, and TV reception equipment. Since then, the company has grown substantially from a regional distributor serving the upper Midwest to a national wholesaler ÃiÀÛV}VÕÃÌiÀÃ>xäÃÌ>ÌiÃ>` >>`>°½ÃÃ*>ÌÀV]Ü ÃÌ>ÀÌi`ÜÌ Ì iwÀ£ÇÇ]à now the CEO of the company and is carrying on the legacy of dedicated customer service, along with both of his sons. Since 2008, Pace has been the DISH national authorized commercial distributor for hardware and content. We offer solutions to PCO's, Integrators, ISP's, WISP's, CLEC’s and ILEC’s, helping them provide voice, video, and data anywhere in their communities. With unmatched value-added services, our team invites you to experience what differentiates Pace from the rest of the competition. Mission Statement Leading, simplifying and supporting the integration of hardware and content solutions within a complex communications marketplace. CONTENTS 2 LINE CARD 7 DISH EQUIPMENT smartbox smartbox Accessories Receivers and Accessories 19 HEADEND EQUIPMENT Racks, Shelves and Cabinets Headend Equipment Multiswitches and Modulators DISH QAM HD Over Coax 29 DATA PRODUCTS 41 LTE 43 CELLULAR BOOSTING 47 FIBER OPTIC 51 CABLING AND WIRING 55 INSTALLATION TOOLS Power Tools Installation Equipment Strippers