Computer Science

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

History of Computer Science from Wikipedia, the Free Encyclopedia

The history of computer science began long before the modern discipline of computer science, which emerged in the 20th century and was hinted at in the centuries prior. The progression, from mechanical inventions and mathematical theories towards the modern concepts and machines, formed a major academic field and the basis of a massive worldwide industry.[1]

Contents: 1 Early history (1.1 Binary logic; 1.2 Birth of computer); 2 Emergence of a discipline (2.1 Charles Babbage and Ada Lovelace; 2.2 Alan Turing and the Turing Machine; 2.3 Shannon and information theory; 2.4 Wiener and cybernetics; 2.5 John von Neumann and the von Neumann architecture); 3 See also; 4 Notes; 5 Sources; 6 Further reading; 7 External links

Early history

The earliest known tool for use in computation was the abacus, developed in the period 2700–2300 BCE in Sumer. The Sumerians' abacus consisted of a table of successive columns which delimited the successive orders of magnitude of their sexagesimal number system.[2] Its original style of usage was by lines drawn in sand with pebbles. Abaci of a more modern design are still used as calculation tools today.[3] The Antikythera mechanism is believed to be the earliest known mechanical analog computer.[4] It was designed to calculate astronomical positions. It was discovered in 1901 in the Antikythera wreck off the Greek island of Antikythera, between Kythera and Crete, and has been dated to c. 100 BCE. Technological artifacts of similar complexity did not reappear until the 14th century, when mechanical astronomical clocks appeared in Europe.[5] Mechanical analog computing devices appeared a thousand years later in the medieval Islamic world.

Alan Turing – Computer Designer

Brian E. Carpenter, with input from Robert W. Doran. The University of Auckland, May 2012

Turing, the theoretician
● Turing is widely regarded as a pure mathematician. After all, he was a B-star Wrangler (in the same year as Maurice Wilkes)
● “It is possible to invent a single machine which can be used to compute any computable sequence. If this machine U is supplied with the tape on the beginning of which is written the string of quintuples separated by semicolons of some computing machine M, then U will compute the same sequence as M.” (1937)
● So how was he able to write Proposals for development in the Mathematics Division of an Automatic Computing Engine (ACE) by the end of 1945?

Let’s read that carefully
● “It is possible to invent a single machine which can be used to compute any computable sequence. If this machine U is supplied with the tape on the beginning of which is written the string of quintuples separated by semicolons of some computing machine M, then U will compute the same sequence as M.”
● The founding statement of computability theory was written in entirely physical terms.

What would it take?
● A tape on which you can write, read and erase symbols.
● Poulsen demonstrated magnetic wire recording in 1898.
● A way of storing symbols and performing simple logic.
● Eccles & Jordan patented the multivibrator trigger circuit (flip-flop) in 1919.
● Rossi invented the coincidence circuit (AND gate) in 1930.
● Building U in 1937 would have been only slightly more bizarre than building a differential analyser with Meccano.

Concepts and Paradigms of Object-Oriented Programming: Expansion of Oct 4 OOPSLA-89 Keynote Talk, Peter Wegner, Brown University

1. What Is It?
1.1. Objects
1.2. Classes
1.3. Inheritance
1.4. Object-Oriented Systems

2. What Are Its Goals?
2.1. Software Components
2.2. Object-Oriented Libraries
2.3. Reusability and Capital-intensive Software Technology
2.4. Object-Oriented Programming in the Very Large

3. What Are Its Origins?
3.1. Programming Language Evolution
3.2. Programming Language Paradigms

4. What Are Its Paradigms?
4.1. The State Partitioning Paradigm
4.2. State Transition, Communication, and Classification Paradigms
4.3. Subparadigms of Object-Based Programming

5. What Are Its Design Alternatives?
5.1. Objects
5.2. Types
5.3. Inheritance
5.4. Strongly Typed Object-Oriented Languages
5.5. Interesting Language Classes
5.6. Object-Oriented Concept Hierarchies

6. What Are Its Models of Concurrency?
6.1. Process Structure
6.2. Internal Process Concurrency
6.3. Design Alternatives for Synchronization
6.4. Asynchronous Messages, Futures, and Promises
6.5. Inter-Process Communication
6.6. Abstraction, Distribution, and Synchronization Boundaries
6.7. Persistence and Transactions

7. What Are Its Formal Computational Models?
7.1. Automata as Models of Object Behavior
7.2. Mathematical Models of Types and Classes
7.3. The Essence of Inheritance
7.4. Reflection in Object-Oriented Systems

8.

Alan Turing's Automatic Computing Engine

5 Turing and the computer. B. Jack Copeland and Diane Proudfoot

The Turing machine

In his first major publication, ‘On computable numbers, with an application to the Entscheidungsproblem’ (1936), Turing introduced his abstract Turing machines.[1] (Turing referred to these simply as ‘computing machines’— the American logician Alonzo Church dubbed them ‘Turing machines’.[2]) ‘On Computable Numbers’ pioneered the idea essential to the modern computer—the concept of controlling a computing machine’s operations by means of a program of coded instructions stored in the machine’s memory. This work had a profound influence on the development in the 1940s of the electronic stored-program digital computer—an influence often neglected or denied by historians of the computer.

A Turing machine is an abstract conceptual model. It consists of a scanner and a limitless memory-tape. The tape is divided into squares, each of which may be blank or may bear a single symbol (‘0’ or ‘1’, for example, or some other symbol taken from a finite alphabet). The scanner moves back and forth through the memory, examining one square at a time (the ‘scanned square’). It reads the symbols on the tape and writes further symbols. The tape is both the memory and the vehicle for input and output. The tape may also contain a program of instructions. (Although the tape itself is limitless—Turing’s aim was to show that there are tasks that Turing machines cannot perform, even given unlimited working memory and unlimited time—any input inscribed on the tape must consist of a finite number of symbols.) A Turing machine has a small repertoire of basic operations: move left one square, move right one square, print, and change state.
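The scanner, tape, and basic operations described above can be illustrated with a small simulator. This is only a sketch under stated assumptions, not code from the quoted chapter: the function name `run_turing_machine`, the rule-table layout (each rule prints, moves, and changes state in a single step), the `"start"`/`"halt"` state names, the use of a space as the blank symbol, and the step limit are all choices made here for illustration.

```python
from collections import defaultdict

BLANK = " "  # assumed representation of a blank square


def run_turing_machine(rules, tape, state="start", halt_state="halt",
                       max_steps=10_000):
    """Run a single-tape machine and return the non-blank tape contents.

    rules maps (state, scanned_symbol) -> (symbol_to_print, move, next_state),
    where move is "L" (left one square) or "R" (right one square).
    """
    # The tape is unbounded in both directions: unseen squares read as blank.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0   # position of the scanned square
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached before the machine halted")
        symbol_to_print, move, state = rules[(state, cells[head])]
        cells[head] = symbol_to_print       # print
        head += 1 if move == "R" else -1    # move right or left one square
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(BLANK)


# Hypothetical example machine: invert every binary digit, then halt
# on reaching the first blank square.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", BLANK): (BLANK, "L", "halt"),
}

print(run_turing_machine(FLIP_RULES, "1011"))  # prints 0100
```

The step limit is a practical guard only; the abstract machine has no such bound, and a rule table that never reaches the halt state would otherwise loop forever.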

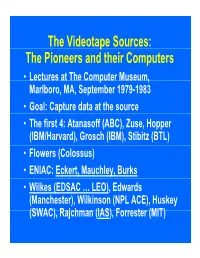

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers
• Lectures at The Computer Museum, Marlboro, MA, September 1979-1983
• Goal: Capture data at the source
• The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL)
• Flowers (Colossus)
• ENIAC: Eckert, Mauchly, Burks
• Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT)

What did it feel like then?
• What were the computers?
• Why did their inventors build them?
• What materials (technology) did they build from?
• What were their speed and memory size specs?
• How did they work?
• How were they used or programmed?
• What were they used for?
• What did each contribute to future computing?
• What were the by-products? and alumni/ae?

The “classic” five boxes of a stored program digital computer
[Figure: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA)]

How was programming done before programming languages and O/Ss?
• ENIAC was programmed by routing control pulse cables forming the “program counter”
• Clippinger and von Neumann made “function codes” for the tables of ENIAC
• Kilburn at Manchester ran the first 17 word program
• Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series
• Parallel versus Serial
• Pre-programming languages and operating systems
• Big idea: compatibility for program investment – EDSAC was transferred to Leo – The IAS Computers built at Universities

Time Line of First Computers
[Figure: timeline chart spanning 1935 to 1955 with rows for BTL, Zuse, Atanasoff, IBM ASCC, SSEC (then CPC), ENIAC, EDVAC, UNIVAC I, IAS, Colossus, Manchester (then Ferranti), EDSAC (then Leo), ACE (then DEUCE), Whirlwind, SEAC & SWAC]

ENIAC Project Time Line & Descendants
IBM 701, Philco S2000, ERA..

GEORGE ELMER FORSYTHE January 8, 1917—April 9, 1972

MATHEMATICS OF COMPUTATION, VOLUME 26, NUMBER 120, OCTOBER 1972

With the sudden death of George Forsythe, modern numerical analysis has lost one of its earliest pioneers, an influential leader who helped bring numerical analysis and computer science to their present positions. We in computing have been fortunate to have benefited from George's abilities, and we all feel a deep sense of personal and professional loss. Although George's interest in calculation can be traced back at least to his seventh grade attempt to see how the digits repeated in the decimal expansion of 10000/7699, his early research was actually in summability theory, leading to his Ph.D. in 1941 under J. D. Tamarkin at Brown University. A brief period at Stanford thereafter was interrupted by his Air Force service, during which he became interested in meteorology, co-authoring an influential book in that field. George then spent a year at Boeing, where his interest in scientific computing was reawakened, and in 1948 he began his fruitful association with the Institute for Numerical Analysis of the National Bureau of Standards on the UCLA campus, remaining at UCLA for three years after leaving the Institute in 1954. In 1957 he rejoined the Mathematics Department at Stanford as Professor, became director of the Computation Center, and eventually Chairman of the Computer Science Department when it was founded in 1965. George greatly influenced the development of modern numerical analysis, especially in numerical linear algebra where his 1953 classification of methods for solving linear systems gave structure to a field of great importance.

The Interactive Nature of Computing: Refuting the Strong Church-Turing Thesis

Dina Goldin, Peter Wegner. Brown University

Abstract. The classical view of computing positions computation as a closed-box transformation of inputs (rational numbers or finite strings) to outputs. According to the interactive view of computing, computation is an ongoing interactive process rather than a function-based transformation of an input to an output. Specifically, communication with the outside world happens during the computation, not before or after it. This approach radically changes our understanding of what is computation and how it is modeled. The acceptance of interaction as a new paradigm is hindered by the Strong Church-Turing Thesis (SCT), the widespread belief that Turing Machines (TMs) capture all computation, so models of computation more expressive than TMs are impossible. In this paper, we show that SCT reinterprets the original Church-Turing Thesis (CTT) in a way that Turing never intended; its commonly assumed equivalence to the original is a myth. We identify and analyze the historical reasons for the widespread belief in SCT. Only by accepting that it is false can we begin to adopt interaction as an alternative paradigm of computation. We present Persistent Turing Machines (PTMs), that extend TMs to capture sequential interaction. PTMs allow us to formulate the Sequential Interaction Thesis, going beyond the expressiveness of TMs and of the CTT. The paradigm shift to interaction provides an alternative understanding of the nature of computing that better reflects the services provided by today’s computing technology.

Introduction to the Literature on Programming Language Design Gary T

Computer Science Technical Reports, Computer Science, 7-1999. Gary T. Leavens, Iowa State University. Part of the Programming Languages and Compilers Commons.

Recommended Citation: Leavens, Gary T., "Introduction to the Literature On Programming Language Design" (1999). Computer Science Technical Reports. 59. http://lib.dr.iastate.edu/cs_techreports/59

Abstract: This is an introduction to the literature on programming language design and related topics. It is intended to cite the most important work, and to provide a place for students to start a literature search.

Keywords: programming languages, semantics, type systems, polymorphism, type theory, data abstraction, functional programming, object-oriented programming, logic programming, declarative programming, parallel and distributed programming languages

Disciplines: Programming Languages and Compilers

TR 93-01c, Jan. 1993, revised Jan. 1994, Feb. 1996, and July 1999

Dina Goldin · Scott A. Smolka · Peter Wegner (Eds.)

Interactive Computation: The New Paradigm. With 84 Figures

Editors:
Dina Goldin, Brown University, Computer Science Department, Providence, RI 02912, USA, [email protected]
Scott A. Smolka, State University of New York at Stony Brook, Department of Computer Science, Stony Brook, NY 11794-4400, USA, [email protected]
Peter Wegner, Brown University, Computer Science Department, Providence, RI 02912, USA, [email protected]

Cover illustration: M.C. Escher’s “Whirlpools” © 2006 The M.C. Escher Company-Holland. All rights reserved. www.mcescher.com

Library of Congress Control Number: 2006932390
ACM Computing Classification (1998): F, D.1, H.1, H.5.2
ISBN-10 3-540-34666-X Springer Berlin Heidelberg New York
ISBN-13 978-3-540-34666-1 Springer Berlin Heidelberg New York

This work is subject to copyright. All rights are reserved, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilm or in any other way, and storage in data banks. Duplication of this publication or parts thereof is permitted only under the provisions of the German Copyright Law of September 9, 1965, in its current version, and permission for use must always be obtained from Springer. Violations are liable for prosecution under the German Copyright Law. Springer is a part of Springer Science+Business Media, springer.com. © Springer-Verlag Berlin Heidelberg 2006. The use of general descriptive names, registered names, trademarks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use.

Know Your Discipline: Teaching the Philosophy of Computer Science

Journal of Information Technology Education, Volume 6, 2007. Matti Tedre, University of Joensuu, Dept. of Computer Science and Statistics, Joensuu, Finland, [email protected]

Executive Summary

The diversity and interdisciplinarity of computer science and the multiplicity of its uses in other sciences make it hard to define computer science and to prescribe how computer science should be carried out. The diversity of computer science also causes friction between computer scientists from different branches. Computer science curricula, as they stand, have been criticized for being unable to offer computer scientists proper methodological training or a deep understanding of different research traditions. At the Department of Computer Science and Statistics at the University of Joensuu we decided to include in our curriculum a course that offers our students an awareness of epistemological and methodological issues in computer science, and we wanted to design the course to be meaningful for practicing computer scientists. In this article the needs and aims of our course on the philosophy of computer science are discussed, and the structure and arrangements—the whys, whats, and hows—of that course are explained. The course, which is given entirely on-line, was designed for advanced graduate or postgraduate computer science students from two Finnish universities: the University of Joensuu and the University of Kuopio. The course has four relatively broad themes, and all those themes are tied to the students’ everyday work or their own research topics. I have prepared course readings about each of those four themes.

The Scholarly Work of Reliable and Well-Designed Mathematical Software a Personal Perspective

A personal perspective. Tim Davis, July 9, 2014

Outline: What defines a scholarly work? Does mathematical software fit that definition? Why publish prototype-quality mathematical software? Why publish robust, reliable, and full-featured mathematical software? Troubling trends and a troubling status quo. My recommendations for you and for SIAM.

Definition of “scholarly work”: Scholarly or artistic works, regardless of their form of expression: intended purpose is to disseminate the results of academic research, scholarly study, or artistic expression, ... in any medium. When is a work scholarly (software or any other form)? Proposed metrics: (1) peer-reviewed; (2) published in reputable academic journals; (3) published in academic-quality books; (4) topic of discussion at academic conferences; (5) citation for academic society fellow.

Peer-reviewed software: ACM Transactions on Mathematical Software, founded in 1975. Purpose: As a scientific journal, ACM Transactions on Mathematical Software (TOMS) documents the theoretical underpinnings of numeric, symbolic, algebraic, and geometric computing applications. It focuses on analysis and construction of algorithms and programs, and the interaction of programs and architecture. Algorithms documented in TOMS are available as the Collected Algorithms of the ACM in print, on microfiche, on disk, and online. 942 Collected Algorithms of the ACM since 1975: peer-reviewed scholarly software. I serve as an Associate Editor of this journal.

ACM TOMS Editorial Charter: The purpose of the ACM Transactions on Mathematical Software (TOMS) is to communicate important research results addressing the development, evaluation and use of mathematical software.

Alan Turing

Turing at the time of his election to Fellowship of the Royal Society.

Born: Alan Mathison Turing, 23 June 1912, Maida Vale, London, England, United Kingdom
Died: 7 June 1954 (aged 41), Wilmslow, Cheshire, England, United Kingdom
Residence: United Kingdom
Nationality: British
Fields: Mathematics, Cryptanalysis, Computer science
Institutions: University of Cambridge; Government Code and Cypher School; National Physical Laboratory; University of Manchester
Alma mater: King's College, Cambridge; Princeton University
Doctoral advisor: Alonzo Church
Doctoral students: Robin Gandy
Known for: Halting problem; Turing machine; Cryptanalysis of the Enigma; Automatic Computing Engine; Turing Award; Turing test; Turing patterns
Notable awards: Officer of the Order of the British Empire; Fellow of the Royal Society

Alan Mathison Turing, OBE, FRS (/ˈtjʊərɪŋ/ TEWR-ing; 23 June 1912 – 7 June 1954), was a British mathematician, logician, cryptanalyst, and computer scientist. He was highly influential in the development of computer science, giving a formalisation of the concepts of "algorithm" and "computation" with the Turing machine, which can be considered a model of a general purpose computer.[1][2][3] Turing is widely considered to be the father of computer science and artificial intelligence.[4] During World War II, Turing worked for the Government Code and Cypher School (GC&CS) at Bletchley Park, Britain's codebreaking centre. For a time he was head of Hut 8, the section responsible for German naval cryptanalysis. He devised a number of techniques for breaking German ciphers, including the method of the bombe, an electromechanical machine that could find settings for the Enigma machine.