Post-Gazette 10-2-09.Pmd

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

The Comment, March 1, 1979

Bridgewater State University Virtual Commons - Bridgewater State University. The Comment, Campus Journals and Publications, 1979. The Comment, March 1, 1979. Bridgewater State College, Volume 52, Number 5. Recommended Citation: Bridgewater State College. (1979). The Comment, March 1, 1979. 52(5). Retrieved from: http://vc.bridgew.edu/comment/461. This item is available as part of Virtual Commons, the open-access institutional repository of Bridgewater State University, Bridgewater, Massachusetts. Vol. LII No. 5, Bridgewater State College, March 1, 1979. Students to Receive Minimum, by Karen Tobin. On Tuesday, February 27, President Rondileau announced his decision on the campus minimum wage question. Beginning on March 1, all students employed by Bridgewater State College will receive the federal minimum wage of $2.90 per hour. Dr. Rondileau explained the decision: "We studied the situation very carefully. We came to the best ... The Comment attempted to contact Dr. Richard Veno, the Director of the Student Union, Dr. Owen McGowan, the Head Librarian, and David Morwick, the Financial Aid Officer, to find out their reactions to Dr. Rondileau's announcement. Dr. Veno was out (on business) and therefore could not comment. Dr. McGowan, Head Librarian, said that the raise in minimum ... students are getting it. Minimum wage is low enough in these days of inflation." David Morwick, Financial Aid Officer, said that the new minimum wage should not adversely affect the College Work-Study Program. People will simply earn their money more quickly. He noted there is the possibility that some of this year's awards may be increased but this is ...

TV/Series Lost: (Re)Garder L'île

TV/Series Lost: (re)garder l’île [Lost: (re)watching the island]. Guillaume Dulong. To cite this version: Guillaume Dulong. TV/Series Lost: (re)garder l’île. TV/Series, GRIC - Groupe de recherche Identités et Cultures, 2016, 10.4000/tvseries.4371. hal-02901641. HAL Id: hal-02901641, https://hal-u-bordeaux-montaigne.archives-ouvertes.fr/hal-02901641. Submitted on 17 Jul 2020. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. TV/Series, Special Issue 1 | 2016: Lost: (re)garder l'île. The Leftovers, the Lost fever: Double frénésie en séries pour Damon Lindelof [A double series frenzy for Damon Lindelof]. Guillaume Dulong. Electronic edition URL: http://journals.openedition.org/tvseries/4371. DOI: 10.4000/tvseries.4371. ISSN: 2266-0909. Publisher: GRIC - Groupe de recherche Identités et Cultures. Electronic reference: Guillaume Dulong, "The Leftovers, the Lost fever", TV/Series [Online], Special Issue 1 | 2016, published online 30 June 2020, accessed 02 July 2020. URL: http://journals.openedition.org/tvseries/4371; DOI: https://doi.org/10.4000/tvseries.4371. This document was generated automatically on 2 July 2020. TV/Series is made available under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International license.

Daring Deceit

FINAL-1 Sun, Oct 25, 2015 11:52:55 PM. tv update: Your Weekly Guide to TV Entertainment, for the week of November 1 - 7, 2015. Daring deceit: Priyanka Chopra stars in “Quantico.” INSIDE: Sports Highlights, page 2 • TV Word Search, page 2 • Hollywood Q&A, page 3 • Family Favorites, page 4. The plot thickens as Alex Parrish (Priyanka Chopra, “Fashion,” 2008) struggles to prove she wasn’t behind a terrorist attack in New York City in a new episode of “Quantico,” airing Sunday, Nov. 1, on ABC. Flashbacks recount Parrish’s time at the FBI academy, where she trained alongside a diverse group of recruits. As the flashbacks unfold, it becomes clear that no one is what they seem, and everyone has something to hide. RICHARD’S New, Used & Vintage FURNITURE • 0% Reclining SALE • Sofas • Love Seats • Sectionals • Chairs • NO INTEREST ON LOANS • 15% off Custom Orders Only! • CASH FOR GOLD • Ends Nov 14th • Remember, TRADE INS are welcome • 527 S. Broadway, Salem, NH • www.richardsfurniture.com (On the Methuen Line above Enterprise Rent-A-Car) • 25 Water Street • 603-898-2580 • OPEN 7 DAYS A WEEK • Lawrence, MA • WWW.CASHFORGOLDINC.COM • 978-686-3903. COMCAST ADELPHIA 2 Sports Highlights Kingston CHANNEL Atkinson Countdown to Green Live NESN Bruins Face-Off Live Sunday 8:00 p.m. (25) Baseball MLB World (7) Salem Londonderry Series Live (25) College Football Pre-game Live 7:00 p.m. ESPN Football NCAA Live Windham 9:00 a.m. (25) Fox NFL Sunday Live Sandown ESPN Basketball NBA New York Knicks ESPN College Football Scoreboard NESN Hockey NHL Boston Bruins at Pelham, 9:30 a.m.

What to Watch

What to watch: SUNDAY. All times Eastern. Start times can vary based on cable/satellite provider. Confirm times on your on-screen guide. (Photos: ‘Sixteen Candles’; ‘Moonbase 8’; credits: STEVE KAGAN, A24 FILMS.) NASCAR Cup Series: Championship 4, NBC, 3 p.m., Live. The four remaining drivers eligible for the NASCAR Cup Series season championship duke it out at Phoenix Raceway, with the best finisher among them claiming the title. ... Rady), who helps her heal and find love during the holidays. NCIS: Los Angeles, CBS, 8:30 p.m., Season Premiere. In the Season 12 premiere episode “The Bear,” a Russian bomber flying over the U.S. goes missing, sending Sam (LL Cool J) and Callen (Chris O’Donnell) on a mission to track it down and secure its weapons and intel. The Simpsons, FOX, 8 p.m. In the new episode “The 7 Beer Itch,” Homer (voice of Dan Castellaneta) falls under the spell of a British femme fatale (guest voice of Olivia Colman). Bob’s Burgers, FOX, 9 p.m. Tina (voice of Dan Mintz) is put in charge of the Wagstaff School time capsule project, ... By Whatever Means Necessary: The Times of Godfather of Harlem, EPIX, 10 p.m., New Docuseries. This four-part docuseries is inspired by the music and subjects featured in Godfather of Harlem. It brings alive the dramatic true story of Harlem and its music during the 1960s ... Christmas With the Darlings, Hallmark Channel, 8 p.m. MOVIES YOU’LL LOVE: CATCH A CLASSIC. Sixteen Candles (1984, Comedy), Molly Ringwald, Anthony Michael Hall. CMT, 6:30 p.m.

How Far Is Too Far? the Line Between "Offensive" and "Indecent" Speech

Federal Communications Law Journal, Volume 49, Issue 2, Article 4, 2-1997. How Far Is Too Far? The Line Between "Offensive" and "Indecent" Speech. Milagros Rivera-Sanchez, University of Florida. Part of the Communications Law Commons and the First Amendment Commons. Recommended Citation: Rivera-Sanchez, Milagros (1997) "How Far Is Too Far? The Line Between "Offensive" and "Indecent" Speech," Federal Communications Law Journal: Vol. 49: Iss. 2, Article 4. Available at: https://www.repository.law.indiana.edu/fclj/vol49/iss2/4. How Far Is Too Far? The Line Between "Offensive" and "Indecent" Speech, Milagros Rivera-Sanchez. Contents: I. INTRODUCTION, 327. II. SCOPE AND METHOD, 329. III. INDECENCY AND THE FCC's COMPLAINT INVESTIGATION PROCESS, 332: A. Definition of Indecency, 332; B. Context, 333; C. The Complaint Investigation Process, 336. IV. DISMISSED COMPLAINTS, 337: A. Expletives or Vulgar Words, 337; B. Descriptions of Sexual or Excretory Activities or Organs ...

FM16 Radio.Pdf

63 Pleasant Hill Road • Scarborough • P: 885.1499 • F: 885.9410 • [email protected]. “Clean Up Cancer.” For well over a year now many of us have seen the pink van of Eastern Carpet and Upholstery Cleaning driving around York and Cumberland counties, and we may have asked what it's all about. To clear up this question I spent some time with Diane Gadbois at her home and asked her some very personal questions that I am sure were difficult to answer. You see, George and Diane Gadbois are private people who give more than their share back to the community, and the last thing they want is to be noticed for their generosity. They started Eastern Carpet and Upholstery Cleaning 40 years ago on a wish and a prayer and now have the largest family-run carpet cleaning and water damage restoration company in the area. Back to the pink van! If you notice on the rear side panels are ... yearly donation is significant and the proceeds all go to the cure for women’s cancer. Diane was introduced to breast cancer early in life when her mother had a radical mastectomy. She remembers her mother’s doctor telling her sister and her, “One of you will have cancer.” Not a pleasant thought at the time, but it stuck with Diane and saved her life. Twice, after the normal tests and screenings for cancer, Diane received a clean bill of health and, relatively soon after, while doing a self-examination, found a lump. Not once but twice! Fortunately they were found in time, and Diane is doing fine, but she wants to get the message out that as important as it is to get regular screenings, it is equally important to be your own advocate and make doubly sure with a self-examination.

arXiv:2004.10645v2 [cs.CL] 5 Oct 2020: The Evidence in Wikipedia

AMBIGQA: Answering Ambiguous Open-domain Questions. Sewon Min (1,2), Julian Michael (1), Hannaneh Hajishirzi (1,3), Luke Zettlemoyer (1,2). 1 University of Washington, 2 Facebook AI Research, 3 Allen Institute for Artificial Intelligence. Abstract: Ambiguity is inherent to open-domain question answering; especially when exploring new topics, it can be difficult to ask questions that have a single, unambiguous answer. In this paper, we introduce AMBIGQA, a new open-domain question answering task which involves finding every plausible answer, and then rewriting the question for each one to resolve the ambiguity. To study this task, we construct AMBIGNQ, a dataset covering 14,042 questions from NQ-OPEN, an existing open-domain QA benchmark. We find that over half of the questions in NQ-OPEN are ambiguous, with diverse sources of ambiguity such as event and entity references. We also present strong baseline models for AMBIGQA which we show benefit from weakly supervised learning that incorporates NQ-OPEN, strongly suggesting our new task and data will support significant future research effort. Our data and baselines are available at https://nlp.cs.washington.edu/ambigqa. Figure 1: An AMBIGNQ example where the prompt question (top) appears to have a single clear answer, but is actually ambiguous upon reading Wikipedia. AMBIGQA requires producing the full set of acceptable answers while differentiating them from each other using disambiguated rewrites of the question. 1 Introduction: In the open-domain setting, it can be difficult to formulate clear and unambiguous questions. ... As shown in Figure 1, ambiguity is a function of both the question and the evidence provided by a large ...

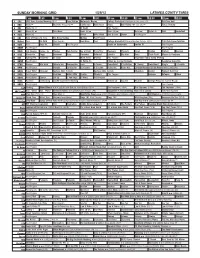

Sunday Morning Grid 1/29/12, latimes.com/TV Times

SUNDAY MORNING GRID 1/29/12, LATIMES.COM/TV TIMES. Time slots: 7 am, 7:30, 8 am, 8:30, 9 am, 9:30, 10 am, 10:30, 11 am, 11:30, 12 pm, 12:30.

2 CBS: CBS News Sunday Morning (N) Face/Nation Motorcycle Racing College Basketball Michigan at Ohio State. (N) PGA Tour Golf

4 NBC: News Meet the Press (N) Conference Wall Street House Bull Riding PBR Tour. (N) Figure Skating

5 CW: News (N) In Touch Paid Program

7 ABC: News (N) This Week News (N) News (N) News Vista L.A. NBA Basketball

9 KCAL: News (N) Prince Mike Webb Joel Osteen Shook Paid Program

11 FOX: Hour of Power (N) (TVG) Fox News Sunday Midday Paid Program

13 MyNet: Paid Tm Wrld Paid Program Best Buys Paid College Basketball Miami at Boston College. (TVG) 700 Club Super Telethon

18 KSCI: Paid Hope Hr. Church Paid Program Hecho en Guatemala Iranian TV Paid Program

22 KWHY: Paid Program Paid Program

24 KVCR: Sid Science Curiosity Thomas Bob Builder Joy of Paint Paint This Dewberry Wyland’s Sara’s Kitchen Kitchen Mexican

28 KCET: Hands On Raggs Busytown Peep Pancakes Pufnstuf Lidsville Hey Kids Taste Chefs Field Moyers & Company

30 ION: Turning Pnt. Discovery In Touch Mark Jeske Beyond Paid Program Inspiration Today Camp Meeting

34 KMEX: Paid Program Al Punto (N) Fútbol de la Liga Mexicana República Deportiva

40 KTBN: Rhema Win Walk Miracle-You Redemption Love In Touch PowerPoint It Is Written B. Conley From Heart King Is J.

1982-07-17 Kerrville Folk Festival and JJW Birthday Bash Page 48

A Billboard Publication. Billboard: The International Newsweekly of Music & Home Entertainment, July 17, 1982 (U.S.). AFTER 'GOOD' JUNE: Holiday Sales Give Retailers A Boost, by IRV LICHTMAN. NEW YORK - Retailers were generally encouraged by July 4 weekend business, many declaring it maintained an upward sales trend evident over the past month or so. ... while prerecorded cassettes continued to gain a greater share of sales, according to dealers surveyed. Business was up a modest 2% or ... AC Formats Hurting On AM Dial: Latest Arbitron Ratings Underscore FM Penetration, by DOUGLAS E. HALL. NEW YORK - Adult contemporary formats are becoming as vulnerable on the AM dial as were top 40 stations on the same waveband a few years ago, judging by the latest spring Arbitrons for Chicago, De- ... Billboard in the analysis of Arbitron data, characterizes KXOK as "being battered" by its FM competitors ... AC. He notes that with each passing book, the age point at which listenership breaks from AM to FM is rising. As this once hit stations with teen listeners, it's now hurting those ... AM cannot get off the ground, stuck with a 1.1, down from 1.6 in the winter and 1.3 a year ago. ABC has successfully propped up its adult contemporary WLS-AM by giving the FM like call letters and simulcasting with the maximum the FCC allows. The result: WLS-AM is up to 4.8 from ...

Annual Report 2014–2015 Contents

ANNUAL REPORT 2014–2015. CONTENTS: MESSAGE FROM THE CEO & PRESIDENT, 2. HIGHLIGHTS 2014–2015: 2014 Highlights, 6; 2015 Highlights, 8. OUR WORK: News Media, 10; Entertainment, 14; 25th Annual GLAAD Media Awards, 17; 26th Annual GLAAD Media Awards, 21; Transgender Media, 25; Global Voices, 29; Southern Stories, 32; Spanish-Language & Latino Media, 35; GLAAD National Youth, 38. FINANCIAL STATEMENTS 2014: Independent Auditor’s Report, 42; Financial Statement, 43. INVESTORS: Million Dollar Lifetime Club, 46; Foundations, 46; Corporate Partners, 47; Legacy Circle, 48; Shareholders Circle, 49. DIRECTORY: GLAAD Staff, 54; Board of Directors, 55; Leadership Councils, 55. MESSAGE FROM THE CEO & PRESIDENT: My first year as GLAAD’s CEO & President was an unforgettable one as it was marked by significant accomplishments for the LGBT movement. Marriage equality is now the law of the land, the Boy Scouts ended its discriminatory ban based on sexual orientation, and an LGBT group marched in New York City’s St. Patrick’s Day Parade for the very first time. And as TIME noted, our nation has reached a “transgender tipping point.” Over 20 million people watched Caitlyn Jenner come out, and ABC looked to GLAAD as a valued resource for that game-changing interview. But even with these significant advancements, at GLAAD, we still see a dangerous gap between historic policy advancements and the hearts and minds of Americans; in other words, a gap between equality and acceptance. To better understand this disparity, GLAAD commissioned a Harris Poll to measure how Americans really feel about LGBT people. The results, released in our recent Accelerating Acceptance report, prove that beneath legislative progress lies a dangerous layer of discomfort and discrimination.

Around $ 2 Medium 2-Topping Pizzas5 8” Individual $1-Topping99 Pizza 5And 16Each Oz

NEED A TRIM? AJW Landscaping, 910-271-3777. Mowing – Edging – Pruning – Mulching. Licensed – Insured – FREE Estimates. September 8 - 14, 2018. ‘Kidding’: Jim Carrey stars in “Kidding.” Carry Out. MANAGER’S SPECIAL, WEEKDAY SPECIAL: around $ 2 MEDIUM 2-TOPPING Pizzas5 8” Individual $1-Topping99 Pizza 5and 16EACH oz. Beverage (Additional toppings $1.40 each). Monday thru Friday from 11am - 4pm. 1352 E Broad Ave., Rockingham, NC 28379, (910) 997-5696; 1227 S Main St., Laurinburg, NC 28352, (910) 276-6565. *Not valid with any other offers. Joy Jacobs, Store Manager, 234 E. Church Street, Laurinburg, NC, 910-277-8588, www.kimbrells.com. Laurinburg Exchange, Saturday, September 8, 2018, page 2. Funny, not funny: Jim Carrey’s ‘Kidding’ mixes comedy and drama. By Kyla Brewer, TV Media. First, there was Howdy Doody. Then came Captain Kangaroo, the beloved Mr. Rogers and so on. Cheerful, wise and kind, children’s entertainers such as these have inspired generations of kids. A new ... ular television series role. At the time of the announcement, Showtime executive David Nevins had high praise for the star. “No one inhabits a character like Jim Carrey, and this role, which is like watching Humpty Dumpty after the fall, is going to leave television audiences wondering how they ... to prepare the show to go on without him. At the same time, Deidre grapples with her own challenging personal and professional issues. On the homefront, Jeff’s wife, Jill, has hit a rebellious streak. A show about a children’s entertainer is well timed, considering the current wave of Mr. ...

'AVENGING ANGEL' CAST BIOS KEVIN SORBO (Preacher) – for Seven Years, Kevin Sorbo Played the Hulking, Heroic Lead in USA Ne

‘AVENGING ANGEL’ CAST BIOS. KEVIN SORBO (Preacher) – For seven years, Kevin Sorbo played the hulking, heroic lead in USA Network’s “Hercules: The Legendary Journeys,” a role that made him a star. But the self-described jock from Minnetonka, Minnesota (the home of Tonka Toys), the fourth of five kids, got the acting bug at age 11 after seeing something quite different: the musical “Oklahoma” and Shakespeare’s “The Merchant of Venice” at Minneapolis’s famed Guthrie Theater. Though he made his first commercial, for Target, while attending the University of Minnesota, Sorbo graduated from Moorhead State University in Moorhead, Minnesota, with a dual degree in advertising and marketing. In 1982, after college, Kevin moved to Texas, where he began studying acting in earnest. A model friend there convinced him to travel to Europe, where he stayed for three years, building a tremendous commercial reel with spots shot in cities from Milan and Munich to Paris and London. Within days of his return to the States in 1985, he shipped off to Australia for new commercial assignments, returning to L.A. six months later. Sorbo remained in demand through the early '90s for both television and commercial work; he estimates appearing in 57 spots in the three years before being cast as Hercules. After appearing in such series as “Murder, She Wrote,” “Cheers” and “The Commish,” and after seven callbacks with producer Sam Raimi, Kevin was given the role of Hercules. The character first appeared in a series of two-hour movies as part of USA Network’s “The Action Pack,” alongside William Shatner’s “TekWar” and others, with Hercules the only one of the group to survive and land its own series.