Machine Learning for Streaming Data: State of the Art, Challenges, and Opportunities

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Data Mining – Intro

Data Warehouse & Data Mining, UNIT 3
Syllabus
• UNIT 3
• Classification: introduction, decision tree, tree induction algorithm (split algorithm based on information theory, split algorithm based on Gini index); naïve Bayes method; estimating predictive accuracy of classification methods; classification software, software for association rule mining; case study; KDD Insurance Risk Assessment
What is Data Mining?
• Data mining is:
(1) The efficient discovery of previously unknown, valid, potentially useful, understandable patterns in large datasets
(2) The analysis step of the "knowledge discovery in databases" (KDD) process
(3) The analysis of (often large) observational data sets to find unsuspected relationships and to summarize the data in novel ways that are both understandable and useful to the data owner
Knowledge Discovery
Examples of Large Datasets
• Government: IRS, NGA, …
• Large corporations
• WALMART: 20M transactions per day
• MOBIL: 100 TB geological databases
• AT&T: 300M calls per day
• Credit card companies
• Scientific
• NASA, EOS project: 50 GB per hour
• Environmental datasets
KDD
The Knowledge Discovery in Databases (KDD) process is commonly defined with the stages: (1) Selection (2) Pre-processing (3) Transformation (4) Data Mining (5) Interpretation/Evaluation
Data Mining Methods
1. Decision Tree Classifiers: used for modeling and classification
2. Association Rules: used to find associations between sets of attributes
3. Sequential Patterns: used to find temporal associations in time series
4. Hierarchical -
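The syllabus above mentions a split algorithm based on the Gini index. As a minimal sketch, assuming the usual definition of Gini impurity (one minus the sum of squared class proportions) and a binary split scored by the size-weighted impurity of its two sides, the computation might look as follows. The function names `gini_impurity` and `split_gini` are illustrative and do not come from the course material.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a collection of class labels: 1 - sum(p_k ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def split_gini(left, right):
    """Size-weighted Gini impurity of a binary split (lower is better)."""
    n = len(left) + len(right)
    if n == 0:
        return 0.0
    return (len(left) / n) * gini_impurity(left) + (len(right) / n) * gini_impurity(right)


if __name__ == "__main__":
    # An evenly mixed node, and a split that separates the classes perfectly.
    print(gini_impurity(["yes", "yes", "no", "no"]))
    print(split_gini(["yes", "yes"], ["no", "no"]))
```

A tree induction algorithm of this kind would typically evaluate `split_gini` for each candidate split and keep the one with the lowest value.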

Data Science (ML-DL-Ai)

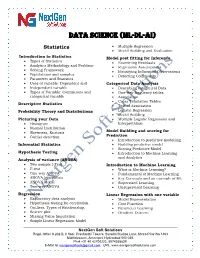

Data science (ML-DL-ai)
Statistics: Introduction to Statistics; Types of Statistics; Analytics Methodology and Problem-Solving Framework; Populations and samples; Parameter and Statistics; Uses of variable: Dependent and Independent variable; Types of Variable: Continuous and categorical variable; Descriptive Statistics; Probability Theory and Distributions; Picturing your Data; Histogram; Normal Distribution; Skewness, Kurtosis; Outlier detection; Inferential Statistics; Hypothesis Testing; Analysis of variance (ANOVA); Two sample t-Test; F-test; One-way ANOVA; ANOVA hypothesis; ANOVA Model; Two-way ANOVA; Regression; Exploratory data analysis; Hypothesis testing for correlation; Outliers, Types of Relationship, -
Multiple Regression Model Building and Evaluation: Model post fitting for Inference; Examining Residuals; Regression Assumptions; Identifying Influential Observations; Detecting Collinearity; Categorical Data Analysis; Describing categorical Data; One-way frequency tables; Association; Cross Tabulation Tables; Test of Association; Logistic Regression Model Building; Multiple Logistic Regression and Interpretation; Model Building and scoring for Prediction; Introduction to predictive modelling; Building predictive model; Scoring Predictive Model; Introduction to Machine Learning and Analytics; Introduction to Machine Learning; What is Machine Learning?; Fundamental of Machine Learning; Key Concepts and an example of ML; Supervised Learning; Unsupervised Learning; Linear Regression with one variable; Model Representation; Cost Function

Machine Learning V1.1

An Introduction to Machine Learning v1.1
E. J. Sagra
Agenda
● Why is Machine Learning in the News again?
● Artificial Intelligence vs Machine Learning vs Deep Learning
● Artificial Intelligence
● Machine Learning & Data Science
● Machine Learning
● Data
● Machine Learning - By The Steps
● Tasks that Machine Learning solves
○ Classification
○ Cluster Analysis
○ Regression
○ Ranking
○ Generation
Agenda (cont...)
● Model Training
○ Supervised Learning
○ Unsupervised Learning
○ Reinforcement Learning
● Reinforcement Learning - Going Deeper
○ Simple Example
○ The Bellman Equation
○ Deterministic vs. Non-Deterministic Search
○ Markov Decision Process (MDP)
○ Living Penalty
● Machine Learning - Decision Trees
● Machine Learning - Augmented Random Search (ARS)
Why is Machine Learning In The News Again?
Processing capabilities
● GPUs etc. have reached the level where Machine Learning / Deep Learning is practical
● Cloud computing gives even individuals the capability to create / train complex models on vast data sets
Memory (Hard Drive (now SSD) as well as RAM)
● Speed / capacity increasing
● Cost decreasing
Data
● Volume of Data
● Access to vast public data sets
General
● Tools / Languages / Automation
● Need for Data Science no longer limited to the tech giants
● Education is behind in creating Data Scientists
● Organizing data is hard. Organizations challenged
● High demand due to lack of qualified talent
Artificial Intelligence vs Machine Learning vs Deep Learning
Artificial Intelligence is the all-encompassing concept that initially erupted, followed by Machine Learning, which thrived later. Finally, Deep Learning is escalating the advances of Artificial Intelligence to another level.
Artificial Intelligence
Artificial intelligence (AI) is perhaps the most vaguely understood field of data science. The main idea behind building AI is to use pattern recognition and machine learning to build an agent able to think and reason as humans do (or approach this ability). -

Dynamic Feature Scaling for Online Learning of Binary Classifiers

Dynamic Feature Scaling for Online Learning of Binary Classifiers
Danushka Bollegala, University of Liverpool, Liverpool, United Kingdom
May 30, 2017
Abstract
Scaling feature values is an important step in numerous machine learning tasks. Different features can have different value ranges, and some form of feature scaling is often required in order to learn an accurate classifier. However, feature scaling is conducted as a preprocessing task prior to learning. This is problematic in an online setting for two reasons. First, it might not be possible to accurately determine the value range of a feature at the initial stages of learning, when we have observed only a handful of training instances. Second, the distribution of data can change over time, which renders obsolete any feature scaling that we perform in a pre-processing step. We propose a simple but effective method to dynamically scale features at train time, thereby quickly adapting to any changes in the data stream. We compare the proposed dynamic feature scaling method against more complex methods for estimating scaling parameters using several benchmark datasets for classification. Our proposed feature scaling method consistently outperforms more complex methods on all of the benchmark datasets and improves the classification accuracy of a state-of-the-art online classification algorithm.
1 Introduction
Machine learning algorithms require train and test instances to be represented using a set of features. For example, in supervised document classification [9], a document is often represented as a vector of its words, and the value of a feature is set to the number of times the word corresponding to the feature occurs in that document. -
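To make the online-scaling problem described in this abstract concrete, the sketch below standardizes a single feature using statistics that are updated one instance at a time. This is not the method proposed in the paper, whose details are not given in the excerpt; it is a generic running mean and variance estimate (Welford's algorithm), and the class name `RunningScaler` is hypothetical.

```python
import math


class RunningScaler:
    """Standardizes one feature with statistics updated per instance.

    Generic illustration only (Welford's algorithm), not the paper's method.
    """

    def __init__(self):
        self.n = 0       # number of values seen so far
        self.mean = 0.0  # running mean
        self.m2 = 0.0    # running sum of squared deviations from the mean

    def update(self, x):
        """Fold one new observation into the running statistics."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def scale(self, x):
        """Return (x - mean) / std using the population variance seen so far."""
        if self.n < 2:
            return 0.0
        std = math.sqrt(self.m2 / self.n)
        return (x - self.mean) / std if std > 0 else 0.0


if __name__ == "__main__":
    scaler = RunningScaler()
    for value in [2, 4, 4, 4, 5, 5, 7, 9]:
        scaler.update(value)
    print(scaler.mean, scaler.scale(9))
```

Because the estimates never forget old data, a scaler like this adapts slowly when the distribution shifts, which is the second difficulty the abstract raises.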

Capacity and Trainability in Recurrent Neural Networks

Published as a conference paper at ICLR 2017 CAPACITY AND TRAINABILITY IN RECURRENT NEURAL NETWORKS Jasmine Collins,∗ Jascha Sohl-Dickstein & David Sussillo Google Brain Google Inc. Mountain View, CA 94043, USA {jlcollins, jaschasd, sussillo}@google.com ABSTRACT Two potential bottlenecks on the expressiveness of recurrent neural networks (RNNs) are their ability to store information about the task in their parameters, and to store information about the input history in their units. We show experimentally that all common RNN architectures achieve nearly the same per-task and per-unit capacity bounds with careful training, for a variety of tasks and stacking depths. They can store an amount of task information which is linear in the number of parameters, and is approximately 5 bits per parameter. They can additionally store approximately one real number from their input history per hidden unit. We further find that for several tasks it is the per-task parameter capacity bound that determines performance. These results suggest that many previous results comparing RNN architectures are driven primarily by differences in training effectiveness, rather than differences in capacity. Supporting this observation, we compare training difficulty for several architectures, and show that vanilla RNNs are far more difficult to train, yet have slightly higher capacity. Finally, we propose two novel RNN architectures, one of which is easier to train than the LSTM or GRU for deeply stacked architectures. 
1 INTRODUCTION Research and application of recurrent neural networks (RNNs) have seen explosive growth over the last few years, (Martens & Sutskever, 2011; Graves et al., 2009), and RNNs have become the central component for some very successful model classes and application domains in deep learning (speech recognition (Amodei et al., 2015), seq2seq (Sutskever et al., 2014), neural machine translation (Bahdanau et al., 2014), the DRAW model (Gregor et al., 2015), educational applications (Piech et al., 2015), and scientific discovery (Mante et al., 2013)). -

Naiad: a Timely Dataflow System

Naiad: A Timely Dataflow System
Derek G. Murray, Frank McSherry, Rebecca Isaacs, Michael Isard, Paul Barham, Martín Abadi
Microsoft Research Silicon Valley
{derekmur,mcsherry,risaacs,misard,pbar,abadi}@microsoft.com
Abstract
Naiad is a distributed system for executing data parallel, cyclic dataflow programs. It offers the high throughput of batch processors, the low latency of stream processors, and the ability to perform iterative and incremental computations. Although existing systems offer some of these features, applications that require all three have relied on multiple platforms, at the expense of efficiency, maintainability, and simplicity. Naiad resolves the complexities of combining these features in one framework. A new computational model, timely dataflow, underlies Naiad and captures opportunities for parallelism across a wide class of algorithms. This model enriches dataflow computation with timestamps that represent logical points in the computation and provide the basis for an efficient, lightweight coordination mechanism. We show that many powerful high-level programming models can be built on Naiad’s low-level primitives, enabling such diverse tasks as streaming data analysis, iterative machine learning, and interactive graph mining.
Figure 1: A Naiad application that supports real-time queries on continually updated data. The dashed rectangle represents iterative processing that incrementally updates as new data arrive. (Figure labels: User queries are received; Low-latency query responses are delivered; Queries are joined with processed data; Complex processing incrementally re-executes to reflect changed data; Updates to data arrive.)
requirements: the application performs iterative processing on a real-time data stream, and supports interactive queries on a fresh, consistent view of the results. However, no existing system satisfies all three require- -

Incremental Decision Trees

ECD Master Thesis Report
INCREMENTAL DECISION TREES
Bassem Khouzam, 01/09/2009
Advisor: Dr. Vincent LEMAIRE
Location: Orange Labs
Abstract: In this manuscript we propose standardized definitions for some terms that are used ambiguously in the machine learning literature. We then discuss the generalities of incremental learning and study its major characteristics. Next we turn to incremental decision trees for binary classification problems, present the state of the art, and study experimentally the potential of using a random sampling method to build an incremental tree algorithm from an offline one.
Résumé: In this manuscript, we propose standardized definitions for some notions that are used ambiguously in the machine learning literature. We then discuss the generalities of incremental learning and study its major characteristics. Incremental decision trees for binary classification problems are addressed next; we present the state of the art and study empirically the potential of using random sampling to build an incremental decision tree algorithm based on an offline algorithm.
"To Liana and Hadi ..."
Acknowledgments
I would like to thank all the people who helped and inspired me during my internship. I especially want to express my gratitude to my supervisor, Dr. Vincent LEMAIRE, for his inspiration and guidance during my internship at Orange Labs. He shared with me a lot of his expertise and research insight, and his geniality materialized in precious advice that always led me the right way. I am particularly grateful for his special courtesy that is second to none. -

Training and Testing of a Single-Layer LSTM Network for Near-Future Solar Forecasting

Applied Sciences, Conference Report
Training and Testing of a Single-Layer LSTM Network for Near-Future Solar Forecasting
Naylani Halpern-Wight 1,2,*,†, Maria Konstantinou 1, Alexandros G. Charalambides 1 and Angèle Reinders 2,3
1 Chemical Engineering Department, Cyprus University of Technology, Lemesos 3036, Cyprus; [email protected] (M.K.); [email protected] (A.G.C.)
2 Energy Technology Group, Department of Mechanical Engineering, Eindhoven University of Technology, 5612 AE Eindhoven, The Netherlands; [email protected]
3 Department of Design, Production and Management, Faculty of Engineering Technology, University of Twente, 7522 NB Enschede, The Netherlands
* Correspondence: [email protected] or [email protected]
† Current address: Archiepiskopou Kyprianou 30, Limassol 3036, Cyprus.
Received: 30 June 2020; Accepted: 31 July 2020; Published: 25 August 2020
Abstract: Increasing integration of renewable energy sources, like solar photovoltaic (PV), necessitates the development of power forecasting tools to predict power fluctuations caused by weather. With trustworthy and accurate solar power forecasting models, grid operators could easily determine when other dispatchable sources of backup power may be needed to account for fluctuations in PV power plants. Additionally, PV customers and designers would feel secure knowing how much energy to expect from their PV systems on an hourly, daily, monthly, or yearly basis. The PROGNOSIS project, based at the Cyprus University of Technology, is developing a tool for intra-hour solar irradiance forecasting. This article presents the design, training, and testing of a single-layer long short-term memory (LSTM) artificial neural network for intra-hour power forecasting of a single PV system in Cyprus. -

Anytime Algorithms for Stream Data Mining

Anytime Algorithms for Stream Data Mining
Dissertation approved by the Faculty of Mathematics, Computer Science and Natural Sciences of RWTH Aachen University for the academic degree of Doctor of Natural Sciences, submitted by Diplom-Informatiker Philipp Kranen from Willich, Germany.
Reviewers: University Professor Dr. rer. nat. Thomas Seidl; Visiting Professor Michael E. Houle, PhD
Date of the oral examination: 14.09.2011
This dissertation is available online on the website of the university library.
Contents
Abstract / Zusammenfassung
I Introduction
1 The Need for Anytime Algorithms
1.1 Thesis structure
2 Knowledge Discovery from Data
2.1 The KDD process and data mining tasks
2.2 Classification
2.3 Clustering
3 Stream Data Mining
3.1 General Tools and Techniques
3.2 Stream Classification
3.3 Stream Clustering
II Anytime Stream Classification
4 The Bayes Tree
4.1 Introduction and Preliminaries
4.2 Indexing density models
4.3 Experiments
4.4 Conclusion
5 The MC-Tree
5.1 Combining Multiple Classes
5.2 Experiments
5.3 Conclusion
6 Bulk Loading the Bayes Tree
6.1 Bulk loading mixture densities
6.2 Experiments -

ML Cheatsheet Documentation

ML Cheatsheet Documentation
Team, Sep 02, 2021
Basics
1 Linear Regression; 2 Gradient Descent; 3 Logistic Regression; 4 Glossary; 5 Calculus; 6 Linear Algebra; 7 Probability; 8 Statistics; 9 Notation; 10 Concepts; 11 Forwardpropagation; 12 Backpropagation; 13 Activation Functions; 14 Layers; 15 Loss Functions; 16 Optimizers; 17 Regularization; 18 Architectures; 19 Classification Algorithms; 20 Clustering Algorithms; 21 Regression Algorithms; 22 Reinforcement Learning; 23 Datasets; 24 Libraries; 25 Papers; 26 Other Content; 27 Contribute
Brief visual explanations of machine learning concepts with diagrams, code examples and links to resources for learning more.
Warning: This document is under early stage development. If you find errors, please raise an issue or contribute a better definition!
CHAPTER 1 Linear Regression
• Introduction
• Simple regression
– Making predictions
– Cost function
– Gradient descent
– Training
– Model evaluation
– Summary
• Multivariable regression
– Growing complexity
– Normalization
– Making predictions
– Initialize weights
– Cost function
– Gradient descent
– Simplifying with matrices
– Bias term
– Model evaluation
1.1 Introduction
Linear Regression is a supervised machine learning algorithm where the predicted output is continuous and has a constant slope. It’s used to predict values within a continuous range (e.g. sales, price) rather than trying to classify them into categories (e.g. cat, dog). There are two main types:
Simple regression
Simple linear regression uses traditional slope-intercept form, where m and b are the variables our algorithm will try to “learn” to produce the most accurate predictions. -
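The slope-intercept form described in this excerpt can be sketched in a few lines. The example below assumes a mean squared error cost and plain batch gradient descent, matching the chapter outline's "Cost function" and "Gradient descent" entries; the function names, learning rate and epoch count are illustrative choices rather than the cheatsheet's own code.

```python
def predict(x, m, b):
    """Slope-intercept prediction: y = m * x + b."""
    return m * x + b


def mse(xs, ys, m, b):
    """Mean squared error of the line (m, b) on the data."""
    n = len(xs)
    return sum((y - predict(x, m, b)) ** 2 for x, y in zip(xs, ys)) / n


def fit(xs, ys, lr=0.05, epochs=5000):
    """Learn m and b by batch gradient descent on the MSE cost."""
    m, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Partial derivatives of the MSE with respect to m and b.
        grad_m = sum(-2 * x * (y - predict(x, m, b)) for x, y in zip(xs, ys)) / n
        grad_b = sum(-2 * (y - predict(x, m, b)) for x, y in zip(xs, ys)) / n
        m -= lr * grad_m
        b -= lr * grad_b
    return m, b


if __name__ == "__main__":
    xs = [0, 1, 2, 3, 4]
    ys = [1, 3, 5, 7, 9]  # generated from y = 2x + 1
    m, b = fit(xs, ys)
    print(round(m, 4), round(b, 4), mse(xs, ys, m, b))
```

The learning rate has to be small enough for the updates to converge; on unscaled features with large values it would usually need to be lowered.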

Improving IoT Data Stream Analytics Using Summarization Techniques Maroua Bahri

Improving IoT data stream analytics using summarization techniques
Maroua Bahri
To cite this version: Maroua Bahri. Improving IoT data stream analytics using summarization techniques. Machine Learning [cs.LG]. Institut Polytechnique de Paris, 2020. English. NNT : 2020IPPAT017. tel-02865982
HAL Id: tel-02865982
https://tel.archives-ouvertes.fr/tel-02865982
Submitted on 12 Jun 2020
HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.
Improving IoT Data Stream Analytics Using Summarization Techniques
Doctoral thesis of the Institut Polytechnique de Paris, prepared at Télécom Paris
Doctoral school no. 626, Dénomination (Sigle)
Doctoral specialty: Computer Science
NNT : 2020IPPAT017
Thesis presented and defended in Palaiseau on 5 June 2020 by MAROUA BAHRI
Composition of the jury:
Albert Bifet, Professor, Télécom Paris: thesis co-supervisor
Silviu Maniu, Associate Professor, Université Paris-Sud: thesis co-supervisor
João Gama, Professor, University of Porto: president
Cédric Gouy-Pailler, Engineer-Researcher, CEA-LIST: examiner
Ons Jelassi -

1.2 Le Zhang, Microsoft

Objective
• “Taking recommendation technology to the masses”
• Helping researchers and developers to quickly select, prototype, demonstrate, and productionize a recommender system
• Accelerating enterprise-grade development and deployment of a recommender system into production
• Key takeaways of the talk
• Systematic overview of the recommendation technology from a pragmatic perspective
• Best practices (with example code) in developing recommender systems
• State-of-the-art academic research in recommendation algorithms
Outline
• Recommendation system in modern business (10min)
• Recommendation algorithms and implementations (20min)
• End to end example of building a scalable recommender (10min)
• Q & A (5min)
Recommendation system in modern business
“35% of what consumers purchase on Amazon and 75% of what they watch on Netflix come from recommendations algorithms” (McKinsey & Co)
Challenges
• Limited resource: There is limited reference and guidance to build a recommender system at scale to support enterprise-grade scenarios
• Fragmented solutions: Off-the-shelf packages/tools/modules are very fragmented, not scalable, and not well compatible with each other
• Fast-growing area: New algorithms sprout every day; not many people have the expertise to implement and deploy a recommender using state-of-the-art algorithms
Microsoft/Recommenders
• Microsoft/Recommenders
• Collaborative development efforts of Microsoft Cloud & AI data scientists, Microsoft Research researchers, academia researchers, etc.
• GitHub URL: https://github.com/Microsoft/Recommenders
• Contents
• Utilities: modular functions for model creation, data manipulation, evaluation, etc.
• Algorithms: SVD, SAR, ALS, NCF, Wide&Deep, xDeepFM, DKN, etc.
• Notebooks: HOW-TO examples for end to end recommender building.
• Highlights
• 3700+ stars on GitHub
• Featured in YC Hacker News, O’Reilly Data Newsletter, GitHub weekly trending list, etc.