Building Better AI Chips

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Advanced Packaging Solutions

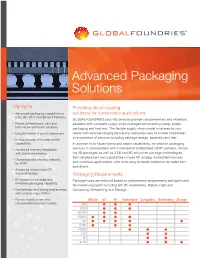

Providing silicon-scaling solutions for tomorrow’s applications

GLOBALFOUNDRIES post-fab services provide complementary and extended solutions with complete supply chain management including bump, probe, packaging and final test. The flexible supply chain model is tailored to your needs with services ranging from bump and probe only to a more comprehensive spectrum of services including package design, assembly and test.

In addition to in-house bump and probe capabilities, we provide packaging services in collaboration with a network of established OSAT partners, including 2D packages as well as 2.5D and 3D advanced package technologies.

Test development and capabilities include RF, analog, embedded memory, and mmWave applications, with a wide array of tester platforms for wafer sort operations.

Highlights
• Advanced packaging capabilities in 2.5D, 3D, WLP and Silicon Photonics
• Power, performance, cost and form-factor optimized solutions
• Industry leader in smart interposers
• In-house bump and wafer probe capabilities
• Advanced memory integration with stacked memories
• Ownership and process maturity for HVM
• Advanced silicon node CPI and qualification
• RF system-in-package and mmWave packaging capability
• Partnerships and strong relationships with leading-edge OSATs
• Flexible supply chain and collaborative business models

Packaging Requirements
Package types are selected based on performance requirements and optimized for market segments including IoT, RF, Automotive, Mobile, High-end Computing, Networking and Storage.

Market segments: Mobile, IoT, RF, Automotive, Computing, Networking, Storage
Package types: QFN, FBGA, WLCSP, FOWLP, SiP, fcCSP, FCBGA, 2.5D, 3D, Si-PH

Packaging Technologies
GF Si nodes are qualified in a wide range of package technologies including 2D wirebond designs, flip chip, WLCSP and FOWLP configurations, as well as 2.5D, 3D and Si-Photonics.

TSV Si Interposer Availability
• Full Reticle: 26 x 33 mm²
• Stitched Interposer: >1300 mm²
• 10:1 Aspect Ratio TSV: 10 µm Dia. / 100 µm Depth
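The interposer figures quoted in this excerpt can be cross-checked with simple arithmetic. The sketch below is only an illustration: the constant and function names are hypothetical, and it assumes the full-reticle figure is a 26 mm by 33 mm rectangle and that the TSV aspect ratio means depth divided by diameter.

```python
# Figures as quoted in the excerpt; all names here are illustrative only.
RETICLE_WIDTH_MM = 26
RETICLE_HEIGHT_MM = 33
STITCHED_MIN_AREA_MM2 = 1300  # quoted as ">1300 mm2", so this is a lower bound
TSV_DIAMETER_UM = 10
TSV_DEPTH_UM = 100


def rectangle_area_mm2(width_mm: float, height_mm: float) -> float:
    """Area of a rectangular interposer in square millimetres."""
    return width_mm * height_mm


def tsv_aspect_ratio(depth_um: float, diameter_um: float) -> float:
    """Aspect ratio of a through-silicon via, assumed to be depth / diameter."""
    return depth_um / diameter_um


full_reticle_area = rectangle_area_mm2(RETICLE_WIDTH_MM, RETICLE_HEIGHT_MM)
stitched_vs_full = STITCHED_MIN_AREA_MM2 / full_reticle_area
tsv_ratio = tsv_aspect_ratio(TSV_DEPTH_UM, TSV_DIAMETER_UM)

print(f"Full reticle area: {full_reticle_area} mm^2")
print(f"Stitched interposer is at least {stitched_vs_full:.2f}x a full reticle")
print(f"TSV aspect ratio: {tsv_ratio:.0f}:1")
```

Under those assumptions the full reticle comes to 858 mm², so the stitched interposer is at least about 1.5 times that area, and the 10 µm by 100 µm TSV matches the stated 10:1 ratio.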

Spring 2017 Industry Study Industry Report Electronics

The Dwight D. Eisenhower School for National Security and Resource Strategy
National Defense University
Fort McNair, Washington, DC 20319-5062

ELECTRONICS 2017

ABSTRACT: While currently assessed as mature and healthy, the global semiconductor industry is facing a strategic inflection point. This inflection will shape a future for the industry that is significantly different than the past. Although outlook for that future remains favorable, numerous challenges place that future at risk. Challenges found in Chinese competition, skilled workforce shortages, commercial semiconductor market shifts, unique DoD electronics needs, and ongoing requirements for rapid innovation threaten the stability of the market, the U.S. competitive advantage, and U.S. economic and national security. Future success in the industry hinges upon policies which address these challenges and enable U.S. companies to embrace future opportunities.

LTC Khalid Alothman, Saudi Arabian Army
CDR Terri L. Gabriel, U.S. Navy
LTC Kevin F. Hanrahan, U.S. Army
COL Jeffrey Howell, U.S. Army
Mr. Benjamin Lam, U.S. Dept. of State
Mr. Steven Mapes, Office of the Secretary of Defense
Lt Col Adrian Meyer, Air National Guard
COL Michael Samson, Philippine Army
Col James E. Smith, U.S. Air Force
Mr. Keith Smithson, Dept. of Energy
COL William Smoot, U.S. Army
Mr. Sim Walker, Dept. of the Army
Lt Col Aaron Weiner, U.S. Air Force
Ms. Denise L. Williams, Office of the Secretary of Defense

Dr. Stephen Basile, Faculty
Mr. Michael Dixon, Department of State, Faculty
Col Thomas A. Santoro, Jr., U.S. Air Force, Faculty

Industry Study Outreach and Field Studies

On Campus Presenters
BAE Systems, Inc., Arlington, VA
Bureau of East Asian and Pacific Affairs, U.S.

Heading Towards Big Data Building a Better Data Warehouse for More Data, More Speed, and More Users

Raymond Gardiner Goss [email protected]
Kousikan Veeramuthu [email protected]
Manufacturing Technology, GLOBALFOUNDRIES, Malta, NY, USA

Abstract—As a new company, GLOBALFOUNDRIES is aggressively agile and looking at ways to not just mimic existing semiconductor manufacturing data management but to leverage new technologies and advances in data management without sacrificing performance or scalability. Being a global technology company that relies on the understanding of data, it is important to centralize the visibility and control of this information, bringing it to the engineers and customers as they need it.

Currently, the factories are employing the best practices and data architectures combined with business intelligence analysis and reporting tools. However, the expected growth in data over the next several years and the need to deliver more complex data integration for analysis will easily stress the traditional tools beyond the limits of the traditional data infrastructure.

… determine the caller’s income level and specify to which agent to route the call or the switch would timeout. When switches were overwhelmed with data, they would drop packets and algorithms had to infer states based on most probable current state. Other industries, such as social media, are challenged more by unstructured data and need tools to help turn text messages and photos into useful information for search engines and marketing purposes. The challenge in the semiconductor world is with the size of the data. Speed becomes a secondary problem because so many sources are needed to be joined together in a timely manner. Large recipes, complex output from the test floor combined now with more Interface-A trace

Boa Motion to Dismiss

Case 21-10036-CSS Doc 211-4 Filed 02/15/21 Page 425 of 891

Disposition of the Stapled Securities

Gain on sale of Stapled Securities by a Non-U.S. Stapled Securityholder will not be subject to U.S. federal income taxation unless (i) the Non-U.S. Stapled Securityholder’s investment in the Stapled Securities is effectively connected with its conduct of a trade or business in the United States (and, if provided by an applicable income tax treaty, is attributable to a permanent establishment or fixed base the Non-U.S. Stapled Securityholder maintains in the United States) and a properly completed Form W-8ECI has not been provided, (ii) the Non-U.S. Stapled Securityholder is present in the United States for 183 days or more in the taxable year of the sale and other specified conditions are met, or (iii) the Non-U.S. Stapled Securityholder is subject to U.S. federal income tax pursuant to the provisions of the U.S. tax law applicable to U.S. expatriates.

If gain on the sale of Stapled Securities would be subject to U.S. federal income taxation, the Stapled Securityholder would generally recognise any gain or loss equal to the difference between the amount realised and the Stapled Securityholder’s adjusted basis in its Stapled Securities that are sold or exchanged. This gain or loss would be capital gain or loss, and would be long-term capital gain or loss if the Stapled Securityholder’s holding period in its Stapled Securities exceeds one year. In addition, a corporate Non-U.S.

RF CMOS: Integrated on a Chip FE ANALOG Modules: ANALOG

TowerJazz (TSEM) Investor and Analyst Day November 16, 2016 | New York Safe Harbor This presentation contains forward-looking statements within the meaning of the “safe harbor” provisions of the Private Securities Litigation Reform Act of 1995. These statements are based on management’s current expectations and beliefs and are subject to a number of risks, uncertainties and assumptions that could cause actual results to differ materially from those described in the forward-looking statements. All statements other than statements of historical fact are statements that could be deemed forward-looking statements. For example, statements regarding expected (i) customer demand, (ii) utilization and cross utilization of our Fabs, (iii) growth in our end markets, (iv) market and technology trends, and (v) growth in revenues, cash flow, margins and net profits are all forward-looking statements. Actual results may differ materially from those projected or implied by such forward-looking statements due to various risks and uncertainties applicable to TowerJazz’s business as described in the reports filed by Tower Semiconductor Ltd. (“Tower”) with the Securities and Exchange Commission (the “SEC”) and the Israel Securities Authority (“ISA”), including the risks identified under the heading "Risk Factors" in Tower’s most recent filings on Forms 20-F and 6-K. No assurances can be given that any of the events anticipated by the forward-looking statements will transpire or occur, or if any of them do, what impact they will have on the results of operations or financial condition of TowerJazz. TowerJazz is providing this information as of the date of this presentation and expressly disclaims any obligation to update any of the forward-looking statements or other information contained in this document as a result of new information, future events or otherwise. -

Advanced Micro Devices (AMD)

Strategic Report for Advanced Micro Devices, Inc.
Tad Stebbins, Andrew Dialynas, Rosalie Simkins
April 14, 2010

Table of Contents
• Executive Summary
• Company Overview
  + Company History
  + Business Model
• Market Overview and Trends
• Competitive Analysis
  + Internal Rivalry
  + Barriers to Entry and Exit
  + Supplier Power
  + Buyer Power
  + Substitutes and Complements
• Financial Analysis
  + Overview

14nm FinFET Technology

14LPP 14nm FinFET Technology
Enabling Connected Intelligence

GLOBALFOUNDRIES 14LPP 14nm FinFET process technology platform is ideal for high-performance, power-efficient SoCs in demanding, high-volume applications.

3D FinFET transistor technology provides best-in-class performance and power with significant cost advantages from 14nm area scaling. 14LPP technology can provide up to 55% higher device performance and 60% lower total power compared to 28nm technologies.

Highlights
• 14nm FinFET technology
  + Manufactured in state-of-the-art facilities in Saratoga County, New York
  + Volume production in Computing, Networking, Mobile and Server applications
• Ideal for high-performance, power-efficient SoC applications
  + Cloud / Data Center servers
  + CPU and GPU
  + High-end mobile processors
  + Automotive ADAS
  + Wired and wireless networking
  + IoT edge computing
• Comprehensive design ecosystem
  + Full foundation and complex IP libraries
  + PDK and reference flows supported by major EDA and IP partners
  + Robust DFM solutions
• Complete services and supply chain support
  + Regularly scheduled MPWs
  + Advanced packaging and test solutions, including 2.5/3D products

[Figure: Lg gate length shrink enables performance scaling. FET is turned on its edge.]
• Lower supply voltage
• Reduced off-state leakage
• Faster switching speed – high drive current

Target Applications and Solutions
Segments shown: Mobile Apps Processor; High Performance Compute & Networking
Figures quoted, in source order: 60% power reduction; 60% power reduction; 2x # cores; 80% higher performance, >2.2GHz; >3GHz maximum performance; 45% area reduction

PART I: People & Strategy

By Ken Luskin [email protected]
October 28, 2014

PART I: People & Strategy

The Brilliance of Steve Jobs was in building a team of geniuses

Virtually everyone in the world knows that Steve Jobs was the driving force behind the success of Apple. Unfortunately, few of them understand that without the team of technical gurus Jobs assembled, his visions would not have become reality.

Without a Microprocessor Brain, the iPhone would not exist

Inside every “smart” computing device is a microprocessor, considered the brains of the device. When Jobs was creating what is now known as the iPhone, he needed a special microprocessor, which could make it through the day without draining the battery. Jobs first approached Intel, the company that was supplying the microprocessors for the entire Mac line. Intel was not interested in supplying a relatively low cost mobile chip, because the bean counters and FAB guys had no vision. So, Jobs built a team of top mobile microprocessor engineers, licensed the ARM architecture, and created a chip that became the brains of the iPhone and iPad.

Apple buys P.A. Semiconductor in 2008 to keep Apple’s microprocessors on the cutting edge

With this acquisition Apple received a group of highly innovative engineers, including ex-AMD employee Jim Keller. From a VentureBeat article: “Keller spearheaded the development of Apple’s custom mobile processors — including the A4 in the iPhone 4, the A5 in the iPhone 4S and iPad 2, and the A5X in the new iPad — all of which have managed to keep Apple’s mobile devices on the cutting edge.

TROUBLING SIGNS IBM Head Ginni Rometty Cannot Find a Way to Stimulate Growth IBM COMPANY

TROUBLING SIGNS: IBM head Ginni Rometty cannot find a way to stimulate growth

COMPANY | IBM

LITTLE BIG BLUE

IBM: The turnover of the US technological icon has been shrinking for years. If superbrain Watson fails to bring about the turnaround, CEO Virginia Rometty will probably have to go.

For Virginia Marie Rometty (59), called Ginni, the domination of the world is only a matter of time. At the end of October, at her in-house exhibition in Las Vegas, the chief executive of IBM announced that in five years her computer technology will influence “every decision” on this planet. The task for IBM's superbrain Watson is clear – it must solve “the biggest problems of the world.” In a few years, this market, called Cognitive Computing, is expected to be worth an incredible two trillion dollars. Rometty claims that more than one billion people will be using the in-house “moonshot” technology as early as 2017. The magic word is Artificial Intelligence (AI). With such a pitch a techie from the Silicon Valley would certainly have the chance for a life-sustaining venture capital shot. But IBM is not a new company – even though Rometty likes to describe her Big Blue as a “105-year old start-up.”

JANUARY 2017 manager magazin 65
PHOTO [M]: BEN BAKER / REDUX / LAIF

The large corporation lacks the most important criterion for this type of company – dynamism.

… of HP, the other US technological legend. It was disrupted personally.

Rometty has pursued this goal using all available means. After all,

Senior Vice President, Cognitive Solutions and IBM Research IBM

Dr. John E. Kelly III Senior Vice President, Cognitive Solutions and IBM Research IBM As IBM senior vice president, Cognitive Solutions and IBM Research, Dr. John E. Kelly III is focused on the company’s investments in several of the fastest-growing and most strategic parts of the information technology market. His portfolio includes IBM Analytics, IBM Commerce, IBM Security and IBM Watson, as well as IBM Research and the company’s Intellectual Property team. He also oversees the development of units devoted to serving clients in specific industries, beginning with the 2015 launches of IBM Watson Health and IBM Watson Internet of Things. In this role, Dr. Kelly’s top priorities are to stimulate innovation in key areas of information technology and to bring those innovations into the marketplace quickly; to apply these innovations to help IBM clients succeed; and to identify and nurture new and future areas for investment and growth. He works closely with the leaders of these units to drive business development, accelerate technology transfer and invest for the future. Dr. Kelly was most recently senior vice president and director of IBM Research, only the tenth person to hold that position over the past seven decades. Under Dr. Kelly, IBM Research expanded its global footprint by adding four new labs (including IBM’s first in Africa, South America and Australia), creating a network of approximately 3,000 scientists and technical employees across 12 laboratories in 10 countries. During his tenure, IBM maintained and extended what is now 23 straight years of patent leadership. Most notably, Dr. -

2018 Corporate Responsibility Report

Trust and responsibility. Earned and practiced daily.
#GoodTechIBM

We have seen, for more than a century, that when we apply science to real-world problems, we can create a tomorrow that is better than today. More sustainable. More equitable. More secure.

In fact, we have never known a time when science and technology had more potential to benefit society than right now.

In the last 10 years alone, the world has achieved stunning advancements, from breaking the AI winter to the dawn of quantum computing. These and other advanced technologies have

… to the boardroom. They are core to every relationship: with our employees, our clients, our shareholders, and the communities in which we live and work.

In this report, you will read about the many achievements we made to further this foundation of trust and responsibility throughout 2018. For example:

• After reaching our aggressive goals to increase our use of renewable energy and reduce CO2 emissions 4 years ahead of schedule, we set
• We invested hundreds of millions of dollars in programs to help train and prepare the global workforce for this new era. These initiatives include 21st century apprenticeship programs, returnships for women reentering the workforce, veterans programs and volunteer skills-building sessions for more than 3.2 million students worldwide. And we helped scale the P-TECH™ school model, a six-year program that offers a high school diploma and an associate’s degree, along with real-world working experience and mentorship, at no cost to students.

[Photo: Ginni Rometty at P-TECH in Brooklyn, N.Y., May 2019]

Jennifer Clark, Director of Manufacturing Operations Global Foundries | with HAROLD KING

LEADER PROFILE
Jennifer Clark, Director of Manufacturing Operations, Global Foundries | WITH HAROLD KING

EMBRACING NEW IDEAS

"BY ALWAYS LOOKING TO DO THINGS BETTER, YOU CREATE A CULTURE THAT EMBRACES NEW IDEAS AND REMAINS FOCUSED."

[Photo: Jennifer Clark at Global Foundries holding a silicon wafer.]

JENNIFER CLARK really liked math and science in high school and found herself to be good at both but never thought about what that meant in terms of a career. HV Mfg sits down with Jennifer to discuss her rising career path after a degree in Chemical Engineering and about her role as Director of Manufacturing Operations for Global Foundries.

The Council of Industry Magazine and Resource Guide 11

HV MFG: Thank you for agreeing to talk with us this morning. How did you come to work in the manufacturing sector?

JC: When I was 17 years old, in high school in Northeast Ohio, in the Canton area, I really liked math and science. I was also pretty good at both but never really thought about what that meant in terms of a career. One day in my junior year some people from The Timken Company visited the school to talk about careers in

… The co-ops were great. At Timken I worked at their bearing plant and got to focus on plant safety. For co-op the second half of the year Timken was a little slow confirming that I could work there again. While I was waiting for them IBM called and offered me the co-op at their semiconductor plant in Burlington. I hesitated because it was not what I was used to and it seemed so far away. But the manager called me and laid out all the reasons why I should accept it.