Interactive Voice Response Polling in Election Campaigns: Differences with Live Interview Surveys



Recommended publications


www.GeorgeWBush.com

Post Office Box 10648 Arlington, VA 22210 Phone: 703-647-2700 Fax: 703-647-2993 www.GeorgeWBush.com October 27, 2004 VIA FACSIMILE (202-219-3923) AND CERTIFIED MAIL Federal Election Commission 999 E Street NW Washington, DC 20463 ATTN: Office of General Counsel Re: MUR3525 Dear Federal Election Commission: On behalf of President George W. Bush and Vice President Richard B. Cheney as candidates for federal office, Karl Rove, David Herndon and Bush-Cheney ’04, Inc., this letter responds to the allegations contained in the complaint filed with the Federal Election Commission (the “Commission”) by Kerry-Edwards 2004, Inc. The Kerry Campaign’s complaint alleges that Bush-Cheney 2004 and fourteen other individuals and organizations violated the Federal Election Campaign Act of 1971, as amended (2 U.S.C. § 431 et seq.) (“the Act”). Specifically, the Kerry Campaign alleges that individuals and organizations named in the complaint illegally coordinated with one another to produce and air advertising about Senator Kerry’s military service. Considering that no entity by the name of Bush-Cheney 2004 appears to exist, Bush-Cheney ’04, Inc. (“Bush Campaign”) presumes that the Kerry Campaign mistakenly filed its complaint against a non-existent entity and actually intended to file against the Bush Campaign. Assuming that the foregoing presumption is correct; the Bush Campaign, on behalf of itself and President George W. Bush, Vice President Richard B. Cheney, Karl Rove, David Herndon (the “Parties”), responds as follows: Response to Allegations Against President George W. Bush, Vice President Richard B. -

Candidates, Campaigns, and Political Tides: Electoral Success in Colorado's 4th District Megan Gwynne MacColl Claremont McKenna College

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2012 Candidates, Campaigns, and Political Tides: Electoral Success in Colorado's 4th District Megan Gwynne MacColl Claremont McKenna College Recommended Citation MacColl, Megan Gwynne, "Candidates, Campaigns, and Political Tides: Electoral Success in Colorado's 4th District" (2012). CMC Senior Theses. Paper 450. http://scholarship.claremont.edu/cmc_theses/450 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. CLAREMONT McKENNA COLLEGE CANDIDATES, CAMPAIGNS, AND POLITICAL TIDES: ELECTORAL SUCCESS IN COLORADO’S 4TH DISTRICT SUBMITTED TO PROFESSOR JON SHIELDS AND DEAN GREGORY HESS BY MEGAN GWYNNE MacCOLL FOR SENIOR THESIS SPRING/2012 APRIL 23, 2012 TABLE OF CONTENTS Introduction; Chapter One: The Congresswoman as Representative; Chapter Two: The Candidate as Political Maestro; Chapter Three: The Election as Referendum on National Politics; Conclusion; Bibliography INTRODUCTION The 2010 congressional race in Colorado’s 4th District became political theater for national consumption. The race between two attractive, respected, and qualified candidates was something of an oddity in the often dysfunctional 2010 campaign cycle. Staged on the battleground of a competitive district in an electorally relevant swing state, the race between Republican Cory Gardner and Democratic incumbent Betsy Markey was a partisan fight for political momentum. The Democratic Party made inroads in the 4th District by winning the congressional seat in 2008 for the first time since the 1970s. Rep. Markey’s win over Republican incumbent Marilyn Musgrave was supposed to signal the long-awaited arrival of progressive politics in the district, after Rep. -

Keynote Speaker

Wednesday, October 24, 2012 Boston Convention and Exhibition Center 415 Summer Street, Boston Reception 5:30 p.m. Dinner 7:00 p.m. $100 for individuals RSVP by Friday, October 12 The Convention Center is located in the Seaport District, near South Station (approximately a 10 minute walk). Public transportation is available via the Silver Line from South Station. Parking is available at the rear of the building at the South Parking Lot. Valet parking is also available. For more information, contact CHAPA at 617.742.0820. Keynote Speaker STUART ROTHENBERG Editor and Publisher of the Rothenberg Political Report Stuart Rothenberg is editor and publisher of The Rothenberg Political Report, a non-partisan political newsletter covering U.S. House, Senate and gubernatorial campaigns, Presidential politics and political developments. He is also a twice-a-week columnist for Roll Call, Capitol Hill’s premier newspaper. A frequent soundbite, Mr. Rothenberg has appeared on Meet the Press, This Week, Face the Nation, The NewsHour, Nightline and many other television programs. He is often quoted in the nation’s major media, and his op-eds have appeared in The New York Times, The Washington Post, the Wall Street Journal and other newspapers. Mr. Rothenberg served as an election night analyst for the Newshour on PBS in 2008 and 2010 and for CBS News in 2006. Prior to that, he was an on-air political analyst for CNN for over a decade, including election nights from 1992 through 2004. He has also done on-air analysis for the Voice of America. -

The Candidates

The Candidates Family Background Bush Gore Career Highlights Bush Gore Personality and Character Bush Gore Political Communication Lab., Stanford University Family Background USA Today June 15, 2000; Page 1A Not in Their Fathers' Images Bush, Gore Apply Lessons Learned From Losses By SUSAN PAGE WASHINGTON -- George W. Bush and Al Gore share a reverence for their famous fathers, one a former president who led the Gulf War, the other a three-term Southern senator who fought for civil rights and against the Vietnam War. The presidential candidates share something else: a determination to avoid missteps that brought both fathers repudiation at the polls in their final elections. The younger Bush's insistence on relying on a trio of longtime and intensely loyal aides -- despite grumbling by GOP insiders that the group is too insular -- reflects his outrage at what he saw as disloyalty during President Bush's re-election campaign in 1992. He complained that high-powered staffers were putting their own agendas first, friends and associates say. Some of those close to the younger Gore trace his willingness to go on the attack to lessons he learned from the above-the-fray stance that his father took in 1970. Then-senator Albert Gore Sr., D-Tenn., refused to dignify what he saw as scurrilous attacks on his character with a response. The approach of Father's Day on Sunday underscores the historic nature of this campaign, as two sons of accomplished politicians face one another. Their contest reveals not only the candidates' personalities and priorities but also the influences of watching their famous fathers, both in victory and in defeat. -

No Midterm Wave Developing to Date

V19, N43 Thursday, July 24, 2014 No midterm wave developing to date Obama unpopularity offset by GOP brand problems; status quo election for Indiana By BRIAN A. HOWEY INDIANAPOLIS – The 2014 election cycle conventional wisdom went something like this: With Obamacare so utterly unpopular, with President Obama’s approval numbers mired in the low 40th percentile, and the historical second mid-term quicksand for the president’s party creating a conspicuous obstacle down ballot, this had the potential to be a “wave election.” For Hoosiers expecting waves, I would recommend the Michigan City lighthouse as a cold front passes through. As far as the November ballot is concerned, this has the look and feel of a status quo election both in Indiana and across the nation. “I don’t think this is much of a wave year,” said Mike Gentry, who heads the Indiana House Republican Campaign Committee. “There is nothing driving interest at the top of the ticket. [...]thing to ramp up turnout.” In past wave elections – the 1966 and 2006 blowbacks to the Vietnam and Iraq wars, the Republican U.S. House takeovers of 1994 and 2010, and the post-Watergate Democratic gains in 1974 – wave years generally began to set up in July. The operatives closest to the ground would begin sounding the alarms or calling out the volunteers. In the 1980 Reagan Revolution, the decisive wave didn’t really take shape until late October. The area for the greatest potential change, forging a Republican majority in the U.S. Senate, is now a dubi- Continued on page 4

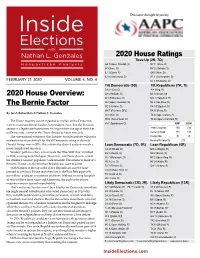

February 21, 2020 Volume 4, No

FEBRUARY 21, 2020 VOLUME 4, NO. 4

2020 House Ratings

Toss-Up (2R, 7D): GA 7 (Open; Woodall, R), IA 3 (Axne, D), IL 13 (Davis, R), IL 14 (Underwood, D), NY 11 (Rose, D), NY 22 (Brindisi, D), OK 5 (Horn, D), SC 1 (Cunningham, D), UT 4 (McAdams, D)

Tilt Democratic (9D): CA 21 (Cox, D), GA 6 (McBath, D), IA 1 (Finkenauer, D), IA 2 (Open; Loebsack, D), ME 2 (Golden, D), MN 7 (Peterson, DFL), NJ 3 (Kim, D), NM 2 (Torres Small, D), VA 7 (Spanberger, D)

Tilt Republican (7R, 1I): IA 4 (King, R), MI 3 (Amash, I)#, MN 1 (Hagedorn, R), NJ 2 (Van Drew, R), PA 1 (Fitzpatrick, R), PA 10 (Perry, R), TX 22 (Open; Olson, R), TX 24 (Open; Marchant, R)

Lean Democratic (7D, 1R): CA 48 (Rouda, D), KS 3 (Davids, D), NJ 7 (Malinowski, D)

Lean Republican (5R): MO 2 (Wagner, R), NE 2 (Bacon, R), NY 2 (Open; King, R)

Seat counts (GOP / DEM): 116th Congress 200 / 234; Currently Solid 170 / 199; Competitive 30 / 35; Needed for majority: 218

2020 House Overview: The Bernie Factor By Jacob Rubashkin & Nathan L. Gonzales The House majority wasn’t regarded as in play, unless Democrats were to nominate Bernie Sanders for president. Now that the Vermont senator is a legitimate frontrunner, his impact from the top of the ticket on Democratic control of the House should be taken seriously. The conventional wisdom is that Sanders’ socialist policies will make re-election far more difficult for the 30 Democrats sitting in districts Donald Trump won in 2016. But a district by district analysis reveals a more complicated situation. Sanders’ path to victory is to recreate the ‘Blue Wall’ that crumbled in 2016, winning back Michigan, Wisconsin, and Pennsylvania, where his strident economic populism could resonate. -

Analysis of 2010 Mid-Term Elections: a Case Study

ANALYSIS OF 2010 MID-TERM ELECTIONS: A CASE STUDY THESIS Presented to the Graduate Council of Texas State University-San Marcos in Partial Fulfillment of the Requirements for the Degree Master of ARTS by Kyle James Robinson, B.A. San Marcos, Texas May 2012 ANALYSIS OF 2010 MID-TERM ELECTIONS: A CASE STUDY Committee Members Approved: Cynthia Opheim, Chair Patricia Parent Theodore Hindson Approved: J. Michael Willoughby Dean of the Graduate College COPYRIGHT by Kyle James Robinson 2012 FAIR USE AND AUTHOR’S PERMISSION STATEMENT Fair Use This work is protected by the Copyright Laws of the United States (Public Law 94-553, section 107). Consistent with fair use as defined in the Copyright Laws, brief quotations from this material are allowed with proper acknowledgment. Use of this material for financial gain without the author’s express written permission is not allowed. Duplication Permission As the copyright holder of this work I, Kyle James Robinson, authorize duplication of this work, in whole or in part, for educational or scholarly purposes only. ACKNOWLEDGEMENTS I would like to thank, first and foremost, my fiancé Allison Hughes, who has been an amazing supporter of mine throughout this entire process. She has been a calming force when I needed it most, and my motivator when I did not want to write another word. Without her support and guidance, finishing my master’s degree would not have been possible. I also would like to thank my family, my parents Jim and Karen Robinson, my sister Lindsay Ames, brother-in-law Brent Ames, and my incredibly smart niece Megan Ames. -

Minority Statement: the New Jersey Legislative Select Committee On

Minority Statement: The New Jersey Legislative Select Committee on Investigation’s George Washington Bridge Inquiry December 8, 2014

Table of Contents
Introduction
I: The Public Committee Started Down a Political Road
  1. Democrats’ Politics Trumped Public Trust
  2. ‘The Greater the Power, the More Dangerous the Abuse’
  3. Top Members Should’ve Been Banned from Committee
  4. A Member Proactively Addressed Perceived Issues
II: A Questionable Choice for ‘Bipartisan’ Inquiry
  1. A Go-To Firm for Democrats: Jenner & Block
  2. Additional Problems with Committee Counsel
III: Co-Chairs Sabotaged the Inquiry
  1. Prejudicial Comments: A Hunt for Attention
    a. ‘Inquiry to Lynching’
    b. Co-Chairwoman: ‘The governor has to be responsible’
    c. Co-Chairs Should’ve Quit Committee, Too
    d. Co-Chairs Did What They Criticized Mastro For Doing
    e. Co-Chairs Continued to Advance Democrat Scheme
  2. Unlawfully Leaked Documents?
IV: Inquiry’s Doom: Bungled Court Case
V: Republicans Tried to Develop a Successful Inquiry
  1. Committee Should’ve Been Democratized
  2. Painfully Wasteful Meetings Could’ve Been Avoided
VI: A High Price for Failure
  1. Administration’s Transparency Opened Door for Reform
  2. Democrats Shut the Door on Reform
  3. Double-Standard for Democrat Abuses
    a. Bipartisan Calls for Booker Inquiry Went Unanswered
    b. Holland Tunnel Traffic Problems Considered OK
  4. -

Messer Seeks GOP ‘Common Agenda’

V21, N8 Thursday, Oct. 1, 2015 Messer seeks GOP ‘common agenda’ Fifth ranking Republican surveys post-Boehner House; calls for a plan By BRIAN A. HOWEY NASHVILLE, Ind. – Five days after House Speaker John Boehner resigned and six days after Pope Francis urged Congress to find common ground, U.S. Rep. Luke Messer, the fifth ranking Republican, talked with Howey Politics Indiana about these extraordinary events. The Shelbyville Republican peppered his analyses with sports analogies, beginning with the torpid Indianapolis Colts and ending with Dan Dakich’s wisdom to Darryl Thomas in the book “Season on the Brink.” It was an appropriate metaphor as Americans hold Congress and its leadership in deep derision, while a Socialist and billionaire are finding the most traction in the presidential race. Messer will stay in his current leadership position as head of the Conference Forum for Policy Development, an extraordinary rise to power for a second term Republican. He now finds himself in a unique and historic position of trying to steer his party and his institution into a more productive place. Here is our conversation, which took place mid-Tuesday afternoon, just hours before the House Republican caucus was to meet and sort out Continued on page 3

Photo caption: U.S. Rep. Luke Messer, the fifth ranking House Republican, believes the party needs a persuasive agenda. (HPI Photo by Brian A. Howey)

The thud of Jud By BRIAN A. HOWEY NASHVILLE, Ind. – Eyebrows arched about a year ago when State Rep. Jud McMillin was elevated to majority leader of his super majority House Republican caucus. -

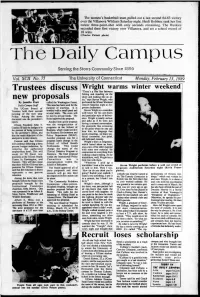

Trustees Discuss New Proposals

The Daily Campus Serving the Storrs Community Since 1896 Vol. XCII No. 75 The University of Connecticut Monday, February 13, 1989

Photo caption: The women's basketball team pulled out a last second 64-63 victory over the Villanova Wildcats Saturday night. Heidi Robbins sank her first career three-point-shot with only seconds remaining. The Huskies recorded their first victory over Villanova, and set a school record of 18 wins. (Charles Pickett photo)

Trustees discuss new proposals By Jennifer Pratt Daily Campus Staff The UConn Board of Trustees held their second meeting of the new year Friday. Among the items discussed was the president's report. UConn President John T. Casteen III said the budget is in the process of being reviewed by the governor's office, and that the main objective of the [...] called the Washington Forest. This area has been used for the purpose of teaching. It is wooded with a small pond and several trails. The costs will be met by private funds. The Board approved the proposal. Another item up for proposal was the reorganization of programs in the School of Business, which would involve the Business Environment and Policy Department (BEAP). -

Wright warms winter weekend "There's a fine line between fishing and standing on the shore and looking like an idiot," says Steven Wright, who performed the Winter Weekend concert Saturday night at Jorgensen Auditorium. Steven Wright is a comedian well known for his one-liners and particular style of deliverance. Wright is totally serious and talks as if he were just having a normal conversation. No gimmicks like screaming to the point where no one can hear him, no language that needs censoring, no heavy po-

STUART ROTHENBERG Founding Editor and Publisher, Rothenberg & Gonzales Political Report Columnist, Roll Call

Gotham Artists 550 3rd Avenue, 2nd Floor New York, NY 10016 Phone: 646-873-6601 Fax: 646-365-8890 E-Mail: [email protected] Web: www.gotham-artists.com STUART ROTHENBERG Founding Editor and Publisher, Rothenberg & Gonzales Political Report Columnist, Roll Call “I don’t think anybody reads Stuart Rothenberg’s stuff any closer than I do. When I find myself in agreement with him, I’m a lot more comfortable with my position. He’s a blend of academic credentials and living in the real world of politics.” Charlie Cook For years, Stuart Rothenberg has embodied the rare ability to report on all the political happenings in the nation with unbiased clarity and non-partisan precision. The publication he founded, the Rothenberg & Gonzales Political Report, provides honest, nonpartisan reporting and clear-eyed analysis of American elections and their potential ramifications, looking at U.S. House, Senate, and gubernatorial campaigns, presidential politics, and political developments. Exclusively represented by Leading Authorities speakers bureau, Rothenberg is one of the nation’s most popular political analysts and handicappers, and he captivates audiences with insightful, often humorous discussions about election results, the issues facing decision makers on Capitol Hill, and the nature of politics itself. His lectures are as up-to-date as today’s newspaper, and he presents his insider views on hotly debated issues and how the future of politics is being shaped by our nation’s current newsmakers. A Top Handicapper of the Political Horserace. In addition to commentary that has been featured in newspapers throughout the country, Rothenberg also writes a column in Capitol Hill’s Roll Call twice a week, and his op-eds have appeared in the Wall Street Journal, the Washington Post, the New York Times, and other newspapers. -

Fake Polls, Real Consequences: the Rise of Fake Polls and the Case for Criminal Liability

Missouri Law Review Volume 85 Issue 1 Article 7 Winter 2020 Fake Polls, Real Consequences: The Rise of Fake Polls and the Case for Criminal Liability Tyler Yeargain Follow this and additional works at: https://scholarship.law.missouri.edu/mlr Part of the Law Commons Recommended Citation Tyler Yeargain, Fake Polls, Real Consequences: The Rise of Fake Polls and the Case for Criminal Liability, 85 MO. L. REV. (2020) Available at: https://scholarship.law.missouri.edu/mlr/vol85/iss1/7 This Article is brought to you for free and open access by the Law Journals at University of Missouri School of Law Scholarship Repository. It has been accepted for inclusion in Missouri Law Review by an authorized editor of University of Missouri School of Law Scholarship Repository. For more information, please contact [email protected]. Fake Polls, Real Consequences: The Rise of Fake Polls and the Case for Criminal Liability Tyler Yeargain* ABSTRACT For better or for worse, election polls drive the vast majority of political journalism and analysis. Polls are frequently taken at face value and reported breathlessly, especially when they show surprising or unexpected results. Though most pollsters adhere to sound methodological practices, the dependence of political journalism – and campaigns, independent political organizations, and so on – on polls opens a door for the unsavory. Fake polls have started to proliferate online. Their goal is to influence online political betting markets, so that their purveyors can make a quick buck at the expense of those they’ve tricked. This Article argues that these actions – the creation and promulgation of fake polls to influence betting markets – is a classic case of either commodities fraud, or wire fraud, or both, or conspiracy to commit either.