Can GAN Originate New Electronic Dance Music Genres?--Generating Novel Rhythm Patterns Using GAN with Genre Ambiguity Loss

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Music-Week-1993-05-0

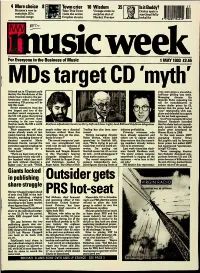

4 Morechoice 8 Town crier 10 Wisdom Kenyon'smaintain vowR3's to Take This Town Vintage comic is musical range visitsCroydon the streets active Marketsurprise Preview star of ■ ^ • H itmsKweek For Everyone in the Business of Music 1 MAY 1993 £2.65 iiistargetCO mftl17 Adestroy forced the eut foundationsin CD prices ofcould the half-hourtives were grillinggiven a lastone-and-a- week. wholeliamentary music selectindustry, committee the par- MalcolmManaging Field, director repeating hisSir toldexaraining this week. CD pricing will be reducecall for dealer manufacturers prices by £2,to twoSenior largest executives and two from of the "cosy"denied relationshipthat his group with had sup- a thesmallest UK will record argue companies that pricing in pliera and defended its support investingchanges willin theprevent new talentthem RichardOur Price Handover managing conceded director thatleader has in mademusic. the UK a world Kaufman adjudicates (centre) as Perry (left) and Ames (right) head EMI and PolyGram délégations atelythat his passed chain hadon thenot immedi-reduced claimsTheir alreadyarguments made will at echolast businesspeople rather without see athose classical fine Tradingmoned. has also been sum- industryPrivately profitability. witnesses who Warnerdealer Musicprice inintroduced 1988. by Goulden,week's hearing. managing Retailer director Alan of recordingsdards for years that ?" 
set the stan- RobinTemple Morton, managing whose director label othershave alreadyyet to appearedappear admit and managingIn the nextdirector session BrianHMV Discountclassical Centre,specialist warned Music the tionThe was record strengthened companies' posi-last says,spécialisés "We're intrying Scottish to put folk, out teedeep members concem thatalready the commit-believe lowerMcLaughlin prices saidbut addedhe favoured HMV thecommittee music against industry singling for outa independentsweek with the late inclusionHyperion of erwise.music that l'm won't putting be heard oth-out CDsLast to beweek overpriced, committee chair- hadly high" experienced CD sales. -

Electro House 2015 Download Soundcloud

Electro house 2015 download soundcloud. Comment by Czerniak Maciek: Lala bon. Comment by Czerniak Maciek: Yeaa sa pette. Users who like Kemal Coban Electro House Club Mix; users who reposted Kemal Coban Electro House Club Mix; playlists containing Kemal Coban Electro House Club Mix. Electro House by EDM Joy. Part of @edmjoy. Worldwide. 5 tracks. Stream tracks and playlists from Electro House @ EDM Joy on your desktop or mobile device. Best EDM Remixes Of Popular Songs - New Electro & House Remix; New Electro & House Best Of EDM Mix; New Electro & House Music Dance Club Party Mix #5; New Dirty Party Electro House Bass Ibiza Dance Mix [ FREE DOWNLOAD -> Click "Buy" ]; BASS BOOSTED CAR MUSIC MIX BEST EDM, BOUNCE, ELECTRO HOUSE. Free tracks! Please follow and I will continue to post songs. All credit goes to the producers of the song; this page is for promotional use only. I will only post songs that are put up for free. 11 tracks. Stream tracks and playlists from Free Electro House on your desktop or mobile device. Listen to the best DJs and radio presenters in the world for free. Free download music, free electronic dance music downloads, and free new EDM songs and tracks. Get free Electro, House, Trance, Dubstep and Mixtape downloads. Exclusive download: New Electro & House Best of Party Mashup, Bootleg, Remix Dance Mix. Follow Maximise Network, Eric Clapman and GANGSTA-HOUSE on Soundcloud. +1 entry: I've already followed Maximise Music, Repost Media, Maximise Network, Eric.

Master Thesis the Changes in the Dutch Dance Music Industry Value

Master thesis: The changes in the Dutch dance music industry value chain due to the digitalization of the music industry. University of Groningen, Faculty of Management & Organization, MSc in Business Administration: Strategy & Innovation. Author: Meike Biesma. Student number: 1677756. Supervisor: Iván Orosa Paleo. Date: 11 May 2009. Abstract: This research investigated the Dutch dance music industry. The industry has changed in response to the changes that the internet has caused in the music industry as a whole. Using a qualitative research design, a literature study and personal interviews were carried out to investigate what changes occurred in the Dutch dance music industry value chain. Where the Dutch dance music industry used to be a physically oriented industry, the digital music format has replaced the physical product. This has had major implications for actors in the industry, because digital sales have not compensated for the losses caused by the decrease in physical sales. The main trigger is music piracy. Digital music is difficult to protect, and it was found that almost 99% of all music available on the internet was illegal. Because of the decline in music sales revenues, actors in the industry have had to change their strategies to remain profitable. New actors have emerged, such as digital music retailers and digital music distributors. Record companies have also changed their business model by vertically integrating other tasks within the dance music industry value chain in order to stay profitable. It was concluded that the value chain of the Dutch dance music industry has changed drastically because of the digitalization of the industry.

New Promo Pack 2021 4 1 1.Pdf

Promo Pack 2021. Danceparty247 was set up in April 2020 and is run by DJ Envy, DJ Wongy and DJ Te. It is a brand where DJs and DJ/producers from around the world come together to stream weekly shows across the internet. As well as UK-based DJs, we have DJs and producers from Spain, Puerto Rico, Portugal, Egypt, Belgium, Czech Republic and South Africa. Danceparty247 streams across several platforms and on our own website, danceparty247.club. We are PPL and PRS compliant on our website by using the Beathubz platform media player. We reach up to 10,000 unique listens a week, made up of live listeners and views of recorded replays. Many of our shows attain 250-300+ concurrent listeners. In association with Type 2 Promotions we are looking to put on regular good-quality events in several parts of the UK, giving Danceparty247 DJs the opportunity to DJ live while making sure that both the venue and ourselves benefit from the arrangement. We are open to discussing any ideas you may have. We are looking in the first instance to put on a regular event once a month with yourselves. Given the opportunity, we would like to arrange a Zoom meeting or WhatsApp video call with yourselves to discuss our proposal further. Benny Bereal, DJ Envy, DJ Wongy, DJ Te. Email: [email protected] WhatsApp: Benny Bereal 07765083211, Simon Wright 07903557538, Martin Young 07851465067. Type2 Productions. Benny Bereal: I am an Actor, Writer, Director and Promoter. I have been working in the film and TV industry for 5 years and have worked on programmes for channels such as the BBC, ITV and CH5, and I have worked alongside names such as Pierce Brosnan, Dave Bautista, David Tennant and Margot Robbie.

Aaron Spectre «Lost Tracks» (Ad Noiseam, 2007) | Reviews На Arrhythmia Sound

Aaron Spectre «Lost Tracks» (Ad Noiseam, 2007). Submitted by: Quarck | 19.04.2008. Aaron Spectre, also known for the project Drumcorps, reveals a completely new side of his work. In contrast to his frenzied breakgrindcore, Spectre delivers a stylish downtempo/IDM album. Lost Tracks is the result of six years of work, yet it sounds very fresh and original. The sound is minimalistic, without excess, but the melodies are very beautiful and the beat is powerful, clear and pronounced. One highlight is Break Ya Neck (Remix), somewhat mystical and mysterious, with a feeling like wandering somewhere in the dusk. There is room for vocals too: on the track Degrees, the gentle voice of Kazumi (Pink Lilies) is heard. As a result, Lost Tracks can safely be put on a par with the works of such mastodons as Lusine, Murcof and Hecq. Tags: downtempo, idm. Source: http://www.arrhythmiasound.com/en/review/aaron-spectre-lost-tracks-ad-noiseam-2007.html

Garage House Music Whats up with That

Garage House Music whats up with that. Future funk is a sample-based development of nu-disco that emerged from the vaporwave scene in the early 2010s. It tends to be more energetic than vaporwave, incorporating elements of French house and synth funk and making use of vaporwave editing techniques. A style from the mid-2010s, often described as a blend of UK garage and deep house with other elements and techniques from EDM and popularized in late 2014 and into 2015, typically mixes deep, metallic or sax hooks with heavy drops somewhat like those found in future garage. Garage house is one of the first house genres, with origins in New York and New Jersey. It was named after the Paradise Garage in New York City, which operated from 1977 to 1987 under the influential resident DJ Larry Levan. Garage house developed alongside Chicago house, and the two shared similarities and influenced each other. One difference from Chicago house was that the vocals in garage house drew more heavily on gospel. Notable examples include Adeva and Tony Humphries. Kristine W is an example of a musician involved with garage house outside the genre's place of origin. Also known as G-house, it features minimal 808 and 909 drum machine-driven tracks and often sexually explicit lyrics. See also: ghettotech, juke house, footwork. It combines elements of Chicago's ghetto house with electro, Detroit techno, Miami bass and UK garage. It features four-on-the-floor rhythms and is usually faster than most other dance music genres, at roughly 145 to 160 BPM.

Musikstile Quelle: Alphabetisch Geordnet Von Mukerbude

Music styles. Source: www.recordsale.de. Alphabetically ordered by MukerBude: 2-Step/BritishGarage - AcidHouse - AcidJazz - AcidRock - AcidTechno - Acappella - AcousticBlues - AcousticChicagoBlues - AdultAlternative - AdultAlternativePop/Rock - AdultContemporary - Africa - AfricanJazz - Afro - Afro-Pop - AlbumRock - Alternative - AlternativeCountry - AlternativeDance - AlternativeFolk - AlternativeMetal - AlternativePop/Rock - AlternativeRap - Ambient - AmbientBreakbeat - AmbientDub - AmbientHouse - AmbientPop - AmbientTechno - Americana - AmericanPopularSong - AmericanPunk - AmericanTradRock - AmericanUnderground - AMPop Orchestral - ArenaRock - Argentina - Asia - AussieRock - Australia - Avant - Avant-Garde - Avntg - Ballads - Baroque - BaroquePop - BassMusic - Beach - BeatPoetry - BigBand - BigBeat - BlackGospel - Blaxploitation - Blue-EyedSoul - Blues - Blues-Rock - BluesRevival - Blues - Spain - Boogie Woogie - Bop - Bolero - Boogaloo - BoogieRock - BossaNova - Brazil - BrazilianJazz - BrazilianPop - BrillBuildingPop - Britain - BritishBlues - BritishDanceBands - BritishFolk - BritishFolk Rock - BritishInvasion - BritishMetal - BritishPsychedelia - BritishPunk - BritishRap - BritishTradRock - Britpop - BrokenBeat - Bubblegum - C-86 - Cabaret - Cajun - Calypso - Canada - CanterburyScene - Caribbean - CaribbeanFolk - CastRecordings - CCM - CCM - Celebrity - Celtic - Celtic - CelticFolk - CelticFusion - CelticPop - CelticRock - ChamberJazz - ChamberMusic - ChamberPop - Chile - Choral - ChicagoBlues - ChicagoSoul - Child - Children'sFolk - Christmas

Quantifying Music Trends and Facts Using Editorial Metadata from the Discogs Database

QUANTIFYING MUSIC TRENDS AND FACTS USING EDITORIAL METADATA FROM THE DISCOGS DATABASE. Dmitry Bogdanov, Xavier Serra. Music Technology Group, Universitat Pompeu Fabra. [email protected], [email protected] ABSTRACT: While a vast amount of editorial metadata is being actively gathered and used by music collectors and enthusiasts, it is often neglected by music information retrieval and musicology researchers. In this paper we propose to explore Discogs, one of the largest databases of such data available in the public domain. Our main goal is to show how large-scale analysis of its editorial metadata can raise questions and serve as a tool for musicological research on a number of example studies. The metadata that we use describes music releases, such as albums or EPs. It includes information about artists, tracks and their durations, genre and style, format (such as vinyl, CD, or digital files), year and country of each release. Discogs metadata contains information about music releases (such as albums or EPs) including artists name, track list including track durations, genre and style, format (e.g., vinyl or CD), year and country of release. It also contains information about artist roles and relations as well as recording companies and labels. The quality of the data in Discogs is considered to be high among music collectors because of its strict guidelines, moderation system and a large community of involved enthusiasts. The database contains contributions by more than 347,000 people. It contains 8.4 million releases by 5 million artists covering a wide range of genres and styles (although the database was initially focused on Electronic music). Remarkably, it is the largest open database containing

Ambient Music Guide's Best Albums of 2015 Part 2 - Chillout Mix

Ambient Music Guide's Best Albums of 2015 Part 2 - Chillout Mix

| START (m/s) | TRACK TITLE | ARTIST | ALBUM/E.P. |
| --- | --- | --- | --- |
| 00’00 | Expansion | Ascendant | Ætheral Code (Synphaera Records) |
| 07’52 | Terra Firma | Pan Electric & Ishq | Elemental Journey (Pink Lizard Music) |
| 13’53 | Nature Boy | Another Fine Day | A Good Place to Be (Interchill Records) |
| 18’36 | Luftrum 8 | Motionfield | Luftrum (Carpe Sonum Records) |
| 25’37 | Are We Learning Yet? | Shakta (Seb Taylor) | Collected Downtempo vol. 2 (Tribal Shift Records) |
| 29’30 | Are You Connectome? | The Dread | Plastination of Otis T Fernbank (thedreadband.com) |
| 34’19 | Messianic | Hibernation (Seb Taylor) | Collected Downtempo vol. 3 (Tribal Shift Records) |
| 38’53 | You Are The Stars | Kick Bong | A Waking Dream (Cosmicleaf) |
| 43’33 | Point Of View | Kurt Stenzel | Jodorowsky's Dune soundtrack (Cinewax) |
| 45’55 | World 1 | Si Matthews | Tales of Ten Worlds (Carpe Sonum) |
| 54’46 | Sea Surfaces | Martin Nonstatic | Granite (Ultimae Records) |
| 60’42 | Unfamiliar Shadows | Twilight Archive | Mood Chain (Electrophonogram) |
| 65’39 | Nespole | Floating Points | Elaenia (Luaka Bop) |
| 70’14 | Strands | Ascendant | Various - Ambient Online vol. 4 (Ambientonline.org) |
| 77’40 | Gonglaing | Ebauche | Adrift (Invisible Agent Records) |
| 85’32 | Voila | St Germain | St Germain (Nonesuch/Parlophone) |
| 91’31 | The Beginning + Viola | Abakus + St Germain | The Beginning/Dreamer e.p. (Modus Recordings) |
| 97’28 | Last Train To Lhasa (ambient mix) | Banco de Gaia | Last Train To Lhasa: 20th Anniversary Edition (Disco Gecko) |
| 101’52 | Last Train To Lhasa (Astropilot remix) | Banco de Gaia | Last Train To Lhasa: 20th Anniversary Edition (Disco Gecko) |
| 107’14 | Dreamer | Abakus | The Beginning/Dreamer e.p. |

Sooloos Collections: Advanced Guide

Sooloos Collections: Advanced Guide. Contents: Introduction; Organising and Using a Sooloos Collection; Working with Sets; Organising through Naming; Album Detail; Finding Content (Explore, Search, Focus)


BEATPORT LAUNCHES NEW ORGANIC HOUSE / DOWNTEMPO GENRE CATEGORY a New Space Dedicated to Deeper, Slower, and More Meditative Shades of House Music

FOR IMMEDIATE RELEASE BEATPORT LAUNCHES NEW ORGANIC HOUSE / DOWNTEMPO GENRE CATEGORY A new space dedicated to deeper, slower, and more meditative shades of house music. BERLIN, DE - JUNE 23, 2020 - Beatport, the world’s number one destination for electronic music, has announced that it has expanded its deep genre classifications even further, with the launch of Organic House / Downtempo. Cultivated by acts such as Sabo, BLOND:ISH, Britta Arnold, Shawni, Bedouin, Nicola Cruz, Oliver Koletzki, Lazarusman, Namito, Amonita, Behrouz, Amine K, Mira, Acid Pauli, M.A.N.D.Y., Damian Lazarus, YokoO, KMLN, Pachanga Boys, Monolink, and many more, the slow and radiant sound of this particular style of dance music has emerged from desert-dwelling parties to a place of global fandom that warrants its own space on Beatport. The genre includes storied labels such as Do Not Sit On The Furniture, Sol Selectas, Crosstown Rebels, Desert Dwellers, Get Physical, RADIANT., Hoomidaas Records, Pipe & Pochet, and Bercana Music. The new genre coalesced around feedback Beatport received from its prominent labels, artists, distributors, and the Organic House / Downtempo community. Whereas previously this genre was spread across Afro House, Deep House, Electronica, Melodic House & Techno, this music now has its own space in which to thrive and showcase its many talented artists and often long-running labels. Speaking about the launch, Raphael Pujol, Beatport Head of Curation, stated, “We are thrilled to give the extra visibility to this music through the creation of the Organic House / Downtempo genre classification. This unique sound was scattered over 3 or 4 genres and made it very difficult for customers to find the music they love, and to give the amount of exposure they deserve to labels and artists.” Echoing Pujol are a few of the genre’s leading artists, like Acid Pauli "Up to now it has been quite challenging to find this kind of music here.