Validity Semantics in Educational and Psychological Assessment

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Validities of PEP and Some Characteristic Formulas in Modal Logic

THE VALIDITIES OF PEP AND SOME CHARACTERISTIC FORMULAS IN MODAL LOGIC Hong Zhang, Huacan He School of Computer Science, Northwestern Polytechnical University, Xi'an, Shaanxi 710032, P.R. China Abstract: In this paper, we discuss the relationships between some characteristic formulas in normal modal logic and their frames. We find that the validities of the modal formulas are conditional even though some of them are intuitively valid. Finally, we prove the validities of two formulas of the Position-Exchange-Principle (PEP) proposed by papers 1 to 3 by using modal logic and Kripke's semantics of possible worlds. Key words: Characteristic Formulas, Validity, PEP, Frame 1. INTRODUCTION Reasoning about knowledge and belief is an important issue in the research of multi-agent systems, and reasoning about other agents' states and actions, which is built on the main ideas of situational calculus, is one of the most important directions in AI. Papers [1-3] put forward an axiom schema for reasoning about others (RAO) in multi-agent systems, from which a rule called the Position-Exchange-Principle (PEP) was abstracted: $C(\varphi \to \psi) \to (C\varphi \to C\psi)$, where $C$ is a sequence of belief operators. When the length of $C$ is at least 2, it reflects the mechanism of an agent reasoning about the knowledge of others. For example, if $C$ is replaced with $B_i B_j$, then it becomes $B_i B_j(\varphi \to \psi) \to (B_i B_j\varphi \to B_i B_j\psi)$. The axiom schema plays a useful role, like that of modus ponens and axiom K, in simplifying proofs. Though examples were demonstrated as proofs in papers [1-3], from the perspectives of both semantics and syntax the rule is not so compelling.

Assessment Center Structure and Construct Validity: A New Hope

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2015 Assessment Center Structure and Construct Validity: A New Hope Christopher Wiese University of Central Florida Part of the Psychology Commons This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. STARS Citation Wiese, Christopher, "Assessment Center Structure and Construct Validity: A New Hope" (2015). Electronic Theses and Dissertations, 2004-2019. 733. https://stars.library.ucf.edu/etd/733 ASSESSMENT CENTER STRUCTURE AND CONSTRUCT VALIDITY: A NEW HOPE by CHRISTOPHER W. WIESE B.S., University of Central Florida, 2008 A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Psychology in the College of Sciences at the University of Central Florida Orlando, Florida Summer Term 2015 Major Professor: Kimberly Smith-Jentsch © 2015 Christopher Wiese ABSTRACT Assessment Centers (ACs) are a fantastic method to measure behavioral indicators of job performance in multiple diverse scenarios. Based upon a thorough job analysis, ACs have traditionally demonstrated very strong content and criterion-related validity. However, researchers have been puzzled for over three decades by the lack of evidence concerning construct validity. ACs are designed to measure critical job dimensions throughout multiple situational exercises. However, research has consistently revealed that different behavioral ratings within these scenarios are more strongly related to one another (exercise effects) than the same dimension rating across scenarios (dimension effects).

Construct Validity and Reliability of the Work Environment Assessment Instrument WE-10

International Journal of Environmental Research and Public Health Article Construct Validity and Reliability of the Work Environment Assessment Instrument WE-10 Rudy de Barros Ahrens 1,*, Luciana da Silva Lirani 2 and Antonio Carlos de Francisco 3 1 Department of Business, Faculty Sagrada Família (FASF), Ponta Grossa, PR 84010-760, Brazil 2 Department of Health Sciences Center, State University Northern of Paraná (UENP), Jacarezinho, PR 86400-000, Brazil 3 Department of Industrial Engineering and Post-Graduation in Production Engineering, Federal University of Technology – Paraná (UTFPR), Ponta Grossa, PR 84017-220, Brazil * Correspondence: Rudy de Barros Ahrens Received: 1 September 2020; Accepted: 29 September 2020; Published: 9 October 2020 Abstract: The purpose of this study was to validate the construct and reliability of an instrument to assess the work environment as a single tool based on quality of life (QL), quality of work life (QWL), and organizational climate (OC). The methodology tested the construct validity through Exploratory Factor Analysis (EFA) and reliability through Cronbach's alpha. The EFA returned a Kaiser–Meyer–Olkin (KMO) value of 0.917, which demonstrated that the data were adequate for the factor analysis, and a significant Bartlett's test of sphericity (χ² = 7465.349; df = 1225; p ≤ 0.000). After the EFA, the varimax rotation method was employed for a factor through commonality analysis, reducing the 14 initial factors to 10. Only question 30 presented commonality lower than 0.5, and the other questions returned values higher than 0.5 in the commonality analysis. Regarding the reliability of the instrument, all of the questions presented reliability, as the values varied between 0.953 and 0.956.
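
The abstract above reports reliability through Cronbach's alpha. As a rough illustration of what that statistic computes, here is a minimal sketch using the standard textbook formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). The function name `cronbach_alpha` and the toy responses are assumptions made for illustration; they are not the WE-10 data or the authors' procedure.

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Uses sample variances (n - 1 denominator) for both items and totals.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Transpose so that each tuple holds all respondents' answers to one item.
    items = list(zip(*scores))
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

# Hypothetical responses: 5 respondents, 3 items on a 1-5 scale.
toy_responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(round(cronbach_alpha(toy_responses), 3))
```

Values close to 1, such as the 0.953 to 0.956 range quoted in the abstract, indicate that the items vary together strongly relative to their individual variances.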

The Substitutional Analysis of Logical Consequence

THE SUBSTITUTIONAL ANALYSIS OF LOGICAL CONSEQUENCE Volker Halbach∗ draft version ⋅ please don't quote 2nd June 2016 Consequentia 'formalis' vocatur quae in omnibus terminis valet retenta forma consimili. Vel si vis expresse loqui de vi sermonis, consequentia formalis est cui omnis propositio similis in forma quae formaretur esset bona consequentia [...] Iohannes Buridanus, Tractatus de Consequentiis (Hubien 1976, I.3, p. 22f) Abstract: A substitutional account of logical truth and consequence is developed and defended. Roughly, a substitution instance of a sentence is defined to be the result of uniformly substituting nonlogical expressions in the sentence with expressions of the same grammatical category. In particular atomic formulae can be replaced with any formulae containing. The definition of logical truth is then as follows: A sentence is logically true iff all its substitution instances are always satisfied. Logical consequence is defined analogously. The substitutional definition of validity is put forward as a conceptual analysis of logical validity at least for sufficiently rich first-order settings. In Kreisel's squeezing argument the formal notion of substitutional validity naturally slots in to the place of informal intuitive validity. ∗I am grateful to Beau Mount, Albert Visser, and Timothy Williamson for discussions about the themes of this paper. 1 Formal validity At the origin of logic is the observation that arguments sharing certain forms never have true premisses and a false conclusion. Similarly, all sentences of certain forms are always true. Arguments and sentences of this kind are formally valid. From the outset logicians have been concerned with the study and systematization of these arguments, sentences and their forms.

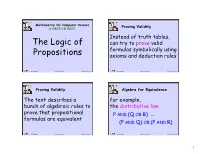

Propositional Logic (PDF)

Mathematics for Computer Science 6.042J/18.062J The Logic of Propositions Albert R Meyer February 14, 2014 Proving Validity: Instead of truth tables, can try to prove valid formulas symbolically using axioms and deduction rules. Proving Validity: The text describes a bunch of algebraic rules to prove that propositional formulas are equivalent. Algebra for Equivalence: for example, the distributive law P AND (Q OR R) ≡ (P AND Q) OR (P AND R). Algebra for Equivalence: for example, DeMorgan's law NOT(P AND Q) ≡ NOT(P) OR NOT(Q). Algebra for Equivalence: The set of rules for ≡ in the text are complete: if two formulas are ≡, these rules can prove it. A Proof System: Another approach is to start with some valid formulas (axioms) and deduce more valid formulas using proof rules. A Proof System: Lukasiewicz' proof system is a particularly elegant example of this idea. It covers formulas whose only logical operators are IMPLIES (→) and NOT. Lukasiewicz' Proof System: Axioms: 1) (¬P → P) → P 2) P → (¬P → Q) 3) (P → Q) → ((Q → R) → (P → R)) The only rule: modus ponens. Lukasiewicz' Proof System: Prove formulas by starting with axioms and repeatedly applying the inference rule.
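
The slides contrast symbolic proof with truth tables. For comparison, the truth-table side can be sketched in a few lines: the snippet below brute-forces every assignment to confirm the distributive law, DeMorgan's law, and the validity of the three Lukasiewicz axioms quoted above. The helper names `implies`, `is_valid`, and `are_equivalent` are assumptions introduced for this illustration and do not come from the course text.

```python
from itertools import product

def implies(a, b):
    """Material implication: a IMPLIES b."""
    return (not a) or b

def is_valid(formula, num_vars):
    """True if `formula` is True under every truth assignment to its variables."""
    return all(formula(*values)
               for values in product([False, True], repeat=num_vars))

def are_equivalent(f, g, num_vars):
    """Two formulas are equivalent when they agree on every assignment."""
    return is_valid(lambda *v: f(*v) == g(*v), num_vars)

# Distributive law: P AND (Q OR R) ≡ (P AND Q) OR (P AND R)
distributive = are_equivalent(
    lambda p, q, r: p and (q or r),
    lambda p, q, r: (p and q) or (p and r),
    3,
)

# DeMorgan's law: NOT(P AND Q) ≡ NOT(P) OR NOT(Q)
de_morgan = are_equivalent(
    lambda p, q: not (p and q),
    lambda p, q: (not p) or (not q),
    2,
)

# The three axioms listed on the slides, each checked for validity.
axioms_valid = all([
    is_valid(lambda p: implies(implies(not p, p), p), 1),
    is_valid(lambda p, q: implies(p, implies(not p, q)), 2),
    is_valid(lambda p, q, r: implies(implies(p, q),
                                     implies(implies(q, r), implies(p, r))), 3),
])

print(distributive, de_morgan, axioms_valid)  # prints: True True True
```

The check takes 2^n evaluations for n variables, which is why the slides turn to axioms and inference rules as an alternative.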

A Validity Framework for the Use and Development of Exported Assessments

A Validity Framework for the Use and Development of Exported Assessments By María Elena Oliveri, René Lawless, and John W. Young. The authors would like to acknowledge Bob Mislevy, Michael Kane, Kadriye Ercikan, and Maurice Hauck for their valuable input and expertise in reviewing various iterations of the framework as well as Priya Kannan, Don Powers, and James Carlson for their input throughout the technical review process. Copyright © 2015 Educational Testing Service. All Rights Reserved. ETS, the ETS logo, GRADUATE RECORD EXAMINATIONS, GRE, LISTENING. LEARNING. LEADING, TOEIC, and TOEFL are registered trademarks of Educational Testing Service (ETS). EXADEP and EXAMEN DE ADMISIÓN A ESTUDIOS DE POSGRADO are trademarks of ETS. Abstract In this document, we present a framework that outlines the key considerations relevant to the fair development and use of exported assessments. Exported assessments are developed in one country and are used in countries with a population that differs from the one for which the assessment was developed. Examples of these assessments include the Graduate Record Examinations® (GRE®) and el Examen de Admisión a Estudios de Posgrado™ (EXADEP™), among others. Exported assessments can be used to make inferences about performance in the exporting country or in the receiving country. To illustrate, the GRE can be administered in India and be used to predict success at a graduate school in the United States, or similarly it can be administered in India to predict success at a graduate school in India.

Construct Validity in Psychological Tests

CONSTRUCT VALIDITY IN PSYCHOLOGICAL TESTS L. J. CRONBACH and P. E. MEEHL Construct Validity in Psychological Tests VALIDATION of psychological tests has not yet been adequately conceptualized, as the APA Committee on Psychological Tests learned when it undertook (1950-54) to specify what qualities should be investigated before a test is published. In order to make coherent recommendations the Committee found it necessary to distinguish four types of validity, established by different types of research and requiring different interpretation. The chief innovation in the Committee's report was the term construct validity.* This idea was first formulated by a subcommittee (Meehl and R. [...] validity seems only to have stirred the muddy waters. Portions of the distinctions we shall discuss are implicit in Jenkins' paper, "Validity for What?" (33), Gulliksen's "Intrinsic Validity" (27), Goodenough's distinction between tests as "signs" and "samples" (22), Cronbach's separation of "logical" and "empirical" validity (11), Guilford's "factorial validity" (25), and Mosier's papers on "face validity" and "validity generalization" (49, 50). Helen Peak (52) comes close to an explicit statement of construct validity as we shall present it. Four Types of Validation The categories into which the Recommendations divide validity studies are: predictive validity, concurrent validity, content validity, and construct validity. The first two of these may be considered together as criterion-oriented validation procedures. The pattern of a criterion-oriented study is familiar. The investigator is primarily interested in some criterion which he wishes to predict. He administers the test, obtains an independent criterion measure on the

Questionnaire Validity and Reliability

Questionnaire Validity and Reliability Department of Social and Preventive Medicine, Faculty of Medicine Outline: Introduction; What is validity and reliability?; Types of validity and reliability; How do you measure them?; Types of sampling methods; Sample size calculation; G-Power (power analysis). Research • The systematic investigation into and study of materials and sources in order to establish facts and reach new conclusions • In the broadest sense of the word, research includes the gathering of data in order to generate information and establish the facts for the advancement of knowledge. ● Step 1: Define the research problem ● Step 2: Develop a research plan and research design ● Step 3: Define the variables and instrument (validity and reliability) ● Step 4: Sample and collect data ● Step 5: Analyse the data ● Step 6: Present the findings A questionnaire is • A technique for collecting data in which a respondent provides answers to a series of questions. • The vehicle used to pose the questions that the researcher wants respondents to answer. • The validity of the results depends on the quality of these instruments. • Good questionnaires are difficult to construct; bad questionnaires are difficult to analyze. • Identify the goal of your questionnaire • What kind of information do you want to gather with your questionnaire? • What is your main objective? • Is a questionnaire the best way to go about collecting this information? How To Obtain Valid Information • Ask purposeful questions • Ask concrete questions • Use time periods

Validity and Soundness

1.4 Validity and Soundness A deductive argument proves its conclusion ONLY if it is both valid and sound. Validity: An argument is valid when, IF all of its premises were true, then the conclusion would also HAVE to be true. In other words, a "valid" argument is one where the conclusion necessarily follows from the premises. It is IMPOSSIBLE for the conclusion to be false if the premises are true. Here's an example of a valid argument: 1. All philosophy courses are courses that are super exciting. 2. All logic courses are philosophy courses. 3. Therefore, all logic courses are courses that are super exciting. Note #1: IF (1) and (2) WERE true, then (3) would also HAVE to be true. Note #2: Validity says nothing about whether or not any of the premises ARE true. It only says that IF they are true, then the conclusion must follow. So, validity is more about the FORM of an argument, rather than the TRUTH of an argument. So, an argument is valid if it has the proper form. An argument can have the right form, but be totally false, however. For example: 1. Daffy Duck is a duck. 2. All ducks are mammals. 3. Therefore, Daffy Duck is a mammal. The argument just given is valid. But premise 2 and the conclusion are both false. Notice however that, IF the premises WERE true, then the conclusion would also have to be true. This is all that is required for validity. A valid argument need not have true premises or a true conclusion.
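
The point that validity concerns form rather than truth can be illustrated by searching for counterexamples. The sketch below reads "All X are Y" as a subset relation and tries every interpretation of three categories over a three-element universe, looking for a case where the premises hold and the conclusion fails. The function names and the tiny universe are assumptions made for illustration, and a bounded search of this kind is only a sanity check, not a proof of validity in general.

```python
from itertools import combinations, product

def subsets(universe):
    """All subsets of `universe`, as frozensets."""
    items = list(universe)
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]

def find_counterexample(premises, conclusion, universe=range(3)):
    """Return sets (a, b, c) with true premises and a false conclusion, or None."""
    for a, b, c in product(subsets(universe), repeat=3):
        if all(p(a, b, c) for p in premises) and not conclusion(a, b, c):
            return a, b, c
    return None

# Form of the example above: All B are C. All A are B. Therefore, all A are C.
valid_form = find_counterexample(
    premises=[lambda a, b, c: b <= c, lambda a, b, c: a <= b],
    conclusion=lambda a, b, c: a <= c,
)

# A different, invalid form: All A are B. All C are B. Therefore, all A are C.
invalid_form = find_counterexample(
    premises=[lambda a, b, c: a <= b, lambda a, b, c: c <= b],
    conclusion=lambda a, b, c: a <= c,
)

print(valid_form)                 # no counterexample exists for the first form
print(invalid_form is not None)   # the second form does have one
```

Note that the search never asks whether the premises are actually true of philosophy courses or ducks; it only inspects the shape of the argument.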

How to Create Scientifically Valid Social and Behavioral Measures on Gender Equality and Empowerment

April 2020 EMERGE MEASUREMENT GUIDELINES REPORT 2: How to Create Scientifically Valid Social and Behavioral Measures on Gender Equality and Empowerment BACKGROUND ON THE EMERGE PROJECT EMERGE (Evidence-based Measures of Empowerment for Research on Gender Equality), created by the Center on Gender Equity and Health at UC San Diego, is a project focused on the quantitative measurement of gender equality and empowerment (GE/E) to monitor and/or evaluate health and development programs, and national or subnational progress on UN Sustainable Development Goal (SDG) 5: Achieve Gender Equality and Empower All Girls. For the EMERGE project, we aim to identify, adapt, and develop reliable and valid quantitative social and behavioral measures of GE/E based on established principles and methodologies of measurement, with a focus on 9 dimensions of GE/E: psychological, social, economic, legal, political, health, household and intra-familial, environment and sustainability, and time use/time poverty.¹ Social and behavioral measures across these dimensions largely come from the fields of public health, psychology, economics, political science, and sociology. Box 1. Defining Gender Equality and Empowerment (GE/E) Gender equality is a form of social equality in which one's rights, responsibilities, and opportunities are not affected by gender considerations. Gender empowerment is a type of social empowerment geared at improving one's autonomy and self-determination within a particular culture or context.¹ OBJECTIVE OF THIS REPORT The objective of this report is to provide guidance on the creation and psychometric testing of GE/E scales. There are many good GE/E measures, and we highly recommend using previously validated measures when possible.

On Synthetic Undecidability in Coq, with an Application to the Entscheidungsproblem

On Synthetic Undecidability in Coq, with an Application to the Entscheidungsproblem Yannick Forster, Dominik Kirst, Gert Smolka Saarland University, Saarbrücken, Germany Abstract We formalise the computational undecidability of validity, satisfiability, and provability of first-order formulas following a synthetic approach based on the computation native to Coq's constructive type theory. Concretely, we consider Tarski and Kripke semantics as well as classical and intuitionistic natural deduction systems and provide compact many-one reductions from the Post correspondence problem (PCP). Moreover, developing a basic framework for synthetic computability theory in Coq, we formalise standard results concerning decidability, enumerability, and reducibility without reference to a concrete model of computation. [...] like decidability, enumerability, and reductions are available without reference to a concrete model of computation such as Turing machines, general recursive functions, or the λ-calculus. For instance, representing a given decision problem by a predicate $p$ on a type $X$, a function $f : X \to \mathbb{B}$ with $\forall x.\ p\,x \leftrightarrow f\,x = \mathsf{tt}$ is a decision procedure, a function $g : \mathbb{N} \to X$ with $\forall x.\ p\,x \leftrightarrow (\exists n.\ g\,n = x)$ is an enumeration, and a function $h : X \to Y$ with $\forall x.\ p\,x \leftrightarrow q\,(h\,x)$ for a predicate $q$ on a type $Y$ is a many-one reduction from $p$ to $q$. Working formally with concrete models instead is cumbersome, given that every defined procedure needs to be shown representable by a concrete entity of the model.
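
The three synthetic notions described in the excerpt translate almost word for word into a proof assistant. The following is a small sketch in Lean 4 rather than Coq, so it is a transliteration and not the authors' development; the names `Decider`, `Enumerator`, `ManyOneReduction`, and `decider_of_reduction` are assumptions chosen for this illustration.

```lean
-- f is a decision procedure for p: p x holds exactly when f x returns true.
def Decider {X : Type} (p : X → Prop) (f : X → Bool) : Prop :=
  ∀ x, p x ↔ f x = true

-- g is an enumeration of p: p x holds exactly when g outputs x at some index.
def Enumerator {X : Type} (p : X → Prop) (g : Nat → X) : Prop :=
  ∀ x, p x ↔ ∃ n, g n = x

-- h is a many-one reduction from p to q.
def ManyOneReduction {X Y : Type} (p : X → Prop) (q : Y → Prop)
    (h : X → Y) : Prop :=
  ∀ x, p x ↔ q (h x)

-- Composing a reduction from p to q with a decider for q decides p.
theorem decider_of_reduction {X Y : Type} {p : X → Prop} {q : Y → Prop}
    {h : X → Y} {f : Y → Bool}
    (hr : ManyOneReduction p q h) (hd : Decider q f) :
    Decider p (fun x => f (h x)) :=
  fun x => Iff.trans (hr x) (hd (h x))
```

Read contrapositively, the last statement is the pattern behind undecidability proofs by reduction: if p has no decision procedure and p reduces to q, then q has none either.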

Reliability & Validity

Reliability & Validity • More is Better • Properties of a "good measure" – Standardization – Reliability – Validity • Reliability – Inter-rater – Internal – External • Validity – Criterion-related – Face – Content – Construct Another bit of jargon… In sampling, we are trying to represent a population of individuals; in measurement, we are trying to represent a domain of behaviors. In sampling, we select participants; in measurement, we select items. The resulting sample of participants is intended to represent the population; the resulting scale/test of items is intended to represent the domain. For both, "more is better": "more gives greater representation". Whenever we've considered research designs and statistical conclusions, we've always been concerned with "sample size". We know that larger samples (more participants) lead to ... • more reliable estimates of mean and std, r, F & X² • more reliable statistical conclusions • quantified as fewer Type I and II errors The same principle applies to scale construction - "more is better" • but now it applies to the number of items comprising the scale • more (good) items lead to a better scale… • more adequately represent the content/construct domain • provide a more consistent total score (respondent can change more items before total is changed much) Desirable Properties of Psychological Measures Reliability (Agreement or Consistency) Interpretability of Individual and Group Scores Inter-rater or Inter-observer reliability • do multiple observers/coders score an item the same way? • critical whenever using subjective measures