Introduction to Validity

Recommended publications

Internal Validity Is About Causal Interpretability

Internal Validity is about Causal Interpretability. Before we can discuss Internal Validity, we have to discuss different types of variables and review causal RH:s and the evidence needed to support them…

• Measured & Manipulated Variables & Constants
• Causes, Effects, Controls & Confounds
• Components of Internal Validity
• “Creating” initial equivalence
• “Maintaining” ongoing equivalence
• Interrelationships between Internal Validity & External Validity

Every behavior/measure used in a research study is either a ...
Constant: all the participants in the study have the same value on that behavior/measure, or a ...
Variable: at least some of the participants in the study have different values on that behavior/measure
and every behavior/measure is either …
Measured: the value of that behavior/measure is obtained by observation or self-report of the participant (often called “subject constant/variable”), or it is …
Manipulated: the value of that behavior/measure is controlled, delivered, determined, etc., by the researcher (often called “procedural constant/variable”)

So, every behavior/measure in any study is one of four types….
• measured (subject) constant
• measured (subject) variable
• manipulated (procedural) constant
• manipulated (procedural) variable

Identify each of the following (as one of the four above, duh!)…
• Participants reported practicing between 3 and 10 times
• All participants were given the same set of words to memorize
• Each participant reported they were a Psyc major
• Each participant was given either the “homicide” or the “self-defense” vignette to read

From before... Circle the manipulated/causal & underline measured/effect variable in each
• Causal RH: differences in the amount or kind of one behavior cause/produce/create/change/etc. -

Technology-Enabled and Universally Designed Assessment: Considering Access in Measuring the Achievement of Students with Disabilities— a Foundation for Research

The Journal of Technology, Learning, and Assessment, Volume 10, Number 5 · November 2010. Technology-Enabled and Universally Designed Assessment: Considering Access in Measuring the Achievement of Students with Disabilities—A Foundation for Research. Patricia Almond, Phoebe Winter, Renée Cameto, Michael Russell, Edynn Sato, Jody Clarke-Midura, Chloe Torres, Geneva Haertel, Robert Dolan, Peter Beddow, & Sheryl Lazarus. www.jtla.org. A publication of the Technology and Assessment Study Collaborative, Caroline A. & Peter S. Lynch School of Education, Boston College. Editor: Michael Russell, [email protected], Technology and Assessment Study Collaborative, Lynch School of Education, Boston College, Chestnut Hill, MA 02467. Copy Editor: Jennifer Higgins. Design: Thomas Hoffmann. Layout: Aimee Levy. JTLA is a free online journal, published by the Technology and Assessment Study Collaborative, Caroline A. & Peter S. Lynch School of Education, Boston College. Copyright ©2010 by the Journal of Technology, Learning, and Assessment (ISSN 1540-2525). Permission is hereby granted to copy any article provided that the Journal of Technology, Learning, and Assessment is credited and copies are not sold. Preferred citation: Almond, P., Winter, P., Cameto, R., Russell, M., Sato, E., Clarke-Midura, J., Torres, C., Haertel, G., Dolan, R., Beddow, P., & Lazarus, S. (2010). Technology-Enabled and Universally Designed Assessment: Considering Access in Measuring the Achievement of Students with Disabilities—A Foundation for Research. -

Validity and Reliability of the Questionnaire for Compliance with Standard Precaution for Nurses

Rev Saúde Pública 2015;49:87. Original Articles. DOI:10.1590/S0034-8910.2015049005975. Validity and reliability of the Questionnaire for Compliance with Standard Precaution. Marília Duarte Valim (I), Maria Helena Palucci Marziale (II), Miyeko Hayashida (II), Fernanda Ludmilla Rossi Rocha (III), Jair Lício Ferreira Santos (IV). ABSTRACT. OBJECTIVE: To evaluate the validity and reliability of the Questionnaire for Compliance with Standard Precaution for nurses. METHODS: This methodological study was conducted with 121 nurses from health care facilities in Sao Paulo’s countryside, who were represented by two high-complexity and by three average-complexity health care facilities. Internal consistency was calculated using Cronbach’s alpha and stability was calculated by the intraclass correlation coefficient, through test-retest. Convergent, discriminant, and known-groups construct validity techniques were conducted. RESULTS: The questionnaire was found to be reliable (Cronbach’s alpha: 0.80; intraclass correlation coefficient: 0.97). In regard to the convergent and discriminant construct validity, strong correlation was found between compliance with standard precautions, the perception of a safe environment, and the smaller perception of obstacles to following such precautions (r = 0.614 and r = 0.537, respectively). The nurses who were trained on the standard precautions and worked in the health care facilities of higher complexity were shown to comply more (p = 0.028 and p = 0.006, respectively). CONCLUSIONS: The Brazilian version of the Questionnaire for Compliance with Standard Precaution was shown to be valid and reliable. (I) Departamento de Enfermagem. Centro… -

The Validities of Pep and Some Characteristic Formulas in Modal Logic

THE VALIDITIES OF PEP AND SOME CHARACTERISTIC FORMULAS IN MODAL LOGIC. Hong Zhang, Huacan He, School of Computer Science, Northwestern Polytechnical University, Xi'an, Shaanxi 710032, P.R. China. Abstract: In this paper, we discuss the relationships between some characteristic formulas in normal modal logic and their frames. We find that the validities of the modal formulas are conditional even though some of them are intuitively valid. Finally, we prove the validities of two formulas of the Position-Exchange-Principle (PEP) proposed by papers 1 to 3 by using modal logic and Kripke's semantics of possible worlds. Key words: Characteristic Formulas, Validity, PEP, Frame. 1. INTRODUCTION. Reasoning about knowledge and belief is an important issue in the research of multi-agent systems, and reasoning about other agents' states and actions, which is set up from the main ideas of situational calculus, is one of the most important directions in AI. Papers [1-3] put forward an axiom scheme for reasoning about others (RAO) in multi-agent systems, and a rule called the Position-Exchange-Principle (PEP), shown below, was abstracted: $C(\varphi \to \psi) \to (C\varphi \to C\psi)$, with $C$ a sequence of operators from $\{B_1, \ldots, B_n\}$. When the length of $C$ is at least 2, it reflects the mechanism of an agent reasoning about knowledge of others. For example, if $C$ is replaced with $B_i B_j$, then it should be $B_i B_j(\varphi \to \psi) \to (B_i B_j \varphi \to B_i B_j \psi)$. The axiom schema plays a useful role, like that of modus ponens and axiom K, when simplifying proofs. Though examples were demonstrated as proofs in papers [1-3], from the perspectives of both semantics and syntax, the rule is not so compelling. -

What Teachers Know About Validity of Classroom Tests: Evidence from a University in Nigeria

IOSR Journal of Research & Method in Education (IOSR-JRME), e-ISSN: 2320–7388, p-ISSN: 2320–737X, Volume 6, Issue 3 Ver. I (May. - Jun. 2016), PP 14-19, www.iosrjournals.org. What Teachers Know About Validity of Classroom Tests: Evidence from a University in Nigeria. Christiana Amaechi Ugodulunwa1, Sayita G. Wakjissa2. 1,2Department of Educational Foundations, Faculty of Education, University of Jos, Nigeria. Abstract: This study investigated university teachers’ knowledge of content and predictive validity of classroom tests. A sample of 89 teachers was randomly selected from five departments in the Faculty of Education of a university in Nigeria for the study. A 41-item Teachers’ Validity Knowledge Questionnaire (TVK-Q) was developed and validated. The data collected were analysed using descriptive statistics, t-test and ANOVA techniques. The results of the analysis show that the teachers have some knowledge of content-related evidence, procedures for ensuring coverage and adequate sampling of content and objectives, as well as correlating students’ scores in two measures for predictive validation. The study also reveals that the teachers lack adequate knowledge of criterion-related evidence of validity, the concept of face validity and sources of invalidity of test scores, irrespective of their gender, academic disciplines, years of teaching experience and ranks. The implications of the results were discussed and recommendations made for capacity building. Keywords: validity, teachers’ knowledge, classroom tests, evidence, university, Nigeria. I. Introduction. There is increased desire for school effectiveness and improvement at all levels of education in Nigeria. The central role of assessment in the school system for improving teaching and learning therefore demands that classroom tests should be valid and reliable measures of students’ real knowledge and skills and not testwiseness or test-taking abilities. -

Statistical Analysis 8: Two-Way Analysis of Variance (ANOVA)

Statistical Analysis 8: Two-way analysis of variance (ANOVA)

Research question type: Explaining a continuous variable with 2 categorical variables.

What kind of variables? Continuous (scale/interval/ratio) and 2 independent categorical variables (factors).

Common Applications: Comparing means of a single variable at different levels of two conditions (factors) in scientific experiments.

Example: The effective life (in hours) of batteries is compared by material type (1, 2 or 3) and operating temperature: Low (-10˚C), Medium (20˚C) or High (45˚C). Twelve batteries are randomly selected from each material type and are then randomly allocated to each temperature level. The resulting life of all 36 batteries is shown below:

Table 1: Life (in hours) of batteries by material type and temperature

| Material type | Low (-10˚C) | Medium (20˚C) | High (45˚C) |
|---|---|---|---|
| 1 | 130, 155, 74, 180 | 34, 40, 80, 75 | 20, 70, 82, 58 |
| 2 | 150, 188, 159, 126 | 136, 122, 106, 115 | 25, 70, 58, 45 |
| 3 | 138, 110, 168, 160 | 174, 120, 150, 139 | 96, 104, 82, 60 |

Source: Montgomery (2001)

Research question: Is there a difference in mean life of the batteries for differing material type and operating temperature levels?

In analysis of variance we compare the variability between the groups (how far apart are the means?) to the variability within the groups (how much natural variation is there in our measurements?). This is why it is called analysis of variance, abbreviated to ANOVA. This example has two factors (material type and temperature), each with 3 levels.

Hypotheses: The 'null hypothesis' might be: H0: There is no difference in mean battery life for different combinations of material type and temperature level. And an 'alternative hypothesis' might be: H1: There is a difference in mean battery life for different combinations of material type and temperature level. If the alternative hypothesis is accepted, further analysis is performed to explore where the individual differences are. -
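The between-group versus within-group comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the worksheet's own procedure: it assumes the balanced layout of Table 1 (four batteries per cell), the function and key names are made up for this example, and it stops at the F statistics without computing p-values.

```python
from statistics import mean

# Battery life (hours) from Table 1: life[material][temperature] -> 4 replicates.
life = {
    1: {"Low": [130, 155, 74, 180], "Medium": [34, 40, 80, 75], "High": [20, 70, 82, 58]},
    2: {"Low": [150, 188, 159, 126], "Medium": [136, 122, 106, 115], "High": [25, 70, 58, 45]},
    3: {"Low": [138, 110, 168, 160], "Medium": [174, 120, 150, 139], "High": [96, 104, 82, 60]},
}


def two_way_anova(data):
    """Sums of squares and F statistics for a balanced two-factor design."""
    rows = list(data)                        # levels of the first factor (material)
    cols = list(data[rows[0]])               # levels of the second factor (temperature)
    a, b = len(rows), len(cols)
    n = len(data[rows[0]][cols[0]])          # replicates per cell (assumed equal)

    grand = mean(y for r in rows for c in cols for y in data[r][c])
    row_mean = {r: mean(y for c in cols for y in data[r][c]) for r in rows}
    col_mean = {c: mean(y for r in rows for y in data[r][c]) for c in cols}
    cell_mean = {(r, c): mean(data[r][c]) for r in rows for c in cols}

    # Between-group variability: how far apart are the means?
    ss_a = b * n * sum((row_mean[r] - grand) ** 2 for r in rows)
    ss_b = a * n * sum((col_mean[c] - grand) ** 2 for c in cols)
    ss_ab = n * sum(
        (cell_mean[r, c] - row_mean[r] - col_mean[c] + grand) ** 2
        for r in rows for c in cols
    )
    # Within-group variability: spread of replicates around their cell mean.
    ss_e = sum((y - cell_mean[r, c]) ** 2 for r in rows for c in cols for y in data[r][c])

    ms_e = ss_e / (a * b * (n - 1))
    return {
        "ss": {"material": ss_a, "temperature": ss_b, "interaction": ss_ab, "error": ss_e},
        "f": {
            "material": (ss_a / (a - 1)) / ms_e,
            "temperature": (ss_b / (b - 1)) / ms_e,
            "interaction": (ss_ab / ((a - 1) * (b - 1))) / ms_e,
        },
    }


result = two_way_anova(life)
for source, f_value in result["f"].items():
    print(f"{source:12s} F = {f_value:.2f}")
```

Each F statistic is a ratio of a between-group mean square to the within-group (error) mean square; deciding whether to reject H0 would still require comparing it against the F distribution with the matching degrees of freedom.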

Curriculum B.Sc. Programme in Clinical Genetics 2020-21

BLDE (DEEMED TO BE UNIVERSITY). Choice Based Credit System (CBCS) Curriculum, B.Sc. Programme in Clinical Genetics 2020-21. Published by BLDE (DEEMED TO BE UNIVERSITY), Declared as Deemed to be University u/s 3 of UGC Act, 1956. The Constituent College: SHRI B. M. PATIL MEDICAL COLLEGE, HOSPITAL & RESEARCH CENTRE, VIJAYAPURA. Smt. Bangaramma Sajjan Campus, B. M. Patil Road (Sholapur Road), Vijayapura - 586103, Karnataka, India. BLDE (DU): Phone: +918352-262770, Fax: +918352-263303, Website: www.bldedu.ac.in, E-mail: [email protected] College: Phone: +918352-262770, Fax: +918352-263019, E-mail: [email protected]

Vision: To be a leader in providing quality medical education, healthcare & to become an Institution of eminence involved in multidisciplinary and translational research, the outcome of which can impact the health & the quality of life of people of this region.

Mission: To be committed to promoting sustainable development of higher education, including health science education consistent with statutory and regulatory requirements. To reflect the needs of changing technology. Make use of academic autonomy to identify dynamic educational programs. To adopt the global concepts of education in the health care sector.

SEMESTER-I

| Course Code | Course Name | Type of paper | Hours/Week | Duration of Exam (Hours) | IA Marks | Exam Marks | Total Marks | Credits |
|---|---|---|---|---|---|---|---|---|
| BCT 1.1 | Fundamentals of Cell Biology | T | 4 | 3 | 30 | 70 | 100 | 2 |
| BCT 1.1P | Fundamentals of Cell Biology | P | 3 | 3 | 15 | 35 | 50 | 1 |

-

The Substitutional Analysis of Logical Consequence

THE SUBSTITUTIONAL ANALYSIS OF LOGICAL CONSEQUENCE. Volker Halbach∗. Draft version · please don’t quote. 2nd June 2016. Consequentia ‘formalis’ vocatur quae in omnibus terminis valet retenta forma consimili. Vel si vis expresse loqui de vi sermonis, consequentia formalis est cui omnis propositio similis in forma quae formaretur esset bona consequentia [...] Iohannes Buridanus, Tractatus de Consequentiis (Hubien 1976, .3, p.22f). Abstract: A substitutional account of logical truth and consequence is developed and defended. Roughly, a substitution instance of a sentence is defined to be the result of uniformly substituting nonlogical expressions in the sentence with expressions of the same grammatical category. In particular atomic formulae can be replaced with any formulae containing. The definition of logical truth is then as follows: A sentence is logically true iff all its substitution instances are always satisfied. Logical consequence is defined analogously. The substitutional definition of validity is put forward as a conceptual analysis of logical validity at least for sufficiently rich first-order settings. In Kreisel’s squeezing argument the formal notion of substitutional validity naturally slots in to the place of informal intuitive validity. ∗I am grateful to Beau Mount, Albert Visser, and Timothy Williamson for discussions about the themes of this paper. 1 Formal validity. At the origin of logic is the observation that arguments sharing certain forms never have true premisses and a false conclusion. Similarly, all sentences of certain forms are always true. Arguments and sentences of this kind are formally valid. From the outset logicians have been concerned with the study and systematization of these arguments, sentences and their forms. -

Reliability and Validity: How Do These Concepts Influence Accurate Student Assessment?

LEARNING POINT. Reliability and validity: How do these concepts influence accurate student assessment?

There are two concepts central to accurate student assessment: reliability and validity. Very briefly explained, reliability refers to the consistency of test scores, where validity refers to the degree that a test measures what it purports to measure.

… scale reads 50 pounds heavier than expected. A second reading shows a weight that is 60 pounds lighter than expected. Clearly, there is something wrong with this scale! It is not measuring the person’s weight accurately or consistently. This is the basic idea behind reliability for educational measurements. In practice, educational assessment is not quite as straightforward as the bathroom scale example. There are different ways to think of, and express, the reliability of educational tests. Depending on the format and purpose of the …

Internal consistency reliability can be thought of as the degree to which responses to all the items on a test “hang together.” This is expressed as a correlation between 0 and 1, with values closer to 1 indicating higher reliability or internal consistency within the measure. Internal consistency values are impacted by the structure of the test. Tests that consist of more homogeneous items (i.e., all measure the same thing) will have higher internal consistency values than tests that have more divergent content. For example, we would expect a test that contains only American history items to have higher reliability (internal consistency) values than a test that contains American history, African history, and political science ques… -
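One common way to put a number on how well items "hang together" is Cronbach's alpha. The passage above does not name a specific statistic, so treating alpha as the measure is an assumption here, and the scores below are hypothetical. A minimal sketch:

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a table of scores.

    scores: one list per respondent, one entry per test item.
    """
    k = len(scores[0])                                   # number of items
    items = list(zip(*scores))                           # regroup scores by item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical data: 4 respondents answering 3 items on a 1-5 scale.
hypothetical_scores = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
]
alpha = cronbach_alpha(hypothetical_scores)
print(round(alpha, 3))
```

When respondents who score high on one item also score high on the others, the variance of the total score is large relative to the sum of the item variances, and alpha moves toward 1.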

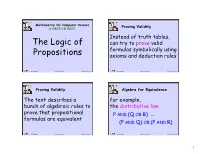

Propositional Logic (PDF)

Mathematics for Computer Science, 6.042J/18.062J. The Logic of Propositions. Albert R Meyer, February 14, 2014.

Proving Validity: Instead of truth tables, can try to prove valid formulas symbolically using axioms and deduction rules.

Algebra for Equivalence: The text describes a bunch of algebraic rules to prove that propositional formulas are equivalent, for example, the distributive law: P AND (Q OR R) ≡ (P AND Q) OR (P AND R), and DeMorgan’s law: NOT(P AND Q) ≡ NOT(P) OR NOT(Q). The set of rules for ≡ in the text are complete: if two formulas are ≡, these rules can prove it.

A Proof System: Another approach is to start with some valid formulas (axioms) and deduce more valid formulas using proof rules. Lukasiewicz’ proof system is a particularly elegant example of this idea. It covers formulas whose only logical operators are IMPLIES (→) and NOT.

Lukasiewicz’ Proof System. Axioms:
1) (¬P → P) → P
2) P → (¬P → Q)
3) (P → Q) → ((Q → R) → (P → R))
The only rule: modus ponens. Prove formulas by starting with axioms and repeatedly applying the inference rule. -
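The slides contrast symbolic proof with truth tables. As a cross-check, the truth-table side is easy to sketch: the snippet below enumerates every assignment and confirms that the three axioms and the two equivalences quoted above hold in all of them. The helper names `implies` and `is_valid` are invented for this illustration and are not part of the course material.

```python
from itertools import product


def implies(a, b):
    """Material implication: a -> b."""
    return (not a) or b


def is_valid(formula, num_vars):
    """True if the formula holds under every truth assignment."""
    return all(formula(*values) for values in product([False, True], repeat=num_vars))


# The three axioms of the proof system quoted above.
axiom1 = lambda p: implies(implies(not p, p), p)
axiom2 = lambda p, q: implies(p, implies(not p, q))
axiom3 = lambda p, q, r: implies(implies(p, q), implies(implies(q, r), implies(p, r)))

# Two formulas are equivalent when they agree under every assignment.
distributive = lambda p, q, r: (p and (q or r)) == ((p and q) or (p and r))
de_morgan = lambda p, q: (not (p and q)) == ((not p) or (not q))

checks = {
    "axiom 1": is_valid(axiom1, 1),
    "axiom 2": is_valid(axiom2, 2),
    "axiom 3": is_valid(axiom3, 3),
    "distributive law": is_valid(distributive, 3),
    "De Morgan's law": is_valid(de_morgan, 2),
}
for name, ok in checks.items():
    print(f"{name}: {'valid' if ok else 'not valid'}")
```

A truth table grows as 2^n in the number of variables, which is one motivation for the symbolic approaches the slides go on to describe.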

Validity and Reliability in Quantitative Studies

Research made simple. Validity and reliability in quantitative studies. Roberta Heale,1 Alison Twycross2. Evid Based Nurs: first published as 10.1136/eb-2015-102129 on 15 May 2015. 1 School of Nursing, Laurentian University, Sudbury, Ontario, Canada. 2 Faculty of Health and Social Care, London South Bank University, London, UK. Correspondence to: Dr Roberta Heale, School of Nursing, Laurentian University, Ramsey Lake Road, Sudbury, Ontario, Canada P3E2C6;

Evidence-based practice includes, in part, implementation of the findings of well-conducted quality research studies. So being able to critique quantitative research is an important skill for nurses. Consideration must be given not only to the results of the study but also the rigour of the research. Rigour refers to the extent to which the researchers worked to enhance the quality of the studies. In quantitative research, this is achieved through measurement of the validity and reliability.1

Validity. Validity is defined as the extent to which a concept is accurately measured in a quantitative study. For example, a survey designed to explore depression but which actually measures anxiety would not be considered valid. … have a high degree of anxiety? In another example, a test of knowledge of medications that requires dosage calculations may instead be testing maths knowledge. There are three types of evidence that can be used to demonstrate a research instrument has construct validity:
1 Homogeneity: meaning that the instrument measures one construct.
2 Convergence: this occurs when the instrument measures concepts similar to that of other instruments. Although if there are no similar instruments available this will not be possible to do.
3 Theory evidence: this is evident when behaviour is similar to theoretical propositions of the construct … -

A Validity Framework for the Use and Development of Exported Assessments

A Validity Framework for the Use and Development of Exported Assessments. By María Elena Oliveri, René Lawless, and John W. Young.1 1 The authors would like to acknowledge Bob Mislevy, Michael Kane, Kadriye Ercikan, and Maurice Hauck for their valuable input and expertise in reviewing various iterations of the framework as well as Priya Kannan, Don Powers, and James Carlson for their input throughout the technical review process. Copyright © 2015 Educational Testing Service. All Rights Reserved. ETS, the ETS logo, GRADUATE RECORD EXAMINATIONS, GRE, LISTENING. LEARNING. LEADING, TOEIC, and TOEFL are registered trademarks of Educational Testing Service (ETS). EXADEP and EXAMEN DE ADMISIÓN A ESTUDIOS DE POSGRADO are trademarks of ETS. Abstract: In this document, we present a framework that outlines the key considerations relevant to the fair development and use of exported assessments. Exported assessments are developed in one country and are used in countries with a population that differs from the one for which the assessment was developed. Examples of these assessments include the Graduate Record Examinations® (GRE®) and el Examen de Admisión a Estudios de Posgrado™ (EXADEP™), among others. Exported assessments can be used to make inferences about performance in the exporting country or in the receiving country. To illustrate, the GRE can be administered in India and be used to predict success at a graduate school in the United States, or similarly it can be administered in India to predict success at a graduate school in India.