Distributed Password Cracking with BOINC and Hashcat

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Truecrack Bruteforcing Per Volumi Truecrypt

Luca Vaccaro http://code.google.com/p/truecrack/ User development guide. TrueCrypt ©: software application used for on-the-fly encryption (OTFE). TrueCrack: brute-force password cracker for TrueCrypt © (Copyright) volume files, optimized with Nvidia CUDA technology. This software is based on TrueCrypt, freely available at http://www.truecrypt.org/ Master key: encrypts the volume data. Generated once, during volume creation, from a random value. Written inside the header section of the volume file. Header key: encrypts the header section of the volume file. Generated from a user password and a random salt (64 bytes). The salt is written in plain text in the first 64 bytes of the volume file. Hard disk encryption: standard block cipher mode: XTS; available ciphers: AES, Serpent, Twofish; default: AES. Key derivation function: standard algorithm: PBKDF2; available hashes: RIPEMD-160, SHA-512, Whirlpool; default: RIPEMD-160. [Diagram labels: master key, header key, plain data, cipher data, volume file + header] Opening a TrueCrypt volume means retrieving the master key from the header section. The header contains fields (true, crc32) for checking whether the decryption succeeded, that is, whether the password is right or wrong. [Diagram labels: user password, salt, header key, volume file, master key] CUDA, or Compute Unified Device Architecture, is a parallel computing architecture developed by Nvidia. CUDA gives developers access to the virtual instruction set and memory of the parallel computational elements in CUDA GPUs. Each GPU is a collection of multicores. Each core can run several CUDA «blocks», and each block can run a number of parallel «threads». 1. Level of parallelism: block 2. -
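The header-key derivation described in this excerpt (PBKDF2 over the user password and the 64-byte salt stored at the start of the volume file) can be sketched in Python. This is a minimal illustration, not TrueCrack's code: the iteration count, the derived key length, and the helper names `read_salt` and `derive_header_key` are assumptions made for the example, and SHA-512 is used because Python's `hashlib` always provides it.

```python
import hashlib
import os

SALT_SIZE = 64      # from the excerpt: the salt is the first 64 bytes of the volume file
ITERATIONS = 1000   # assumption for illustration; not stated in the excerpt
KEY_SIZE = 64       # assumption: length in bytes of the derived header key


def read_salt(volume_bytes: bytes) -> bytes:
    """Return the plain-text salt stored at the start of a volume image."""
    return volume_bytes[:SALT_SIZE]


def derive_header_key(password: str, salt: bytes, iterations: int = ITERATIONS) -> bytes:
    """Derive a candidate header key from a password guess with PBKDF2-HMAC-SHA512."""
    return hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, iterations, dklen=KEY_SIZE)


# A fake "volume" whose first 64 bytes are a random salt (hypothetical test data).
fake_volume = os.urandom(SALT_SIZE) + b"\x00" * 448
salt = read_salt(fake_volume)
key_for_guess = derive_header_key("correct horse", salt)
```

A cracker would repeat `derive_header_key` for every candidate password and try to decrypt the header with each result; that per-guess cost is why the key derivation function dominates the running time.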

Analysis of Password Cracking Methods & Applications

The University of Akron, IdeaExchange@UAkron. The Dr. Gary B. and Pamela S. Williams Honors College, Honors Research Projects, Spring 2015. Analysis of Password Cracking Methods & Applications. John A. Chester, The University of Akron. Follow this and additional works at: http://ideaexchange.uakron.edu/honors_research_projects Part of the Information Security Commons. Recommended Citation: Chester, John A., "Analysis of Password Cracking Methods & Applications" (2015). Honors Research Projects. 7. http://ideaexchange.uakron.edu/honors_research_projects/7 This Honors Research Project is brought to you for free and open access by The Dr. Gary B. and Pamela S. Williams Honors College at IdeaExchange@UAkron, the institutional repository of The University of Akron in Akron, Ohio, USA. It has been accepted for inclusion in Honors Research Projects by an authorized administrator of IdeaExchange@UAkron. Abstract -- This project examines the nature of password cracking and modern applications. Several applications for different platforms are studied. Different methods of cracking are explained, including dictionary attack, brute force, and rainbow tables. Password cracking across different mediums is examined. Hashing and how it affects password cracking is discussed. An implementation of two hash-based password cracking algorithms is developed, along with experimental results of their efficiency. I. Introduction Password cracking is the process of either guessing or recovering a password from stored locations or from a data transmission system [1]. -

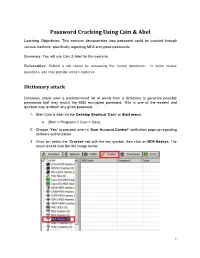

Password Cracking Using Cain & Abel

Password Cracking Using Cain & Abel Learning Objectives: This exercise demonstrates how passwords can be cracked through various methods, specifically MD5-hashed passwords. Summary: You will use Cain & Abel for this exercise. Deliverables: Submit a lab report by answering the review questions. In some review questions, you may provide screen captures. Dictionary attack: A dictionary attack uses a predetermined list of words from a dictionary to generate possible passwords that may match the MD5-hashed password. This is one of the easiest and quickest ways to obtain a given password. 1. Start Cain & Abel via the Desktop Shortcut ‘Cain’ or Start menu. a. (Start > Programs > Cain > Cain). 2. Choose ‘Yes’ to proceed when a ‘User Account Control’ notification pops up regarding software authorization. 3. Once it is running, select the ‘Cracker’ tab with the key symbol, then click on MD5 Hashes. The result should look like the image below. (Collaborative Virtual Computer Lab (CVCLAB), Penn State Berks) 4. As you might have noticed, we don’t have any passwords to crack, so for the next few steps we will create our own MD5-hashed passwords. First, locate the Hash Calculator among a row of icons near the top. Open it. 5. Next, type the word password into ‘Text to Hash’. It will generate a list of hashes for different hash algorithms. We will focus on the MD5 hash, so copy it. Then exit the calculator by clicking ‘Cancel’ (Fun Fact: hash input is case sensitive, so any slight change to the text changes the generated hashes; try changing a letter or two and you will see. -

User Authentication and Cryptographic Primitives

User Authentication and Cryptographic Primitives Brad Karp UCL Computer Science CS GZ03 / M030 16th November 2016 Outline • Authenticating users – Local users: hashed passwords – Remote users: s/key – Unexpected covert channel: the Tenex password-guessing attack • Symmetric-key cryptography • Public-key cryptography usage model • RSA algorithm for public-key cryptography – Number theory background – Algorithm definition Dictionary Attack on Hashed Password Databases • Suppose hacker obtains copy of password file (until recently, world-readable on UNIX) • Compute H(x) for 50K common words • String compare resulting hashed words against passwords in file • Learn all users’ passwords that are common English words after only 50K computations of H(x)! • Same hashed dictionary works on all password files in world! Salted Password Hashes • Generate a random string of bytes, r • For user password x, store [H(r,x), r] in password file • Result: same password produces different result on every machine – So must see password file before can hash dictionary – …and single hashed dictionary won’t work for multiple hosts • Modern UNIX: password hashes salted; hashed password database readable only by root Salted Password Hashes (continued) • Dictionary attack still possible after attacker sees password file! • Users should pick passwords that aren’t close to dictionary words. – So must see password file -
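The contrast these slides draw between unsalted and salted password files can be sketched as follows. This is a minimal illustration under stated assumptions: SHA-256 stands in for the slides' unspecified hash function H, a 16-byte salt is chosen arbitrarily, and the function names are hypothetical.

```python
import hashlib
import os


def H(data: bytes) -> str:
    # Assumption: SHA-256 stands in for the slides' unspecified hash H.
    return hashlib.sha256(data).hexdigest()


def store_unsalted(password: str) -> str:
    """Record kept in an unsalted password file: H(x)."""
    return H(password.encode())


def store_salted(password: str) -> tuple:
    """Record kept in a salted password file: [H(r, x), r] with random r."""
    r = os.urandom(16)
    return H(r + password.encode()), r


def attack_salted(record: tuple, words: list):
    """Dictionary attack on one salted record; needs the salt from the file."""
    digest, r = record
    for word in words:
        if H(r + word.encode()) == digest:
            return word
    return None


words = ["password", "letmein", "dragon"]

# Unsalted: one precomputed table works against every password file.
precomputed = {H(w.encode()): w for w in words}
cracked_unsalted = precomputed.get(store_unsalted("letmein"))

# Salted: the same password gives different records, so the table is useless,
# but hashing the dictionary per record still works once the file is seen.
record_a = store_salted("dragon")
record_b = store_salted("dragon")
cracked_salted = attack_salted(record_a, words)
```

The salt does not stop a dictionary attack; it forces the attacker to redo the dictionary hashing for each record, which is the point made on the final slide.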

Hash Crack: Password Cracking Manual

Hash Crack. Copyright © 2017 Netmux LLC All rights reserved. Without limiting the rights under the copyright reserved above, no part of this publication may be reproduced, stored in, or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise) without prior written permission. ISBN-10: 1975924584 ISBN-13: 978-1975924584 Netmux and the Netmux logo are registered trademarks of Netmux, LLC. Other product and company names mentioned herein may be the trademarks of their respective owners. Rather than use a trademark symbol with every occurrence of a trademarked name, we are using the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. The information in this book is distributed on an “As Is” basis, without warranty. While every precaution has been taken in the preparation of this work, neither the author nor Netmux LLC, shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in it. While every effort has been made to ensure the accuracy and legitimacy of the references, referrals, and links (collectively “Links”) presented in this book/ebook, Netmux is not responsible or liable for broken Links or missing or fallacious information at the Links. Any Links in this book to a specific product, process, website, or service do not constitute or imply an endorsement by Netmux of same, or its producer or provider. The views and opinions contained at any Links do not necessarily express or reflect those of Netmux. -

Password Security - When Passwords Are There for the World to See

Password Security - When Passwords are there for the World to see. Eleanore Young (Offense Department, scip AG), Marc Ruef (Editor; Research Department, scip AG), https://www.scip.ch Keywords: Bitcoin, Exchange, GitHub, Hashcat, Leak, OWASP, Password, Policy, Rapid, Storage 1. Preface This paper was written in 2017 as part of a research project at scip AG, Switzerland. It was initially published online at https://www.scip.ch/en/?labs.20170112 and is available in English and German. Providing our clients with innovative research for the information technology of the future is an essential part of our company culture. 2. Introduction The year 2016 has seen many reveals of successful attacks on user account databases; the most notable cases being the attacks on Yahoo [1] and Dropbox [2]. Thanks to recent advances not only in graphics processing hardware (GPUs), but also in password cracking software, it has become dangerously cheap to determine the actual passwords from … password from a hash without having to attempt a reversal of the hashing algorithm. Furthermore, if passwords are fed through hashing algorithms as is, two persons who happen to use the same password will also have the same hash value. As a countermeasure, developers have started adding random user-specific values (the salt) to the password before calculating the hash. The salt will then be stored alongside the password hash in the user account database. As such, even if two persons use the same password, their resulting hash value will be different due to the added salt. Modern GPU architectures are designed for large scale parallelism. Currently, a decent consumer-grade graphics card is capable of performing on the order of 1000 calculations simultaneously. -

Passwords CS 166: Information Security Authentication: Passwords

Passwords CS 166: Information Security Authentication: Passwords Prof. Tom Austin San José State University Access Control • Authentication: Are you who you say you are? – Determine whether access is allowed – human to machine, or machine to machine • Authorization: Are you allowed to do that? – Once you have access, what can you do? – Enforces limits on actions Chapter 7: Authentication Guard: Halt! Who goes there? Arthur: It is I, Arthur, son of Uther Pendragon, from the castle of Camelot. King of the Britons, defeater of the Saxons, sovereign of all England! (Monty Python and the Holy Grail) Then said they unto him, Say now Shibboleth: and he said Sibboleth: for he could not frame to pronounce it right. Then they took him, and slew him at the passages of Jordan: and there fell at that time of the Ephraimites forty and two thousand. (Judges 12:6) Authentication: Are You Who You Say You Are? • How to authenticate a human to a machine? • Can be based on… –Something you know • e.g. password –Something you have • e.g. smartcard –Something you are • e.g. fingerprint Something You Know • Passwords • Lots of things act as passwords! –PIN –Social security number –Mother’s maiden name –Date of birth –Name of your pet, etc. Why Passwords? • Why is “something you know” more popular than “something you have” and “something you are”? • Cost: passwords are free • Convenience: easier for admin to reset pwd than to issue a new thumb Authenticating with Passwords I am Thor Prove it My password is Mjölnir Authenticating with Passwords I am Thor Prove it My -

UGRD 2015 Spring Bugg Chris.Pdf (464.4Kb)

We could consider using the Mighty Cracker Logo located in the Network Folder MIGHTY CRACKER Chris Bugg Chris Hamm Jon Wright Nick Baum Password Security • Password security is important. • Users • Weak and/or reused passwords • Developers and Admins • Choose insecure storage algorithms. • Mighty Cracker • Show real world impact of poor password security. OVERVIEW • We made a hash cracker. • Passwords are stored as hashes to protect them from intruders. • Our program uses several methods to ‘crack’ those hashes. • Networking • Spread work to multiple machines. • Cross Platform OTHER HASH CRACKING PRODUCTS • Hashcat • Cain and Abel • John the Ripper • THC-Hydra • Ophcrack • Network support is rare. WHAT IS HASHING • A way to encode a password to help protect it. • A mathematical one-way function. • MD5 hash • cf4ff726403b8a992fd43e09dd7b5717 • SHA-256 hash • 951e689364c979cc3aa17e6b0022ce6e4d0e3200d1c22dd68492c172241e0623 SUPPORTED HASHING ALGORITHMS • Current Algorithms • MD5 • SHA-1 • SHA-224 • SHA-256 • SHA-384 • SHA-512 WAYS TO CRACK • Cracking Modes • Single User • Network Mode • Methods of Cracking: • Brute Force • Dictionary • Rainbow Table • GUI or Console BRUTE FORCE • Systematically checking all possible keys until the correct one is found. • In the worst case this traverses the entire search space. • Slowest, but will always find the solution if given enough time. DICTIONARY ATTACK • List of common passwords from leaks/hacks. • Many people choose common passwords • Written works of Shakespeare ~66,000 words • Oxford English Dictionary ~290,000 words • Small dictionary = 900,000 words • Medium dictionary = 14 million words • Large dictionary = 1.2 billion words RAINBOW TABLE • Can’t store all possible hash/key combinations. • 16 character key = 10^40 combinations • 10^50 atoms on Earth • Rainbow tables • Reduced storage. -

Hao Xu; Title: Improving Rainbow Table Cracking Accuracy; Mentor(S): Xianping Wang, CITG

Student(s): Hao Xu; Title: Improving Rainbow Table Cracking Accuracy; Mentor(s): Xianping Wang, CITG. Abstract: Password cracking is the process of recovering plaintext passwords from data that has been stored in or transmitted by computing systems; it is used in cryptanalysis, computer security and digital forensics. There are many situations that require password cracking: helping users recover forgotten passwords, gaining unauthorized access to systems, checking password strength, etc. The most popular and applicable password cracking method is the brute-force attack, with various improvements such as the dictionary attack and the rainbow table attack. Usually they are accelerated with GPUs, FPGAs and ASICs. Because passwords are usually stored as hash codes instead of plaintext, caches of precomputed password hash codes, known as rainbow tables, are widely used today to accelerate the cracking process. However, rainbow tables are usually created from exhaustive password dictionaries, which contain many unusual combinations of letters, symbols and digits and therefore decrease cracking efficiency and accuracy. The efficiency and accuracy of rainbow table cracking can be improved in many respects, such as password patterns, computing engines, etc. In this research, we will use many openly available leaked passwords to find their distribution by maximum likelihood estimation, design a password generator based on the found distribution, and generate rainbow tables from the passwords it produces. The accuracy of our rainbow table cracker will be compared with several popular password crackers: John the Ripper, Cain and Abel, Hashcat, and Ophcrack. -

A Survey of Password Attacks and Safe Hashing Algorithms

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 04 Issue: 12 | Dec-2017 www.irjet.net p-ISSN: 2395-0072 A Survey of Password Attacks and Safe Hashing Algorithms Tejaswini Bhorkar Student, Dept. of Computer Science and Engineering, RGCER, Nagpur, Maharashtra, India Abstract - To authenticate users, most web services use a pair of username and password. When a user wishes to log in to a web application of the service, he/she sends their user name and password to the web server, which checks if the given username already exists in the database and that the password is identical to the password set by that user. This last step of verifying the password of the user can be performed in different ways. The user password may be directly stored in the database or the hash value of the password may be stored. This survey will include the study of password hashing. It will also include the need for password hashing, algorithms used for password hashing, along with the attacks possible on the same. Key Words: Password, Hashing, Algorithms, bcrypt, scrypt, attacks, SHA-1 1. INTRODUCTION Web servers store the usernames and passwords of their users in order to authenticate them. If the web servers store the passwords of their users directly in the database, then in such case, password … message that would generate the same hash as that of the first message. Thus, the hash function should be resistant to second-pre-image. 1.2 Hashing Algorithms Different hash algorithms are used in order to generate hash values. Some commonly used hash functions include the following: • MD5 • SHA-1 • SHA-2 • SHA-3 MD5: This algorithm takes data of arbitrary length and produces a message digest of 128 bits (i.e. -

Password Cracker Tutorial

Password cracker tutorial In cryptanalysis and computer security, password cracking is the process of recovering passwords[1] from data that has been stored in or transmitted by a computer system. A common approach (brute-force attack) is to repeatedly try guesses for the password and to check them against an available cryptographic hash of the password.[2] The purpose of password cracking might be to help a user recover a forgotten password (installing an entirely new password is less of a security risk, but it involves System Administration privileges), to gain unauthorized access to a system, or to act as a preventive measure whereby system administrators check for easily crackable passwords. On a file-by-file basis, password cracking is utilized to gain access to digital evidence to which a judge has allowed access, when a particular file's permissions are restricted. Time needed for password searches The time to crack a password is related to bit strength (see password strength), which is a measure of the password's entropy, and the details of how the password is stored. Most methods of password cracking require the computer to produce many candidate passwords, each of which is checked. One example is brute-force cracking, in which a computer tries every possible key or password until it succeeds. With multiple processors, this time can be optimized through searching from the last possible group of symbols and the beginning at the same time, with other processors being placed to search through a designated selection of possible passwords.[3] More common methods of password cracking, such as dictionary attacks, pattern checking, word list substitution, etc. -
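The idea of giving each processor a designated selection of candidate passwords can be sketched as below. This is a simplified illustration rather than the scheme the excerpt describes in detail: it numbers every fixed-length candidate, cuts the index range into contiguous slices, and runs the "workers" one after another in a single process. The alphabet, the MD5 target, and all function names are assumptions made for the example.

```python
import hashlib
import string

ALPHABET = string.ascii_lowercase  # assumption: lowercase-only candidates


def candidate(index: int, length: int, alphabet: str = ALPHABET) -> str:
    """Map an index in [0, len(alphabet)**length) to a unique candidate string."""
    chars = []
    for _ in range(length):
        index, digit = divmod(index, len(alphabet))
        chars.append(alphabet[digit])
    return "".join(reversed(chars))


def partition(total: int, workers: int) -> list:
    """Split range(total) into contiguous (start, stop) slices, one per worker."""
    base, extra = divmod(total, workers)
    slices, start = [], 0
    for i in range(workers):
        size = base + (1 if i < extra else 0)
        slices.append((start, start + size))
        start += size
    return slices


def search(target_md5: str, length: int, start: int, stop: int):
    """Work unit for one worker: test every candidate in [start, stop)."""
    for i in range(start, stop):
        guess = candidate(i, length)
        if hashlib.md5(guess.encode()).hexdigest() == target_md5:
            return guess
    return None


length = 3
target = hashlib.md5(b"cat").hexdigest()
slices = partition(len(ALPHABET) ** length, workers=4)
# Each slice could be shipped to a different machine; here they run in turn.
results = [search(target, length, start, stop) for start, stop in slices]
```

Because the index-to-candidate mapping is deterministic, a coordinator only has to hand out `(start, stop)` pairs; no candidate list ever needs to be transmitted.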

Lab 3: MD5 and Rainbow Tables

Lab 3: MD5 and Rainbow Tables 50.020 Security Hand-out: February 9 Hand-in: February 16, 9pm 1 Objective • Hash passwords using MD5 • Crack MD5 hashes using brute-force and rainbow tables • Strengthen MD5 hashes using salt and crack the salted hashes again • Compete in the hash breaking competition 2 Hashing passwords using MD5 • To warm up, compute a couple of MD5 hashes of strings of your choice – Observe the length of the output, and whether it depends on the length of the input • To generate the MD5 hash using the shell, try echo -n "foobar" | md5sum to compute the hash of foobar • To generate the MD5 hash using Python, import the hashlib module and use its hexdigest() function. 3 Brute-Force and dictionary attack • For this exercise, use the fifteen hash values from hash5.txt • Create a md5fun.py Python 3 script to find the corresponding inputs that produce the challenge hash values • You only need to consider passwords with 5 lowercase and/or numeric characters. Compute the hash values for each possible combination. To help reduce the search space, we provide a dictionary of newline-separated common words in words5.txt. Note that a resulting hash may be generated by a permutation of any word in the list, e.g. hello -> lhelo • The elements of both hash5.txt and words5.txt are newline separated • Take note of the computation time your algorithm needs to reverse all fifteen hashes. Consider the timeit Python module: https://docs.python.org/3.6/library/timeit.html 4 Creating Rainbow Tables • Install the program rainbowcrack-1.6.1-linux64.zip (http://project-rainbowcrack.com/rainbowcrack-1.6.1-linux64.zip).
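The hashing and dictionary steps of this lab can be sketched in Python with `hashlib`. This is an illustrative sketch, not the lab's reference solution: the word list and target hashes are made up in place of `words5.txt` and `hash5.txt`, and the function names are hypothetical.

```python
import hashlib
from itertools import permutations


def md5_hex(text: str) -> str:
    """MD5 digest of a string as 32 hexadecimal characters."""
    return hashlib.md5(text.encode()).hexdigest()


def crack_with_permutations(target_hashes, words):
    """Hash every distinct permutation of each word and collect the matches."""
    targets = set(target_hashes)
    found = {}
    for word in words:
        # set() drops duplicate orderings caused by repeated letters.
        for perm in set(permutations(word)):
            guess = "".join(perm)
            digest = md5_hex(guess)
            if digest in targets:
                found[digest] = guess
    return found


# Stand-ins for words5.txt and hash5.txt (hypothetical data).
words = ["hello", "world"]
targets = [md5_hex("lhelo"), md5_hex("dlrow")]
found = crack_with_permutations(targets, words)
```

A 5-letter word has at most 120 orderings, so this search is far smaller than the full space of 36^5 (about 60 million) lowercase-and-digit strings that a pure brute-force pass would have to hash.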