Astrophotography with an Altitude-Azimuth Telescope Drive

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Quick Start Guide

Quick Start Guide: CEM120™ Center‐Balanced GoTo Equatorial Mount. Models: #7300, #7301, #7302
PACKAGE CONTENTS (the design and packaging may change from time to time without notice): 1X Telescope mount; 1X Hand controller (HC) – Go2Nova® #8407; 1X Counterweight (10 kg/22 lbs); 1X Stainless steel counterweight shaft (4.6 kg); 1X HC controller cable; 1X RS232‐RJ9 serial cable; 1X 12V5A AC adapter – 100‐240V; 4X Base mounting screws; 1X GPS external antenna; 1X Wi‐Fi external antenna; Quick Start Guide (this document)
ONLINE RESOURCES (at www.iOptron.com, under “Support”): User’s Manual; Tips for setting up and using the products; Hand controller and mount firmware upgrades (check online for the latest version)
STOP! Read the instructions BEFORE setting up and using the mount! Worm/gear system damage due to improper use will not be covered by warranty. Questions? Contact us at [email protected]
Instructions for the CEM120 Gear Switch and Axle Locking Knob: Both the RA and DEC axes have the same Gear Switch and Axle Locking Knob, and they operate the same way. As an example, here are the positions of the Gear Switch and Axle Locking Knob for the RA axis:
Fig. 1: When transferring or installing the mount, lock the Axle Locking Knob and disengage the Gear Switch, so that the RA axis won’t swing and no force is applied to the worm/ring gear.
Fig. 2: During the mount balancing process, pull and turn the Axle Locking Knob to release it and leave the Gear Switch in the disengaged position. The mount will now swing freely in the RA direction.

Allview Mount Manual

Multi-Purpose Computerized Mount Instruction Manual
Table of Contents
- Introduction
  - Warning
- Assembly
  - Assembling the AllView™ Mount
    - Tripod and Mount Setup
    - Assembling and Installing the Mounting Bracket
  - Fork Arm Configuration
    - Inner Mounting Configuration
    - Outer Mounting Configuration

Find Your Telescope. Find Yourself.

FIND YOUR TELESCOPE. FIND YOURSELF. 2008 PRODUCT CATALOG, WWW.MEADE.COM
TABLE OF CONTENTS
TELESCOPE SECTIONS: ETX® Series; LightBridge™ (Truss-Tube Dobsonians); LXD75™ Series; LX90-ACF™ Series; LX200-ACF™ Series; LX400-ACF™ Series; Max Mount™; Series 5000™ ED APO Refractors; A and DS-2000 Series
EXHIBITS: 1 - AutoStar®; 2 - AutoAlign™ with SmartFinder™; 3 - Optical Systems; 4 - Aperture; 5 - UHTC™; 6 - Slew Speed; 7 - AutoStar® II; 8 - Oversized Primary Mirrors; 9 - Advanced Pointing and Tracking; 10 - Electronic Focus and Collimation
ACCESSORIES: Imagers (LPI™, DSI, DSI II); Series 5000™ Eyepieces; Series 4000™ Eyepieces; Series 4000™ Filters; Accessory Kits; Imaging Accessories; Miscellaneous Accessories; Meade Optical Advantage; Meade 4M Community; Astrophotography Index/Information
©2007 MEADE INSTRUMENTS CORPORATION
.01 RECRUIT (ETX®): Starting out right
.02 ENTHUSIAST (LightBridge™): Going big on a budget
.03 HOT SHOT (LXD75™): Budding astrophotographer
.04 FANATIC (LX90-ACF™): Going deeper
.05 MASTER (LX200-ACF™): Expert astronomer
.06 GURU (LX400-ACF™): Dedicated astronomer
.07 SPECIALIST (Series 5000™ ED APO): Wide field views & images
.08 ECONOMIST (A/DS-2000 Series): On a budget
Astronomy is for everyone. That’s not to say everyone will become a serious comet hunter or astrophotographer.

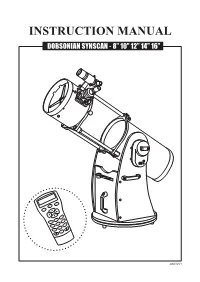

Dobsonian Synscan - 8” 10” 12” 14” 16”

INSTRUCTION MANUAL: DOBSONIAN SYNSCAN - 8” 10” 12” 14” 16” (180610V6-3.08, 240412V1)
TABLE OF CONTENTS
- Dobsonian Base Assembly
- Telescope Setup
- Primary Mirror Installation
- Aligning the Finderscope
- Focusing
- Power Requirements
- Powering the Dobsonian SynScan
- The SynScan AZ
- Introduction to the SynScan AZ
- SynScan AZ Hand Control
- Autotracking Operation
- Initial Setup
- Automatic Tracking
- AZ GoTo Operation
- Initial Setup
- Star Alignment

Patrick Moore's Practical Astronomy Series

Patrick Moore’s Practical Astronomy Series. Other Titles in this Series:
- Navigating the Night Sky: How to Identify the Stars and Constellations, Guilherme de Almeida
- Observing and Measuring Visual Double Stars, Bob Argyle (Ed.)
- Observing Meteors, Comets, Supernovae and other transient Phenomena, Neil Bone
- Human Vision and The Night Sky: How to Improve Your Observing Skills, Michael P. Borgia
- How to Photograph the Moon and Planets with Your Digital Camera, Tony Buick
- Practical Astrophotography, Jeffrey R. Charles
- Pattern Asterisms: A New Way to Chart the Stars, John Chiravalle
- Deep Sky Observing: The Astronomical Tourist, Steve R. Coe
- Visual Astronomy in the Suburbs: A Guide to Spectacular Viewing, Antony Cooke
- Visual Astronomy Under Dark Skies: A New Approach to Observing Deep Space, Antony Cooke
- Real Astronomy with Small Telescopes: Step-by-Step Activities for Discovery, Michael K. …
- Astronomy of the Milky Way: The Observer’s Guide to the Southern/Northern Sky, Parts 1 and 2 (hardcover set), Mike Inglis
- Astronomy of the Milky Way, Part 1: Observer’s Guide to the Northern Sky, Mike Inglis
- Astronomy of the Milky Way, Part 2: Observer’s Guide to the Southern Sky, Mike Inglis
- Observing Comets, Nick James and Gerald North
- Telescopes and Techniques: An Introduction to Practical Astronomy, Chris Kitchin
- Seeing Stars: The Night Sky Through Small Telescopes, Chris Kitchin and Robert W. Forrest
- Photo-guide to the Constellations: A Self-Teaching Guide to Finding Your Way Around the Heavens, Chris Kitchin
- Solar Observing Techniques, Chris Kitchin
- How to Observe the Sun Safely, Lee Macdonald
- The Sun in Eclipse, Sir Patrick Moore and Michael Maunder
- Transit …

Three Decades of Small-Telescope Science

Three Decades of Small-Telescope Science: A Report on the 2011 Society for Astronomical Sciences 30th Annual Symposium on Telescope Science. Robert K. Buchheim. For three decades, the Society for Astronomical Sciences has supported small telescope science, encouraged amateur research, and facilitated pro-am collaboration. On May 24-26, more than one hundred participants in the 30th anniversary SAS Symposium heard presentations covering a wide range of astronomical topics, reaching from the laboratory to the distant cosmos.
From Planets to Galaxies: Sky & Telescope Editor-in-Chief Bob Naeye opened the technical session with a review of the amateur contributions to exoplanet discovery and research through monitoring of transits and micro-lensing events. R. Jay GaBany described his participation as an astro-imager in the search for evidence of galactic mergers. His exquisite deep images taken with his 24-inch telescope displayed the diverse patterns of star streams that can be left behind when a dwarf galaxy is disrupted and absorbed by a larger galaxy. He also noted that not all faint structures are evidence of galactic mergers: the faint “loop” structure near M-81 is actually foreground “galactic cirrus” in our own Milky Way masquerading as a faux tidal loop.
Education and Outreach: Several papers related to education and outreach were presented. Debra Ceravolo applied her expertise in the generation and perception of colors, to describe a new method of merging narrow-band (e.g. Hα) and broad-band (e.g. RGB) images into a natural-color image that displays enhanced detail without glaring false colors.

A Simple Guide to Backyard Astronomy Using Binoculars Or a Small Telescope

A Simple Guide to Backyard Astronomy Using Binoculars or a Small Telescope, assembled by Carol Beigel in the Summer of 2007 (www.carolrpt.com/astroguide.htm)
Contents: Where and When to Go Look at the Stars; Dress for the Occasion; Red Flashlight; How to Find Things in the Sky; Charts; Books; Gizmos; Binoculars for Astronomy; Telescopes for Backyard Astronomy; Star Charts; List of 93 Treasures in the Sky with charts; Good Binocular Objects; Charts to Get Oriented in the Sky; Messier Objects; Thumbnail Photographs of Messier Objects
The Earth’s Moon: Moon Map courtesy of Night Sky Magazine (http://skytonight.com/nightsky, http://www.skyandtelescope.com/nightsky)
The wonderment of the night sky is a passion that must be shared. Tracking the phases of the Moon, if only to plan how much light it will put into the sky at night, and bookmarking the Clear Sky Clock, affectionately known as the Cloud Clock, become as common as breathing. The best observing nights fall about a week after the Full Moon until a few days after the New Moon. However, don't wait for ideal conditions: see what you can see every night, no matter where you are. I offer this simple guide to anyone who wants to look upward and behold the magnificence of the night sky.

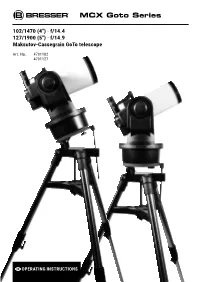

MCX Goto Series

MCX Goto Series: 102/1470 (4") · f/14.4 and 127/1900 (5") · f/14.9 Maksutov-Cassegrain GoTo telescope. Art. No. 4701102, 4701127. OPERATING INSTRUCTIONS (EN)
General warnings: This operating instruction booklet is to be considered as part of this device. Read the safety instructions and the operating manual carefully before using this device. Keep this manual in a safe place for future reference. If this device is sold or passed on, these operating instructions must be passed on to each subsequent owner/user of the product.
DANGER of bodily injury! Never look directly at, or near the sun with this device. There is a risk of PERMANENT …
… instructions carefully and do not attempt to power this device with anything other than power sources recommended in this manual, otherwise there is a DANGER OF AN ELECTRIC SHOCK! Never bend, squeeze or pull power cables, connecting cables, extensions or connectors. Protect cables from sharp edges and heat. Check this device, cables and connections for damage before use. Never attempt to operate a damaged device, or a device with damaged electrical parts! Damaged parts must be replaced immediately by an authorized service agent.
DANGER of material damage! Do not disassemble the device! In the event of a defect, please contact your dealer. They will contact our Service Center and can arrange the return of this device for repair if necessary. Do not subject the device to excessive vibrations. The manufacturer accepts no liability for voltage damage as a result of incorrectly inserted batteries, or the use of an unsuitable mains adapter!

Astrophotography: 10mm & 25mm Super Eyepieces (1.25” Barrel), Fully Baffled and Blackened, 2” to 1.25” Adaptor with T2 Thread

SKY-WATCHER CATALOGUE - 2019: OTAs (Optical Tube Assembly)
PHOTO GALLERY: M20 and M8, each taken with a Sky-Watcher Esprit 100ED and an unmodified DSLR at ISO1600, stacked in DSS and processed in StarTools. Taken by Colin.
BLACK DIAMOND ACHROMATIC REFRACTORS. Level of difficulty: intermediate/advanced. Models: SWBD1021-OTA (4 inch) and SWBD1201-OTA (5 inch). 9x50mm finderscope. Optional additional eyepieces available.
KEY FEATURES (value for money refractors): User level: intermediate & advanced users; Recommended for introductory astrophotography; Rolled steel, powder-coated tube; Cast aluminium tube rings, with “V” series dovetail bar; Premium 2” diagonal; 10mm & 25mm Super eyepieces (1.25” barrel); Fully baffled and blackened; 2” to 1.25” adaptor with T2 thread
The Sky-Watcher Black Diamond is an achromatic refractor that uses a 2-element fully multi-coated lens. It provides clear, crisp images of the popular celestial bodies and brighter deep sky objects. This Sky-Watcher telescope will reveal details in Saturn’s rings and Jupiter’s bands, whilst the cratered landscape of the Moon is a delight to observe over its different phases. It’s well suited for visual use and is a fine gateway to learn about astrophotography.
RECOMMENDED MOUNTS: EQ3; EQ5; EQ6-R; AZEQ5; AZEQ6
SWBD1021-OTA: aperture 4”/102mm, focal length 1000mm, finderscope 9x50mm, mag. 100x ; 36x, RRP $449.00
SWBD1201-OTA: aperture 5”/120mm, focal length 1000mm, finderscope 9x50mm, mag. 100x ; 36x

A Guide to Smartphone Astrophotography National Aeronautics and Space Administration

National Aeronautics and Space Administration. A Guide to Smartphone Astrophotography. Dr. Sten Odenwald, NASA Space Science Education Consortium, Goddard Space Flight Center, Greenbelt, Maryland. Cover designs and editing by Abbey Interrante. Cover illustrations, front: Aurora (Elizabeth Macdonald), moon (Spencer Collins), star trails (Donald Noor), Orion nebula (Christian Harris), solar eclipse (Christopher Jones), Milky Way (Shun-Chia Yang), satellite streaks (Stanislav Kaniansky), sunspot (Michael Seeboerger-Weichselbaum), sun dogs (Billy Heather). Back: Milky Way (Gabriel Clark). Two front cover designs are provided with this book. To conserve toner, begin document printing with the second cover. This product is supported by NASA under cooperative agreement number NNH15ZDA004C.
Table of Contents: Introduction; How to use this book; 1.0 Light Pollution; 2.0 Cameras

Smartstar® Cubepro GOTO Altaz Mount With

SmartStar® CubePro™ GOTO AltAz Mount with GPS #8200
Features:
- Grab ‘N Go altazimuth mount – The CubePro™: the only mount of its kind for ultimate rotation
- Metal worms and ring gears
- 8 lb payload for various scopes and cameras, with 3.1 lb mount head
- Go2Nova® 8408 hand controller with Advanced GOTONOVA® GOTO Technology
- 150,000+ object database with 60 user‐defined objects
- Large LCD screen with 4 lines and 21‐characters hand control with backlit LED buttons
- Dual‐axis servomotor with optical encoder
- 9 speed for precise mount moving control
- Built‐in 32‐channel Global Positioning System (GPS)
- Altazimuth/equatorial (AA/EQ) dual operation (need a wedge for EQ operation)
- Vixen‐type dovetail saddle
- 3 lbs counterweight and stainless steel CW shaft included
- Operate on 8 AA batteries (not included)
- 3/8" threads to fit on camera mount
- 100~240V AC power adapter included, optional 12V DC adapter (#8418) available
- Serial port for both hand controller and main board firmware upgrade
- Latest ASCOM and iOptron Commander for mount remote control
- RS232‐RJ9 serial cable for firmware upgrade and computer control
- Sturdy 1.25” stainless steel tripod
- Optional StarFi™ WiFi adapter #8434 for mount wireless control
Package Contents (may change without notice):
- The CubePro™ telescope mount with built‐in GPS
- Go2Nova® 8408 hand controller
- Controller cable
- 12V AC/DC adapter
- 1.25” tripod with stainless steel tripod legs
- 3 lbs counterweight and stainless steel CW shaft
- RS232‐RJ9 serial cable
- Two year limited warranty
iOptron Corp. | 6E Gill Street | Woburn, MA 01801 USA | (781) 569‐0200 | Toll Free (866) 399‐4587 | www.iOptron.com
Quick Start Guide: Center Tray knob locks in place. Step 1.

Backyard Astrophotography a How-To Story

Backyard Astrophotography: A How-To Story. Robert J. Vanderbei, 2007 February 13, Amateur Astronomers Association of Princeton. http://www.princeton.edu/~rvdb
Why Astrophotography? Long Exposures, Permanent Record, Digital Enhancement, Light Pollution! (Image panels: Visual Experience; Long Exposure; Light Pollution Subtracted.)
Some Pictures
Equipment, in order of IMPORTANCE: 1. Mount 2. Camera, Computer, Software 3. Telescope (OTA). NOTE: This talk is about deep sky astrophotography. For imaging the moon and the planets, the issues are different.
Astronomical CCD camera: • Pixel size: 6.45 × 6.45 microns • Dimensions: 1392 × 1040 • Quant. Eff.: ~65% • Readout Noise: ~7 electrons • Cooling: ~30 °C below ambient • Download: 3.5 seconds • Format: 16 bit • Weight: 350 g
Questar: A High-Quality Mount. Questar: $4,500. Meade ETX-90: $500. Optically: comparable. Mechanically: a world of a difference.
Example. OTA: 200mm f/3.5 Vivitar lens ($30). Mount: Questar. Camera: Starlight Express SXV-H9. Filter: Dichroic Hα.
Fundamental Principles: • Focal length determines field of view • F-ratio determines exposure time. Total exposure time = 156 mins. Field of view = 2.5°.
Combatting Light Pollution: Narrow-Band Filters
Visual Astronomy vs. Astrophotography: • Aperture determines photon flux • Focal length determines field of view • F-ratio determines exposure time
Image Acquisition: 1. Move equipment outside (3 minutes). Let cool (in parallel). 2. Polar align (2 minutes). 3. Manually point at a known star (1 minute). 4. Fire up MaximDL, my image acquisition software (0 minutes). 5. Focus on bright star (3 minutes). 6. Center star in image (1 minute). 7. Fire up Cartes du Ciel, my computer’s planetarium program (0 minutes). 8.
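The principle that focal length determines field of view can be checked against the numbers quoted in the preview above (a 200mm lens and a 1392 x 1040 sensor with 6.45 micron pixels). The Python sketch below is illustrative only and is not taken from the talk; the function names are hypothetical, and it assumes the standard thin-lens relation FOV = 2·atan(sensor size / (2 · focal length)).

```python
import math

# Figures quoted in the preview above.
PIXEL_UM = 6.45          # pixel size in microns
PIXELS_WIDE = 1392       # sensor width in pixels
PIXELS_HIGH = 1040       # sensor height in pixels
FOCAL_MM = 200.0         # focal length of the example lens in mm


def sensor_side_mm(pixels: int, pixel_um: float) -> float:
    """Physical length of one sensor side in millimetres."""
    return pixels * pixel_um / 1000.0


def field_of_view_deg(side_mm: float, focal_mm: float) -> float:
    """Angular field of view along one sensor side, in degrees."""
    return math.degrees(2.0 * math.atan(side_mm / (2.0 * focal_mm)))


def plate_scale_arcsec(pixel_um: float, focal_mm: float) -> float:
    """Sky angle covered by one pixel, in arcseconds (small-angle form)."""
    return 206.265 * pixel_um / focal_mm


width_mm = sensor_side_mm(PIXELS_WIDE, PIXEL_UM)
height_mm = sensor_side_mm(PIXELS_HIGH, PIXEL_UM)
fov_w = field_of_view_deg(width_mm, FOCAL_MM)
fov_h = field_of_view_deg(height_mm, FOCAL_MM)
scale = plate_scale_arcsec(PIXEL_UM, FOCAL_MM)

print(f"sensor: {width_mm:.2f} mm x {height_mm:.2f} mm")
print(f"field of view: {fov_w:.2f} deg x {fov_h:.2f} deg")
print(f"plate scale: {scale:.2f} arcsec/pixel")
```

Under these assumptions the long side of the frame spans roughly 2.6°, in line with the 2.5° field of view quoted in the preview. Doubling the focal length would roughly halve that angle, which is the sense in which focal length sets the field of view.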