Machine Learning for Condensed Matter Physics

Total pages: 16

File type: PDF, Size: 1020 Kb

Recommended publications

The Nature Index Journals

The current 12-month window on natureindex.com includes data from 57,681 primary research articles from the following science journals: Advanced Materials (1028 articles); American Journal of Human Genetics (173 articles); Analytical Chemistry (1633 articles); Angewandte Chemie International Edition (2709 articles); Applied Physics Letters (3609 articles); Astronomy & Astrophysics (1780 articles); Cancer Cell (109 articles); Cell (380 articles); Cell Host & Microbe (95 articles); Cell Metabolism (137 articles); Cell Stem Cell (100 articles); Chemical Communications (4389 articles); Chemical Science (995 articles); Current Biology (440 articles); Developmental Cell (204 articles); Earth and Planetary Science Letters (608 articles); Ecology (259 articles); Ecology Letters (120 articles); European Physical Journal C (588 articles); Genes & Development (193 articles); Genome Research (184 articles); Geology (270 articles); Immunity (159 articles); Inorganic Chemistry (1345 articles); Journal of Biological Chemistry (2639 articles); Journal of Cell Biology (229 articles); Journal of Clinical Investigation (298 articles); Journal of Geophysical Research: Atmospheres (829 articles); Journal of Geophysical Research: Oceans (493 articles); Journal of Geophysical Research: Solid Earth (520 articles); Journal of High Energy Physics (2142 articles); Journal of Neuroscience (1337 articles); Journal of the American Chemical Society (2384 articles); Molecular Cell (302 articles); Monthly Notices of the Royal Astronomical Society (2946 articles); Nano Letters

Self-Peeling of Impacting Droplets

LETTERS | Published online: 11 September 2017 | DOI: 10.1038/NPHYS4252

Self-peeling of impacting droplets
Jolet de Ruiter†, Dan Soto† and Kripa K. Varanasi*

Whether an impacting droplet [1] sticks or not to a solid surface has been conventionally controlled by functionalizing the target surface [2–8] or by using additives in the drop [9,10]. Here we report on an unexpected self-peeling phenomenon that can happen even on smooth untreated surfaces by taking advantage of the solidification of the impacting drop and the thermal properties of the substrate. We control this phenomenon by tuning the coupling of the short-timescale fluid dynamics (leading to interfacial defects upon local freezing) and the longer-timescale thermo-mechanical stresses (leading to global deformation). We establish a regime map that predicts whether a molten metal drop impacting onto a colder substrate [11–14] will bounce, stick or self-peel.

... formation, 10 µs, is much faster than the typical time for the droplet to completely crash [22], 2R/v ∼ 1 ms. These observations suggest that the number of ridges is set by a local competition between heat extraction, leading to solidification, and fluid motion, opposing it through local shear, mixing, and convection (see first stage of sketch in Fig. 2c). We propose that at short timescales (<1 ms, top row sketch of Fig. 2c), while the contact line of molten tin spreads outwards, a thin liquid layer in the immediate vicinity of the surface cools down until it forms a solid crust. At that moment, the contact line pins, while the liquid above keeps spreading on a thin air film squeezed underneath. Upon renewed touchdown of the liquid, a small air ridge remains trapped, forming the above-

Three-Component Fermions with Surface Fermi Arcs in Tungsten Carbide

LETTERS | https://doi.org/10.1038/s41567-017-0021-8

Three-component fermions with surface Fermi arcs in tungsten carbide
J.-Z. Ma 1,2, J.-B. He1,3, Y.-F. Xu1,2, B. Q. Lv1,2, D. Chen1,2, W.-L. Zhu1,2, S. Zhang1, L.-Y. Kong 1,2, X. Gao1,2, L.-Y. Rong2,4, Y.-B. Huang 4, P. Richard1,2,5, C.-Y. Xi6, E. S. Choi7, Y. Shao1,2, Y.-L. Wang 1,2,5, H.-J. Gao1,2,5, X. Dai1,2,5, C. Fang1, H.-M. Weng 1,5, G.-F. Chen 1,2,5*, T. Qian 1,5* and H. Ding 1,2,5*

Topological Dirac and Weyl semimetals not only host quasiparticles analogous to the elementary fermionic particles in high-energy physics, but also have a non-trivial band topology manifested by gapless surface states, which induce exotic surface Fermi arcs [1,2]. Recent advances suggest new types of topological semimetal, in which spatial symmetries protect gapless electronic excitations without high-energy analogues [3–11]. Here, using angle-resolved photoemission spectroscopy, we observe triply degenerate nodal points near the Fermi level of tungsten carbide with space group P6̄m2 (no.

... bulk valence and conduction bands, which are protected by the two-dimensional (2D) topological properties on time-reversal invariant planes in the Brillouin zone (BZ) [24,26]. In addition to four- and two-fold degeneracy, it has been shown that space-group symmetries in crystals may protect other types of degenerate point, near which the quasiparticle excitations are not the analogues of any elementary fermions described in the standard model. Theory has proposed three-, six- and eight-fold degenerate points at high-symmetry momenta in the BZ in several specific non-symmorphic space groups [3,4,10,11], where the combination of non-

Publications of Electronic Structure Group at UIUC

List of Publications and Theses
Richard M. Martin
January, 2013

Books

Richard M. Martin, "Electronic Structure: Basic theory and practical methods," Cambridge University Press, 2004. Reprinted 2005 and 2008. Japanese translation in two volumes, 2010 and 2012.
Richard M. Martin, Lucia Reining and David M. Ceperley, "Interacting Electrons," in progress, to be published by Cambridge University Press.

Theses Directed

Beaudet, Todd D., 2010, "Quantum Monte Carlo Study of Hydrogen Storage Systems"
Yu, M., 2010, "Energy density method for solids, surfaces, and interfaces"
Vincent, Jordan D., 2006, "Quantum Monte Carlo calculations of the optical gaps of Ge nanoclusters"
Das, Dyutiman, 2005, "Quantum Monte Carlo with a Stochastic Poisson Solver"
Romero, Nichols, 2005, "Density Functional Study of Fullerene-Based Solids: Crystal Structure, Doping, and Electron-Phonon Interaction"
Tsolakidis, Argyrios, 2003, "Optical response of finite and extended systems"
Mattson, William David, 2003, "The complex behavior of nitrogen under pressure: ab initio simulation of the properties of structures and shock waves"
Wilkens, Tim James, 2001, "Accurate treatments of Electronic Correlation: Phase Transitions in an Idealized 1D Ferroelectric and Modelling Experimental Quantum Dots"
Souza, Ivo Nuno Saldanha Do Rosário E, 2000, "Theory of electronic polarization and localization in insulators with applications to solid hydrogen"
Kim, Yong-Hoon, 2000, "Density-Functional Study of Molecules, Clusters, and Quantum Nanostructures: Development of

Calorimetric Study on Kinetics of Mesophase Transition in Thermotropic Copolyesters

IJRRAS 3 (1), April 2010. Talukdar & Achary, Study on Kinetics of Mesophase Transition

Calorimetric Study on Kinetics of Mesophase Transition in Thermotropic Copolyesters
Malabika Talukdar & P. Ganga Raju Achary
Department of Chemistry, Institute of Technical Education and Research (ITER), S 'O' A University, Bhubaneswar, Orissa, India

ABSTRACT
The crystallization behavior of macromolecules, especially that of liquid crystalline polymers, has been a subject of great interest. The crystallization process for the polyester samples under discussion is analyzed assuming a nucleation-controlled process and using the Avrami equation. The study mainly deals with crystallization from the mesophase of two thermotropic liquid crystalline polyesters exhibiting smectic order in their mesophasic state. To analyze the crystallization behavior of the polyesters, the crystallization rate constant and the Avrami exponent have been estimated. The total heat of crystallization and the entropy change during the transition have been calculated for both polyesters.

Keywords: Crystallization; Mesophase; Nucleation; Orthorhombic; Smectic.

1. INTRODUCTION
The transition kinetics of liquid crystal polymers is of great interest for controlling the effect of processing conditions on the morphology and on the final properties of the materials. These LC polymers show at least three main transitions: a glass transition, a thermotropic transition from the crystal to the mesophase, and the transition from the mesophase to the isotropic melt. The kinetics of phase transition in liquid crystals may be analyzed for three different processes: three-dimensional ordering from the isotropic melt, three-dimensional ordering from the mesophase, and formation of the mesophase from the isotropic melt.

Soft Matter Theory

Soft Matter Theory
K. Kroy
Leipzig, 2016*

Contents

I Interacting Many-Body Systems
1 Pair interactions and pair correlations
2 Packing structure and material behavior
3 Ornstein–Zernike integral equation
4 Density functional theory
5 Applications: mesophase transitions, freezing, screening

II Soft-Matter Paradigms
6 Principles of hydrodynamics
7 Rheology of simple and complex fluids
8 Flexible polymers and renormalization
9 Semiflexible polymers and elastic singularities

*The script is not meant to be a substitute for reading proper textbooks nor for dissemination. (See the notes for the introductory course for background information.) Comments and suggestions are highly welcome.

"Soft Matter" is one of the fastest growing fields in physics, as illustrated by the APS Council's official endorsement of the new Soft Matter Topical Group (GSOFT) in 2014 with more than four times the quorum, and by the fact that Isaac Newton's chair is now held by a soft matter theorist. It crosses traditional departmental walls and now provides a common focus and unifying perspective for many activities that formerly would have been separated into a variety of disciplines, such as mathematics, physics, biophysics, chemistry, chemical engineering, and materials science. It brings together scientists, mathematicians and engineers to study materials such as colloids, micelles, biological, and granular matter, but is much less tied to certain materials, technologies, or applications than to the generic and unifying organizing principles governing them. In the widest sense, the field of soft matter comprises all applications of the principles of statistical mechanics to condensed matter that is not dominated by quantum effects.

Department of Physics Review

The Blackett Laboratory
Department of Physics Review
Faculty of Natural Sciences
2008/09

Contents

Preface from the Head of Department
Academic Staff group photograph
General Departmental Information
Research Groups
  Astrophysics
  Condensed Matter Theory
  Experimental Solid State
  High Energy Physics
  Optics - Laser Consortium
  Optics - Photonics
  Optics - Quantum Optics and Laser Science
  Plasma Physics
  Space and Atmospheric Physics
  Theoretical Physics
Undergraduate Teaching
Postgraduate Studies
PhD degrees awarded (by research group)
Research Grants: Grants obtained by research group
Technical Development, Intellectual Property and Commercial Interactions (by research group)
Academic Staff
Administrative and Support Staff

Front cover: Laser probing images of jet propagating in ambient plasma and a density map from a 3D simulation of a nested, stainless steel, wire array experiment (see the Plasma Physics group page).

Preface from the Heads of Department

During 2008 much of the headline grabbing news focused on 'big science' with serious financial problems at the Science and Technology Facilities Council (STFC) (we note that some 40% of the Department's research expenditure is STFC derived) and the start-up of the Large Hadron Collider at CERN.

... were invited by Ian Pearson MP, the Minister of State for Science and Innovation, to initiate a broad ranging review of physics research under the chairmanship of Professor Bill Wakeham (Vice-Chancellor of Southampton University). The stated purpose of the review was to examine

... within the IOP Juno code of practice (available to download at www.ioppublishing.com/activity/diversity/Gender/Juno_code_of_practice/page_31619.html). As noted in the IOP document, "The code … sets out practical ideas for actions that departments can take to address the

Researchers Publish Review Article on the Physics of Interacting Particles 29 December 2020

Researchers publish review article on the physics of interacting particles
29 December 2020

... wide range of branches of physics, from biophysics to quantum mechanics. The article is a so-called review article and was written by physicists Michael te Vrugt and Prof. Raphael Wittkowski from the Institute of Theoretical Physics and the Center for Soft Nanoscience at the University of Münster, together with Prof. Hartmut Löwen from the Institute for Theoretical Physics II at the University of Düsseldorf. The aim of such review articles is to provide an introduction to a certain subject area and to summarize and evaluate the current state of research in this area for the benefit of other researchers. "In our case we deal with a theory used in very many areas, the so-called dynamical density functional theory (DDFT)," explains last author Raphael Wittkowski. "Since we deal with all aspects of the subject, the article turned out to be very long and wide-ranging."

DDFT is a method for describing systems consisting of a large number of interacting particles such as are found in liquids, for example. Understanding these systems is important in numerous fields of research such as chemistry, solid state physics or biophysics. This in turn leads to a large variety of applications for DDFT, for example in materials science and biology. "DDFT and related methods have been developed and applied by a number of researchers in a variety of contexts," says lead author Michael te Vrugt. "We investigated which approaches there are and how they are connected, and for this purpose we

Figure caption: Time axis showing the number of publications relating to dynamical density functional theory.

Nature Physics

nature physics
GUIDE TO AUTHORS

ABOUT THE JOURNAL

Aims and scope of the journal

Nature Physics publishes papers of the highest quality and significance in all areas of physics, pure and applied. The journal content reflects core physics disciplines, but is also open to a broad range of topics whose central theme falls within the bounds of physics. Theoretical physics, particularly where it is pertinent to experiment, also features. Research areas covered in the journal include:

• Quantum physics
• Atomic and molecular physics
• Statistical physics, thermodynamics and nonlinear dynamics
• Condensed-matter physics
• Fluid dynamics
• Optical physics
• Chemical physics
• Information theory and computation
• Electronics, photonics and device physics
• Nanotechnology
• Nuclear physics
• Plasma physics
• High-energy particle physics
• Astrophysics and cosmology
• Biophysics
• Geophysics

Nature Physics is committed to publishing top-tier original research in physics through a fair and rapid review process. The journal features two research paper formats: Letters and Articles. In addition to publishing original research, Nature Physics serves as a central source for top-quality information for the physics community through the publication of Commentaries, Research Highlights, News & Views, Reviews and Correspondence.

Editors and contact information

Like the other Nature titles, Nature Physics has no external editorial board. Instead, all editorial decisions are made by a team of full-time professional editors, who are PhD-level physicists. Information about the scientific background of the editors is available at <http://www.nature.com/nphys/team.html>. A full list of journal staff appears on the masthead.

Relationship to other Nature journals

Nature Physics is editorially independent, and its editors make their own decisions, independent of the other Nature journals.

Low Shear Rheological Behaviour of Two-Phase Mesophase Pitch

Published as: Shatish Ramjee, Brian Rand, Walter W. Focke. Low Shear Rheological Behaviour of Two-Phase Mesophase Pitch. Carbon 82 (2015), pp. 368-380. DOI: 10.1016/j.carbon.2014.10.082

Low Shear Rheological Behaviour of Two-Phase Mesophase Pitch
Shatish Ramjee, Brian Rand*, Walter W. Focke
SARChI Chair of Carbon Technology and Materials, Department of Chemical Engineering, University of Pretoria, Pretoria, South Africa, 0002

Abstract: The low shear rate rheology of two phase mesophase pitches derived from coal tar pitch has been investigated. Particulate quinoline insolubles (QI) stabilised the mesophase spheres against coalescence. Viscosity measurements over the range 10 to 10^6 Pa.s were made at appropriate temperature ranges. Increasing shear thinning behaviour was evident with increasing mesophase content. At low mesophase contents the dominant effect on the near Newtonian viscosity was temperature but at higher contents it was the shear rate; temperature dependence declined to near zero. The data indicated that agglomeration could be occurring at intermediate mesophase volume fractions, 0.2-0.3. The Krieger-Dougherty function and its emulsion analogue indicated that in this region the mesophase pitch emulsions actually behaved like 'hard' sphere systems and the effective volume fraction was estimated as a function of shear rate illustrating the change in extent of agglomeration. At the higher volume fractions approaching the maximum packing fraction, which could only be measured at higher temperatures, the shear thinning behaviour

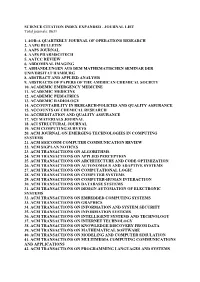

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total Journals: 8631

1. 4OR-A QUARTERLY JOURNAL OF OPERATIONS RESEARCH
2. AAPG BULLETIN
3. AAPS JOURNAL
4. AAPS PHARMSCITECH
5. AATCC REVIEW
6. ABDOMINAL IMAGING
7. ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER UNIVERSITAT HAMBURG
8. ABSTRACT AND APPLIED ANALYSIS
9. ABSTRACTS OF PAPERS OF THE AMERICAN CHEMICAL SOCIETY
10. ACADEMIC EMERGENCY MEDICINE
11. ACADEMIC MEDICINE
12. ACADEMIC PEDIATRICS
13. ACADEMIC RADIOLOGY
14. ACCOUNTABILITY IN RESEARCH-POLICIES AND QUALITY ASSURANCE
15. ACCOUNTS OF CHEMICAL RESEARCH
16. ACCREDITATION AND QUALITY ASSURANCE
17. ACI MATERIALS JOURNAL
18. ACI STRUCTURAL JOURNAL
19. ACM COMPUTING SURVEYS
20. ACM JOURNAL ON EMERGING TECHNOLOGIES IN COMPUTING SYSTEMS
21. ACM SIGCOMM COMPUTER COMMUNICATION REVIEW
22. ACM SIGPLAN NOTICES
23. ACM TRANSACTIONS ON ALGORITHMS
24. ACM TRANSACTIONS ON APPLIED PERCEPTION
25. ACM TRANSACTIONS ON ARCHITECTURE AND CODE OPTIMIZATION
26. ACM TRANSACTIONS ON AUTONOMOUS AND ADAPTIVE SYSTEMS
27. ACM TRANSACTIONS ON COMPUTATIONAL LOGIC
28. ACM TRANSACTIONS ON COMPUTER SYSTEMS
29. ACM TRANSACTIONS ON COMPUTER-HUMAN INTERACTION
30. ACM TRANSACTIONS ON DATABASE SYSTEMS
31. ACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMS
32. ACM TRANSACTIONS ON EMBEDDED COMPUTING SYSTEMS
33. ACM TRANSACTIONS ON GRAPHICS
34. ACM TRANSACTIONS ON INFORMATION AND SYSTEM SECURITY
35. ACM TRANSACTIONS ON INFORMATION SYSTEMS
36. ACM TRANSACTIONS ON INTELLIGENT SYSTEMS AND TECHNOLOGY
37. ACM TRANSACTIONS ON INTERNET TECHNOLOGY
38. ACM TRANSACTIONS ON KNOWLEDGE DISCOVERY FROM DATA
39. ACM TRANSACTIONS ON MATHEMATICAL SOFTWARE
40. ACM TRANSACTIONS ON MODELING AND COMPUTER SIMULATION
41. ACM TRANSACTIONS ON MULTIMEDIA COMPUTING COMMUNICATIONS AND APPLICATIONS
42. ACM TRANSACTIONS ON PROGRAMMING LANGUAGES AND SYSTEMS
43. ACM TRANSACTIONS ON RECONFIGURABLE TECHNOLOGY AND SYSTEMS
44.

Exploring the In Meso Crystallization Mechanism by Characterizing the Lipid Mesophase Microenvironment During the Growth of Singl

rsta.royalsocietypublishing.org
Research

Exploring the in meso crystallization mechanism by characterizing the lipid mesophase microenvironment during the growth of single transmembrane α-helical peptide crystals

Leonie van 't Hag1,3,4, Konstantin Knoblich2,5, Shane A. Seabrook6, Nigel M. Kirby7, Stephen T. Mudie7, Deborah Lau4, Xu Li1,3, Sally L. Gras1,3,8, Xavier Mulet4, Matthew E. Call2,5, Melissa J. Call2,5, Calum J. Drummond4,9 and Charlotte E. Conn9

1 Department of Chemical and Biomolecular Engineering, and 2 Department of Medical Biology, The University of Melbourne, Parkville, Victoria 3052, Australia
3 Bio21 Molecular Science and Biotechnology Institute, The University of Melbourne, 30 Flemington Road, Parkville, Victoria 3052, Australia
4 CSIRO Manufacturing Flagship, Private Bag 10, Clayton, Victoria 3169, Australia
5 Structural Biology Division, The Walter and Eliza Hall Institute of Medical Research, 1G Royal Parade, Parkville, Victoria 3052,

Cite this article: van 't Hag L et al. 2016 Exploring the in meso crystallization mechanism by characterizing the lipid mesophase microenvironment during the growth of single transmembrane α-helical peptide crystals. Phil. Trans. R. Soc. A 374: 20150125. http://dx.doi.org/10.1098/rsta.2015.0125

Accepted: 12 February 2016

One contribution of 15 to a discussion meeting issue 'Soft interfacial materials: from fundamentals to formulation'.

Subject Areas: physical chemistry, biochemistry

Keywords: in meso crystallization, cubic mesophase, local lamellar phase, DAP12.

Authors for correspondence: