Draco: Architectural and Operating System Support for System Call Security

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Flexible Lustre Management

Flexible Lustre management Making less work for Admins ORNL is managed by UT-Battelle for the US Department of Energy How do we know Lustre condition today • Polling proc / sysfs files – The knocking on the door model – Parse stats, rpc info, etc for performance deviations. • Constant collection of debug logs – Heavy parsing for common problems. • The death of a node – Have to examine kdumps and /or lustre dump Origins of a new approach • Requirements for Linux kernel integration. – No more proc usage – Migration to sysfs and debugfs – Used to configure your file system. – Started in lustre 2.9 and still on going. • Two ways to configure your file system. – On MGS server run lctl conf_param … • Directly accessed proc seq_files. – On MSG server run lctl set_param –P • Originally used an upcall to lctl for configuration • Introduced in Lustre 2.4 but was broken until lustre 2.12 (LU-7004) – Configuring file system works transparently before and after sysfs migration. Changes introduced with sysfs / debugfs migration • sysfs has a one item per file rule. • Complex proc files moved to debugfs • Moving to debugfs introduced permission problems – Only debugging files should be their. – Both debugfs and procfs have scaling issues. • Moving to sysfs introduced the ability to send uevents – Item of most interest from LUG 2018 Linux Lustre client talk. – Both lctl conf_param and lctl set_param –P use this approach • lctl conf_param can set sysfs attributes without uevents. See class_modify_config() – We get life cycle events for free – udev is now involved. What do we get by using udev ? • Under the hood – uevents are collect by systemd and then processed by udev rules – /etc/udev/rules.d/99-lustre.rules – SUBSYSTEM=="lustre", ACTION=="change", ENV{PARAM}=="?*", RUN+="/usr/sbin/lctl set_param '$env{PARAM}=$env{SETTING}’” • You can create your own udev rule – http://reactivated.net/writing_udev_rules.html – /lib/udev/rules.d/* for examples – Add udev_log="debug” to /etc/udev.conf if you have problems • Using systemd for long task. -

Version 7.8-Systemd

Linux From Scratch Version 7.8-systemd Created by Gerard Beekmans Edited by Douglas R. Reno Linux From Scratch: Version 7.8-systemd by Created by Gerard Beekmans and Edited by Douglas R. Reno Copyright © 1999-2015 Gerard Beekmans Copyright © 1999-2015, Gerard Beekmans All rights reserved. This book is licensed under a Creative Commons License. Computer instructions may be extracted from the book under the MIT License. Linux® is a registered trademark of Linus Torvalds. Linux From Scratch - Version 7.8-systemd Table of Contents Preface .......................................................................................................................................................................... vii i. Foreword ............................................................................................................................................................. vii ii. Audience ............................................................................................................................................................ vii iii. LFS Target Architectures ................................................................................................................................ viii iv. LFS and Standards ............................................................................................................................................ ix v. Rationale for Packages in the Book .................................................................................................................... x vi. Prerequisites -

Scalability of VM Provisioning Systems

Scalability of VM Provisioning Systems Mike Jones, Bill Arcand, Bill Bergeron, David Bestor, Chansup Byun, Lauren Milechin, Vijay Gadepally, Matt Hubbell, Jeremy Kepner, Pete Michaleas, Julie Mullen, Andy Prout, Tony Rosa, Siddharth Samsi, Charles Yee, Albert Reuther Lincoln Laboratory Supercomputing Center MIT Lincoln Laboratory Lexington, MA, USA Abstract—Virtual machines and virtualized hardware have developed a technique based on binary code substitution been around for over half a century. The commoditization of the (binary translation) that enabled the execution of privileged x86 platform and its rapidly growing hardware capabilities have (OS) instructions from virtual machines on x86 systems [16]. led to recent exponential growth in the use of virtualization both Another notable effort was the Xen project, which in 2003 used in the enterprise and high performance computing (HPC). The a jump table for choosing bare metal execution or virtual startup time of a virtualized environment is a key performance machine execution of privileged (OS) instructions [17]. Such metric for high performance computing in which the runtime of projects prompted Intel and AMD to add the VT-x [19] and any individual task is typically much shorter than the lifetime of AMD-V [18] virtualization extensions to the x86 and x86-64 a virtualized service in an enterprise context. In this paper, a instruction sets in 2006, further pushing the performance and methodology for accurately measuring the startup performance adoption of virtual machines. on an HPC system is described. The startup performance overhead of three of the most mature, widely deployed cloud Virtual machines have seen use in a variety of applications, management frameworks (OpenStack, OpenNebula, and but with the move to highly capable multicore CPUs, gigabit Eucalyptus) is measured to determine their suitability for Ethernet network cards, and VM-aware x86/x86-64 operating workloads typically seen in an HPC environment. -

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV)

Container and Kernel-Based Virtual Machine (KVM) Virtualization for Network Function Virtualization (NFV) White Paper August 2015 Order Number: 332860-001US YouLegal Lines andmay Disclaimers not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting: http://www.intel.com/ design/literature.htm. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at http:// www.intel.com/ or from the OEM or retailer. Results have been estimated or simulated using internal Intel analysis or architecture simulation or modeling, and provided to you for informational purposes. Any differences in your system hardware, software or configuration may affect your actual performance. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Tests document performance of components on a particular test, in specific systems. -

Architectural Decisions for Linuxone Hypervisors

July 2019 Webcast Virtualization options for Linux on IBM Z & LinuxONE Richard Young Executive IT Specialist Virtualization and Linux IBM Systems Lab Services Wilhelm Mild IBM Executive IT Architect for Mobile, IBM Z and Linux IBM R&D Lab, Germany Agenda ➢ Benefits of virtualization • Available virtualization options • Considerations for virtualization decisions • Virtualization options for LinuxONE & Z • Firmware hypervisors • Software hypervisors • Software Containers • Firmware hypervisor decision guide • Virtualization decision guide • Summary 2 © Copyright IBM Corporation 2018 Why do we virtualize? What are the benefits of virtualization? ▪ Simplification – use of standardized images, virtualized hardware, and automated configuration of virtual infrastructure ▪ Migration – one of the first uses of virtualization, enable coexistence, phased upgrades and migrations. It can also simplify hardware upgrades by make changes transparent. ▪ Efficiency – reduced hardware footprints, better utilization of available hardware resources, and reduced time to delivery. Reuse of deprovisioned or relinquished resources. ▪ Resilience – run new versions and old versions in parallel, avoiding service downtime ▪ Cost savings – having fewer machines translates to lower costs in server hardware, networking, floor space, electricity, administration (perceived) ▪ To accommodate growth – virtualization allows the IT department to be more responsive to business growth, hopefully avoiding interruption 3 © Copyright IBM Corporation 2018 Agenda • Benefits of -

Amazon Workspaces Guia De Administração Amazon Workspaces Guia De Administração

Amazon WorkSpaces Guia de administração Amazon WorkSpaces Guia de administração Amazon WorkSpaces: Guia de administração Copyright © Amazon Web Services, Inc. and/or its affiliates. All rights reserved. As marcas comerciais e imagens de marcas da Amazon não podem ser usadas no contexto de nenhum produto ou serviço que não seja da Amazon, nem de qualquer maneira que possa gerar confusão entre os clientes ou que deprecie ou desprestigie a Amazon. Todas as outras marcas comerciais que não pertencem à Amazon pertencem a seus respectivos proprietários, que podem ou não ser afiliados, patrocinados pela Amazon ou ter conexão com ela. Amazon WorkSpaces Guia de administração Table of Contents O que é WorkSpaces? ........................................................................................................................ 1 Features .................................................................................................................................... 1 Architecture ............................................................................................................................... 1 Acesse o WorkSpace .................................................................................................................. 2 Pricing ...................................................................................................................................... 3 Como começar a usar ................................................................................................................. 3 Conceitos básicos: Instalação -

Free Gnu Linux Distributions

Free gnu linux distributions The Free Software Foundation is not responsible for other web sites, or how up-to-date their information is. This page lists the GNU/Linux distributions that are Linux and GNU · Why we don't endorse some · GNU Guix. We recommend that you use a free GNU/Linux system distribution, one that does not include proprietary software at all. That way you can be sure that you are. Canaima GNU/Linux is a distribution made by Venezuela's government to distribute Debian's Social Contract states the goal of making Debian entirely free. The FSF is proud to announce the newest addition to our list of fully free GNU/Linux distributions, adding its first ever small system distribution. Trisquel, Kongoni, and the other GNU/Linux system distributions on the FSF's list only include and only propose free software. They reject. The FSF's list consists of ready-to-use full GNU/Linux systems whose developers have made a commitment to follow the Guidelines for Free. GNU Linux-libre is a project to maintain and publish % Free distributions of Linux, suitable for use in Free System Distributions, removing. A "live" distribution is a Linux distribution that can be booted The portability of installation-free distributions makes them Puppy Linux, Devil-Linux, SuperGamer, SliTaz GNU/Linux. They only list GNU/Linux distributions that follow the GNU FSDG (Free System Distribution Guidelines). That the software (as well as the. Trisquel GNU/Linux is a fully free operating system for home users, small making the distro more reliable through quicker and more traceable updates. -

Systemd and Linux Watchdog

systemd and Linux Watchdog Run a program at... login? = .profile file boot? = systemd What to do if software locks up? 21-4-11 CMPT 433 Slides #14 © Dr. B. Fraser 1 systemd ● systemd used by most Linux distros as first user- space application to be run by the kernel. – 'd' means daemon: ... – Use systemd to run programs at boot (and many other things). 21-4-11 2 Jack of All Trades 21-4-11 https://www.zdnet.com/article/linus-torvalds-and-others-on- linuxs-systemd/ 3 systemd ● Replaces old “init” system: – Manages dependencies and allows concurrency when starting up applications – Does many things: login, networking, mounting, etc ● Controversy – Violates usual *nix philosophy of do one thing well. http://www.zdnet.com/article/linus-torvalds-and-others-on-linuxs-systemd/ – Some lead developers are said to have a bad attitude towards fixing “their” bugs. ● It's installed on the Beaglebone, so we'll use it! – Copy your code to BBG's eMMC (vs run over NFS). 21-4-11 4 Create a systemd service Assume 11-HttpsProcTimer ● Setup .service file: example installed to /opt/ (bbg)$ cd /lib/systemd/system (bbg)$ sudo nano foo.service [Unit] Description=HTTPS server to view /proc on port 8042 Use [Service] absolute User=root paths WorkingDirectory=/opt/10-HttpsProcTimer-copy/ ExecStart=/usr/bin/node /opt/10-HttpsProcTimer-copy/server.js SyslogIdentifier=HttpsProcServer [Install] WantedBy=multi-user.target 21-4-11 5 Controlling a Service ● Configure to run at startup (bbg)$ systemctl enable foo.service ● Manually Starting/Stopping Demo: Browse to (bbg)$ systemctl start foo.service https://192.168.7.2:3042 after reboot – Can replace start with stop or restart ● Status (bbg)$ systemctl status foo.service (bbg)$ journalctl -u foo.service (bbg)$ systemctl | grep HTTPS 21-4-11 6 Startup Script Suggestions ● If your app needs some startup steps, try a script: – copy app to file system (not running via NFS) – add 10s delay at startup ● I have found that some hardware configuration commands can fail if done too soon. -

Systemd-AFV.Pdf

Facultade de Informática ADMINISTRACIÓN DE SISTEMAS OPERATIVOS GRADO EN INGENIERÍA INFORMÁTICA MENCIÓN EN TECNOLOGÍAS DE LA INFORMACIÓN SYSTEMD Nombre del grupo: AFV Estudiante 1: Sara Fernández Martínez email 1: [email protected] Estudiante 2: Andrés Fernández Varela email 2: [email protected] Estudiante 3: Javier Taboada Núñez email 3: [email protected] Estudiante 4: Alejandro José Fernández Esmorís email 4: [email protected] Estudiante 5: Luis Pita Romero email 5: [email protected] A Coruña, mayo de 2021. Índice general 1 Introducción 1 1.1 ¿Qué es un sistema init? ................................... 1 1.2 Necesidad de una alternativa ................................. 1 2 ¿Qué es systemd? 2 2.1 Un poco de historia ...................................... 2 3 Units 5 4 Compatibilidad de systemd con SysV 6 5 Utilities 7 6 Systemctl 12 7 Systemd-boot: una alternativa a GRUB 15 8 Ventajas y Desventajas de Systemd 16 8.1 Ventajas ............................................ 16 8.1.1 Principales ventajas ................................. 16 8.1.2 Más en profundidad ................................. 16 8.2 Desventajas .......................................... 18 8.2.1 Principales desventajas ................................ 18 8.2.2 Más en profundidad ................................. 18 9 Conclusiones 20 Bibliografía 21 i Índice de figuras 2.1 Ejemplo ejecución machinectl shell. ............................. 3 2.2 Ejemplo ejecución systemd-analyze. ............................. 3 2.3 Ejemplo ejecución systemd-analyze-blame. -

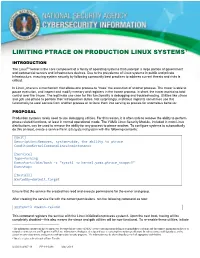

Limiting Ptrace on Production Linux Systems

1 LIMITING PTRACE ON PRODUCTION LINUX SYSTEMS INTRODUCTION The Linux®2 kernel is the core component of a family of operating systems that underpin a large portion of government and commercial servers and infrastructure devices. Due to the prevalence of Linux systems in public and private infrastructure, ensuring system security by following community best practices to address current threats and risks is critical. In Linux, ptrace is a mechanism that allows one process to “trace” the execution of another process. The tracer is able to pause execution, and inspect and modify memory and registers in the tracee process: in short, the tracer maintains total control over the tracee. The legitimate use case for this functionality is debugging and troubleshooting. Utilities like strace and gdb use ptrace to perform their introspection duties. Not surprisingly, malicious implants sometimes use this functionality to steal secrets from another process or to force them into serving as proxies for anomalous behavior. PROPOSAL Production systems rarely need to use debugging utilities. For this reason, it is often safe to remove the ability to perform ptrace-related functions, at least in normal operational mode. The YAMA Linux Security Module, included in most Linux distributions, can be used to remove the ability for any process to ptrace another. To configure systems to automatically do this on boot, create a service file in /etc/systemd/system with the following contents: [Unit] Description=Removes, system-wide, the ability to ptrace ConditionKernelCommandLine=!maintenance [Service] Type=forking Execstart=/bin/bash –c “sysctl -w kernel.yama.ptrace_scope=3” Execstop= [Install] WantedBy=default.target Ensure that the service file created has read and execute permissions for the owner and group. -

Daemon Management Under Systemd ZBIGNIEWSYSADMIN JĘDRZEJEWSKI-SZMEK and JÓHANN B

Daemon Management Under Systemd ZBIGNIEWSYSADMIN JĘDRZEJEWSKI-SZMEK AND JÓHANN B. GUÐMUNDSSON Zbigniew Jędrzejewski-Szmek he systemd project is the basic user-space building block used to works in a mixed experimental- construct a modern Linux OS. The main daemon, systemd, is the first computational neuroscience lab process started by the kernel, and it brings up the system and acts as and writes stochastic simulators T and programs for the analysis a service manager. This article shows how to start a daemon under systemd, of experimental data. In his free time he works describes the supervision and management capabilities that systemd pro- on systemd and the Fedora Linux distribution. vides, and shows how they can be applied to turn a simple application into [email protected] a robust and secure daemon. It is a common misconception that systemd is somehow limited to desktop distributions. This is hardly true; similarly to Jóhann B. Guðmundsson, the Linux kernel, systemd supports and is used on servers and desktops, but Penguin Farmer, IT Fireman, Archer, Enduro Rider, Viking- it is also in the cloud and extends all the way down to embedded devices. In Reenactor, and general general it tries to be as portable as the kernel. It is now the default on new insignificant being in an installations in Debian, Ubuntu, Fedora/RHEL/CentOS, OpenSUSE/SUSE, insignificant world, living in the middle of the Arch, Tizen, and various derivatives. North Atlantic on an erupting rock on top of the world who has done a thing or two in Systemd refers both to the system manager and to the project as a whole. -

Instant OS Updates Via Userspace Checkpoint-And

Instant OS Updates via Userspace Checkpoint-and-Restart Sanidhya Kashyap, Changwoo Min, Byoungyoung Lee, and Taesoo Kim, Georgia Institute of Technology; Pavel Emelyanov, CRIU and Odin, Inc. https://www.usenix.org/conference/atc16/technical-sessions/presentation/kashyap This paper is included in the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16). June 22–24, 2016 • Denver, CO, USA 978-1-931971-30-0 Open access to the Proceedings of the 2016 USENIX Annual Technical Conference (USENIX ATC ’16) is sponsored by USENIX. Instant OS Updates via Userspace Checkpoint-and-Restart Sanidhya Kashyap Changwoo Min Byoungyoung Lee Taesoo Kim Pavel Emelyanov† Georgia Institute of Technology †CRIU & Odin, Inc. # errors # lines Abstract 50 1000K 40 100K In recent years, operating systems have become increas- 10K 30 1K 20 ingly complex and thus more prone to security and per- 100 formance issues. Accordingly, system updates to address 10 10 these issues have become more frequently available and 0 1 increasingly important. To complete such updates, users 3.13.0-x 3.16.0-x 3.19.0-x May 2014 must reboot their systems, resulting in unavoidable down- build/diff errors #layout errors Jun 2015 time and further loss of the states of running applications. #static local errors #num lines++ We present KUP, a practical OS update mechanism that Figure 1: Limitation of dynamic kernel hot-patching using employs a userspace checkpoint-and-restart mechanism, kpatch. Only two successful updates (3.13.0.32 34 and → which uses an optimized data structure for checkpoint- 3.19.0.20 21) out of 23 Ubuntu kernel package releases.