Structural Segmentation of Dhrupad Vocal Bandish Audio Based on Tempo

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The Concept of Tala in Semi-Classical Music

The Concept of Tala in Semi-Classical Music. Peter Manuel. Writers on Indian music have generally had less difficulty defining tala than raga, which remains a somewhat abstract, intangible entity. Nevertheless, an examination of the concept of tala in Hindustani semi-classical music reveals that, in many cases, tala itself may be a more elusive and abstract construct than is commonly acknowledged, and, in particular, that just as a raga cannot be adequately characterized by a mere schematic of its ascending and descending scales, similarly, the number of matra-s in a tala may be a secondary or even irrelevant feature in the identification of a tala. The treatment of tala in thumri parallels that of raga in thumri, sharing thumri's characteristic folk affinities, regional variety, stress on sentimental expression rather than theoretical complexity, and a distinctively loose and free approach to theoretical structures. The liberal use of alternate notes and the casual approach to raga distinctions in thumri find parallels in the loose and inconsistent nomenclature of light-classical tala-s and the tendency to identify them not by their theoretical matra-count, but instead by less formal criteria like stress patterns. Just as most thumri raga-s have close affinities with and, in many cases, origins in the diatonic folk modes of North India, so also the tala-s of thumri (viz., Deepchandi, in its fourteen- and sixteen-beat varieties, Kaharva, Dadra, and Sitarkhani) appear to have derived from folk meters. Again, like the flexible, free thumri raga-s, the folk meters adopted in semi-classical music acquired some, but not all, of the theoretical and structural characteristics of their classical counterparts. -

12 NI 6340 MASHKOOR ALI KHAN, Vocals ANINDO CHATTERJEE, Tabla KEDAR NAPHADE, Harmonium MICHAEL HARRISON & SHAMPA BHATTACHARYA, Tanpuras

From left to right: Pandit Anindo Chatterjee, Shampa Bhattacharya, Ustad Mashkoor Ali Khan, Michael Harrison, Kedar Naphade. Photo credit: Ira Meistrich, edited by Tina Psoinos. TRANSCENDENCE. Raga Desh: Man Rang Dani, drut bandish in Jhaptal (9:45); Raga Shahana: Janeman Janeman, madhyalaya bandish in Teental (14:17); Raga Jhinjhoti: Daata Tumhi Ho, madhyalaya bandish in Rupak tal, and Aaj Man Basa Gayee, drut bandish in Teental (25:01); Raga Bhupali: Deem Dara Dir Dir, tarana in Teental (4:57); Raga Basant: Geli Geli Andi Andi Dole, drut bandish in Ektal (9:05). Recorded on 29-30 May, 2015 at Academy of Arts and Letters, New York, NY. Produced and Engineered by Adam Abeshouse. Edited, Mixed and Mastered by Adam Abeshouse. Co-produced by Shampa Bhattacharya, Michael Harrison and Peter Robles. Sponsored by the American Academy of Indian Classical Music (AAICM). Photography, Cover Art and Design by Tina Psoinos. ... at Carnegie Hall, the Rubin Museum of Art and Raga Music Circle in New York, MITHAS in Boston, Raga Samay Festival in Philadelphia and many other venues. His awards are many, but include the Sangeet Natak Akademi Puraskar by the Sangeet Natak Akademi, New Delhi, 2015 and the Gandharva Award by the Hindusthan Art & Music Society, Kolkata, ... A True Master of Khayal; Recollections of a Disciple. In 1999 I was invited to meet Ustad Mashkoor Ali Khan, or Khan Sahib as we respectfully call him, and to accompany him on tanpura at an Indian music festival in New Jersey. -

DEFAULTER PART-A.Xlsx

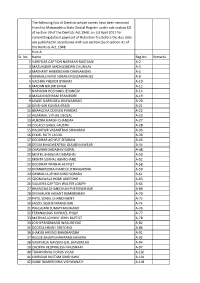

The following lists of Dentists whose names have been removed from the Maharashtra State Dental Register under sub-section (2) of section 39 of the Dentists Act,1948, on 1st April 2017 for committing default payment of Retention fee before the due date are published in accordance with sub section (5) of section 41 of the Dentists Act, 1948. Part-A Sr. No. Name Reg.No. Remarks 1 VARIFDAR CAPTION NARIMAN RUSTAMJI A-2 2 MAZUMDAR MADHUSNDAN CHUNILAL A-3 3 MAEHANT HARIKRISHAN DHANAMDAS A-5 4 GINWALS MINO SORABJI NUSSAWANJEE A-9 5 VACHHA PHEROX BYRAMJI A-10 6 MADAN BALBIR SINGH A-12 7 NARIMAN HOSHANG JEHANGIV A-14 8 MASANI BEHRAM FRAMRORE A-19 9 JAWLE NARENDEA BHAWANRAO A-20 10 DINSHAW KAVINA ERAEH A-21 11 BHARUCHA COOVER PHIRORE A-22 12 AGARWAL VITHAL DEOLAL A-23 13 AURORA HARISH CHANDAR A-27 14 COLACO ISABEL FAUSTIN A-28 15 HALDIPWR VASANTRAO SHAMRAO A-33 16 EEKIEL RUTH JACOB A-39 17 DEODHAR ACHYUT SITARAM A-43 18 DESIAI BHAGWENTRAI GULABSHAWEAR A-44 19 CHAVHAN SADASHIV GOPAL A-48 20 MISTRI JEHANGIR DADABHAI A-50 21 MEHTA SUKHAL ABHECHAND A-52 22 DEODHAR PRABHA ACHYUT A-58 23 DHANBHOORA MANECK JEHANGIRSHA A-59 24 GINWALLA JEHMI MINO SORABJI A-61 25 SOONAWALA HOMI ARDESHIR A-63 26 SIGUEIRA CAPTION WALTER JOSEPH A-65 27 BHANICHA DHANJISHAH PHERIZWSHAW A-66 28 DESHMUKH VASANT RAMKRISHAN A-70 29 PATIL SONAL CHANDHAKNT A-72 30 JAGOS JASSI BYARANSHAW A-74 31 PAHLAJANI SUMATI MUKHAND A-76 32 FERANANDAS RUPHAEL PHILIP A-77 33 MATHIAS JOHNNY JOHN BAPTIST A-78 34 JOSHI PANDMANG WASUDEVAO A-82 35 DCOSTA HENNY SERTORIO A-86 36 JHAKUR ARVIND BHAKHANDRA -

Ruchira Panda, Hindusthani Classical Vocalist and Composer

Ruchira Panda, Hindusthani Classical Vocalist and Composer. SUR-TAAL, 1310 Survey Park, Kolkata - 700075, India. Contact: Phone: +91 9883786999 (India), +1 3095177955 (USA). Email: [email protected]. Website: ruchirapanda.com. Highlights: ❑ “A” grade artist of All India Radio. ❑ Playback singer, ‘Traditional development of Thumri’, National TV Channel, India. ❑ Discography: DVD (Live) “Diyaraa Main Waarungi” from Raga Music; CD Saanjh (Questz World); also on iTunes, Google Play Music, Amazon Music, Spotify. ❑ Performed in almost all of the major Indian Classical Conferences (Tansen, Harivallabh, Dover Lane, Saptak, Gunijan, AACM, LearnQuest etc.) in different countries (India, USA, Canada, UK, Bangladesh, Uzbekistan, Tajikistan). ❑ Empaneled Artist of Hindustani Classical Music: ICCR (Indian Council for Cultural Relations, Government of India), and SPICMACAY. ❑ Fellow of The Ministry of Culture, Govt. of India (2009). ❑ JADUBHATTA AWARD, by Salt Lake Music Festival (2009); SANGEET SAMMAN, by Salt Lake Silver Music Festival (2011); SANGEET KALARATNA, by Matri Udbodhan Ashram, Patna. Synopsis: Ruchira Panda, from Kolkata, India, is the current torch-bearer of the Kotali Gharana, a lineage of classical vocal masters originally hailing from Kotalipara in pre-partition Bengal. She is a solo vocalist and composer who performs across all the major Indian Classical festivals in India, USA, Canada and Europe. Her signature voice has a deep timbre and high-octane power that also has mind-blowing flexibility to render superfast melodic patterns and intricate emotive glides across microtones. Her repertoire includes Khayal, the grand rendering of an Indian Raga, starting with improvisations at a very slow tempo where each beat cycle can take upwards of a minute, and gradually accelerating to an extremely fast tempo where improvised patterns are rendered at lightning speed. -

Vital Aspects of Bandish(Composition)In Hindustani Music Dr Abhay Dubey Assistant Professor Faculty of Performing Arts the M.S.University of Baroda Gujarat

© 2018 JETIR October 2018, Volume 5, Issue 10, www.jetir.org (ISSN-2349-5162). Vital Aspects of Bandish (Composition) in Hindustani Music. Dr Abhay Dubey, Assistant Professor, Faculty of Performing Arts, The M.S. University of Baroda, Gujarat. Before we discuss the vital aspects of bandish, let us establish what a Bandish is: in Hindustani Classical Music, Bandish is a Persian term used for Composition. A Bandish is a condensed core statement that holds numerous possibilities bound inside it, possibilities that only the imagination of a skilled performer may unwind and make musically manifest. It is the Bandish that ensures consistency and integrity of a particular melodic and rhythmic thought. For the formation of a Bandish, the following points are salient. (1) Ability to express much more by fewer words. (2) Raga selection as per poetry. (3) Selection of words as per Raag/time concept. (4) Laya/taal selection as per the poetry and temperament of raag. (5) Word Synthesis as per taal. (6) Purity of raag. (7) Synthesis of Sam & Mukhda in a Bandish. 1. ABILITY TO EXPRESS MUCH MORE BY FEWER WORDS. A Bandish is ranked as an ideal Bandish if its few words have the potential to say much. The more meaning the words express, the more the Bandish is embellished from the point of view of language and poetry. The beauty of the language or the poetry is made manifest by the artistic synthesis of chhand and alankar. In a Bandish, the usage of words should be according to the theme and the thoughts of the Bandish. For instance, Piyarawa, mitva, Piya, etc. These words are synonyms of Piya (Husband), yet each word has a different beauty, flair and fragrance, and different emotions embedded in it. -

CULTURAL EVOLUTION: A Case Study of Indian Music

CULTURAL EVOLUTION: A Case Study of Indian Music. Sangeet Natak, Journal of the Sangeet Natak Akademi, New Delhi, Vol. 35, Jan-Mar. 1975. Wim van der Meer. This paper studies the relations between music, culture and the society which surrounds it, focussing on change as an evolutionary process. The classical music of North India serves as an example, but a similar approach may be applied to other branches of human culture, e.g. science. Evolution and change. The complications involved in a study of social or cultural change can be realised if we see the vast amount of different and often contradictory theories about the phenomenon. Percy S. Cohen, after summarising a number of these theories, comes to the conclusion that there is no single theory which can explain social change: “Social systems can provide many sources of change” (1968: 204). This confusion is mainly due to the conceptual difficulties involved in the idea of change. Change is related to continuity, it describes a divergence from what we would normally consider non-change. Frederick Barth makes this clear when he says: “For every analysis [of change] it is therefore necessary for us to make explicit our assertions about the nature of continuity” (1967: 665). Change is irreversibly connected to the progression of time. Hence we can only distinguish between degrees of change, which we have to relate to a fictive projection of what continuity is. This appears also from Cohen's suggestion that we should distinguish between minor changes (which he considers part of persistence) and fundamental changes (which are genuine changes of the system) (1968: 175-8). A classical and powerful idea concerning change is that of evolution. -

The Chandigarh Sangeet Natak

DEPARTMENT OF PUBLIC RELATIONS, CHANDIGARH ADMINISTRATION. Press Release. Chandigarh, 15th Feb, 2016: The Chandigarh Sangeet Natak Akademi organized a Classical Vocal Music Concert by eminent Agra Gharana Guru Pandit Yashpaul and virtuoso Kashish Mittal, a young bureaucrat, under the Guru Shishya tradition series as part of the ongoing CSNA Basant Utsav-2016 at the Tagore Theatre here today. Akademi Chairman Kamal Arora welcomed the Chief Guest Sh. Anurag Aggarwal, IAS, Secretary Home and Cultural Affairs, and Sh. Jitender Yadav, IAS, Director Culture U.T., who honoured the artists. Greeted with applause, Pandit Yashpaul, brilliantly supported by Sh. Kashish Mittal, IAS, opened the concert with an elaborate alaap in nom tom format in an exposition of ‘Raga Puriya Kalyan’. The duo traversed all three octaves with adroit mastery over voice culture. They presented a slow-paced (Vilambit) bandish ‘Par Karo Meri Naiyaa’ in ek taal before a captivating fast (Drut Laya) composition ‘Ghadiyaan Ginat Rahi’. The duo then presented two compositions in Raag Kedar, including the Madhya laya Punjabi bandish ‘Rehnda E Yaar Dill Vich’ and a Drut bandish ‘Kan Kunwar Albela’. Having established an instant rapport with the audience, Pandit Yashpaul and Kashish Mittal presented another Punjabi Bandish ‘Tendri Yaad Satawae’ in Raga Kafi Kanra. ‘Kaliyaan Sang Kart Rang Raliyaan’ was a masterpiece by Pandit Ji and Kashish Mittal in Raag Bahar. The audience reciprocated the melodious presentation with claps. The duo reflected the fragrance of Basant Ritu in their next offering ‘Aai Basant Bahar’ in raga Basant Bahar. The concert melted into the fervor of the Basant with thrilling compositions ‘Fagva Bridge Dekhan Ko Chali’ and ‘Dolat Sab Naar’ in pure Raga Basant. -

THE FUTURISTIC and INNOVATIVE CHANGES in INDIAN MUSIC Dr.Amanpreet Kaur Kang Asst

THE FUTURISTIC AND INNOVATIVE CHANGES IN INDIAN MUSIC. Dr. Amanpreet Kaur Kang, Asst. Professor, Music Vocal, GGS Khalsa College for Women, Jhar Sahib, Ludhiana. There is no society on earth without music. Music is a vehicle of religious, social and symbolic life. Different theories, opinions and inferences concerning the origin of music have developed from time to time. These cannot be fully supported, although some clues and information about music remain. But one thing is sure: the concept and consciousness of music is not recent. It is supposed that after the recognition of Udat, Anudat and Swarit, the other four swaras were recognized, and then the saptak came into existence. It is the creativity of human beings that discovered various melodious tunes from these seven swaras. These creative humans discovered the passage to freedom, self-realization and peace by making musical sounds. Music also appeared as a medium of expression and as a special language. A number of ragas were formed out of the seven swaras. The raga is supreme, and its gradual unfolding is the subject of classical Hindustani music presentation. Compositions, or Bandishes, are based upon the twin concepts of raga and tala, Indian music's unique gift to the world. Today Indian music is best represented by the form Khayal, which came into its own by the mid eighteenth century, when the popularity of the Dhrupad form began to wane steadily after having enjoyed the status of primary form of main-stage vocal classical music for over three hundred years. With the Mughal invasion, innovations took place in Indian music. For example, the Dhrupad gayan shelly was replaced by the Khayal gayan shelly. In the effort to create a new style of singing that is easier than Dhrupad, the Khayal gayan shelly was invented. A singer could expound the raag as much as he could, according to his nature and imagination. -

University Faculty Details Page on DU Web-Site

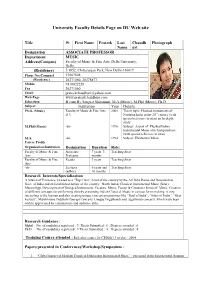

University Faculty Details Page on DU Web-site. Title: Dr. First Name: Prateek. Last Name: Chaudhuri. Designation: ASSOCIATE PROFESSOR. Department: MUSIC. Address (Campus): Faculty of Music & Fine Arts, Delhi University, Delhi. (Residence): J-1852, Chittaranjan Park, New Delhi-110019. Phone No. (Campus): 27667608. (Residence): 26271060, 26278877. Mobile: 9810022228. Fax: 26271060. Email: [email protected]. Web-Page: www.prateekchaudhuri.com. Education: B.Com (H), Sangeet Shiromani, M.A. (Music), M.Phil (Music), Ph.D. Subject, Institution, Year, Details: Ph.D. (Music), Faculty of Music & Fine Arts, D.U., 2001, Thesis topic: Plucked instruments of Northern India in the 20th century (with special reference to sitar), an in-depth study; M.Phil (Music), -do-, 1996, Subject: Ascent of Plucked Indian Instrumental Music after Independence (with special reference to sitar); M.A., -do-, 1994, Subject: Hindustani Music. Career Profile (Organization/Institution, Designation, Duration, Role): Faculty of Music & Fine Arts, Associate Professor, 7 years 3 months, Teaching Sitar; Faculty of Music & Fine Arts, Reader, 3 years, Teaching Sitar; -do-, Lecturer (adhoc), 6 years and 10 months, Teaching Sitar. Research Interests/Specialization: A Sitarist of Eminence, Graded as a “Top Class” Artist of the country by the All India Radio and Doordarshan, Govt. of India and an Established Artiste of the country. North Indian Classical Instrumental Music (Sitar), Musicology, Development of Stringed Instruments, Creative Music, Fusion & Crossover forms of Music, Creation of different concepts for performing thereby presenting Indian Classical Music in various forms making it very interesting to the layman and also creating unique concept programmes like “Soul of India”, “Sitars of India”, “Sitar Ecstasy”, Murchhnana Paddhati Concept Concerts, Unique Trigulbandi and Jugalbandi concerts, which have been widely appreciated by connoisseurs and audience alike. -

Sangeet Kendra Catalogue Copy

Sangeet Kendra Catalogue. Baithak Series (Vocal). Siddheshwari Devi. Volume 1 - Poorab Thumri: 1. Dole Re Joban Madamaati 2. Rasa Ki Boond Pari 3. Jhamak Jhuki Aayi 4. E Ri Haanri Nanandiyaa 5. Ranaji Main Bairaagan Hungi. Volume 2 - Poorab Thumri: 1. Saba Ras Barase Nayanavaa 2. Shaam Bhai Bin Shyam 3. Are Guiyaan Daravajavaa Mein 4. Aavo Aavo Nagariyaa. Badi Motibai. Volume 1 - Poorab Thumri: 1. Vasant Ritu Bahaar and Piya Sang Karat Boli Tholi 2. Bahiyaan Naa Maroro Giridhaari 3. Paani Bhare Ri Kaun Albele Ki Naar Jhamaajham 4. Saiyaandaman. Volume 2 - Poorab Thumri: 1. Tori Tirachhi Nazar Laage Pyari Re 2. Sab Ban Ambuvaa Mahurila 3. Naahak Laaye Gawanvaa. Volume 3 - Poorab Thumri: 1. Raga Kamod - Khayal 2. Raga Mishra Sindhura - Thumri 3. Raga Bageshree - Khayal 4. Raga Mishra Kafi - Tappa 5. Raga Mishra Jhinjhoti - Tappa. Ustad Sharafat Hussain Khan. Volume 1 - Agra Gharana: 1. Raga Saavani - Alap (Vilambit) 2. Raga Saavani - Alap (Madhyalaya) 3. Raga Saavani - Alap (Drut) 4. Raga Saavani - Khayal in Vilambit Ektala (E Ri Mai Aaj). Volume 2 - Agra Gharana: 1. Raga Saavani - Khayal in Drut Teentala (E Ri Faagun Jhula Daare) 2. Raga Nat Bihaag - Khayal in Drut Teentala (Jhan Jhan Jhan Jhan Payal Baaje Ma) 3. Raga Sohini - Drut Teentala. Volume 3 - Agra Gharana: 1. Raga Jayjaywanti - Khayal Vilambit Ektala (Vaaye Kahaan Kal Ho) 2. Raga Jayjaywanti - Khayal Drut Teentala (Naadaan Akhiyaan Laagi). Volume 4 - Agra Gharana: 1. Raga Raamkali - Khayal in Vilambit Ektala (Aaj Radhe Tore Badan Par) 2. Raga Raamkali - Khayal in Drut Teentala (Un Sang Laagi) 3. Raga Bhairavi - Dadra (Banaavo Batiyaan). Dagar Brothers (Nasir Zahiruddin Dagar and Nasir Faiyazuddin Dagar). Volume 1 - Dhrupad: 1. -

Hindustani Music (Vocal/ Instrumental) Submitted to University Grants Commission New Delhi Under Choice Based Credit System

Appendix-XXXIII, E.C. dated 03.07.2017/14-15.07.2017 (Page No. 353-393). Syllabus of B.A. (Prog.) Hindustani Music (Vocal/Instrumental), Submitted to University Grants Commission, New Delhi, Under Choice Based Credit System. CHOICE BASED CREDIT SYSTEM 2015, DEPARTMENT OF MUSIC, FACULTY OF MUSIC & FINE ARTS, UNIVERSITY OF DELHI, DELHI-110007. CHOICE BASED CREDIT SYSTEM IN B.A. PROGRAMME HINDUSTANI MUSIC (VOCAL/INSTRUMENTAL). Columns: Semester; Core Course; Ability Enhancement Compulsory Course (AECC); Skill Enhancement Course (SEC); Elective: Discipline Specific (DSE); Elective: Generic (GE). Semester I: Core: English/MIL-1; DSC-1A Theory of Indian Music: Unit-1, Practical: Unit-2. AECC: (English/MIL Communication)/Environmental Science. Semester II: Core: Theory of Indian Music General & Biographies: Unit-I, Practical: Unit-II. AECC: Environmental Science/(English/MIL Communication). Semester III: Core: Theory: Unit-1, Ancient Granthas & Contribution of musicologists, Practical: Unit-2. SEC-1: Value based & Practical Oriented course for Hindustani Music (Vocal/Instrumental), Credits-4. Semester IV: Core: Theory: Unit-1, Medieval Granthas & Contribution of Musicians, Practical: Unit-2. SEC-2: Value based & Practical Oriented course for Hindustani Music (Vocal/Instrumental), Credits-4. Semester V: SEC-3: Value based & Practical Oriented course for Hindustani Music (Vocal/Instrumental), Credits-4. DSE-1A Theory: Vocal/Instrumental (Hindustani Music), Credit-2. DSE-2A Practical: Vocal/Instrumental (Hindustani Music), Credit-4. Generic Elective-1 (Vocal/Instrumental Music), Credit-6. Semester VI: SEC-4: Value based & Practical Oriented course for Hindustani Music (Vocal/Instrumental), Credits-4. DSE-1B Theory: Vocal/Instrumental (Hindustani Music), Credit-2. DSE-2B Practical: Vocal/Instrumental (Hindustani Music), Credit-4. Generic Elective-2 (Vocal/Instrumental Music), Credit-6. -

Rhythmic Structure Based Segmentation for Hindustani Music

Rhythmic Structure based segmentation for Hindustani music. T.P. Vinutha, Prof. Preeti Rao, Department of Electrical Engineering, Indian Institute of Technology Bombay. OUTLINE • Introduction – Hindustani Music concert – Bases of segmentation, our approach – Problem addressed, motivation • Rhythm – Percussion rhythmic structure as cue • Implementation – Database – Audio segmentation system • Conclusion & Future scope • References. INTRODUCTION • Hindustani music concert is highly structured polyphonic music, with percussive and melodic accompaniments • Khyal style of Hindustani music – is a predominantly improvised music tradition operating within a well-defined raga (melodic) and tala (rhythmic) framework. Raag Deshkar, Piya Jaag. Bases for segmentation • Musical Structure – Repetitions, contrast and homogeneity of musical aspects such as • Melody – Steady in Alap and rendered around ‘sa’, few gamaks and spread throughout the octave in sthayi, traversing up to upper octave in Antara, more dynamic in Tan and Sargam • Timbre – Instrument change or change in singer – Vowels are used in Alap, Aakar vistar and Tans, but consonants in Bol-alap, Sargam sections • Rhythm – Rhythm refers to all aspects of musical time patterns – the inherent tempo of the melodic piece, the way syllables of the lyrics are sung or the way strokes in instruments are played • Rhythm is used as cue for segmentation – As it is more explicitly changing in most of the sections. Problem addressed, Motivation • Problem addressed • Automatically locate the points of significant change in the rhythmic structure of the