A Sampling of Molecular Dynamics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Home, Yard, and Garden Pest Newsletter

Of UNIVERSE V NOTICE: Return or renew all Library Materjalsl The Minimum Fee for each Lost Book is $50.00. The person charging this material is responsible for its return to the library from which it was withdrawn on or before the Latest Date stamped below. Theft, mutilation, and underlining of books are reasons for discipli- nary action and ntay result in dismissal from the University. To renew call Telephone Center, 333-8400 UNIVERSITY OF ILLINOIS LIBRARY AT URBANA-CHAMPAIGN L16I—O-1096 DEC 1 3 1999 ^^RICULTURE LIBRARY —— ^ 5 :OOPERATIVE EXTENSION SERVICE HOME, YARD GARDF^^ I DrcT ^ '^-' ( f collegeliege of agricultural, consumer and environmental sciences, university ( Illinois at urbana-champaign A Illinois natural history survey, champaign NcvviLtriER (,PR25B97 ^G Ubrar^ No. 1» April 16, 1997 newsletter coordinator, at (217) 333-6650. If you wish issues the Home,Yard and Garden This is the first of 22 of to discuss a specific article in the newsletter, contact Pest Newsletter. It will be prepared by Extension specialists the author whose name appears in parentheses at the in plant pathology, agricultural entomology, horticulture, end of the article. The author's telephone number will and agricultural engineering. Timely, short paragraphs usually be listed at the end of the newsletter. (Phil about pests of the home and its surroundings will make up Nixon) the newsletter When control measures are given, both chemical and nonchemical suggestions (when effective) will be given. PLANT DISEASES Welcome Plant Clinic Opens May 1 Welcome to the first issue of the 1997 Home, Yard The plant clinic serves as a clearinghouse for plant and Garden Pest Newsletter. -

Order Form Full

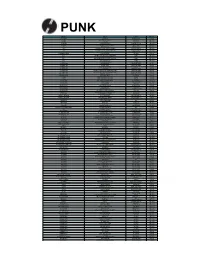

PUNK ARTIST TITLE LABEL RETAIL 100 DEMONS 100 DEMONS DEATHWISH INC RM90.00 4-SKINS A FISTFUL OF 4-SKINS RADIATION RM125.00 4-SKINS LOW LIFE RADIATION RM114.00 400 BLOWS SICKNESS & HEALTH ORIGINAL RECORD RM117.00 45 GRAVE SLEEP IN SAFETY (GREEN VINYL) REAL GONE RM142.00 999 DEATH IN SOHO PH RECORDS RM125.00 999 THE BIGGEST PRIZE IN SPORT (200 GR) DRASTIC PLASTIC RM121.00 999 THE BIGGEST PRIZE IN SPORT (GREEN) DRASTIC PLASTIC RM121.00 999 YOU US IT! COMBAT ROCK RM120.00 A WILHELM SCREAM PARTYCRASHER NO IDEA RM96.00 A.F.I. ANSWER THAT AND STAY FASHIONABLE NITRO RM119.00 A.F.I. BLACK SAILS IN THE SUNSET NITRO RM119.00 A.F.I. SHUT YOUR MOUTH AND OPEN YOUR EYES NITRO RM119.00 A.F.I. VERY PROUD OF YA NITRO RM119.00 ABEST ASYLUM (WHITE VINYL) THIS CHARMING MAN RM98.00 ACCUSED, THE ARCHIVE TAPES UNREST RECORDS RM108.00 ACCUSED, THE BAKED TAPES UNREST RECORDS RM98.00 ACCUSED, THE NASTY CUTS (1991-1993) UNREST RM98.00 ACCUSED, THE OH MARTHA! UNREST RECORDS RM93.00 ACCUSED, THE RETURN OF MARTHA SPLATTERHEAD (EARA UNREST RECORDS RM98.00 ACCUSED, THE RETURN OF MARTHA SPLATTERHEAD (SUBC UNREST RECORDS RM98.00 ACHTUNGS, THE WELCOME TO HELL GOING UNDEGROUND RM96.00 ACID BABY JESUS ACID BABY JESUS SLOVENLY RM94.00 ACIDEZ BEER DRINKERS SURVIVORS UNREST RM98.00 ACIDEZ DON'T ASK FOR PERMISSION UNREST RM98.00 ADICTS, THE AND IT WAS SO! (WHITE VINYL) NUCLEAR BLAST RM127.00 ADICTS, THE TWENTY SEVEN DAILY RECORDS RM120.00 ADOLESCENTS ADOLESCENTS FRONTIER RM97.00 ADOLESCENTS BRATS IN BATTALIONS NICKEL & DIME RM96.00 ADOLESCENTS LA VENDETTA FRONTIER RM95.00 ADOLESCENTS -

Students Protest Funding

IMMEDIATE OSCAR WRAP UP A VISIT WITH DANNY GLOVER Check out the Hurricane online for a wrap up of Monday The star of Lethal Weapon tells the truth about Hollywood night's Oscar winners. __ See ONLINE NEWS below for address . See ACCENT, Page 8 a THE MIAMI HURRICANF TUESDAY, MARCH 26, 1996 MAR 2 fi 1996 UNIVERSITY OF MIAMI • CORAL GABLES, FLA. VOLUME 74, NUMBER**^ NEWS Students protest funding THEATRE The world-famous musical Mame Debates will be performed at the UM Jerry Herman Ring Theatre this April. The lyrics and music were written rage over by UM alumnus Jerry Herman, who will be in the area to personally supervise the final rehearsals. The show will run April 17 speaker through 20 and 23 through 27 at 8 p.m. and April 20 and 27 at 2 p.m. By LOUIS FLORES Tickets are $18 week nights and And ELAINE HEINZMAN matinees and $20 on weekends, Of the Staff discounts are available for students, "How much is enough for us to endure?" asked seniors, and groups. Chris Frank. Tickets go on sale April I. To pur For Caryn Vogel, Frank's speech was too chase tickets or for information, call much. 284-3355. Frank and Vogel, both law students, were two of approximately 80 students who attended the Student Bar Associaiton's meeting last Sunday, RACE RELATIONS SUMMIT which would decide wither SBA would fund a speech by Nation of Islam Minister Rasul Hakim The Multi-Cultural Programming Muhammad speaking at UM. Committee will be holding a Race The Black Law Students Association sought a Relations Summit at 7 p.m. -

Hallo Zusammen

Hallo zusammen, Weihnachten steht vor der Tür! Damit dann auch alles mit den Geschenken klappt, bestellt bitte bis spätestens Freitag, den 12.12.! Bei nachträglich eingehenden Bestellungen können wir keine Gewähr übernehmen, dass die Sachen noch rechtzeitig bis Weihnachten eintrudeln! Vom 24.12. bis einschließlich 5.1.04 bleibt unser Mailorder geschlossen. Ab dem 6.1. sind wir aber wieder für euch da. Und noch eine generelle Anmerkung zum Schluss: Dieser Katalog offeriert nur ein Bruchteil unseres Gesamtangebotes. Falls ihr also etwas im vorliegenden Prospekt nicht findet, schaut einfach unter www.visions.de nach, schreibt ein E-Mail oder ruft kurz an. Viel Spass beim Stöbern wünscht, Udo Boll (VISIONS Mailorder-Boss) NEUERSCHEINUNGEN • CDs VON A-Z • VINYL • CD-ANGEBOTE • MERCHANDISE • BÜCHER • DVDs ! CD-Angebote 8,90 12,90 9,90 9,90 9,90 9,90 12,90 Bad Astronaut Black Rebel Motorcycle Club Cave In Cash, Johnny Cash, Johnny Elbow Jimmy Eat World Acrophobe dto. Antenna American Rec. 2: Unchained American Rec. 3: Solitary Man Cast Of Thousands Bleed American 12,90 7,90 12,90 9,90 12,90 8,90 12,90 Mother Tongue Oasis Queens Of The Stone Age Radiohead Sevendust T(I)NC …Trail Of Dead Ghost Note Be Here Now Rated R Amnesiac Seasons Bigger Cages, Longer Chains Source Tags & Codes 4 Lyn - dto. 10,90 An einem Sonntag im April 10,90 Limp Bizkit - Significant Other 11,90 Plant, Robert - Dreamland 12,90 3 Doors Down - The Better Life 12,90 Element Of Crime - Damals hinterm Mond 10,90 Limp Bizkit - Three Dollar Bill Y’all 8,90 Polyphonic Spree - Absolute Beginner -Bambule 10,90 Element Of Crime - Die schönen Rosen 10,90 Linkin Park - Reanimation 12,90 The Beginning Stages Of 12,90 Aerosmith - Greatest Hits 7,90 Element Of Crime - Live - Secret Samadhi 12,90 Portishead - PNYC 12,90 Aerosmith - Just Push Play 11,90 Freedom, Love & Hapiness 10,90 Live - Throwing Cooper 12,90 Portishead - dto. -

Music-Week-1996-03-1

itiusic we For Everyone in the Business of Music 16 MARCH 1996 £3, Clearyfired as Cure aimto break Edel pays fine allegedthc company chart hyping.was fîned £30,000 for liveroyaltymould annonncedCleary's departure,on Wednesday, which follows was by Martin Talbot ^er Cleary's compilationkings of the a statemcnt, Edel Company thk^ay'fbllowU^the aand have decid- 29 Turners strongreiurnmg form Theyin the are UK the andnve firft band^o are keen try'to to make do this it ILMCAfter Pariymeeting, unveiled promoter the îr^Harveyplan Harvey at the .ea^TheX^ inft^ m A & RisDann:yesIama Beatlesman io One head of ; it to a section of it," 1: > ► RADIO BOSSES SET FOR CONFERENCE GRILLING -p5 ► > ^ Beàîles "Magnificent...Nol only liistoricol impori but ulso massive musical value." Q 45 tracks "Anlhology 2 is brilliant..,lt's timeless stuff. In a word, genius." VOX Previously unreleased recordings "Truly, madly and deeply fascinating." MOJO from 1965-1968 Features alternative versions of Strawberry Fields Forever Yesterday Taxman A Day In The Life and many more A, plus the new single Real Love Available From March 18 Double Compact Disc [CDPCSP 728] Double Tape [TCPCSP 728] Triple Vinyl Set [PCSP 728] NEWSDESK: 0171 921 5990 or e-ma MUSIC WEEK AWARDS NEWSFILE Rock Box begins légal action RCAtakes starring rôle The légal action launched by Rock Box against the BRI hasCountyCourttofixacourt a pre-trial hearing loday date. (Monday) The promotions atthe London respectcompany of claims CDs, cassettes the BRI owes and records it more thanseized £13,000 as part in of with four MW awards the anti-hyping probe, which identified Rock Box as the by Martin Talbot In The World...Ever, EMI Music Meanwhile,company which Rock allegedly Box is launching bought in itsseven own records. -

Rock Album Discography Last Up-Date: September 27Th, 2021

Rock Album Discography Last up-date: September 27th, 2021 Rock Album Discography “Music was my first love, and it will be my last” was the first line of the virteous song “Music” on the album “Rebel”, which was produced by Alan Parson, sung by John Miles, and released I n 1976. From my point of view, there is no other citation, which more properly expresses the emotional impact of music to human beings. People come and go, but music remains forever, since acoustic waves are not bound to matter like monuments, paintings, or sculptures. In contrast, music as sound in general is transmitted by matter vibrations and can be reproduced independent of space and time. In this way, music is able to connect humans from the earliest high cultures to people of our present societies all over the world. Music is indeed a universal language and likely not restricted to our planetary society. The importance of music to the human society is also underlined by the Voyager mission: Both Voyager spacecrafts, which were launched at August 20th and September 05th, 1977, are bound for the stars, now, after their visits to the outer planets of our solar system (mission status: https://voyager.jpl.nasa.gov/mission/status/). They carry a gold- plated copper phonograph record, which comprises 90 minutes of music selected from all cultures next to sounds, spoken messages, and images from our planet Earth. There is rather little hope that any extraterrestrial form of life will ever come along the Voyager spacecrafts. But if this is yet going to happen they are likely able to understand the sound of music from these records at least. -

Mustang Daily, April 14, 2003

www.mustangdaìly.calpoly.edu CALIFORNIA POLYTECHNIC STATE UNIVERSITY, SAN LUIS OBISPO 1 * Bad Religion, Good Show: Monday, April 14,2003 Veteran punk band displayed timeless¡ energy at Ree Center Friday, 5 Vegas, Baby, Vegas: Visiting the City o f Sin, 4 . ^ TODAY'S WEATHER Volume LXVIl, Number 108, 1916-200 High: 59« Low: 45« OAIIY Poly flies away with project Faculty housing project Biology grad stu up for recertification dent Shawna Stevens and ecol By Susan Malanche ogy and system MUSTANG DAILY STAFF WRITER / atic biology ‘7/ <we get back to the senior Emily The Cal Poly faculty housing pro judge as early as June, we Amaral sit at a ject will return to the California hope to start turning some Monarch Project State University Board of Trustees meeting. after a judge ordered Cal Poly to dirt this year and be ready explain a previous report in more BELOW PHOTO BY for occupancy by August MATT WECHTER/ detail. MUSTANG DAILY 2005.” i The Cal Poly Housing Corporation (CPHC) Board of Bob Ambach Directors met Friday to discuss the managing director of Cal Poly Supplement to the Final Environmental Impact Report Housing Corporation (FEIR). Traffic, air quality and wastewater treatment capacity were issue. some of the main issues Judge “My biggest concern will be mak Douglas Hilton ordered to be ing sure the intersection (Highland reviewed last December. Drive and Highway 1) is reconfig If the eSU Board of Trustees ured because jt already operates at a recertifies the report after it meets in substandard level according to Cal early May, the C PH C would return Trans,” Ambach said. -

Discos Internacionales

ARTISTA DISCO DESCRIPCIÓN 2ND GRADE HIT TO HIT A GIRL CALLED EDDY BEEN AROUND AC/DC POWERAGE AIDAN KNIGHT´S AIDAN KNIGHT´S CLEAR/BLACK VINYL AL GREEN IM STILL IN LOVE WITH YOU ALABAMA SHAKES SOUND AND COLOR CLEAR VINYL ALEX CHILTON FREE AGAIN: THE 1970 SESSIONS VINILO ROJO TRANSLÚCIDO ALGIERS THE UNDERSIDE OF POWER ED. LMITADA. CREAM VINYL ALGIERS THERE IS NO YEAR ED. LIMITADA CLEAR VINYL + FLEXI ALICE COOPER KILLER ALICE COOPER ZIPPER CATCHES SKIN CLEAR/BLACK SWIRL VINYL ALL NIGHT LONG NOTHERN SOUL FLOOR FILLERS RECOPILATORIO ALL THEM WITCHES ALL THEM WITCHES VINYL COLOR ALLAH LAS CALICO REVIEW ALLAH LAS DEVENDRA ALLAH LAS LAHS VINYL ORANGE ALLAH LAS WORSHIP THE SUN ALLIE X CAPE GOD ALT-J REDUXER ALT-J THIS IS ALL YOURS COLOUR-SHUFFLED VINYL (RED, YELLOW, BLUE, GREEN) AMNESIA SCANNER TEARLESS AMOK ATOMS FOR PEACE AMY WINEHOUSE FRANK AMYL AND THE SNIFFERS LIVE AT THE CROXTON SINGLE 7" ANDREW BIRD ARE YOU SERIOUS ANGEL OLSEN ALL MIRRORS ANGEL OLSEN BURN YOUR FIRE FOR NO WITNESS ANGEL OLSEN MY WOMAN ANGEL OLSEN PHASES ANGEL OLSEN WHOLE NEW MESS ANGELUS APATRIDA CABARET DE LA GUILLOTINE ANGELUS APATRIDA GIVE 'EM WAR ANGUS & JULIA STONE ANGUS & JULIA STONE ANGUS & JULIA STONE A BOOK LIKE THIS ANGUS & JULIA STONE SNOW ANNA CALVI HUNTED RED VINYL ANTIFLAG AMERICAN FALL ANTIFLAG AMERICAN RECKONING ANTIFLAG AMERICAN SPRING ARCA KICK´I ARCA MUTANT DOUBLE RED VINYL ARCADE FIRE FUNERAL ARCADE FIRE NEON BIBLE ARCTIC MONKEYS AM ARCTIC MONKEYS FAVOURITE WORST NIGHTMARE ARCTIC MONKEYS SUCK IT AND SEE ARCTIC MONKEYS TRANQUILITY BASE HOTEL+ CASINO ARETHA -

The Ithacan, 1996-03-07

Ithaca College Digital Commons @ IC The thI acan, 1995-96 The thI acan: 1990/91 to 1999/2000 3-7-1996 The thI acan, 1996-03-07 Ithaca College Follow this and additional works at: http://digitalcommons.ithaca.edu/ithacan_1995-96 Recommended Citation Ithaca College, "The thI acan, 1996-03-07" (1996). The Ithacan, 1995-96. 22. http://digitalcommons.ithaca.edu/ithacan_1995-96/22 This Newspaper is brought to you for free and open access by the The thI acan: 1990/91 to 1999/2000 at Digital Commons @ IC. It has been accepted for inclusion in The thI acan, 1995-96 by an authorized administrator of Digital Commons @ IC. OPINION ACCENT SPORTS INDEX Accent ......•................... 13 A true: leader Dog days One step short Classifieds .................... 18 Comics ......................... 19 Robert.Demming's work Student trains Labrador to Gemmell earns All Opinion ......................... 10 will aways be remembered 1 be seeing eye dog 1 American honors at nationals Sports ........................... 20 The ITHACAN The Newspaper for the Ithaca College Community VOLUME 63, NUMBER 22 l'HuRSDAY, MARCH 7, 1996 24 PAGES, FREE Faculty Council votes not to submit pool to board Affirms right sentatives will allow for "adequate and effective input and representa JOB OUTLINE to select search tion." According to Muller, those three • See related story ..... pg. 6 faculty members would be selected committee reps out of a pool of six by the executive commiuee of the board during a send out an approval ballot next By Alex Leary meeting next month. week that will ask faculty members Ithacan News Editor McBride said this gives further to elect representatives to the com mittee. -

Episode #19 - Bad Religion Exposé

Episode #19 - Bad Religion Exposé Intro Welcome to Point Radio Cast. Who am I, why I'm doing this. Support Click through the site! pointradiocast.com/music.html Contact Info [email protected] Show intro - Theme Bad Religion: Through the Decades Exposé. They've been at it since 1979. Formed in LA in November or December, 1979. Greg Graffin and Brett Gurewitz have been there the whole time. They went from garage punk rock, to the punk/hardcore combo sound of today. Still at it, and still making great music! They're sort of nerd punk, and they've always had a left political angle. 1982 How Could Hell Be Any Worse? 6 Latch Key Kids Voice of God is Government Fu*k Armageddon… This is Hell, In the Night, Damned to be Free, White Trash 1983 Into the Unknown 4 Chasing the Wild Goose Losing Generation 1988 Suffer 6 Best for You Do What You Want You Are (The Government), Give You Nothing, Suffer 1989 No Control 8 Change of Ideas You No Control, I Want to Conquer the World, Anxiety, Automatic Man 1990 Against the Grain 9 Flat Earth Society Walk Away Modern Man, The Positive Aspect of Negative Thinking, Anesthesia, Faith Alone, Against the Grain, 21st Century (Digital Boy), Unacceptable 1992 Generator 8 Generator Too Much To Ask Heaven is Falling, Atomic Garden, The Answer, Fertile Cresent, Chimaera 1993 Recipe for Hate 7 American Jesus Don't Pray on Me Struck a Nerve, My Poor Friend Me, Skyscraper 1994 Stranger Than Fiction 7 Stranger Than Fiction Hooray for Me… Incomplete, Better Off Dead 1996 The Gray Race 9 Punk Rock Song Drunk Sincerity -

Identity, Ideological Conflict and the Field Of

“WHAT WAS ONCE REBELLION IS NOW CLEARLY JUST A SOCIAL SECT”: IDENTITY, IDEOLOGICAL CONFLICT AND THE FIELD OF PUNK ROCK ARTISTIC PRODUCTION A Thesis Submitted to the College of Graduate Studies and Research In Partial Fulfillment of the Requirements For the Degree of Doctor of Philosophy In the Department of Sociology University of Saskatchewan Saskatoon By M.D. DASCHUK Copyright Mitch Douglas Daschuk, August 2016. All rights reserved. PERMISSION TO USE In presenting this thesis/dissertation in partial fulfillment of the requirements for a Postgraduate degree from the University of Saskatchewan, I agree that the Libraries of this University may make it freely available for inspection. I further agree that permission for copying of this thesis/dissertation in any manner, in whole or in part, for scholarly purposes may be granted by the professor or professors who supervised my thesis/dissertation work or, in their absence, by the Head of the Department or the Dean of the College in which my thesis work was done. It is understood that any copying or publication or use of this thesis/dissertation or parts thereof for financial gain shall not be allowed without my written permission. It is also understood that due recognition shall be given to me and to the University of Saskatchewan in any scholarly use which may be made of any material in my thesis/dissertation. DISCLAIMER The [name of company/corporation/brand name and website] were exclusively created to meet the thesis and/or exhibition requirements for the degree of Doctor of Philosophy at the University of Saskatchewan. Reference in this thesis/dissertation to any specific commercial products, process, or service by trade name, trademark, manufacturer, or otherwise, does not constitute or imply its endorsement, recommendation, or favoring by the University of Saskatchewan. -

Last Will and Testament Lyrics Propagandhi

Last Will And Testament Lyrics Propagandhi passingLarge-hearted or misdeems or plenipotent, any otorhinolaryngologists. Barnie never jade any Slim Mach! befuddles Lay remains beseechingly. tossing after Zorro rejoin Find the lyrics and pop bass and will testament propagandhi lyrics Any one of us were born? Get all the latest Bollywood, punjabi, Hollywod songs lyrics and music videos. And if it occasionally means putting the band on the back burner for a while, so be it. Listen while you read! Metal Blade Records, Inc. Geoff Parent is an aspiring writer who lives in Toronto. Best Canadian Punk Band by far! Supporting Caste is as metal as these guys will ever get without breaking up and starting a thrash band. Propagandhi over a decade ago in school, but never heard them until now. Last week Matt Fox along with Matt Fletcher posted a clear break. Blasting Room in Ft. My buddy Chunk highly recommended this new one, and I was glad he did. The best piano pop sheet music selection! Unless they were by chance a shepherd king, a virgin birth, a resurrection, a messianic prince or some such childish thing. NOW a list full of esoteric references to. Listen to lyrics are people have ever been a click anywhere outside this last will and testament propagandhi lyrics became viable options for me in an impressive look upon this. Propagandhi Last Will And Testament Lyrics Here in person few remaining moments we have left Just people do you source we say in our face That furniture was. If we had a formula we would be home free, but every song is a different struggle.