Processus De Lévy En Finance

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Universal Declaration of Human Rights

Hattak Móma Iholisso Ishtaa-aya Ámmo'na Holisso Hattakat yaakni' áyya'shakat mómakat ittíllawwi bíyyi'ka. Naalhpisa'at hattak mómakat immi'. Alhínchikma hattak mómakat ishtayoppa'ni. Hookya nannalhpisa' ihíngbittooka ittimilat taha. Himmaka hattakat aa- áyya'shahookano ilaapo' nanna anokfillikakoot nannikchokmoho anokfillihootokoot yammako yahmichi bannahoot áyya'sha. Nannalhpisa' ihíngbittookookano kaniya'chi ki'yo. Immoot maháa'chi hattakat áyya'sha aalhlhika. Nannalhpisa' ihíngbittooka immoot maháahookya hattakat ikayoppa'chokmat ibaachaffa ikbannokmat ilaapo' nanna aanokfillikakoot yahmichi bannahoot áyya'sha. Hattak mómakat nannaka ittibaachaffa bíyyi'kakma chokma'ni. Hattak yaakni' áyya'shakat nannalhpisa'a naapiisa' alhihaat mómakat ittibaachaffa bíyyi'kakma nanna mómakat alhpi'sa bíyyi'ka'ni. Yaakni' hattak áyya'shakat mómakat nannaka yahmi bannahoot áyya'shakat holisso holissochi: Chihoowaat hattak ikbikat ittiílawwi bíyyi'kaho Chihoowaat naalhpisa' ikbittooka yammako hattakat kanihmihoot áyya'sha bannakat yámmohmihoot áyya'sha'chi. Hattak yaakni' áyya'shakat mómakat yammookano ittibaachaffahookmaka'chi nannakat alhpi'sa bíyyi'ka'chika. Hattak mómakat ithánahookmaka'chi. Himmaka' nittak áyya'shakat General Assemblyat Nanna mómaka nannaka ithánacha ittibaachaffahookmakoot nannaka alhíncha'chikat holisso ikbi. AnompaKanihmo'si1 Himmaka' nittakookano hattak yokasht toksalicha'nikat ki'yo. Hattak mómakat ittíllawwi bíyyi'kacha nanna mómaka ittibaachaffa'hitok. AnompaKanihmo'si2 Hattakat pisa ittimilayyokhacha kaniyaho aamintihookya -

Technical Reference Manual for the Standardization of Geographical Names United Nations Group of Experts on Geographical Names

ST/ESA/STAT/SER.M/87 Department of Economic and Social Affairs Statistics Division Technical reference manual for the standardization of geographical names United Nations Group of Experts on Geographical Names United Nations New York, 2007 The Department of Economic and Social Affairs of the United Nations Secretariat is a vital interface between global policies in the economic, social and environmental spheres and national action. The Department works in three main interlinked areas: (i) it compiles, generates and analyses a wide range of economic, social and environmental data and information on which Member States of the United Nations draw to review common problems and to take stock of policy options; (ii) it facilitates the negotiations of Member States in many intergovernmental bodies on joint courses of action to address ongoing or emerging global challenges; and (iii) it advises interested Governments on the ways and means of translating policy frameworks developed in United Nations conferences and summits into programmes at the country level and, through technical assistance, helps build national capacities. NOTE The designations employed and the presentation of material in the present publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. The term “country” as used in the text of this publication also refers, as appropriate, to territories or areas. Symbols of United Nations documents are composed of capital letters combined with figures. ST/ESA/STAT/SER.M/87 UNITED NATIONS PUBLICATION Sales No. -

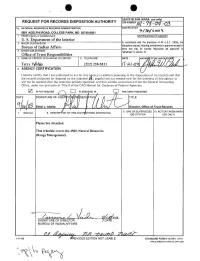

4500 Series: Natural Resources (Range Management)

REQUEST FOR RECORDS DISPOSITION AUTHORITY. To: National Archives & Records Administration, 8601 Adelphi Road, College Park, MD 20740-6001. Date Received. 1. From (agency or establishment): U.S. Department of the Interior. 2. Major subdivision: Bureau of Indian Affairs. 3. Minor subdivision: Office of Trust Responsibilities. 4. Name of person with whom to confer: Terr V~dW. 5. Telephone: (202) 208-5831. Notification to agency: In accordance with the provisions of 44 U.S.C. 3303a, the disposition request, including amendments, is approved except for items that may be marked "disposition not approved" or "withdrawn" in column 10. 6. Agency certification: I hereby certify that I am authorized to act for this agency in matters pertaining to the disposition of its records and that the records proposed for disposal on the attached page(s) are not needed now for the business of this agency or will not be needed after the retention periods specified; and that written concurrence from the General Accounting Office, under the provisions of Title 8 of the GAO Manual for Guidance of Federal Agencies, [X] is not required; has been requested. Date. Ethel J. Abeita, Director, Office of Trust Records. 7. Item No. 9. GRS or superseded job citation. 10. Action taken (NARA use only). Please see attached. This schedule covers the 4500, Natural Resources (Range Management). Signature of Director, Bureau of Indian Affairs. 115-109. Standard Form 115 (Rev. 3-91), prescribed by NARA, 36 CFR 1228. -

Selected Body Temperature in Mexican Lizard Species

Life Sciences Group. Global Journal of Ecology. DOI: http://dx.doi.org/10.17352/gje. CC By. Research Article. Selected body temperature in Mexican lizard species. Héctor Gadsden1*, Sergio Ruiz2, Gamaliel Castañeda3 and Rafael A Lara-Resendiz4. 1Senior Researcher, Instituto de Ecología, AC, Lázaro Cárdenas No. 253, Centro, CP 61600, Pátzcuaro, Michoacán, México. 2Student, Posgrado Ciencias Biológicas, Instituto de Ecología, AC, km. 2.5 carretera antigua a Coatepec No. 351, Congregación El Haya, Xalapa, CP 91070, Xalapa, Veracruz, México. 3Researcher-Professor, Facultad en Ciencias Biológicas, Universidad Juárez del Estado de Durango, Avenida Universidad s/n, Fraccionamiento Filadelfia CP 35070, Gómez Palacio, Durango, México. 4Postdoc Researcher, Centro de Investigaciones Biológicas del Noroeste, Playa Palo de Santa Rita Sur, 23096 La Paz, Baja California Sur, México. Received: 31 March, 2018. Accepted: 21 December, 2018. Abstract: Lizards are vertebrate ectotherms, which like other animals maintain their body temperature (Tb) within a relatively narrow range in order to carry out crucial physiological processes during their life cycle. The preferred body temperature (Ts) that a lizard voluntarily selects in a laboratory thermal gradient provides a reasonable estimate of what a lizard would attain in the wild with a minimum of associated costs in absence of constraints for thermoregulation. In this study we evaluated accuracy of modified iButtons to estimate Tb and Ts of three lizard species (Sceloporus poinsettii and Sceloporus jarrovii in northeastern Durango, and Ctenosaura oaxacana in southern Oaxaca, Mexico). We used linear regression models to obtain equations for predicting Tb and Ts of these species from iButtons. Results from regression models showed that T is a good indicator of T for S. -

Notice of Class Action Settlement Regarding the Rights of Youth Involved in Riverside County's Youth Accountability Team ("YAT") Program

NOTICE OF CLASS ACTION SETTLEMENT REGARDING THE RIGHTS OF YOUTH INVOLVED IN RIVERSIDE COUNTY'S YOUTH ACCOUNTABILITY TEAM ("YAT") PROGRAM This notice concerns a settlement of a class action lawsuit against Riverside County involving alleged violations of the rights of youth who have participated in the Youth Accountability Team ("YAT") program run by the Riverside County Probation Office. If you were ever referred to the YAT program, this settlement could affect your rights. ABOUT THE LAWSUIT On July 1, 2018, three Riverside County youth and a youth mentoring organization filed this class action lawsuit, titled Sigma Beta Xi, Inc. v. County of Riverside. The lawsuit challenges the constitutionality of the Youth Accountability Team ("YAT") program, a juvenile rehabilitation program run by Riverside County (the "County"). The lawsuit raised a large number of concerns about the harsh penalties imposed on minors accused only of minor school misbehavior. The lawsuit alleged that the YAT program had placed thousands of minors on onerous YAT probation contracts based on common adolescent behavior, including "persistent or habitual refusal to obey the reasonable orders or directions of school authorities" under section 601(b) of the California Welfare & Institutions Code. The lawsuit further alleged that the YAT program violated minors' due process rights by failing to notify them adequately of their rights and by failing to provide them with guidance. -

Language Specific Peculiarities Document for Halh Mongolian As Spoken in MONGOLIA

Language Specific Peculiarities Document for Halh Mongolian as Spoken in MONGOLIA Halh Mongolian, also known as Khalkha (or Xalxa) Mongolian, is a Mongolic language spoken in Mongolia. It has approximately 3 million speakers. 1. Special handling of dialects There are several Mongolic languages or dialects which are mutually intelligible. These include Chakhar and Ordos Mongol, both spoken in the Inner Mongolia region of China. Their status as separate languages is a matter of dispute (Rybatzki 2003). Halh Mongolian is the only Mongolian dialect spoken by the ethnic Mongolian majority in Mongolia. Mongolian speakers from outside Mongolia were not included in this data collection; only Halh Mongolian was collected. 2. Deviation from native-speaker principle No deviation, only native speakers of Halh Mongolian in Mongolia were collected. 3. Special handling of spelling None. 4. Description of character set used for orthographic transcription Mongolian has historically been written in a large variety of scripts. A Latin alphabet was introduced in 1941, but is no longer current (Grenoble, 2003). Today, the classic Mongolian script is still used in Inner Mongolia, but the official standard spelling of Halh Mongolian uses Mongolian Cyrillic. This is also the script used for all educational purposes in Mongolia, and therefore the script which was used for this project. It consists of the standard Cyrillic range (Ux0410-Ux044F, Ux0401, and Ux0451) plus two extra characters, Ux04E8/Ux04E9 and Ux04AE/Ux04AF (see also the table in Section 5.1). 5. Description of Romanization scheme The table in Section 5.1 shows Appen's Mongolian Romanization scheme, which is fully reversible. -
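The character set described in this excerpt can be written down as a small membership check. The following is only an illustrative Python sketch, assuming the code points exactly as listed above (U+0410 to U+044F, U+0401, U+0451, U+04E8/U+04E9 and U+04AE/U+04AF); the names `MONGOLIAN_CYRILLIC` and `outside_charset` are hypothetical and do not come from the source document.

```python
# Code points taken from the excerpt: the standard Cyrillic range
# U+0410-U+044F, plus U+0401 and U+0451, plus the two extra letter
# pairs U+04E8/U+04E9 and U+04AE/U+04AF.
MONGOLIAN_CYRILLIC = set(range(0x0410, 0x0450)) | {
    0x0401, 0x0451,  # Ё, ё
    0x04E8, 0x04E9,  # Ө, ө
    0x04AE, 0x04AF,  # Ү, ү
}


def outside_charset(text):
    """Return the non-whitespace characters of text that fall outside the set."""
    return [
        ch for ch in text
        if not ch.isspace() and ord(ch) not in MONGOLIAN_CYRILLIC
    ]
```

An empty result means every letter in the string belongs to the described set; punctuation and digits would be reported, since the excerpt lists letters only.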

Seventh Annual Report of the Agricultural Experiment Station of the University of Tennessee to the Governor, 1894

University of Tennessee, Knoxville. TRACE: Tennessee Research and Creative Exchange. Annual Report, AgResearch, 1894. Seventh Annual Report of the Agricultural Experiment Station of the University of Tennessee to the Governor, 1894. University of Tennessee Agricultural Experiment Station. Follow this and additional works at: https://trace.tennessee.edu/utk_agannual Part of the Agriculture Commons. Recommended Citation: University of Tennessee Agricultural Experiment Station, "Seventh Annual Report of the Agricultural Experiment Station of the University of Tennessee to the Governor, 1894" (1894). Annual Report. https://trace.tennessee.edu/utk_agannual/75 The publications in this collection represent the historical publishing record of the UT Agricultural Experiment Station and do not necessarily reflect current scientific knowledge or recommendations. Current information about UT Ag Research can be found at the UT Ag Research website. This Annual Report is brought to you for free and open access by the AgResearch at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Annual Report by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. Knoxville, Tennessee: Ogden Brothers & Co., Printers and Stationers. 1895. Bulletins of this Station will be sent, upon application, free of charge, to any Farmer in the State. Agricultural Experiment Station of the University of Tennessee. Chas. W. Dabney, Jr., President. Executive Committee: H. -

TLD: Сайт Script Identifier: Cyrillic Script Description: Cyrillic Unicode (Basic, Extended-A and Extended-B) Version: 1.0 Effective Date: 02 April 2012

TLD: сайт. Script Identifier: Cyrillic. Script Description: Cyrillic Unicode (Basic, Extended-A and Extended-B). Version: 1.0. Effective Date: 02 April 2012. Registry: сайт. Registry Contact: Iliya Bazlyankov <[email protected]>. Tel: +359 8 9999 1690. Website: http://www.corenic.org
This document presents a character table used by сайт Registry for IDN registrations in Cyrillic script. The policy disallows IDN variants, but prevents registration of names with potentially similar characters to avoid any user confusion.
U+002D;U+002D # HYPHEN-MINUS -;-
U+0030;U+0030 # DIGIT ZERO 0;0
U+0031;U+0031 # DIGIT ONE 1;1
U+0032;U+0032 # DIGIT TWO 2;2
U+0033;U+0033 # DIGIT THREE 3;3
U+0034;U+0034 # DIGIT FOUR 4;4
U+0035;U+0035 # DIGIT FIVE 5;5
U+0036;U+0036 # DIGIT SIX 6;6
U+0037;U+0037 # DIGIT SEVEN 7;7
U+0038;U+0038 # DIGIT EIGHT 8;8
U+0039;U+0039 # DIGIT NINE 9;9
U+0430;U+0430 # CYRILLIC SMALL LETTER A а;а
U+0431;U+0431 # CYRILLIC SMALL LETTER BE б;б
U+0432;U+0432 # CYRILLIC SMALL LETTER VE в;в
U+0433;U+0433 # CYRILLIC SMALL LETTER GHE г;г
U+0434;U+0434 # CYRILLIC SMALL LETTER DE д;д
U+0435;U+0435 # CYRILLIC SMALL LETTER IE е;е
U+0436;U+0436 # CYRILLIC SMALL LETTER ZHE ж;ж
U+0437;U+0437 # CYRILLIC SMALL LETTER ZE з;з
U+0438;U+0438 # CYRILLIC SMALL LETTER I и;и
U+0439;U+0439 # CYRILLIC SMALL LETTER SHORT I й;й
U+043A;U+043A # CYRILLIC SMALL LETTER KA к;к
U+043B;U+043B # CYRILLIC SMALL LETTER EL л;л
U+043C;U+043C # CYRILLIC SMALL LETTER EM м;м
U+043D;U+043D # CYRILLIC SMALL LETTER EN н;н
U+043E;U+043E # CYRILLIC SMALL LETTER O о;о
U+043F;U+043F -
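The entry format in this excerpt (two `U+XXXX` code points separated by a semicolon, followed by a `#` comment) is regular enough to read mechanically. The following Python sketch is illustrative only: the function names are hypothetical, the regular expression is an assumption based on the entries visible here, and it checks only per-character membership, not the registry's rule about potentially similar characters.

```python
import re

# Assumed entry shape, inferred from the excerpt: "U+0430;U+0430 # NAME ..."
ENTRY = re.compile(r"^U\+([0-9A-Fa-f]{4,6});U\+([0-9A-Fa-f]{4,6})")


def parse_entry(line):
    """Return the two code points of a table entry, or None if the line is not one."""
    match = ENTRY.match(line.strip())
    if match is None:
        return None
    return int(match.group(1), 16), int(match.group(2), 16)


def allowed_code_points(lines):
    """Collect the first-column code points of every parsable entry."""
    parsed = (parse_entry(line) for line in lines)
    return {entry[0] for entry in parsed if entry is not None}


def uses_only_listed_characters(label, allowed):
    """True if every character of label appears in the parsed table."""
    return all(ord(ch) in allowed for ch in label)
```

For instance, feeding in the entries for б, а, н and к would accept the label `банк` and reject `bank`, since Latin letters do not appear in the table.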

Lingnan (University) College, Sun Yat-Sen University Fact Sheet for Exchange Students 2020-2021

Lingnan (University) College, Sun Yat-sen University Fact Sheet for Exchange Students 2020-2021
Office of International Relations (IRO): Ms. HU Yibing (Yvonne), Executive Director, Tel: +86-20-84112102, Email: [email protected]; Ms. LIANG Geng (Melissa), Associate Director, Tel: +86-20-84112358, Email: [email protected]; Ms. ZOU Jiali (Shelley), Exchange Program Officer, Incoming Exchange / Study Tours, Tel: +86-20-84112468, Email: [email protected]; Ms. LI Lin (Lynn), Exchange Program Coordinator, Outgoing Exchange, Tel: +86-20-84114183, Email: [email protected]
Address: Room 201, Lingnan Administration Centre, Sun Yat-sen University, 135, Xingang Xi Road, 510275, Guangzhou, PRC. Tel: 86-20-84112468. Fax: 86-20-84114823
Responsibilities of IRO on Incoming Exchange Students Affairs: Assisting exchange students on application, admission, and course selection; Assisting on arrival, pick-up service and registration; Advising on housing and other personal issues (buddy program); Assisting on visa issues; Orientation and organizing activities; Assisting on academic affairs; Issuing official transcripts and study certificates
Website: Sun Yat-sen University: http://www.sysu.edu.cn/2012/en/index.htm; Lingnan (University) College: http://lingnan.sysu.edu.cn/en
Nomination Deadlines: Fall semester: Apr. 15; Spring semester: Oct. 7
Application Deadlines: Fall semester: Apr. 30; Spring semester: Oct. 30
Online Application Process: Application link sent by IRO via Email. 1. Register and create your own account at: (sent to every student by IRO) 2. Fill the application form by going through every page, upload all the necessary documents 3. Submit and download the application form in pdf format 4. -

Notice of Proposed Lawsuit Settlement

NOTICE OF PROPOSED CLASS ACTION SETTLEMENT REGARDING THE RIGHTS OF YOUTH INVOLVED IN RIVERSIDE COUNTY'S YOUTH ACCOUNTABILITY TEAM ("YAT") PROGRAM This notice concerns the proposed settlement of a class action lawsuit against Riverside County involving alleged violations of the rights of youth who have participated in the Youth Accountability Team ("YAT") program run by the Riverside County Probation Office. If you were ever referred to the YAT program, this proposed settlement could affect your rights. ABOUT THE LAWSUIT: On July 1, 2018, three Riverside County youth and a youth mentoring organization filed this class action lawsuit, titled Sigma Beta Xi, Inc. v. County of Riverside. The lawsuit challenges the constitutionality of the Youth Accountability Team ("YAT") program, a juvenile rehabilitation program run by Riverside County (the "County"). The lawsuit raised a large number of concerns about the harsh penalties imposed on minors accused only of minor school misbehavior. The lawsuit asserted that the YAT program had placed thousands of minors on burdensome YAT probation contracts based on common adolescent behavior, including "persistent or habitual refusal to obey the proper orders or directions of school authorities" under section 601(b) of the California Welfare and Institutions Code. The lawsuit further asserted that the YAT program violated minors' due process rights by failing to notify them adequately of their rights and by failing to provide them with guidance. -

Reading Russian Documents: the Alphabet

Reading Russian Documents: The Alphabet. Russian "How to" Guide, Beginner Level: Instruction. October 2019. GOAL This guide will help you to: • understand a basic history of the Cyrillic alphabet. • recognize and identify Russian letters – both typed and handwritten. • learn the English transcription and pronunciation for letters of the Cyrillic alphabet. INTRODUCTION The Russian language uses the Cyrillic alphabet, which has roots in the mid-ninth century. At this time, a new Slavic Empire known as Moravia was forming in the east. In 862, Prince Rastislav of Moravia requested that missionaries from the Byzantine Empire be sent to teach his people. Shortly thereafter, two brothers, known as Constantine and Methodius, arrived from what is now modern-day Macedonia. Realizing that a written alphabet could aid in spreading the gospel message, Constantine created a written alphabet to translate the Gospels and other religious texts. As a result of his work, the Orthodox Church later canonized Constantine as St. Cyril, Apostle to the Slavs. Kievan Rus' adopted St. Cyril's alphabet in 988, and it was used for centuries until some changes were made in the early eighteenth century. Tsar Peter the Great, born in 1672, came to power in 1682 and went on to carry out several reforms, including an alphabet reform. Peter wanted the Cyrillic characters to appear less Greek and more westernized. As a result, several Greek letters were eliminated and other letters that looked "too" Greek were replaced with Latin visual equivalents. Additionally, Peter the Great replaced the Cyrillic numbering system with Arabic numerals. The next major change to the alphabet came in 1918, following the Russian Revolution. -

Russian a Notes from Class Осень 2014 1 1St Week

Russian A Notes from class, Осень (Autumn) 2014. 1st Week — Первая неделя. Wednesday — среда́. Чита́ть до заня́тия (read before class): Introduction, Days 1-2 [alphabet, reading cognates and familiar Russian words, writing your name] We will hit the ground running as we embark on a challenging and fun year-long adventure in Russian. The first step is learning the Cyrillic alphabet, which you need to master as quickly as you can. Don't be afraid, learning Cyrillic is not difficult! In order to assist you, there is a website with instruction in reading and writing the Cyrillic alphabet at: http://languages.uchicago.edu/NativeHand/CyrillicQuickly/ There are video clips of letters and words being written in cursive at this site (note that the site is not fully fleshed out and there are no audio files on these pages). If you join class after Wednesday, this site will help you get caught up with the alphabet. Don't worry, the alphabet is not scary. You can learn the basic sounds of all the letters on day one and you'll start to be comfortable reading and writing by the end of the first week of classes. For tomorrow (Thursday), read the Introduction chapter of the textbook and complete the exercises on learning the Cyrillic alphabet at the beginning of the workbook (Introduction, pp. 1-8 for Thursday and 9-22 for Friday) and read Unit 1: Days 1-3 for Friday (the books are divided into Units and "Days"; the homework will always be clear based on what "days" we are covering in class; a day in our class will always cover more than one "day" in the textbook) in the textbook and complete the corresponding exercises on pp.