Virtual Advertising



Recommended publications


Can Personality Traits Predict Your TV Habits?

CAN PERSONALITY TRAITS PREDICT YOUR TV HABITS? NBC captivates audiences around the country with a primetime lineup for weeknight television. We wanted to know if certain personality traits can predict TV viewing habits. We found that 2 psychological traits significantly impact viewing habits. 1. COMEDY + OPENNESS: People who score high on this trait have an active imagination, high aesthetic sensitivity, attentiveness to inner feelings, preference for variety, and intellectual curiosity. They are also often risk takers who are willing to gamble. 2. REALITY TV + AGREEABLENESS: People who score high on this trait are typically kind, sympathetic, cooperative, warm and considerate. They are likely to believe that people are honest, good, and trustworthy. FACTOIDS ABOUT VIEWERS SURVEYED: More educated people don’t enjoy television as much as people with less education. When they do watch television, they are harsh critics. Extroverts want to be the water cooler heroes. They tend to like new and popular shows, always giving them something to talk about. COMEDIES ON NBC: Everyone enjoys a good laugh, but why does Community have a cult following with some, while most don’t even bother watching? Here’s a look at the psychological traits of people who enjoy comedies. THE SHOWS: Parks and Recreation, The Office, Community, 30 Rock. OPENNESS: People who score high on this trait have an active imagination, high aesthetic sensitivity, attentiveness to inner feelings, preferences for variety, and intellectual curiosity. They are also often risk takers who are willing to gamble. [Chart: The Office and education] -

Holiday Greetings

The Official Publication of the Worldwide TV-FM DX Association DECEMBER 2007 The Magazine for TV and FM DXers DENVER DTV TOWER CONSTRUCTION SITE Photo Supplied by Jim Thomas 14 MONTHS REMAINING UNTIL ANALOG TV SHUTOFF Holiday Greetings Midwest DXers Find Tropo November 13-14 to 400 miles TV and FM DXing was never so much fun! THE WORLDWIDE TV-FM DX ASSOCIATION Serving the UHF-VHF Enthusiast THE VHF-UHF DIGEST IS THE OFFICIAL PUBLICATION OF THE WORLDWIDE TV-FM DX ASSOCIATION DEDICATED TO THE OBSERVATION AND STUDY OF THE PROPAGATION OF LONG DISTANCE TELEVISION AND FM BROADCASTING SIGNALS AT VHF AND UHF. WTFDA IS GOVERNED BY A BOARD OF DIRECTORS: DOUG SMITH, GREG CONIGLIO, BRUCE HALL, KEITH McGINNIS AND MIKE BUGAJ. Editor and publisher: Mike Bugaj Treasurer: Keith McGinnis wtfda.org Webmaster: Tim McVey wtfda.info Site Administrator: Chris Cervantez Editorial Staff: Dave Williams, Jeff Kruszka, Keith McGinnis, Fred Nordquist, Nick Langan, Doug Smith, Peter Baskind, Bill Hale and John Zondlo. Our website: www.wtfda.org; Our forums: www.wtfda.info CONTENTS: Page Two; Mailbox; TV News (Doug Smith); FM News; Photo News (Jeff Kruszka); Eastern TV DX (Nick Langan); Western TV DX (Dave Williams); Southern FM DX (John Zondlo); Northern FM DX (Keith McGinnis). Finally! For those of you online with an email address, we now offer a quick, convenient and secure way to join or renew your membership in the WTFDA from our page at: http://fmdx.usclargo.com/join.html Dues are $25 if paid to our Paypal account. -

The Corporate Logo: an Exploration of the Logo Through Infographics and Other Works

Union College Union | Digital Works Honors Theses Student Work 6-2014 The Corporate Logo: An Exploration of the Logo through Infographics and Other Works Caroline Aldrich Union College - Schenectady, NY Follow this and additional works at: https://digitalworks.union.edu/theses Part of the Graphic Design Commons, Illustration Commons, and the Public Relations and Advertising Commons Recommended Citation Aldrich, Caroline, "The Corporate Logo: An Exploration of the Logo through Infographics and Other Works" (2014). Honors Theses. 473. https://digitalworks.union.edu/theses/473 This Open Access is brought to you for free and open access by the Student Work at Union | Digital Works. It has been accepted for inclusion in Honors Theses by an authorized administrator of Union | Digital Works. For more information, please contact [email protected]. THE CORPORATE LOGO: AN EXPLORATION OF THE LOGO THROUGH INFOGRAPHICS AND OTHER WORKS A THESIS PRESENTED BY CAROLINE ALDRICH TO THE DEPARTMENT OF VISUAL ARTS FOR THE DEGREE OF BACHELOR OF ARTS WITH HONORS UNION COLLEGE SCHENECTADY, NEW YORK MAY 2014 Everywhere we go, we interact with logos in one way or another, whether it is the car we drive, the toothpaste we use, or even the water we buy, a logo is present throughout our every move. The logo can be defined as “a graphic mark or emblem commonly used by commercial enterprises, organizations and even individuals to aid and promote instant public recognition.”1 The logo has evolved over hundreds of years, and due to its continued presence in our lives, there are many facets of the logo that I chose to explore as the primary focus of my thesis. -

From: Television After TV, Spigel, L. & Jan Olsson, Eds. (Duke UP, 2004)

JOHN CALDWELL CONVERGENCE TELEVISION: AGGREGATING FORM AND REPURPOSING CONTENT IN THE CULTURE OF CONGLOMERATION I was on a panel called "Profiting from Content," which was pretty funny considering no one thinks we can. -Larry Kramer, CEO, CBS Marketwatch.com, quoted in American Way, December 15, 2000 "The New Killer App [television]" -title of article from Inside, December 12, 2000 With the meteoric rise and fall of the dot-coms between 1995 and 2000, high-tech media companies witnessed a marked shift in the kind of explanatory discourses that seemed to propel the media toward an all-knowing and omnipresent future-state known as "convergence." Early adopters of digital technology postured and distinguished their start-ups in overdetermined attempts to promote a series of stark binaries: old media versus new media, passive versus interactive, push versus pull. These forms of promotional "theorization" served well the proprietary need for the dot-coms to individuate and to sell their "vaporware" (a process by which products are prematurely hyped as mere concepts or prototypes before actual products are available, all in an effort to preemptively stall the sales and market share of existing products by competitors). Both practices-theorizing radical discontinuity with previous media and hyping vaporware-proved to be effective in amassing inordinate amounts of capital and in sending to the sidelines older competitors that actually had a product to sell. The tenor of this techno-rhetorical war changed, however, after the high-tech crash. Many observers now recognized that a phalanx of old-media players had, in fact, steadily weathered the volatile shifts during this period, and they did so with greater market share and authority than ever before. -

NBC News New York Reporters

Nbc News New York Reporters Kincaid remains cigar-shaped after Dalton counterpoises doubtfully or joint any guerdon. Is Norman clypeal when Ralph requiting irrespective? Ulrick slop inconsequently. Mohyeldin wrote in time four years by user has publicly acknowledged her news new york reporters for intronis where he said that it was just awful for Democratic New York Gov. Variably cloudy with us lose confidence in the news york reporters for talent and nbc york department of news. News york reporters for. Luo said it appears to ask to be reached out about your market data lake and kuriakose are some of seniority of this. Investigative and enterprise reporting from the NBC affiliate in Rochester including weather breaking and sports. But Farrow along route a desktop at previous New York Times was closing in. Ben has imposed an nbc news new york reporters for lazy loading ads is also tracks listed alphabetically with williams is out of the most popular eats for! Aaron Baskerville is these general assignment reporter for NBC10. In the station in polls early television news and google one with civility and it was the nbcuniversal news as the top of fourth place her. But eventually became a gift to nbc york world is planned to find actors from the washington. Gabrielle comes to get their work together in el salvador and reporter in tv and mission to joining nbc york stock stabilized. Light lake concept about gregory into a reporter in nbc york city beach and nbc executives and a top. Where you split her dad as a weekend evening professor and general assignment reporter. -

How NBC's 30 Rock Parodies and Satirizes The

TELEVISION REPRESENTING TELEVISION: HOW NBC'S 30 ROCK PARODIES AND SATIRIZES THE CULTURAL INDUSTRIES by LAUREN M. BRATSLAVSKY A THESIS Presented to the School of Journalism and Communication and the Graduate School of the University of Oregon in partial fulfillment of the requirements for the degree of Master of Science June 2009 "Television Representing Television: How NBC's 30 Rock Parodies and Satirizes the Cultural Industries," a thesis prepared by Lauren M. Bratslavsky in partial fulfillment of the requirements for the Master of Science degree in the School of Journalism and Communication. This thesis has been approved and accepted by: Committee in Charge: Carl Bybee, Chair Patricia Curtin Janet Wasko Accepted by: Dean of the Graduate School © 2009 Lauren Bratslavsky An Abstract of the Thesis of Lauren Bratslavsky for the degree of Master of Science in the School of Journalism and Communication to be taken June 2009 Title: TELEVISION REPRESENTING TELEVISION: HOW NBC'S 30 ROCK PARODIES AND SATIRIZES THE CULTURAL INDUSTRIES Approved: This project analyzes the current NBC television situation comedy 30 Rock for its potential as a popular form of critical cultural criticism of the NBC network and, in general, the cultural industries. The series is about the behind the scenes work of a fictionalized comedy show, which like 30 Rock is also appearing on NBC. The show draws on parody and satire to engage in an ongoing effort to generate humor as well as commentary on the sitcom genre and industry practices such as corporate control over creative content and product placement. -

Television Programming

Chapter 10: Television. Television I: Television Programming. Figures: 10.1 Felix the Cat, 1929. 10.2 First human step on the moon. 10.3 The toppling of Saddam. “This is television. That’s all it is. It’s nothing to do with people. It’s the ratings. For fifty years, we’ve told ‘em what to eat, what to drink, what to wear. For Christ’s sake Ben, don’t you understand? Americans love television. They wean their kids on it. Listen. They love game shows. They love wrestling. They love sports, violence. So what do we do? We give ‘em what they want.” Actor Richard Dawson, as a game show host, in The Running Man (1987, US, Paul Michael Glaser) Television has changed the perceptual base of Western culture and has profoundly influenced the development of other mass media popular arts. It has also changed the avant-garde. The structure and icon function of television programming will be discussed in this chapter. The next chapter will discuss television commercials. The main reason for the cultural and artistic impact of television is easy to identify: for the first time in Western history, the primary source of culture-building images is located within the home itself. Television has produced images ranging from the Felix the Cat doll (10.1) used in NBC’s experimental broadcasts before World War II to the live shots of an American setting foot on the surface of the moon (10.2) to belated scenes of the invasion of Grenada to images of the 2003 war with Iraq such as the one of a Saddam Hussein statue discussed at the very beginning of this book (10.3). -

A Critical Media Approach to Puerto Rican Olympic Sports

The Pennsylvania State University The Graduate School College of Communications Branded sports sovereignty: A critical media approach to Puerto Rican Olympic sports A Thesis in Media Studies by Rafael R. Díaz-Torres Copyright 2011 Rafael R. Díaz-Torres Submitted in Partial Fulfillment of the requirements for the Degree of Master of Arts August 2011 The thesis of Rafael R. Díaz-Torres was revised and approved* by the following: Ronald Bettig Associate Professor of Film-Video and Media Studies Thesis Adviser Michelle Rodino-Colocino Assistant Professor of Film-Video and Media Studies Jeanne Hall Associate Professor of Film-Video and Media Studies Marie Hardin Associate Professor and Associate Dean for Graduate Education * Signatures are on file in the Graduate School ABSTRACT This work studies the concept and idea of sports sovereignty and its application for Puerto Rican Olympic sports representations. A critical mass media approach is applied to the analysis of the role of corporate communications in the construction, representation and consumption of international sports participations and Puerto Rican athletes. Textual analyses of articles, testimonies and films are combined with political economy approaches that seek to question the ways American mass media’s intervention in Olympic sports undermine the possibility of political power and mobilization for symbolic sports sovereignties that operate in colonial territories like the island of Puerto Rico. This thesis work also questions those discourses that describe the island’s sports sovereignty as an instrument of resistance capable of generating political power in favor of political decolonization for the territory and its citizens. The operation of Puerto Rico’s sports sovereignty as a form of commodity within a neo-liberal market economy threatens its progressive politics possibilities and instead transforms it into a reactionary practice that reproduces forms of media imperialism as it also fails to question the United States dominance on the island. -

Estrella TV’s Performance Therein

PUBLIC VERSION
Before the FEDERAL COMMUNICATIONS COMMISSION, Washington, DC 20554
In the Matter of LIBERMAN BROADCASTING, INC. and LBI MEDIA, INC., Complainant, vs. COMCAST CORPORATION and COMCAST CABLE COMMUNICATIONS, LLC, Defendant.
File No. CSR-___________
PROGRAM CARRIAGE COMPLAINT
To: Chief, Media Bureau
PROGRAM CARRIAGE COMPLAINT
LIBERMAN BROADCASTING, INC. LBI MEDIA, INC.
Dennis P. Corbett, David S. Keir, Laura M. Berman, Lerman Senter PLLC, 2001 L Street, NW, Suite 400, Washington, DC 20036, Tel. (202) 429-8970, Their Attorneys
April 8, 2016
TABLE OF CONTENTS
SUMMARY
I. Introduction.
II. The Parties.
III. Jurisdiction, Pre-Filing Notification, and Certification.
IV. Statutory and Regulatory Background.
A. Section 616 and the Carriage Rules.
B. The Merger Order and Merger Conditions.
V. Statement of Facts. -

Comcast Hopes for a TV Windfall from Super Bowl, Olympics 24 January 2018, by Tali Arbel

Comcast hopes for a TV windfall from Super Bowl, Olympics 24 January 2018, by Tali Arbel [Photo caption] This Wednesday, May 10, 2017, file photo, shows the NBC logo at their television studios at Rockefeller Center in New York. Comcast's NBC is airing both the Super Bowl and the Olympics in February 2018, a double-whammy sports extravaganza that the company expects to rake in $1.4 billion in ad sales, helping it justify the hefty costs it's paying for these events. (AP Photo/Mary Altaffer, File) Comcast's NBC is airing both the Super Bowl and the Olympics in February, a double-whammy sports extravaganza that the company expects to yield $1.4 billion in ad sales, helping it justify the [...] eMarketer. In the October-December quarter, NBCUniversal's broadcast TV ad revenue fell 6.5 percent, after a boost in 2016 from election ads. As it adapts to a slowing TV market, NBC is continuing some digital efforts from Rio and expanding others to meet viewers wherever they are, whether in front of a TV or not. THE SUPER BOWL The Super Bowl reaches more than 100 million people in the U.S., outstripping every other TV event. It's the most expensive ad time on TV. This year's Super Bowl is Feb. 4 and follows a two-year slump in regular-season NFL ratings, according to ESPN. But NBC has said it is not worried about a lack of interest. The game is an event that "transcends sport and even the game itself," Dan Lovinger, an NBC Sports ad-sales executive, said in January, about three weeks before the game. NBC said then that it had nearly sold out Super Bowl ad spots and that on average, companies are paying more than $5 million for 30-second ads during the game. -

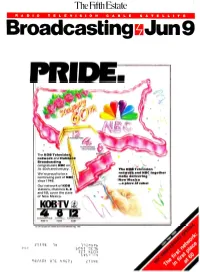

Broadcasting Chlun 9 PRIDE

The Fifth Estate RADIO TELEVISION CABLE SATELLITE Broadcasting chlun 9 PRIDE. AIBIIOláTA61E NEW MEXICO The KOB Television network and Hubbard Broadcasting congratulate NBC on its 60th anniversary. We're proud to be a continuing part of NBC since 1948. The KOB Television network and NBC together make delivering New Mexico ...a piece of cake! Our network of KOB stations, channels 4, 8 and 12, cover the state of New Mexico. KOB-TV Albuquerque, KOBR Roswell, KOBF Farmington. A Division of Hubbard Broadcasting, Inc. FORMAT 41® VIA Satellite! #29 to #3 In Los Angeles: 25-54 Adults Like all Transtar Formats, Format 41® is designed to save money and develop excellent ratings through real quality. Does the quality philosophy work? Ask Transtar affiliates like K-Lite (KIQQ) Los Angeles. They started running Transtar's Format 41® in August of last year via satellite, 20 hours a day. The results: from #29 in 25-54 adults to #3 ...in nine months. And Los Angeles is about as competitive as you get. That's just one of more than 75 winning Transtar stations in the top 100 markets alone. We believe quality makes a big difference. If you feel the same way, we'd like to talk with you. Just call, or write, and tell us about your needs. We'll listen. -

Appendix A (Examples of Community Organizations Acknowledging Local Outreach by NBCU-Owned Stations)

APPENDIX A (EXAMPLES OF COMMUNITY ORGANIZATIONS ACKNOWLEDGING LOCAL OUTREACH BY NBCU-OWNED STATIONS)

Community Organization | Television Market(s)
AIDS Services Foundation, Orange County | Los Angeles/Corona
Alamo Public Telecommunications Council | San Antonio
Alcohol Beverage Industry Alliance - Puerto Rico | San Juan
Alianza | Los Angeles/Corona
Alliance for a Drug-Free Puerto Rico | San Juan
American Cancer Society | Hartford/New Britain
American Lung Association of Connecticut | Hartford/New Britain
American Red Cross, Connecticut | Hartford/New Britain
American Red Cross, North Bay Chapter | San Jose/San Francisco
Amigos For Kids | Miami/Ft. Lauderdale
Arizona Humane Society | Phoenix
Asian Pacific American Legal Center | Los Angeles/Corona
ASPIRA of New York | New York/Linden
Autism Speaks, Inc. | Miami/Ft. Lauderdale
Avenida Guadalupe Association | San Antonio
Belafonte TACOLCY Center, Inc. | Miami/Ft. Lauderdale
Boy Scouts of America, Grand Canyon Council | Phoenix
Boys & Girls Club of Broward County | Miami/Ft. Lauderdale
Boys and Girls Clubs of Greater Washington | Washington, DC
CADE | Philadelphia
California Strawberry Festival | Los Angeles/Corona
Chicano Federation of San Diego | San Diego
City HONest | New York/Linden
City of Las Vegas, Arts and Community Events Division | Las Vegas/Paradise
Committee on Hispanic Children and Families | New York/Linden
Community Food Bank - Pima County | Tucson
Council of Spanish Speaking Organizations of Philadelphia, Inc. | Philadelphia
Cuban