Parallel Ordered Sets Using Join

Recommended publications

Interval Trees Storing and Searching Intervals

• Instead of points, suppose you want to keep track of axis-aligned segments.
• Range queries: return all segments that have any part of them inside the rectangle.
• Motivation: wiring diagrams, genes on genomes.

Simpler Problem: 1-d intervals

• Segments with at least one endpoint in the rectangle can be found by building a 2d range tree on the 2n endpoints.
  - Keep a pointer from each endpoint stored in the tree to its segment.
  - Mark segments as you output them, so that you don't output contained segments twice.
• Segments with no endpoints in range are the harder part.
  - Consider just horizontal segments.
  - They must cross a vertical side of the region.
  - This leads to a subproblem: given a vertical line, find the segments that it crosses.
  - (The y-coords become irrelevant for this subproblem.)

Interval Trees

[Figure: a query line crossing a set of intervals.]

Recursively build a tree on interval set S as follows:

• Sort the 2n endpoints.
• Let xmid be the median point.
• Store the intervals that cross xmid in node N.
• Store the intervals that are completely to the left of xmid in Nleft, and the intervals that are completely to the right of xmid in Nright.

[Figure: another view of interval trees.]

Interval Trees, continued

• The tree will be approximately balanced: by choosing the median, we split the set of endpoints in half each time.
  - Depth is O(log n).
• Have to store xmid with each node.
• Uses O(n) storage:
  - each interval is stored once, plus
  - fewer than n nodes (each node contains at least one interval).
• Can be built in O(n log n) time.
• Can be searched in O(log n + k) time [k = #
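The recursive construction described in this excerpt can be sketched in Python as follows. This is a minimal illustration, not code from the slides: the names `Node`, `build`, and `stab` are hypothetical, intervals are assumed to be closed `(lo, hi)` pairs, and each node keeps its crossing intervals in two sorted orders so that a stabbing query can stop scanning as soon as an interval misses the query point.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Interval = Tuple[float, float]  # closed interval (lo, hi); an assumption of this sketch


@dataclass
class Node:
    xmid: float
    by_lo: List[Interval]           # intervals crossing xmid, left endpoint ascending
    by_hi: List[Interval]           # same intervals, right endpoint descending
    left: Optional["Node"] = None   # intervals completely to the left of xmid
    right: Optional["Node"] = None  # intervals completely to the right of xmid


def build(intervals: List[Interval]) -> Optional[Node]:
    """Recursively build the tree by splitting at the median endpoint."""
    if not intervals:
        return None
    endpoints = sorted(p for iv in intervals for p in iv)
    xmid = endpoints[len(endpoints) // 2]
    here = [iv for iv in intervals if iv[0] <= xmid <= iv[1]]
    lefts = [iv for iv in intervals if iv[1] < xmid]
    rights = [iv for iv in intervals if iv[0] > xmid]
    return Node(
        xmid,
        sorted(here),
        sorted(here, key=lambda iv: -iv[1]),
        build(lefts),
        build(rights),
    )


def stab(node: Optional[Node], x: float) -> List[Interval]:
    """Return every stored interval that contains the query value x."""
    out: List[Interval] = []
    while node is not None:
        if x < node.xmid:
            # Intervals here all reach xmid, so they contain x iff lo <= x.
            for iv in node.by_lo:
                if iv[0] > x:
                    break
                out.append(iv)
            node = node.left
        elif x > node.xmid:
            # Symmetric case: they contain x iff hi >= x.
            for iv in node.by_hi:
                if iv[1] < x:
                    break
                out.append(iv)
            node = node.right
        else:
            out.extend(node.by_lo)  # x == xmid: every interval here contains x
            break
    return out


tree = build([(1, 5), (2, 3), (4, 8), (6, 7), (9, 10)])
hits = stab(tree, 4.5)
```

Because the median is itself an endpoint of some interval, every node receives at least one interval, which is why the recursion terminates and the node count stays below n. The filtering in `build` is written for clarity rather than for the O(n log n) construction bound.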

Augmentation: Range Trees (PDF)

Lecture 9 Augmentation, 6.046J Spring 2015

Lecture 9: Augmentation

This lecture covers augmentation of data structures, including

• easy tree augmentation
• order-statistics trees
• finger search trees, and
• range trees

The main idea is to modify "off-the-shelf" common data structures to store (and update) additional information.

Easy Tree Augmentation

The goal here is to store x.f at each node x, which is a function of the node, namely f(subtree rooted at x). Suppose x.f can be computed (updated) in O(1) time from x, children and children.f. Then modifying a set S of nodes costs O(# of ancestors of S) to update x.f, because we need to walk up the tree to the root. Two examples of O(lg n) updates are

• AVL trees: after rotating two nodes, first update the new bottom node and then update the new top node
• 2-3 trees: after splitting a node, update the two new nodes.
• In both cases, then update up the tree.

Order-Statistics Trees (from 6.006)

The goal of order-statistics trees is to design an Abstract Data Type (ADT) interface that supports the following operations

• insert(x), delete(x), successor(x),
• rank(x): find x's index in the sorted order, i.e., # of elements < x,
• select(i): find the element with rank i.

We can implement the above ADT using easy tree augmentation on AVL trees (or 2-3 trees) to store subtree size: f(subtree) = # of nodes in it. Then we also have x.size = 1 + Σ c.size for c in x.children.
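As an illustration of the size augmentation, here is a minimal Python sketch of `rank` and `select`. It is an assumption-laden simplification: it uses a plain, unbalanced binary search tree (the notes use AVL or 2-3 trees, which would make each operation O(lg n)), `rank` is 0-based as in the "# of elements < x" definition, and the names `Node`, `size`, `insert`, `rank`, and `select` are chosen for this example only.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None
        self.size = 1  # augmented field: number of nodes in this subtree


def size(node):
    return node.size if node is not None else 0


def insert(root, key):
    """Insert key and refresh the size field on the way back up to the root."""
    if root is None:
        return Node(key)
    if key < root.key:
        root.left = insert(root.left, key)
    else:
        root.right = insert(root.right, key)
    root.size = 1 + size(root.left) + size(root.right)
    return root


def rank(root, key):
    """Number of stored elements strictly smaller than key."""
    r = 0
    while root is not None:
        if key <= root.key:
            root = root.left
        else:
            r += size(root.left) + 1  # the whole left subtree and this node are smaller
            root = root.right
    return r


def select(root, i):
    """Element whose 0-based rank is i."""
    while root is not None:
        left_size = size(root.left)
        if i < left_size:
            root = root.left
        elif i == left_size:
            return root.key
        else:
            i -= left_size + 1
            root = root.right
    raise IndexError("rank out of range")


root = None
for k in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, k)
```

Both queries walk a single root-to-leaf path and use only the stored sizes, so their cost is proportional to the tree height. With a balanced tree, the rotations or splits would also have to recompute `size` bottom-up, as the excerpt describes.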

Advanced Data Structures

Advanced Data Structures, Peter Brass, City College of New York. Cambridge University Press: Cambridge, New York, Melbourne, Madrid, Cape Town, Singapore, São Paulo. Cambridge University Press, The Edinburgh Building, Cambridge CB2 8RU, UK. Published in the United States of America by Cambridge University Press, New York. www.cambridge.org. Information on this title: www.cambridge.org/9780521880374. © Peter Brass 2008. This publication is in copyright. Subject to statutory exception and to the provision of relevant collective licensing agreements, no reproduction of any part may take place without the written permission of Cambridge University Press. First published in print format 2008. ISBN-13 978-0-511-43685-7 eBook (EBL). ISBN-13 978-0-521-88037-4 hardback. Cambridge University Press has no responsibility for the persistence or accuracy of urls for external or third-party internet websites referred to in this publication, and does not guarantee that any content on such websites is, or will remain, accurate or appropriate.

Contents

Preface

1 Elementary Structures
1.1 Stack
1.2 Queue
1.3 Double-Ended Queue
1.4 Dynamical Allocation of Nodes
1.5 Shadow Copies of Array-Based Structures

2 Search Trees
2.1 Two Models of Search Trees
2.2 General Properties and Transformations
2.3 Height of a Search Tree
2.4 Basic Find, Insert, and Delete
2.5 Returning from Leaf to Root
2.6 Dealing with Nonunique Keys
2.7 Queries for the Keys in an Interval
2.8 Building Optimal Search Trees
2.9 Converting Trees into Lists
2.10

Search Trees

Lecture III Page 1 “Trees are the earth’s endless effort to speak to the listening heaven.” – Rabindranath Tagore, Fireflies, 1928 Alice was walking beside the White Knight in Looking Glass Land. ”You are sad.” the Knight said in an anxious tone: ”let me sing you a song to comfort you.” ”Is it very long?” Alice asked, for she had heard a good deal of poetry that day. ”It’s long.” said the Knight, ”but it’s very, very beautiful. Everybody that hears me sing it - either it brings tears to their eyes, or else -” ”Or else what?” said Alice, for the Knight had made a sudden pause. ”Or else it doesn’t, you know. The name of the song is called ’Haddocks’ Eyes.’” ”Oh, that’s the name of the song, is it?” Alice said, trying to feel interested. ”No, you don’t understand,” the Knight said, looking a little vexed. ”That’s what the name is called. The name really is ’The Aged, Aged Man.’” ”Then I ought to have said ’That’s what the song is called’?” Alice corrected herself. ”No you oughtn’t: that’s another thing. The song is called ’Ways and Means’ but that’s only what it’s called, you know!” ”Well, what is the song then?” said Alice, who was by this time completely bewildered. ”I was coming to that,” the Knight said. ”The song really is ’A-sitting On a Gate’: and the tune’s my own invention.” So saying, he stopped his horse and let the reins fall on its neck: then slowly beating time with one hand, and with a faint smile lighting up his gentle, foolish face, he began.. -

Computational Geometry: 1D Range Tree, 2D Range Tree, Line

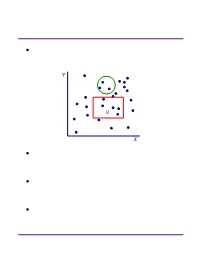

Computational Geometry, Lecture 17

Computational geometry

Algorithms for solving "geometric problems" in 2D and higher.

• Fundamental objects: point, line segment, line
• Basic structures: point set, polygon, triangulation, convex hull

Orthogonal range searching

Input: n points in d dimensions

• E.g., representing a database of n records, each with d numeric fields

Query: axis-aligned box (in 2D, a rectangle)

• Report on the points inside the box:
  - Are there any points?
  - How many are there?
  - List the points.

Goal: preprocess the points into a data structure to support fast queries

• Primary goal: static data structure
• In 1D, we will also obtain a dynamic data structure supporting insert and delete

1D range searching

In 1D, the query is an interval.

First solution using ideas we know:

• Interval trees
• Represent each point x by the interval [x, x].
• Obtain a dynamic structure that can list k answers in a query in O(k lg n) time.

Second solution using ideas we know:

• Sort the points and store them in an array.
• Solve the query by binary search on the endpoints.
• Obtain a static structure that can list k answers in a query in O(k + lg n) time.

Goal: obtain a dynamic structure that can list k answers in a query in O(k + lg n) time.
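The second solution (sorted array plus binary search on the query endpoints) can be sketched with Python's standard `bisect` module. The function name `range_query_1d` and the sample points are assumptions made for this illustration.

```python
from bisect import bisect_left, bisect_right


def range_query_1d(sorted_points, lo, hi):
    """List all points p with lo <= p <= hi from an already sorted list."""
    i = bisect_left(sorted_points, lo)   # first index with point >= lo
    j = bisect_right(sorted_points, hi)  # first index with point > hi
    return sorted_points[i:j]            # the k answers are contiguous


points = sorted([41, 3, 17, 8, 29, 11, 23])
inside = range_query_1d(points, 8, 23)
```

The two binary searches cost O(lg n) and the slice copies the k answers, which gives the O(k + lg n) bound for the static structure. Inserting into or deleting from the array costs O(n), which is why the lecture goes on to look for a dynamic structure with the same query time.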

Range Searching

Range Searching (Subhash Suri, UC Santa Barbara)

• Data structure for a set of objects (points, rectangles, polygons) for efficient range queries.
• Depends on the type of objects and queries. Consider basic data structures with broad applicability.
• Time-space tradeoff: the more we preprocess and store, the faster we can solve a query.
• Consider data structures with (nearly) linear space.

[Figure: a query rectangle Q in the X-Y plane.]

Orthogonal Range Searching

• Fix an n-point set P. It has 2^n subsets. How many are possible answers to geometric range queries?
• Efficiency comes from the fact that only a small fraction of subsets can be formed.
• Orthogonal range searching deals with point sets and axis-aligned rectangle queries.
• These generalize 1-dimensional sorting and searching, and the data structures are based on compositions of 1-dim structures.

[Figure: six numbered points in the X-Y plane. Some impossible rectangular ranges: (1,2,3), (1,4), (2,5,6). Range (1,5,6) is possible.]

1-Dimensional Search

• Points in 1D: P = {p1, p2, ..., pn}.
• Queries are intervals.
• If the range contains k points, we want to solve the problem in O(log n + k) time.
• Does hashing work? Why not?
• A sorted array achieves this bound. But it doesn't extend to higher dimensions.
• Instead, we use a balanced binary tree.

[Figure: points on a line (3, 7, 9, 21, 23, 25, 45, 70, 72, 100, 120) with a query interval.]

Tree Search

[Figure: balanced binary tree with internal keys 15; 7, 24; 3, 12, 20, 27; 1, 4, 9, 14, 17, 22, 25, 29 over the leaves 1 3 4 7 9 12 14 15 17 20 22 24 25 27 29 31; query xlo = 2, xhi = 23, with search paths ending at leaves u and v.]

• Build a balanced binary tree on the sorted list of points (keys).
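The tree search can be sketched in Python as below. This is a simplified variant rather than the structure drawn on the slide: keys are stored in every node instead of only in the leaves, and the names `Node`, `build`, and `report` are hypothetical. The key list and the query bounds 2 and 23 are the ones that appear in the tree-search figure data above.

```python
class Node:
    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right


def build(keys, lo=0, hi=None):
    """Build a balanced BST from a sorted list by splitting at the middle key."""
    if hi is None:
        hi = len(keys)
    if lo >= hi:
        return None
    mid = (lo + hi) // 2
    return Node(keys[mid], build(keys, lo, mid), build(keys, mid + 1, hi))


def report(node, xlo, xhi, out):
    """Append every key in [xlo, xhi] to out, in sorted order."""
    if node is None:
        return
    if xlo < node.key:            # the left subtree may still hold keys >= xlo
        report(node.left, xlo, xhi, out)
    if xlo <= node.key <= xhi:
        out.append(node.key)
    if node.key < xhi:            # the right subtree may still hold keys <= xhi
        report(node.right, xlo, xhi, out)


keys = [1, 3, 4, 7, 9, 12, 14, 15, 17, 20, 22, 24, 25, 27, 29, 31]
root = build(keys)
answer = []
report(root, 2, 23, answer)
```

Subtrees that lie entirely outside the query interval are never entered, so the search visits the two boundary paths plus the reported keys, in line with the O(log n + k) target stated in the excerpt.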

I/O-Efficient Spatial Data Structures for Range Queries

I/O-Efficient Spatial Data Structures for Range Queries. Lars Arge, Kasper Green Larsen. MADALGO, Department of Computer Science, Aarhus University, Denmark.

1 Introduction

Range reporting is one of the most fundamental topics in spatial databases and computational geometry. In this class of problems, the input consists of a set of geometric objects, such as points, line segments, rectangles, etc. The goal is to preprocess the input set into a data structure such that, given a query range, one can efficiently report all input objects intersecting the range. The ranges most commonly considered are axis-parallel rectangles, halfspaces, points, simplices and balls. In this survey, we focus on the planar orthogonal range reporting problem in the external memory model of Aggarwal and Vitter [2]. Here the input consists of a set of N points in the plane, and the goal is to support reporting all points inside an axis-parallel query rectangle. We use B to denote the disk block size in number of points. The cost of answering a query is measured in the number of I/Os performed, and the space of the data structure is measured in the number of disk blocks occupied; hence linear space is O(N/B) disk blocks.

Outline. In Section 2, we set out by reviewing the classic B-tree for solving one-dimensional orthogonal range reporting, i.e. given N points on the real line and a query interval q = [q1, q2], report all T points inside q. In Section 3 we present optimal solutions for planar orthogonal range reporting, and finally in Section 4, we briefly discuss related range searching problems.

Parallel Range, Segment and Rectangle Queries with Augmented Maps

Parallel Range, Segment and Rectangle Queries with Augmented Maps. Yihan Sun (Carnegie Mellon University), Guy E. Blelloch (Carnegie Mellon University).

Abstract

The range, segment and rectangle query problems are fundamental problems in computational geometry, and have extensive applications in many domains. Despite the significant theoretical work on these problems, efficient implementations can be complicated. We know of very few practical implementations of the algorithms in parallel, and most implementations do not have tight theoretical bounds. In this paper, we focus on simple and efficient parallel algorithms and implementations for range, segment and rectangle queries, which have tight worst-case bounds in theory and good parallel performance in practice. We propose to use a simple framework (the augmented map) to model the problem. Based on the augmented map interface, we develop both multi-level tree structures and sweepline algorithms supporting range, segment and rectangle queries in two dimensions. For the sweepline algorithms, we also propose a parallel paradigm and show corresponding cost bounds. All of our data structures are work-efficient to build in theory (O(n log n) sequential work) and achieve a low parallel depth (polylogarithmic for the multi-level tree structures, and O(n) for sweepline algorithms). The query time is almost linear in the output size. We have implemented all the data structures described in the paper using a parallel augmented map library. Based on the library, each data structure only requires about 100 lines of C++ code. We test their performance on large data sets (up to 10^8 elements) and a machine with 72 cores (144 hyperthreads).

Lecture Notes of CSCI5610 Advanced Data Structures

Lecture Notes of CSCI5610 Advanced Data Structures. Yufei Tao, Department of Computer Science and Engineering, Chinese University of Hong Kong. July 17, 2020.

Contents

1 Course Overview and Computation Models

2 The Binary Search Tree and the 2-3 Tree
2.1 The binary search tree
2.2 The 2-3 tree
2.3 Remarks

3 Structures for Intervals
3.1 The interval tree
3.2 The segment tree
3.3 Remarks

4 Structures for Points
4.1 The kd-tree
4.2 A bootstrapping lemma
4.3 The priority search tree
4.4 The range tree
4.5 Another range tree with better query time
4.6 Pointer-machine structures
4.7 Remarks

5 Logarithmic Method and Global Rebuilding
5.1 Amortized update cost
5.2 Decomposable problems
5.3 The logarithmic method
5.4 Fully dynamic kd-trees with global rebuilding
5.5 Remarks

6 Weight Balancing
6.1 BB[α]-trees
6.2 Insertion
6.3 Deletion
6.4 Amortized analysis
6.5 Dynamization with weight balancing
6.6 Remarks

7 Partial Persistence
7.1 The potential method
7.2 Partially persistent BST
7.3 General pointer-machine structures
7.4 Remarks

8 Dynamic Perfect Hashing
8.1 Two random graph results
8.2 Cuckoo hashing
8.3 Analysis
8.4 Remarks

9 Binomial and Fibonacci Heaps
9.1 The binomial heap

Uwaterloo Latex Thesis Template

In-Memory Storage for Labeled Tree-Structured Data, by Gelin Zhou. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Doctor of Philosophy in Computer Science. Waterloo, Ontario, Canada, 2017. © Gelin Zhou 2017.

Examining Committee Membership

The following served on the Examining Committee for this thesis. The decision of the Examining Committee is by majority vote.

• Johannes Fischer, External Examiner, Professor
• J. Ian Munro, Supervisor, Professor
• Meng He, Co-Supervisor, Assistant Professor
• Gordon V. Cormack, Internal Examiner, Professor
• Therese Biedl, Internal Examiner, Professor
• Gordon B. Agnew, Internal-external Examiner, Associate Professor

I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public.

Abstract

In this thesis, we design in-memory data structures for labeled and weighted trees, so that various types of path queries or operations can be supported with efficient query time. We assume the word RAM model with word size w, which permits random accesses to w-bit memory cells. Our data structures are space-efficient and many of them are even succinct. These succinct data structures occupy space close to the information-theoretic lower bounds of the input trees within lower order terms. First, we study the problems of supporting various path queries over weighted trees. A path counting query asks for the number of nodes on a query path whose weights lie within a query range, while a path reporting query requires these nodes to be reported.

Adaptive and Approximate Orthogonal Range Counting∗

Adaptive and Approximate Orthogonal Range Counting. Timothy M. Chan, Bryan T. Wilkinson.

Abstract

We present three new results on one of the most basic problems in geometric data structures, 2-D orthogonal range counting. All the results are in the w-bit word RAM model.

• It is well known that there are linear-space data structures for 2-D orthogonal range counting with worst-case optimal query time O(log_w n). We give an O(n log log n)-space adaptive data structure that improves the query time to O(log log n + log_w k), where k is the output count. When k = O(1), our bounds match the state of the art for the 2-D orthogonal range emptiness problem [Chan, Larsen, and Pătraşcu, SoCG 2011].
• We give an O(n log log n)-space data structure for approximate 2-D orthogonal range counting that can com-

The model we use in this paper is the standard w-bit word RAM [15]. In this model, the coordinates of the points of P and the vertices of Q are taken from a fixed-size universe [U] = {1, ..., U}. Furthermore, operations on single words take constant time, and it is assumed that w = Ω(log U) and w = Ω(log n) so that any coordinate or index into the input array fits in a single word. Bounds on the space requirements of data structures are given in words, unless stated otherwise. We will assume that U = O(n), since we can operate in rank space [16] (i.e., replace coordinates of points with their ranks), at the expense of performing an initial predecessor search during each query.

Range Trees and Priority Search Trees, Hanan Samet

RANGE TREES AND PRIORITY SEARCH TREES. Hanan Samet, Computer Science Department and Center for Automation Research and Institute for Advanced Computer Studies, University of Maryland, College Park, Maryland 20742. Copyright © 1998 Hanan Samet. These notes may not be reproduced by any means (mechanical or electronic or any other) without the express written permission of Hanan Samet.

RANGE TREES

• Balanced binary search tree
• All data stored in the leaf nodes
• Leaf nodes linked in sorted order by a doubly-linked list
• Searching for [B : E]
  1. find node with smallest value ≥ B or largest ≤ B
  2. follow links until reach node with value > E
• O(log2 N + F) time to search, O(N · log2 N) to build, and O(N) space for N points and F answers
• Ex: sort points in 2-d on their x coordinate value

[Figure: 1-d range tree on x with root 55, second level 30 and 83, third level 15, 43, 70, 88, and leaves 5, 25, 35, 50, 60, 80, 85, 90 holding Denver (5,45), Omaha (25,35), Chicago (35,40), Mobile (50,10), Toronto (60,75), Buffalo (80,65), Atlanta (85,15), Miami (90,5).]

2-D RANGE TREES

• Binary tree of binary trees
• Sort all points along one dimension (say x) and store them in the leaf nodes of a balanced binary tree such as a range tree (single line)
• Each nonleaf node contains a 1-d range tree of the points in its subtrees sorted along y (double lines)
• Ex:

[Figure: 2-d range tree for the eight points above; each nonleaf node of the x tree points to a 1-d range tree in y over the points in its subtrees.]

• Actually, don't need the 1-d range tree in y at the root and at the sons of the root

SEARCHING 2-D RANGE TREES ([BX:EX],[BY:EY]) 1.
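The "binary tree of binary trees" idea can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not Samet's structure verbatim: each node of the x tree stores its points in a list sorted by y (standing in for the 1-d range tree in y), the build re-sorts at every node for brevity, and the names `XNode`, `build`, and `query` are hypothetical. The points are the eight city coordinates listed in the excerpt.

```python
from bisect import bisect_left, bisect_right


class XNode:
    """Node of the x tree; by_y plays the role of the 1-d range tree in y."""

    def __init__(self, pts_by_x):
        self.xmin = pts_by_x[0][0]
        self.xmax = pts_by_x[-1][0]
        self.by_y = sorted(pts_by_x, key=lambda p: p[1])
        self.ys = [p[1] for p in self.by_y]
        if len(pts_by_x) == 1:
            self.left = self.right = None
        else:
            mid = len(pts_by_x) // 2
            self.left = XNode(pts_by_x[:mid])
            self.right = XNode(pts_by_x[mid:])


def build(points):
    """Build the tree from a non-empty list of (x, y) points."""
    return XNode(sorted(points))


def query(node, bx, ex, by, ey, out):
    """Append to out every point with bx <= x <= ex and by <= y <= ey."""
    if node is None or node.xmax < bx or node.xmin > ex:
        return  # x range of this subtree misses the query entirely
    if bx <= node.xmin and node.xmax <= ex:
        # Subtree lies fully inside [bx, ex]: answer from its y-sorted list.
        i = bisect_left(node.ys, by)
        j = bisect_right(node.ys, ey)
        out.extend(node.by_y[i:j])
        return
    query(node.left, bx, ex, by, ey, out)
    query(node.right, bx, ex, by, ey, out)


cities = [(5, 45), (25, 35), (35, 40), (50, 10),
          (60, 75), (80, 65), (85, 15), (90, 5)]
tree = build(cities)
found = []
query(tree, 25, 85, 10, 40, found)
```

A query touches O(log N) subtrees that lie fully inside the x range and does one binary search in y for each, so the search cost is O(log² N + F) for F answers. Sorting at every node makes this build slower than necessary; merging the children's y-sorted lists instead would avoid the repeated sorts.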