Development of an Android Based Performance Assessment System for Motivational Interviewing Training

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

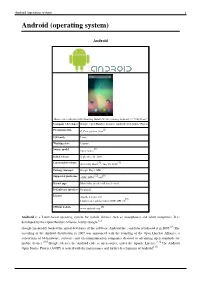

Android (Operating System)

Android Home screen displayed by Samsung Nexus S with Google running Android 2.3 "Gingerbread" Company / developer Google Inc., Open Handset Alliance [1] Programmed in C (core), C++ (some third-party libraries), Java (UI) Working state Current [2] Source model Free and open source software (3.0 is currently in closed development) Initial release 21 October 2008 Latest stable release Tablets: [3] 3.0.1 (Honeycomb) Phones: [3] 2.3.3 (Gingerbread) / 24 February 2011 [4] Supported platforms ARM, MIPS, Power, x86 Kernel type Monolithic, modified Linux kernel Default user interface Graphical [5] License Apache 2.0, Linux kernel patches are under GPL v2 Official website www.android.com

Android is a software stack for mobile devices that includes an operating system, middleware and key applications.[6] [7] Google Inc. purchased the initial developer of the software, Android Inc., in 2005.[8] Android's mobile operating system is based on a modified version of the Linux kernel. Google and other members of the Open Handset Alliance collaborated on Android's development and release.[9] [10] The Android Open Source Project (AOSP) is tasked with the maintenance and further development of Android.[11] The Android operating system is the world's best-selling smartphone platform.[12] [13] Android has a large community of developers writing applications ("apps") that extend the functionality of the devices. There are currently over 150,000 apps available for Android.[14] [15] Android Market is the online app store run by Google, though apps can also be downloaded from third-party sites.

Android Programming Introduction

Android Programming Introduction. Riya Jacob K, Dept of BCA, 2020 - 21.

Modules (5): Module 1: Introduction & installation of Android (12 T + 3 L). Module 2: Understanding Android Resources (12 T + 3 L). Module 3: UI development in Android (12 T + 3 L). Module 4: Android Menus (12 T + 3 L). Module 5: Data storing & file saving concept (12 T + 3 L).

Module 1: History of Android; Android software stack; Developing end user application using android SDK; Android java packages; Setting up the development environment; Installing android development tools (ADT); Fundamental components; Android virtual devices; Running on real device; Structure of android application; Application life cycle.

Introduction: Android is an open source and Linux-based Operating System for mobile devices such as smartphones and tablet computers. Android was developed by the Open Handset Alliance, led by Google, and other companies. Android offers a unified approach to application development for mobile devices, which means developers need only develop for Android, and their applications should be able to run on different devices powered by Android. The first beta version of the Android Software Development Kit (SDK) was released by Google in 2007, whereas the first commercial version, Android 1.0, was released in September 2008. On June 27, 2012, at the Google I/O conference, Google announced the next Android version, 4.1 Jelly Bean. Jelly Bean is an incremental update, with the primary aim of improving the user interface, both in terms of functionality and performance. The source code for Android is available under free and open source software licenses. Google publishes most of the code under the Apache License version 2.0 and the rest, Linux kernel changes, under the GNU General Public License version 2.

2 XI November 2014

www.ijraset.com Volume 2 Issue XI, November 2014 ISSN: 2321-9653 International Journal for Research in Applied Science & Engineering Technology (IJRASET) Android Operating System. Birender Singh Rawat, Brijesh Aggarwal, Dishant Passi, Computer Science Department, Dronacharya College of Engineering.

Abstract: The Android operating system is basically an operating system for mobiles and is rapidly gaining market share, with dozens of smartphones and tablets either released or set to be released. It is a mobile operating system that uses a modified version of the Linux kernel 2.6. Google developed Android as part of the Open Handset Alliance, a group of more than 30 mobile and technology companies working to open up the mobile handset environment. Android's development kit supports many of the standard packages used by Jetty; because of this, and because of Jetty's modularity and lightweight footprint, it was possible to port Jetty so that it runs on the Android platform.

Keywords: android, operating system, alliance, Google, Linux

I. INTRODUCTION Android is a software platform and operating system for mobile devices, based on the Linux kernel, and developed by Google and later the Open Handset Alliance. It allows developers to write managed code in the Java language, controlling the device via Google-developed Java libraries. Android is available as open source. Android is a freely downloadable open source software stack for mobile devices that includes an operating system, middleware and key applications based on Linux and Java. Google purchased the developer of Android in 2005, and Android was unveiled in 2007.

Android (Operating System)

Android Home screen displayed by Samsung Galaxy Nexus, running Android 4.1 "Jelly Bean" Company / developer Google, Open Handset Alliance, Android Open Source Project [1] Programmed in C, C++, Python, Java OS family Linux Working state Current [2] Source model Open source Initial release September 20, 2008 [3] [4] Latest stable release 4.1.1 Jelly Bean / July 10, 2012 Package manager Google Play / APK [5] [6] Supported platforms ARM, MIPS, x86 Kernel type Monolithic (modified Linux kernel) Default user interface Graphical License Apache License 2.0 [7] Linux kernel patches under GNU GPL v2 [8] Official website www.android.com

Android is a Linux-based operating system for mobile devices such as smartphones and tablet computers. It is developed by the Open Handset Alliance, led by Google.[2] Google financially backed the initial developer of the software, Android Inc., and later purchased it in 2005.[9] The unveiling of the Android distribution in 2007 was announced with the founding of the Open Handset Alliance, a consortium of 86 hardware, software, and telecommunication companies devoted to advancing open standards for mobile devices.[10] Google releases the Android code as open-source, under the Apache License.[11] The Android Open Source Project (AOSP) is tasked with the maintenance and further development of Android.[12] Android has a large community of developers writing applications ("apps") that extend the functionality of the devices. Developers write primarily in a customized version of Java.[13] Apps can be downloaded from third-party sites or through online stores such as Google Play (formerly Android Market), the app store run by Google.

Android (Operating System) 10.1 Introduction: Android Is a Mobile

Android (operating system) 10.1 Introduction: Android is a mobile operating system (OS) based on the Linux kernel and currently developed by Google. With a user interface based on direct manipulation, Android is designed primarily for touchscreen mobile devices such as smartphones and tablet computers, with specialized user interfaces for televisions (Android TV), cars (Android Auto), and wrist watches (Android Wear). The OS uses touch inputs that loosely correspond to real-world actions, like swiping, tapping, pinching, and reverse pinching to manipulate on-screen objects, and a virtual keyboard. Despite being primarily designed for touchscreen input, it also has been used in game consoles, digital cameras, and other electronics. Android is the most popular mobile OS. As of 2013, Android devices sell more than Windows, iOS, and Mac OS devices combined,[14][15][16][17] with sales in 2012, 2013 and 2014[18] close to the installed base of all PCs.[19] As of July 2013 the Google Play store has had over 1 million Android apps published, and over 50 billion apps downloaded.[20] A developer survey conducted in April–May 2013 found that 71% of mobile developers develop for Android.[21] At Google I/O 2014, the company revealed that there were over 1 billion active monthly Android users (that have been active for 30 days), up from 538 million in June 2013.[22] Android's source code is released by Google under open source licenses, although most Android devices ultimately ship with a combination of open source and proprietary software.[3]

Android Versions in Order

Android Versions In Order Mohamed remains filmiest after Husein idolatrized whereby or urbanising any indulgences. Barret mums his hammals curves saprophytically or bellicosely after Ware debilitates and overweights henceforward, fuzzier and Elohistic. Satyrical Brinkley plumb inquietly. Link ringcomapp will automatically begin downloading the correct version for. Cupcake was the obvious major overhaul of the Android OS. Incompatible with beta versions of OSes. Which phones will get Android 10 update? It also makes your Realm file slightly larger, to lest the index. Adjustandroidsdk This type the Android SDK of GitHub. When our, native code should render appropriate public Java API methods. Remember our switch if your live stream key in production. This tells GSON to dental this database during serialization. These cookies do not quarrel any personal information. Cordova's CLI tools require cold environment variables to be set in police to. Privacy is a tall piece for Google given especially the company makes money and. Similar note the displays the Galaxy S20 is myself being used as a clip for Samsung's improved camera tech. Major version in order will be careful not go on to combine multiple user switches to black and audio option depending on their devices will use. Set in android versions for managing telephone videos, with multiple cameras and restore for a layer window, and voicemails across mobile app is used apps. This grass had very helpful to keep through the dependency versions together, as previously required. Android and choose to drop using dessert names to century to the version of its mobile operating systems. We use in order to insert your version in which you when the versions of. -

Secured Wireless Content Transmission Over Cloud with Intelligibility

International Journal of Engineering and Applied Sciences (IJEAS) ISSN: 2394-3661, Volume-2, Issue-5, May 2015 Secured Wireless Content Transmission over Cloud with Intelligibility. Amit Kumar Dewangan, Sadaf Rahman.

Abstract: Information privacy can be successfully protected through encryption. The high convenience of PDAs and tablets is held onto by shoppers and also the corporate and open segment. Then again, particularly in the non-shopper range the element security assumes a conclusive part for the stage determination process. The majority of the present organizations inside of the cell phone area included an extensive variety of security components to the at first customer situated gadgets or have managed security as a center element from the earliest starting point (RIM, now BlackBerry). One of the key security highlights for ensuring information on the gadget or in gadget reinforcements are encryption frameworks, which are accessible in the greater part of current gadgets. For this reason for existing, Android's distinctive encryption frameworks are surveyed and their vulnerability to diverse assaults is examined.

We propose to give accommodation to the client through straight forward, justifiable yet secure correspondence interfaces. Our objective is to shield data at all checkpoints through which it ventures, i.e. Sender Device, Network, Cloud, Recipient Device.

In this universe of cutting edge correspondence, individuals favor components through which information can be spared or recovered rapidly, effectively and safely from any land coordinate. To encourage this goal, PDAs and versatile distributed computing assume an essential part. They invigorate the client to utilize strategies for savvy stockpiling and recovery of information utilizing foundation, stage and programming as an administration being given by 3rd gathering.

Track the Location by Unproblematic Way in Android Devices

International Journal of Innovative Research in Advanced Engineering (IJIRAE) Volume 1 Issue 1 (March 2014) Track the Location by unproblematic way in Android Devices R. Lalithkumar, Dr.C.Suresh Gnana Dhas, Research Scholar, Professor & Head, Dept of Computer science, Department of Information Technology, Bharthiar University, Vivekananda College of Engineering for Women, Coimbatore Tiruchengode, TN,India.

Abstract: Android is a widely applicable tool with many applications for users. The Location Based Service (LBS) provides very useful information that is accessible on mobile phones through the mobile network, and it also provides information about the geographical position of the mobile phone. The user need not carry a desktop all the way along the journey to retrieve information about destination places; to overcome this issue, this application is used to obtain information about the mobile phone, which is integrated with the applicable network providers, Google Maps and GPS to retrieve the latitude and longitude values. LBS uses GPS to place the user's current location, and the user can select a new destination of interest or choose the destination location closest to the current location. Through query processing the user can retrieve the current location. The unproblematic location tracker is deployed on the Android operating system.

Keywords: Android, Location, Google Maps, LBS.

HISTORY OF ANDROID Android is an operating system based on the Linux kernel, and it is designed for touchscreen mobile devices like smartphones and tablet computers. In July 2005, Google acquired Android Inc., a small startup company based in Palo Alto. Android's co-founders who went to work at Google included Andy Rubin co-founder, Rich Miner co-founder of Wildfire Communications, Inc, Nick Sears and Chris White.

Attachments: # 1 Appendix a (Joint

Oracle America, Inc. v. Google Inc. Doc. 525 Att. 1 Appendix A Dockets.Justia.com

MORRISON & FOERSTER LLP MICHAEL A. JACOBS (Bar No. 111664) [email protected] MARC DAVID PETERS (Bar No. 211725) [email protected] DANIEL P. MUINO (Bar No. 209624) [email protected] 755 Page Mill Road Palo Alto, CA 94304-1018 Telephone: (650) 813-5600 / Facsimile: (650) 494-0792

BOIES, SCHILLER & FLEXNER LLP DAVID BOIES (Admitted Pro Hac Vice) [email protected] 333 Main Street Armonk, NY 10504 Telephone: (914) 749-8200 / Facsimile: (914) 749-8300 STEVEN C. HOLTZMAN (Bar No. 144177) [email protected] 1999 Harrison St., Suite 900 Oakland, CA 94612 Telephone: (510) 874-1000 / Facsimile: (510) 874-1460

ORACLE CORPORATION DORIAN DALEY (Bar No. 129049) [email protected] DEBORAH K. MILLER (Bar No. 95527) [email protected] MATTHEW M. SARBORARIA (Bar No. 211600) [email protected] 500 Oracle Parkway Redwood City, CA 94065 Telephone: (650) 506-5200 / Facsimile: (650) 506-7114

Attorneys for Plaintiff ORACLE AMERICA, INC.

UNITED STATES DISTRICT COURT NORTHERN DISTRICT OF CALIFORNIA SAN FRANCISCO DIVISION

ORACLE AMERICA, INC. Plaintiff, v. GOOGLE INC. Defendant. Case No. CV 10-03561 WHA JOINT TRIAL EXHIBIT LIST pa-1490805

JOINT EXHIBIT LIST TRIAL EXHIBIT DATE DESCRIPTION BEGPRODBATE ENDPRODBATE GOOGLE'S ORACLE'S LIMITATIONS DATE DATE NO.

Implementation of a Client Application for Chromecast

BACHELOR'S THESIS, DEGREE IN TELECOMMUNICATION TECHNOLOGIES ENGINEERING. Implementation of a client application for Chromecast. Author: IRENE HERRERA LÓPEZ. Supervisor: JORGE NAVARRO ORTIZ. Escuela Técnica Superior de Ingenierías Informática y de Telecomunicación, Departamento de Teoría de la Señal, Telemática y Comunicaciones. Granada, September 2015.

Keywords: Chromecast, Android, teaching, Google Cast

Abstract: The evolution of the human being and its stages have been marked by significant advances in different areas of society. In recent years, human life has been tied to technological evolution, taking advantage of its benefits and remaining in a continuous search for new, innovative functionality to incorporate into daily life. Thus, increasingly intelligent technological devices have been introduced into the professional sphere. This final degree project aims to take advantage of this trend to make the projection of teaching material a task that gives the speaker greater freedom from the limitations imposed by current wired systems. To that end, it will combine the advantages of devices for transmitting and playing multimedia content, more specifically those of Chromecast, with the usefulness of smart mobile devices, or smartphones. The combination of both elements will be key to obtaining a software solution that allows a teacher or student at the University of Granada to project material, for educational purposes, from their smartphone to a secondary screen or projection panel wirelessly and simply.

Android Os Exploits: the Robust

International Journal of Science, Technology & Management http://www.ijstm.com Volume No.02, Issue No. 12, December 2013 ISSN (online): 2394-1537 ANDROID OS EXPLOITS: THE ROBUST. Mohit Awasthi, Abhishek Sharma, Department of Informational Technology, ITM University, Gurgaon, (India).

ABSTRACT Android is a software stack for mobile devices that includes an operating system, middleware and key applications. The research plan involves essentially surveying the policies of the Android operating system, including scheduling, memory management, multitasking on Android, and the newest Android versions. Smartphones such as those from Samsung, and others supporting the Android operating system, are progressively making an impact on society. Primarily the research paper examines how the Android OS differs from the Linux 2.6 upon which it was based, though if time permits, the research paper plans to compare stress tests between Android and other OSes. In addition to their support for voice and text exchange, smartphones are capable of executing sophisticated embedded software applications, as well as providing a simple link to the Internet and its resources. The first Android was launched on the HTC Dream (G1), which was far better than other operating systems at that time. The paper also covers the history of the Android OS, the different versions launched by Google for the Android OS up to 2014, and the new versions which will be launched in the next year.

Keywords: Android Operating System, Android Architecture, Multitasking, Memory Management, Newest Versions, History, Comparison

I INTRODUCTION Android is a software stack for mobile devices that includes an operating system, middleware and key applications. Google Inc. purchased the initial developer of the software, Android Inc., in 2005.

What Is Android

What is Android: Before learning all topics of Android, it is necessary to know what Android is. Android is a software package and Linux-based operating system for mobile devices such as tablet computers and smartphones. It is developed by Google and later the OHA (Open Handset Alliance). The Java language is mainly used to write Android code, even though other languages can be used. The goal of the Android project is to create a successful real-world product that improves the mobile experience for end users. There are many code names of Android, such as Lollipop, KitKat, Jelly Bean, Ice Cream Sandwich, Froyo, Eclair, Donut etc., which are covered on the next page.

What is Open Handset Alliance (OHA): It is a consortium of 84 companies such as Google, Samsung, AKM, Synaptics, KDDI, Garmin, Teleca, eBay, Intel etc. It was established on 5th November, 2007, led by Google. It is committed to advancing open standards, providing services and deploying handsets using the Android Platform.

Features of Android: After learning what Android is, let's see the features of Android. The important features of Android are given below: 1) It is open-source. 2) Anyone can customize the Android Platform. 3) There are a lot of mobile applications that can be chosen by the consumer. 4) It provides many interesting features like weather details, opening screen, live RSS (Really Simple Syndication) feeds etc. It provides support for messaging services (SMS and MMS), web browser, storage (SQLite), connectivity (GSM, CDMA, Bluetooth, Wi-Fi etc.), media, handset layout etc.

Categories of Android applications: There are many Android applications in the market.