Applying Test Automation to GSM Network Element - Architectural Observations and Case Study

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

GSM Network Element Modification Or Upgrade Required for GPRS. Mobile Station (MS) New Mobile Station Is Required to Access GPRS

GPRS GPRS architecture works on the same procedure like GSM network, but, has additional entities that allow packet data transmission. This data network overlaps a second- generation GSM network providing packet data transport at the rates from 9.6 to 171 kbps. Along with the packet data transport the GSM network accommodates multiple users to share the same air interface resources concurrently. Following is the GPRS Architecture diagram: GPRS attempts to reuse the existing GSM network elements as much as possible, but to effectively build a packet-based mobile cellular network, some new network elements, interfaces, and protocols for handling packet traffic are required. Therefore, GPRS requires modifications to numerous GSM network elements as summarized below: GSM Network Element Modification or Upgrade Required for GPRS. Mobile Station (MS) New Mobile Station is required to access GPRS services. These new terminals will be backward compatible with GSM for voice calls. BTS A software upgrade is required in the existing Base Transceiver Station(BTS). BSC The Base Station Controller (BSC) requires a software upgrade and the installation of new hardware called the packet control unit (PCU). The PCU directs the data traffic to the GPRS network and can be a separate hardware element associated with the BSC. GPRS Support Nodes (GSNs) The deployment of GPRS requires the installation of new core network elements called the serving GPRS support node (SGSN) and gateway GPRS support node (GGSN). Databases (HLR, VLR, etc.) All the databases involved in the network will require software upgrades to handle the new call models and functions introduced by GPRS. -

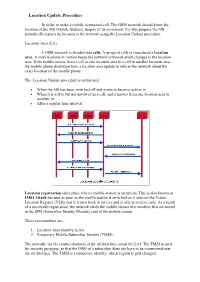

Location Update Procedure

Location Update Procedure In order to make a mobile terminated call, The GSM network should know the location of the MS (Mobile Station), despite of its movement. For this purpose the MS periodically reports its location to the network using the Location Update procedure. Location Area (LA) A GSM network is divided into cells. A group of cells is considered a location area. A mobile phone in motion keeps the network informed about changes in the location area. If the mobile moves from a cell in one location area to a cell in another location area, the mobile phone should perform a location area update to inform the network about the exact location of the mobile phone. The Location Update procedure is performed: When the MS has been switched off and wants to become active, or When it is active but not involved in a call, and it moves from one location area to another, or After a regular time interval. Location registration takes place when a mobile station is turned on. This is also known as IMSI Attach because as soon as the mobile station is switched on it informs the Visitor Location Register (VLR) that it is now back in service and is able to receive calls. As a result of a successful registration, the network sends the mobile station two numbers that are stored in the SIM (Subscriber Identity Module) card of the mobile station. These two numbers are:- 1. Location Area Identity (LAI) 2. Temporary Mobile Subscriber Identity (TMSI). The network, via the control channels of the air interface, sends the LAI. -

Modeling the Use of an Airborne Platform for Cellular Communications Following Disruptions

Dissertations and Theses 9-2017 Modeling the Use of an Airborne Platform for Cellular Communications Following Disruptions Stephen John Curran Follow this and additional works at: https://commons.erau.edu/edt Part of the Aviation Commons, and the Communication Commons Scholarly Commons Citation Curran, Stephen John, "Modeling the Use of an Airborne Platform for Cellular Communications Following Disruptions" (2017). Dissertations and Theses. 353. https://commons.erau.edu/edt/353 This Dissertation - Open Access is brought to you for free and open access by Scholarly Commons. It has been accepted for inclusion in Dissertations and Theses by an authorized administrator of Scholarly Commons. For more information, please contact [email protected]. MODELING THE USE OF AN AIRBORNE PLATFORM FOR CELLULAR COMMUNICATIONS FOLLOWING DISRUPTIONS By Stephen John Curran A Dissertation Submitted to the College of Aviation in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Aviation Embry-Riddle Aeronautical University Daytona Beach, Florida September 2017 © 2017 Stephen John Curran All Rights Reserved. ii ABSTRACT Researcher: Stephen John Curran Title: MODELING THE USE OF AN AIRBORNE PLATFORM FOR CELLULAR COMMUNICATIONS FOLLOWING DISRUPTIONS Institution: Embry-Riddle Aeronautical University Degree: Doctor of Philosophy in Aviation Year: 2017 In the wake of a disaster, infrastructure can be severely damaged, hampering telecommunications. An Airborne Communications Network (ACN) allows for rapid and accurate information exchange that is essential for the disaster response period. Access to information for survivors is the start of returning to self-sufficiency, regaining dignity, and maintaining hope. Real-world testing has proven that such a system can be built, leading to possible future expansion of features and functionality of an emergency communications system. -

Wireless Networks

SUBJECT WIRELESS NETWORKS SESSION 3 Getting to Know Wireless Networks and Technology SESSION 3 Case study Getting to Know Wireless Networks and Technology By Lachu Aravamudhan, Stefano Faccin, Risto Mononen, Basavaraj Patil, Yousuf Saifullah, Sarvesh Sharma, Srinivas Sreemanthula Jul 4, 2003 Get a brief introduction to wireless networks and technology. You will see where this technology has been, where it is now, and where it is expected to go in the future. Wireless networks have been an essential part of communication in the last century. Early adopters of wireless technology primarily have been the military, emergency services, and law enforcement organizations. Scenes from World War II movies, for example, show soldiers equipped with wireless communication equipment being carried in backpacks and vehicles. As society moves toward information centricity, the need to have information accessible at any time and anywhere (as well as being reachable anywhere) takes on a new dimension. With the rapid growth of mobile telephony and networks, the vision of a mobile information society (introduced by Nokia) is slowly becoming a reality. It is common to see people communicating via their mobile phones and devices. The era of the pay phones is past, and pay phones stand witness as a symbol of the way things were. With today's networks and coverage, it is possible for a user to have connectivity almost anywhere. Growth in commercial wireless networks occurred primarily in the late 1980s and 1990s, and continues into the 2000s. The competitive nature of the wireless industry and the mass acceptance of wireless devices have caused costs associated with terminals and air time to come down significantly in the last 10 years. -

Cellular Technology.Pdf

Cellular Technologies Mobile Device Investigations Program Technical Operations Division - DFB DHS - FLETC Basic Network Design Frequency Reuse and Planning 1. Cellular Technology enables mobile communication because they use of a complex two-way radio system between the mobile unit and the wireless network. 2. It uses radio frequencies (radio channels) over and over again throughout a market with minimal interference, to serve a large number of simultaneous conversations. 3. This concept is the central tenet to cellular design and is called frequency reuse. Basic Network Design Frequency Reuse and Planning 1. Repeatedly reusing radio frequencies over a geographical area. 2. Most frequency reuse plans are produced in groups of seven cells. Basic Network Design Note: Common frequencies are never contiguous 7 7 The U.S. Border Patrol uses a similar scheme with Mobile Radio Frequencies along the Southern border. By alternating frequencies between sectors, all USBP offices can communicate on just two frequencies Basic Network Design Frequency Reuse and Planning 1. There are numerous seven cell frequency reuse groups in each cellular carriers Metropolitan Statistical Area (MSA) or Rural Service Areas (RSA). 2. Higher traffic cells will receive more radio channels according to customer usage or subscriber density. Basic Network Design Frequency Reuse and Planning A frequency reuse plan is defined as how radio frequency (RF) engineers subdivide and assign the FCC allocated radio spectrum throughout the carriers market. Basic Network Design How Frequency Reuse Systems Work In concept frequency reuse maximizes coverage area and simultaneous conversation handling Cellular communication is made possible by the transmission of RF. This is achieved by the use of a powerful antenna broadcasting the signals. -

GSM Network and Services

GSM Network and Services Nodes and protocols - or a lot of three letter acronyms 1 GSM Network and Services 2G1723 Johan Montelius PSTN A GSM network - public switched telephony network MSC -mobile switching center HLR MS BSC VLR - mobile station - base station controller BTS AUC - base transceiver station PLMN - public land mobile network 2 GSM Network and Services 2G1723 Johan Montelius The mobile station • Mobile station (MS) consist of – Mobile Equipment – Subscriber Identity Module (SIM) • The operator owns the SIM – Subscriber identity – Secret keys for encryption – Allowed networks – User information – Operator specific applications 3 GSM Network and Services 2G1723 Johan Montelius Mobile station addresses - IMSI • IMSI - International Mobile Subscriber Identity – 240071234567890 • Mobile Country Code (MCC), 3 digits ex 240 • Mobile Network Code (MNC), 2 digits ex 07 • Mobile Subscriber Id Number (MSIN), up to 10 digits – Identifies the SIM card 4 GSM Network and Services 2G1723 Johan Montelius Mobile station addresses - IMEI • IMEI – International Mobile Equipment Identity – fifteen digits written on the back of your mobile – has changes format in phase 2 and 2+ – Type Allocation (8), Serial number (6), Check (1) – Used for stolen/malfunctioning terminals 5 GSM Network and Services 2G1723 Johan Montelius Address of a user - MSISDN • Mobile Subscriber ISDN Number - E.164 – Integrated Services Digital Networks, the services of digital telephony networks – this is your phone number • Structure – Country Code (CC), 1 – 3 digits (46 for Sweden) – National Destination Code (NDC), 2-3 digits (709 for Vodafone) – Subscriber Number (SN), max 10 digits (757812 for me) • Mobile networks thus distinguish the address to you from the address to the station. -

Tr 143 901 V13.0.0 (2016-01)

ETSI TR 1143 901 V13.0.0 (201616-01) TECHNICAL REPORT Digital cellular telecocommunications system (Phahase 2+); Feasibility Study on generic access to A/Gb intinterface (3GPP TR 43.9.901 version 13.0.0 Release 13) R GLOBAL SYSTETEM FOR MOBILE COMMUNUNICATIONS 3GPP TR 43.901 version 13.0.0 Release 13 1 ETSI TR 143 901 V13.0.0 (2016-01) Reference RTR/TSGG-0143901vd00 Keywords GSM ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice The present document can be downloaded from: http://www.etsi.org/standards-search The present document may be made available in electronic versions and/or in print. The content of any electronic and/or print versions of the present document shall not be modified without the prior written authorization of ETSI. In case of any existing or perceived difference in contents between such versions and/or in print, the only prevailing document is the print of the Portable Document Format (PDF) version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: https://portal.etsi.org/People/CommiteeSupportStaff.aspx Copyright Notification No part may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm except as authorized by written permission of ETSI. -

![Lect12-GSM-Network-Elements [Compatibility Mode]](https://docslib.b-cdn.net/cover/3183/lect12-gsm-network-elements-compatibility-mode-1923183.webp)

Lect12-GSM-Network-Elements [Compatibility Mode]

GSM Network Elements and Interfaces Functional Basics GSM System Architecture The mobile radiotelephone system includes the following subsystems • Base Station Subsystem (BSS) • Network and Switching Subsystem (NSS) • Operations and Maintenance Subsystem (OSS) GSM Network Structure : Concept PLMN Terrestrial Public Land Mobile Network public mobile communications network network Mobile Um terminal device Air Interface PSTN BSS Public Switched Base Station Telephone Network Subsystem radio access NSS BSS Network Switching ISDN Base Station Subsystem Subsystem Integrated Services MS radio access Control/switching of Digital Network Mobile mobile services Station BSS Base Station Subsystem PDN radio access Public Data Network Mobile network Fixed network components components GSM-PLMN: Subsystems PLMN Terrestrial Public Land Mobile Network RSS Network Radio SubSystem PSTN Public Switched Telephone Network ISDN Integrated Services MS BSS NSS Digital Network Mobile Base Station Network Switching Station Subsystem Subsystem PDN Public Data Network OSS Operation SubSystem Function Units in GSM---PLMN-PLMN Radio Network Switching SubSystem Subsystem RSS = NSS Other networks Mobile Base Station Station +++ Subsystem MSMSMS BSSBSSBSS ACACAC EIREIREIR BTS HLR VLR TTT PSTN RRR BSCBSCBSC AAA MSC ISDN UUU Data OMCOMC----RRRR OMCOMC----SSSS MS = netnetnet-net --- ME + SIM works Operation SubSystem OSS Functional Units in GSM---PLMN-PLMN Phase 2+ RSS NSS Other networks MS +++ BSS ACACAC EIREIREIR BTS HLR/GR VLR TTT PSTN RRR BSCBSCBSC AAA MSC ISDN UUU CSE Data networks SGSN GGSN Inter/ intranet OMCOMC----BBBB OMCOMC----SSSS OSS Base station subsystem (BSS) The base station subsystem includes Base Transceiver Stations (BTS) that provides the radio link with MSs. • BTSs are controlled by a Base Station Controller (BSC),which also controls the Trans-Coder-Units (TCU). -

Wireless and Mobile Networks

Wireless and Mobile Networks Raj Jain Washington University in Saint Louis Saint Louis, MO 63130 [email protected] Audio/Video recordings of this lecture are available on-line at: http://www.cse.wustl.edu/~jain/cse473-16/ Washington University in St. Louis http://www.cse.wustl.edu/~jain/cse473-16/ ©2016 Raj Jain 7-1 Overview 1. Wireless Link Characteristics 2. Wireless LANs and PANs 3. Cellular Networks 4. Mobility Management 5. Impact on Higher Layers Note: This class lecture is based on Chapter 7 of the textbook (Kurose and Ross) and the figures provided by the authors. Washington University in St. Louis http://www.cse.wustl.edu/~jain/cse473-16/ ©2016 Raj Jain 7-2 Wireless Link Overview Characteristics q Mobile vs. Wireless q Wireless Networking Challenges q Peer-to-Peer or Base Stations? q Code Division Multiple Access (CDMA) q Direct-Sequence Spread Spectrum q Frequency Hopping Spread Spectrum Washington University in St. Louis http://www.cse.wustl.edu/~jain/cse473-16/ ©2016 Raj Jain 7-3 Mobile vs Wireless Mobile Wireless q Mobile vs Stationary q Wireless vs Wired q Wireless ⇒ media sharing issues q Mobile ⇒ routing, addressing issues Washington University in St. Louis http://www.cse.wustl.edu/~jain/cse473-16/ ©2016 Raj Jain 7-4 Wireless Networking Challenges 1. Propagation Issues: Shadows, Multipath 2. Interference ⇒ High loss rate, Variable Channel ⇒ Retransmissions and Cross-layer optimizations 3. Transmitters and receivers moving at high speed ⇒ Doppler Shift 4. Low power transmission ⇒ Limited reach 100mW in WiFi base station vs. 100 kW TV tower 5. License-Exempt spectrum ⇒ Media Access Control 6. -

Sysmocom - S.F.M.C

OsmoBSC User Manual i sysmocom - s.f.m.c. GmbH OsmoBSC User Manual by Holger Freyther, Harald Welte, and Neels Hofmeyr Copyright © 2012-2018 sysmocomDRAFT - s.f.m.c. GmbH Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with the Invariant Sections being just ’Foreword’, ’Acknowledgements’ and ’Preface’, with no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". The Asciidoc source code of this manual can be found at http://git.osmocom.org/osmo-gsm-manuals/ DRAFT 1.7.0-269-g37288, 2021-Sep-27 OsmoBSC User Manual ii HISTORY NUMBER DATE DESCRIPTION NAME 1 February 2016 Initial OsmoBSC manual, recycling OsmoNITB HW sections 2 October 2018 Add Handover chapter: document new neighbor NH configuration, HO algorithm 2 and inter-BSC handover. Copyright © 2012-2018 sysmocom - s.f.m.c. GmbH DRAFT 1.7.0-269-g37288, 2021-Sep-27 OsmoBSC User Manual iii Contents 1 Foreword 1 1.1 Acknowledgements..................................................1 1.2 Endorsements.....................................................2 2 Preface 2 2.1 FOSS lives by contribution!..............................................2 2.2 Osmocom and sysmocom...............................................2 2.3 Corrections......................................................3 2.4 Legal disclaimers...................................................3 -

End-To-End Mobile Communication Security Testbed Using Open Source Applications in Virtual Environment

End-to-End Mobile Communication Security Testbed Using Open Source Applications in Virtual Environment K. Jijo George1, A. Sivabalan1, T. Prabhu1 and Anand R. Prasad2 1NEC India Pvt. Ltd. Chennai, India 2NEC Corporation. Tokyo, Japan E-mail: {k.george; sivabalan.arumugam; prabhu.t}@necindia.in; [email protected] Received 20 January 2015; Accepted 01 May 2015 Abstract In this paper we present an end-to-end mobile communication testbed that utilizes various open source projects. The testbed consists of Global System for Mobiles (GSM), General Packet Radio Service (GPRS) and SystemArchi- tecture Evolution/Long Term Evolution(SAE/LTE) elements implemented on a virtual platform. Our goal is to utilize the testbed to perform security analysis. We used virtualization to get flexibility and scalability in implementation. So as to prove the usability of the testbed, we reported some of the test results in this paper. These tests are mainly related to security. The test results prove that the testbed functions properly. Keywords: LTE, Amarisoft, Testbed, Security, OpenBTS, OpenBSC, OsmoSGSN, OpenGGSN, OpenIMS. 1 Introduction Mobile network complexity has increased with time due to the coexistence of multiple technologies like GSM, GPRS, Universal Mobile Telecommunica- tions System (UMTS) and SAE/LTE. Telecom service providers are trying to accommodate the existing customer service (GSM, i.e. voice only) and also Journal of ICT, Vol. 3, 67–90. doi: 10.13052/jicts2245-800X.314 c 2015 River Publishers. All rights reserved. 68 K. J. George et al. trying to provide cutting edge services such as Live TV, video conference etc. without discontinuing the old services. -

Mobility Testbed Development (Openbts Testbed) and Its Integration with Voiit, Webrtc & NG-911 Testbeds 90/100

Mobility Testbed Development (OpenBTS Testbed) and its Integration with VoIIT, WebRTC & NG-911 Testbeds 90/100 Sushma Sitaram A20137272 May 09, 2014 1 Table of Contents 1 Abstract ....................................................................................................................... 2 2 Introduction ................................................................................................................. 3 3 Goals Of The Project .................................................................................................. 3 4 Milestones Of The Project .......................................................................................... 4 5 Infrastructure Needed.................................................................................................. 4 6 GSM Architecture ....................................................................................................... 5 7 Openbts Application Suite .......................................................................................... 6 8 Logical Diagram ......................................................................................................... 7 9 Physical Diagram ........................................................................................................ 8 10 Execution Of The Project............................................................................................ 9 10.1 Testbed Setup ...................................................................................................... 9 10.2 Initial Testing