Evaluation, Assessment, and Testing Current Issues in Comparative

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


2014 High School National Tournament Results

2014 NATIONAL SPEECH & DEBATE TOURNAMENT The Heartland Pride Nationals Hosted by East Kansas and Three Trails Districts June 15-20, 2014 These unofficial un-audited electronic results have been provided as a service of the National Speech and Debate Association. Bruno E. Jacob / Pi Kappa Delta National Award in Speech National Speech & Debate Tournament 1 1554 Nova HS, FL 2 1548 Plano Sr. HS, TX 3 1537 West HS – Iowa City, IA 4 1536 Parkview HS, MO 5 1534 Leland HS, CA 6 1523 Cherry Creek HS, CO 7 1508 Albuquerque Academy, NM 8 1438 Dowling Catholic HS, IA 9 1427 Regis HS, NY 10 1422 Bellarmine College Prep, CA These unofficial un-audited electronic results have been provided as a service of the National Speech & Debate Association. National Speech & Debate Association Schools of Outstanding Distinction Bellarmine College Prep, CA Cypress Bay HS, FL Desert Vista HS, AZ Eagan HS, MN James Logan HS, CA Miramonte HS, CA Munster HS, IN Newton South HS, MA Nova HS, FL Plano West Sr. HS, TX Speech Schools of Excellence Speech Schools of Honor Apple Valley HS, MN Beavercreek HS, OH Blue Springs HS, MO Bishop McGuinness HS, OK Brophy College Prep, AZ Brentwood Academy, TN Denver East HS, CO Buffalo Grove HS, IL Fullerton Joint Union HS, CA Chanhassen HS, MN Gabrielino HS, CA Clovis East HS, CA Grapevine HS, TX Dougherty Valley HS, CA Hattiesbiurg HS, MS Hastings HS, TX Hinsdale Central HS, IL James Madison Memorial HS, WI Jackson HS, OH Kent Denver School, CO Leland HS, CA Larue County HS, KY Monte Vista HS – Danville, CA Liberty Sr. -

OACAC Regional Institute, Shanghai August 17-18, 2015 -- Attendee List

OACAC Regional Institute, Shanghai August 17-18, 2015 -- Attendee list (as of Aug 10) First Name Last Name Institution Institution Location Sherrie Huan The University of Sydney Australia Rhett Miller University of Sydney Australia Alexander Bari MODUL University Vienna Austria Sven Clarke The University of British Columbia Canada Leanne Stillman University of Guelph Canada David Zutautas University of Toronto Canada Matthew Abbate Dulwich College Shanghai China Katherine Arnold Shanghai Qibao Dwight High School China Liheng Bai Shenzhen Cuiyuan Middle School China Michelle Barini Shanghai American School-Pudong China David Barrutia Beijing No. 4 High School China John Beck Due West Education Consulting Company Limited China Christina Chandler EducationUSA China Barbara Chen Johns Hopkins Center for Talented Youth China Dongsong Chen Zhengzhou Middle School China Jane Chen Tsinghua University High School China Marilyn Cheng Bridge International Education China Jennifer Cheong Suzhou Singapore International School China Chorku Cheung Yew Chung International School China Jeffrey Cho Shenzhen Middle School China Gloria Chyou InitialView China Alice Cokeng Shenyang No.2 Sino-Canadian High School China Valery Cooper YK Pao School China Ted Corbould Shanghai United International School China Terry Crawford InitialView China Sabrina Dubik Kinglee High School China Kelly Flanagan Yew Chung International School Shanghai Century Park China Candace Gadomski Kinglee High School China Lucien Giordano Dulwich College Suzhou China Hamilton Gregg HGIEC -

Meet Michael Michael Giambelluca Is Prep's New President

CREIGHTON PREP ALUMNI NEWS MEET MICHAEL MICHAEL GIAMBELLUCA IS PREP'S NEW PRESIDENT ATHLETIC RECORD ENROLLMENT ALUMNI IN THE SUMMER 2013 10 FOR 2013 12 HALL OF FAME 24 WORKPLACE PRESIDENT’S MESSAGE Greetings I feel blessed and energized to be writing you as I begin my tenure as the 32nd president of Creighton Prep, a school whose formative mission, exceptional quality, loyal following and supportive atmosphere remind me so much of Jesuit High School in New Orleans, where I recently completed my 12th year as principal. Both school communities are so very blessed in so many ways. As I begin my work at Prep, I want to thank the Creighton Prep Governing Board, the Search Committee, my predecessor Father Andy Alexander, S.J. ’66, Tim McIntire at Carney Sandoe & Associates and all the Prep constituents who took the time to be part of the very thorough process that helped select and transition me to my role as president, a position from which I will be honored and humbled to serve a community that means so much to so many people, a community that has already been so welcoming to my wife Donnamaria and me. On page four of this Alumni News, you can find out more about the selection process and my background as well as some of my reflections on education and my priorities going forward. As mentioned there, I will be focused on advancing the mission of Prep for and through all members of our school community. As I begin my tenure, I will be doing a great deal of listening and learning about this wonderful institution, and this process will result in us both celebrating what we are doing well and improving upon what we might be able to do better. -

2021 Finalist Directory

2021 Finalist Directory April 29, 2021 ANIMAL SCIENCES ANIM001 Shrimply Clean: Effects of Mussels and Prawn on Water Quality https://projectboard.world/isef/project/51706 Trinity Skaggs, 11th; Wildwood High School, Wildwood, FL ANIM003 Investigation on High Twinning Rates in Cattle Using Sanger Sequencing https://projectboard.world/isef/project/51833 Lilly Figueroa, 10th; Mancos High School, Mancos, CO ANIM004 Utilization of Mechanically Simulated Kangaroo Care as a Novel Homeostatic Method to Treat Mice Carrying a Remutation of the Ppp1r13l Gene as a Model for Humans with Cardiomyopathy https://projectboard.world/isef/project/51789 Nathan Foo, 12th; West Shore Junior/Senior High School, Melbourne, FL ANIM005T Behavior Study and Development of Artificial Nest for Nurturing Assassin Bugs (Sycanus indagator Stal.) Beneficial in Biological Pest Control https://projectboard.world/isef/project/51803 Nonthaporn Srikha, 10th; Natthida Benjapiyaporn, 11th; Pattarapoom Tubtim, 12th; The Demonstration School of Khon Kaen University (Modindaeng), Muang Khonkaen, Khonkaen, Thailand ANIM006 The Survival of the Fairy: An In-Depth Survey into the Behavior and Life Cycle of the Sand Fairy Cicada, Year 3 https://projectboard.world/isef/project/51630 Antonio Rajaratnam, 12th; Redeemer Baptist School, North Parramatta, NSW, Australia ANIM007 Novel Geotaxic Data Show Botanical Therapeutics Slow Parkinson’s Disease in A53T and ParkinKO Models https://projectboard.world/isef/project/51887 Kristi Biswas, 10th; Paxon School for Advanced Studies, Jacksonville, -

2020 Issue 2

THE VIGIL ISSUE 2 2020 TABLE Maps—3 CAMPUS OF RoMUNnce in the air—4 MAP CONTENTS The Importance of MUN—5 Daily Reports—6 january eighteenth, CISSMUN in Action—8 twenty-twenty Power Over the People—10 YYou, Yes You, Can Get Into College—11 CISSMUN in Action—12 Neither Nor—14 PUDONG STAFF MAP Editors-in-Chief Head Photographer Photographers Evelyn Shi Claire Chen Ethan Koh Jenny Fu Taha Noon Emilie Zhang Layout Staff Ziv Liu Ethan Chin Nika Sedighi Layout Editor Daniel Wan Lena He Staff Writers Anna Jin Steven Yeh Kelly Kim Editor Yin Yin Htwe Sulynn Yeh Hayley Szymanek Joey Lin Thiri Yadanar San Henie Zhang Isabella Zumbolo Tommy Song Assistant Editor Alan Deutsch page 2 page 3 roMUNce in the air CISSMUN To: _________________________ From: _________________________ CISSMUN To: _________________________ From: _________________________ CISSMUN To: _________________________ From: _________________________ CISSMUN CISSMUN To: _________________________ To: _________________________ From: _________________________ From: _________________________ CISSMUN To: _________________________ From: _________________________ by Hayley Szymanek and Evelyn Shi page 4 The Importance of MUN By: Hayley Szymanek Despite this being an MUN conference, not many people seem to have an overly positive opinion of the UN. Going around the conference and asking how people felt about the UN, most dele- gates stammered or gave half-hearted comments about the idea being better than the execution. CISSMUN attendee Alan Deutsch sighed and muttered, “obligatory.” If we, the people who choose to participate in a UN simulation, don’t even have a favorable view of the UN, then what’s the point? What’s the point of MUN if we can’t even agree that the UN is important? The value of MUN is not in the Model of the United Nations. -

Things You Should Know As an Expat in Shanghai

THINGS YOU SHOULD KNOW AS AN EXPAT IN SHANGHAI PARTNERS IN MANAGING1 YOUR WEALTH CONTENTS WELCOME TO SHANGHAI 3 ABOUT ST. JAMES’S PLACE WEALTH MANAGEMENT 4 DIFFERENT TYPES OF VISA IN CHINA 5 EXPATRIATE DEMOGRAPHICS 6 USEFUL APPS TO DOWNLOAD 7 CHINESE SIM CARD PACKAGES 8 GETTING AROUND 9 FINDING AN APARTMENT 11 BANKING 13 CLIMATE 16 AIR AND WATER QUALITY 17 COST OF LIVING 18 INTERNATIONAL SCHOOLS 20 HEALTHCARE 21 INTERNATIONAL HOSPITALS AND CLINICS 22 OTHER USEFUL INFORMATION 23 THINGS TO SEE AND DO 25 2 WELCOME TO SHANGHAI OLIVER WICKHAM Head of Business – China St. James’s Place Wealth Management Shanghai has been attracting expats for more than 150 years. Foreign workers have settled in the city because it offers the chance to capitalise on China’s thriving economy – but without moving completely beyond the comforts of home. It’s exotic but not starkly unfamiliar, and while it maintains the flavour of its early roots, the transport, buildings and lifestyle are all very modern. At St. James’s Place Wealth Management, we appreciate that relocating to a new country can be daunting. This guide draws upon the experiences of many of our Shanghai Team who themselves have experienced this move first hand. Given our strong links within the international business community and years of experience serving the expatriate population in Shanghai, we think we are in a good position to help your transition into this great city. One of the things that makes Shanghai so popular with foreign workers is the ease with which comforts from home can be found. -

UNIVERSITY of CALIFORNIA, IRVINE Shanghai's Wandering

UNIVERSITY OF CALIFORNIA, IRVINE Shanghai’s Wandering Ones: Child Welfare in a Global City, 1900–1953 DISSERTATION submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in History by Maura Elizabeth Cunningham Dissertation Committee: Professor Jeffrey N. Wasserstrom, Chair Professor Kenneth L. Pomeranz Professor Anne Walthall 2014 © 2014 Maura Elizabeth Cunningham DEDICATION To the Thompson women— Mom-mom, Aunt Marge, Aunt Gin, and Mom— for their grace, humor, courage, and love. ii TABLE OF CONTENTS Page LIST OF FIGURES iv LIST OF TABLES AND GRAPHS v ACKNOWLEDGEMENTS vi CURRICULUM VITAE x ABSTRACT OF THE DISSERTATION xvii INTRODUCTION: The Century of the Child 3 PART I: “Save the Children!”: 1900–1937 22 CHAPTER 1: Child Welfare and International Shanghai in Late Qing China 23 CHAPTER 2: Child Welfare and Chinese Nationalism 64 PART II: Crisis and Recovery: 1937–1953 103 CHAPTER 3: Child Welfare and the Second Sino-Japanese War 104 CHAPTER 4: Child Welfare in Civil War Shanghai 137 CHAPTER 5: Child Welfare in Early PRC Shanghai 169 EPILOGUE: Child Welfare in the 21st Century 206 BIBLIOGRAPHY 213 iii LIST OF FIGURES Page Frontispiece: Two sides of childhood in Shanghai 2 Figure 1.1 Tushanwan display, Panama-Pacific International Exposition 25 Figure 1.2 Entrance gate, Tushanwan Orphanage Museum 26 Figure 1.3 Door of Hope Children’s Home in Jiangwan, Shanghai 61 Figure 2.1 Street library, Shanghai 85 Figure 2.2 Ah Xi, Zhou Ma, and the little beggar 91 Figure 3.1 “Motherless Chinese Baby” 105 Figure -

International Schools Guide

Britannica International School INTERNATIONAL Shanghai 上海不列颠英国学校 SCHOOLS "To provide quality teaching & learning experiences for students & staff, focusing on excellence & internationalism, so all may individually & collectively achieve their full potential." Date founded 2013 New for 2015 Affliations with: Duke of Edinburgh International Award, British Gymnastics Association, ASA Swimming Programme Grade level Pre-Nursery to Year 11 Curriculum English National Curriculum, IGCSE and A-Level Languages taught English including EAL programme, Spanish, French, Korean, Mandarin, Japanese 09 School strengths Personalised education, Co-curricular activities, Location & facilities, World languages programme Tuition ¥118,660 – 210,000 year Description With an emphasis on high academic standards, strong pastoral care & a broad, balanced curriculum, Britannica offers the best of British independent school education. Our dynamic & caring staff are experienced in delivering the English National Curriculum. We embrace & celebrate a multicultural population, providing a personalised programme to meet the needs of all students. Small classes & an inclusive policy ensure pupils are fully supported across the curriculum. www.britannicashanghai.com Email: [email protected] Telephone: 6402 7889 Address: 1988 Gubei Lu, Gubei, Changning District Learn about international school options, from preschool to high school The British International School The British International Shanghai (BISS) Pudong School Shanghai (BISS) Puxi 上海英国学校 上海英国学校 "Our -

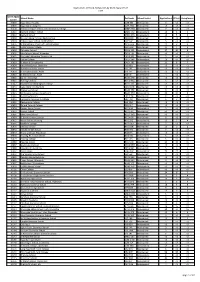

2017 Admissions Cycle

Applications, Offers & Acceptances by UCAS Apply Centre 2017 UCAS Apply School Name Postcode School Sector Applications Offers Acceptances Centre 10002 Ysgol David Hughes LL59 5SS Maintained 4 <3 <3 10006 Ysgol Gyfun Llangefni LL77 7NG Maintained <3 <3 <3 10008 Redborne Upper School and Community College MK45 2NU Maintained 7 <3 <3 10011 Bedford Modern School MK41 7NT Independent 13 6 5 10012 Bedford School MK40 2TU Independent 19 5 5 10018 Stratton Upper School, Bedfordshire SG18 8JB Maintained 4 <3 <3 10024 Cedars Upper School, Bedfordshire LU7 2AE Maintained 5 <3 <3 10026 St Marylebone Church of England School W1U 5BA Maintained 11 3 3 10027 Luton VI Form College LU2 7EW Maintained 17 4 3 10029 Abingdon School OX14 1DE Independent 27 10 8 10030 John Mason School, Abingdon OX14 1JB Maintained <3 <3 <3 10031 Our Lady's Abingdon Trustees Ltd OX14 3PS Independent 5 <3 <3 10032 Radley College OX14 2HR Independent 10 <3 <3 10033 St Helen & St Katharine OX14 1BE Independent 21 8 7 10034 Heathfield School, Berkshire SL5 8BQ Independent <3 <3 <3 10036 The Marist Senior School SL5 7PS Independent <3 <3 <3 10038 St Georges School, Ascot SL5 7DZ Independent <3 <3 <3 10039 St Marys School, Ascot SL5 9JF Independent 4 <3 <3 10040 Garth Hill College RG42 2AD Maintained <3 <3 <3 10041 Ranelagh School RG12 9DA Maintained 3 <3 <3 10042 Bracknell and Wokingham College RG12 1DJ Maintained <3 <3 <3 10043 Ysgol Gyfun Bro Myrddin SA32 8DN Maintained <3 <3 <3 10044 Edgbarrow School RG45 7HZ Maintained 3 <3 <3 10045 Wellington College, Crowthorne RG45 7PU -

2011 Candidates for the Presidential Scholars Program

Candidates for the Presidential Scholars Program January 2011 [*] An asterisk indicates a Candidate for Presidential Scholar in the Arts. Candidates are grouped by their legal place of residence; the state abbreviation listed, if different, may indicate where the candidate attends school. Alabama AL - Auburn - Youssef Biaz, Auburn High School AL - Auburn - Lena G. Liu, Auburn High School AL - Birmingham - Aaron K. Banks, Homewood High School AL - Birmingham - Connor C. Cocke, Spain Park High School AL - Birmingham - Haley K. Delk, Mountain Brook High School AL - Birmingham - Catherine B. Hughes, Mountain Brook High School AL - Birmingham - Samuel C. Johnson, Hoover High School AL - Birmingham - Laura A. Krannich, Vestavia Hills High School AL - Birmingham - Jeffrey Y. Liu, Indian Springs School AL - Birmingham - Lawrence Y. Liu, Indian Springs School AL - Birmingham - Sarah McClees, Mountain Brook High School AL - Birmingham - Valentina J. Raman, Vestavia Hills High School AL - Birmingham - Hannah M. Robinson, Homewood High School AL - Birmingham - Owen M. Scott, Vestavia Hills High School AL - Birmingham - Charles W. Sides, Jefferson County International Baccalaureate School AL - Birmingham - Michael R. Stanton, Vestavia Hills High School AL - Cullman - Natalie P. Johnson, Cullman High School AL - Daphne - John S. Clark, Bayside Academy AL - Decatur - Catherine Y. Keller, Randolph School AL - Dothan - Jared A. Watkins, Houston Academy AL - Hazel Green - Christina M. Pickering, Hazel Green High School AL - Homewood - Elizabeth M. Gauntt, Homewood High School AL - Hoover - Bryan D. Anderson, Hoover High School AL - Hoover - [ * ] Kyra F. Wharton, Hoover High School AL - Huntsville - Teja Alapati, Randolph School AL - Huntsville - Kevin J. Byrne, Randolph School AL - Huntsville - Clinton B. Caudle, Randolph School AL - Huntsville - Elizabeth H. -

Strong Performers and Successful Reformers in Education

Strong Performers and Successful Reformers in Education: Lessons from PISA for the United States This work is published on the responsibility of the Secretary-General of the OECD. The opinions expressed and arguments employed herein do not necessarily reflect the official views of the Organisation or of the governments of its member countries. Please cite this publication as: OECD (2010), Strong Performers and Successful Reformers in Education: Lessons from PISA for the United States http://dx.doi.org/10.1787/9789264096660-en ISBN 978-92-64-09665-3 (print) ISBN 978-92-64-09666-0 (PDF) Photo credits: Fotolia.com © Ainoa Getty Images © John Foxx Corrigenda to OECD publications may be found on line at: www.oecd.org/publishing/corrigenda. © OECD 2010 You can copy, download or print OECD content for your own use, and you can include excerpts from OECD publications, databases and multimedia products in your own documents, presentations, blogs, websites and teaching materials, provided that suitable acknowledgment of OECD as source and copyright owner is given. All requests for public or commercial use and translation rights should be submitted to [email protected]. Requests for permission to photocopy portions of this material for public or commercial use shall be addressed directly to the Copyright Clearance Center (CCC) at [email protected] or the Centre français d’exploitation du droit de copie (CFC) at [email protected]. Foreword United States President Barack Obama has launched one of the world’s most ambitious education reform agendas.
Entitled “Race to the Top”, the agenda encourages US states to adopt internationally benchmarked standards and assessments as a framework within which they can prepare students for success in college and the workplace; recruit, develop, reward, and retain effective teachers and principals; build data systems that measure student success and inform teachers and principals how they can improve their practices; and turn around their lowest-performing schools. -

Bachelor Thesis

CHINESE TARGET MARKET RESEARCH FOR VIRTUAL REALITY TECHNOLOGY IN EDUCATION FIELD Jiayi Li-Haapaniemi Bachelor's thesis Autumn 2010 International Business Oulu University of Applied Sciences ABSTRACT Oulu University of Applied Sciences Degree programme in International Business Author: Jiayi Li-Haapaniemi Title of Bachelor's thesis: Chinese Target Market Research for Virtual Reality Technology in Education Field Supervisor(s): Helena Ahola Term and year of completion: Autumn 2010 Number of pages: 68 + 11 ABSTRACT This thesis studies three Chinese target markets for virtual learning environment technology. The emphasis is to contribute the valuable market information to Commissioner Applebones Ltd. The research releaser problems are: 1) What is the situation of the Chinese Educational Market? a) What is the customer behaviour of the target markets? b) What are the basic policies and regulations in the relevant business field? 2) Who are the possible business contacts or what is the focus group? A qualitative method was chosen for this research and the data was gathered by desktop research and in-depth interviews. The scope of this research was defined as junior high schools and the criteria were defined by the commissioner as technological-oriented, foreign language oriented, experimental type of schools. The basic theoretical framework for this study was obtained from pertinent literature and complemented by updated information gained from online source. The findings and results of this study are of concern to the general introduction of the Chinese education market. This also includes the relevant regulation and policies, customer behaviour in terms of school principals' role in decision making. 
The lists of the schools that are fulfilling the given criteria in Shanghai and Hangzhou region and the current information communication technology (ICT) situation and development of Hong Kong Fung Kai Innovative School are presented, as well as the possibility of pilot testing for Virtual learning environment technology.