Memory, I/O and Multiprocessing Considerations on ABAQUS and MATLAB

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


A Comprehensive Review for Central Processing Unit Scheduling Algorithm

IJCSI International Journal of Computer Science Issues, Vol. 10, Issue 1, No 2, January 2013. ISSN (Print): 1694-0784 | ISSN (Online): 1694-0814. www.IJCSI.org 353

A Comprehensive Review for Central Processing Unit Scheduling Algorithm. Ryan Richard Guadaña, Maria Rona Perez and Larry Rutaquio Jr., Computer Studies and System Department, University of the East, Caloocan City, 1400, Philippines.

Abstract: This paper describes how the CPU handles tasks given by a user through a scheduling algorithm. The CPU carries out each instruction of a program in sequence and performs the basic arithmetical, logical, and input/output operations of the system, while a scheduling algorithm is used to handle every process. The authors also cover different scheduling disciplines, with examples for each algorithm, in order to show which algorithm is appropriate for various CPU goals.

Keywords: Kernel, Process State, Schedulers, Scheduling Algorithm, Utilization.

1. Introduction: The central processing unit (CPU) is a component of a ... when an attempt is made to execute a program, its admission to the set of currently executing processes is either authorized or delayed by the long-term scheduler. Second is the Mid-term Scheduler, which temporarily removes processes from main memory and places them on secondary memory (such as a disk drive) or vice versa. Last is the Short Term Scheduler, which decides which of the ready, in-memory processes are to be executed.

2. CPU Utilization: In order for a computer to be able to handle multiple applications simultaneously there must be an effective way of using the CPU.

The Different Unix Contexts

The different Unix contexts
• User-level
• Kernel "top half"
- System call, page fault handler, kernel-only process, etc.
• Software interrupt
• Device interrupt
• Timer interrupt (hardclock)
• Context switch code

Transitions between contexts
• User → top half: syscall, page fault
• User/top half → device/timer interrupt: hardware
• Top half → user/context switch: return
• Top half → context switch: sleep
• Context switch → user/top half

Top/bottom half synchronization
• Top half kernel procedures can mask interrupts: int x = splhigh (); /* ... */ splx (x);
• splhigh disables all interrupts, but there are also splnet, splbio, splsoftnet, ...
• Masking interrupts in hardware can be expensive
- Optimistic implementation: set mask flag on splhigh, check interrupted flag on splx

Kernel synchronization
• Need to relinquish CPU when waiting for events
- Disk read, network packet arrival, pipe write, signal, etc.
• int tsleep(void *ident, int priority, ...);
- Switches to another process
- ident is an arbitrary pointer, e.g., a buffer address
- priority is the priority at which to run when woken up
- PCATCH, if ORed into priority, means wake up on signal
- Returns 0 if awakened, or ERESTART/EINTR on signal
• int wakeup(void *ident);
- Awakens all processes sleeping on ident
- Restores the SPL in effect at the time they went to sleep (so fine to sleep at splhigh)

Process scheduling
• Goal: High throughput
- Minimize context switches to avoid wasting CPU, TLB misses, cache misses, even page faults.
• Goal: Low latency
- People typing at editors want fast response
- Network services can be latency-bound, not CPU-bound
• BSD time quantum: 1/10 sec (since ~1980)
- Empirically longest tolerable latency
- Computers now faster, but job queues also shorter

Scheduling algorithms
• Round-robin
• Priority scheduling
• Shortest process next (if you can estimate it)
• Fair-Share Schedule (try to be fair at level of users, not processes)

Multilevel feedback queues (BSD)
• Every runnable proc.
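The round-robin discipline and the 1/10 sec time quantum mentioned in the excerpt above can be illustrated with a small simulation. This is only a sketch and not BSD's scheduler: the function name `round_robin`, the process names, and the burst lengths are hypothetical, all processes are assumed to be runnable at time 0, and context-switch cost is ignored. Times are integer milliseconds to avoid floating-point drift.

```python
from collections import deque


def round_robin(bursts_ms, quantum_ms=100):
    """Simulate round-robin scheduling on one CPU.

    bursts_ms maps a (hypothetical) process name to its total CPU demand in
    milliseconds. Returns a dict of process name -> completion time in ms.
    Assumes every process is runnable at time 0 and switching is free.
    """
    ready = deque(bursts_ms.items())   # FIFO ready queue
    clock = 0
    finished = {}
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum_ms, remaining)   # run for one quantum at most
        clock += run
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))  # preempted: back of the queue
        else:
            finished[name] = clock
    return finished


if __name__ == "__main__":
    # Hypothetical workload: three CPU bursts of 250, 100 and 150 ms.
    print(round_robin({"A": 250, "B": 100, "C": 150}, quantum_ms=100))
```

With the workload shown, the short job B finishes after its first turn while A and C keep alternating, which is the latency benefit that a short quantum is meant to buy at the cost of more context switches.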

Comparing Systems Using Sample Data

Operating System and Process Monitoring Tools. Arik Brooks.

Abstract: Monitoring the performance of operating systems and processes is essential for debugging processes and systems, managing system resources effectively, making system decisions, and evaluating and examining systems. Monitoring tools fall into two main categories: real time and log-based. Real time monitoring tools measure the current system state and provide up-to-date information about system performance. Log-based monitoring tools record system performance information for post-processing and analysis and for finding trends in system performance. This paper presents a survey of the most commonly used tools for monitoring operating system and process performance in Windows- and Unix-based systems and describes the unique challenges of real time and log-based performance monitoring.

Table of Contents:
1. Introduction
2. Real Time Performance Monitoring Tools
2.1 Windows-Based Tools
2.1.1 Task Manager (taskmgr)
2.1.2 Performance Monitor (perfmon)
2.1.3 Process Monitor (pmon)
2.1.4 Process Explode (pview)
2.1.5 Process Viewer (pviewer)
2.2 Unix-Based Tools
2.2.1 Process Status (ps)
2.2.2 Top
2.2.3 Xosview
2.2.4 Treeps
2.3 Summary of Real Time Monitoring Tools
3. Log-Based Performance Monitoring Tools
3.1 Windows-Based Tools
3.1.1 Event Log Service and Event Viewer
3.1.2 Performance Logs and Alerts
3.1.3 Performance Data Log Service
3.2 Unix-Based Tools
3.2.1 System Activity Reporter (sar)
3.2.2 Cpustat
3.3 Summary of Log-Based Monitoring Tools
4.

A Simple Chargeback System for SAS® Applications Running on UNIX and Linux Servers

SAS Global Forum 2007, Systems Architecture. Paper 194-2007: A Simple Chargeback System For SAS® Applications Running on UNIX and Linux Servers. Michael A. Raithel, Westat, Rockville, MD.

Abstract: Organizations that run SAS on UNIX and Linux servers often have a need to measure overall SAS usage and to charge the projects and users who utilize the servers. This allows an organization to pay for the hardware, software, and labor necessary to maintain and run these types of shared servers. However, performance management and accounting software is normally expensive. Purchasing such software, configuring it, and managing it may be prohibitive in terms of cost and in terms of having staff with the right skill sets to do so. Consequently, many organizations with UNIX or Linux servers do not have a reliable way to measure the overall use of SAS software and to charge individuals and projects for its use.

This paper presents a simple methodology for creating a chargeback system on UNIX and Linux servers. It uses basic accounting programs found in the UNIX and Linux operating systems, and exploits them using SAS software. The paper presents an overview of the UNIX/Linux "sa" command and the basic accounting system. Then, it provides a SAS program that utilizes this command to capture and store monthly SAS usage statistics. The paper presents a second SAS program that reads the monthly SAS usage SAS data set and creates both a chargeback report and a general usage report. After reading this paper, you should be able to easily adapt the sample SAS programs to run on servers in your own environment.

CS414 SP 2007 Assignment 1

CS414 SP 2007 Assignment 1. Due Feb. 07 at 11:59pm. Submit your assignment using CMS.

1. Which of the following should NOT be allowed in user mode? Briefly explain.
a) Disable all interrupts
b) Read the time-of-day clock
c) Set the time-of-day clock
d) Perform a trap
e) TSL (test-and-set instruction used for synchronization)
Answer: (a) and (c) should not be allowed.

Which of the following components of a program state are shared across threads in a multi-threaded process? Briefly explain.
a) Register values
b) Heap memory
c) Global variables
d) Stack memory
Answer: (b) and (c) are shared.

2. After an interrupt occurs, the hardware needs to save its current state (content of registers etc.) before starting the interrupt service routine. One issue is where to save this information. Here are two options:
a) Put it in some special-purpose internal registers that are used exclusively by the interrupt service routine.
b) Put it on the stack of the interrupted process.
Briefly discuss the problems with the above two options.
Answer: (a) The problem is that a second interrupt might occur while the OS is in the middle of handling the first one. The OS must prevent the second interrupt from overwriting the internal registers. This strategy leads to long dead times when interrupts are disabled, and possibly to lost interrupts and lost data. (b) The stack of the interrupted process might be a user stack. The stack pointer may not be legal, which would cause a fatal error when the hardware tried to write some word at it.

Cgroups Py: Using Linux Control Groups and Systemd to Manage CPU Time and Memory

cgroups py: Using Linux Control Groups and Systemd to Manage CPU Time and Memory. Curtis Maves and Jason St. John, Research Computing, Purdue University, West Lafayette, Indiana, USA.

Abstract: cgroups provide a mechanism to limit user and process resource consumption on Linux systems. This paper discusses cgroups py, a Python script that runs as a systemd service that dynamically throttles users on shared resource systems, such as HPC cluster front-ends. This is done using the cgroups kernel API and systemd.

Index Terms: throttling, cgroups, systemd

I. INTRODUCTION
A frequent problem on any shared resource with many users is that a few users may over consume memory and CPU time. When these resources are exhausted, other users have degraded access to the system. A mechanism is needed to prevent this. By placing throttles on physical memory consumption and CPU time for each individual user, cgroups py prevents resource exhaustion on a shared system and ensures continued access for other users.

... individual resource. Each directory in a cgroupsfs hierarchy represents a cgroup. Subdirectories of a directory represent child cgroups of a parent cgroup. Within each directory of the cgroups tree, various files are exposed that allow for the control of the cgroup from userspace via writes, and the obtainment of statistics about the cgroup via reads [1].

B. Systemd and cgroups
Systemd uses a named cgroup tree (called systemd), which does not impose any resource limits, to manage processes because it provides a convenient way to organize processes in a hierarchical structure. At the top of the hierarchy, systemd creates three cgroups [2]:
• system.slice: This contains every non-user systemd
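The directory-and-file interface described in the excerpt above (subdirectories as child cgroups, control via writes, statistics via reads) can be sketched in a few lines of Python. This is not the code of the script the paper describes, which is not shown here. The helper names are hypothetical, and the file names used in the usage note (`memory.current`, `memory.max`) are assumptions taken from the cgroup v2 interface, which may differ from the cgroup version the paper targets.

```python
from pathlib import Path


def child_cgroups(cgroup_dir):
    """Return the names of child cgroups, i.e. the subdirectories."""
    return sorted(p.name for p in Path(cgroup_dir).iterdir() if p.is_dir())


def read_cgroup_file(cgroup_dir, name):
    """Read a statistic or setting exposed as a file inside the cgroup."""
    return (Path(cgroup_dir) / name).read_text().strip()


def write_cgroup_file(cgroup_dir, name, value):
    """Control the cgroup by writing a value to one of its files."""
    (Path(cgroup_dir) / name).write_text(f"{value}\n")
```

On a cgroup v2 system one might call `read_cgroup_file("/sys/fs/cgroup/user.slice", "memory.current")` to read current memory use, or `write_cgroup_file(..., "memory.max", 2 * 1024**3)` to set a limit; the path is an example only, and writes normally require root privileges.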

Linux CPU Schedulers: CFS and Muqss Comparison

Umeå University, 2021-06-14. Bachelor's Thesis in Computing Science. Linux CPU Schedulers: CFS and MuQSS Comparison. Name: David Shakoori Gustafsson. Supervisor: Anna Jonsson. Examiner: Ola Ringdahl.

Abstract: The goal of this thesis is to compare two process schedulers for the Linux operating system. In order to provide a responsive and interactive user experience, an efficient process scheduling algorithm is important. This thesis seeks to explain the potential performance differences by analysing the schedulers' respective designs. The two schedulers that are tested and compared are Con Kolivas's MuQSS and Linux's default scheduler, CFS. They are tested with respect to three main aspects: latency, turn-around time and interactivity. Latency is tested by using benchmarking software, the turn-around time by timing software compilation, and interactivity by measuring video frame drop percentages under various background loads. These tests are performed on a desktop PC running Linux OpenSUSE Leap 15.2, using kernel version 5.11.18. The test results show that CFS manages to keep a generally lower latency, while turn-around times differ little between the two. Running the turn-around time test's compilation using a single process gives MuQSS a small advantage, while dividing the compilation evenly among the available logical cores yields little difference. However, CFS clearly outperforms MuQSS in the interactivity test, where it manages to keep frame drop percentages considerably lower under each tested background load. As is apparent from the results, Linux's current default scheduler provides a more responsive and interactive experience than the alternative MuQSS within the testing conditions.

Types of Operating System 3

Types of Operating System

3. Batch Processing System
The OS in the early computers was fairly simple. Its major task was to transfer control automatically from one job to the next. The OS was always resident in memory. To speed up processing, operators batched together jobs with similar requirements and ran them through the computer as a group. Thus, the programmers would leave their programs with the operator. The operator would sort programs into batches with similar requirements and, as the computer became available, would run each batch. The output from each job would be sent back to the appropriate programmer.

[Figure: Memory layout of a batch system: operating system, user program area.]
[Figure: Batch processing operating system: jobs, batch, monitor, operating system, hardware.]

Advantages:
➢ A batch processing system is particularly useful for operations that require the computer or a peripheral device for an extended period of time with very little user interaction.
➢ Increased performance, as a job can start as soon as the previous job has finished, without any manual intervention.
➢ Priorities can be set for different batches.

Disadvantages:
➢ No interaction is possible with the user while the program is being executed.
➢ In this execution environment, the CPU is often idle, because the speeds of the mechanical I/O devices are intrinsically slower than those of electronic devices.

4. Multiprogramming System
The most important aspect of job scheduling is the ability to multi-program. Multiprogramming increases CPU utilization by organizing jobs so that the CPU always has one to execute. The idea is as follows: The operating system keeps several jobs in memory simultaneously.

CPU Scheduling

Chapter 5: CPU Scheduling
Operating System Concepts – 10th Edition, Silberschatz, Galvin and Gagne ©2018

Chapter 5: CPU Scheduling
• Basic Concepts
• Scheduling Criteria
• Scheduling Algorithms
• Thread Scheduling
• Multi-Processor Scheduling
• Real-Time CPU Scheduling
• Operating Systems Examples
• Algorithm Evaluation

Objectives
• Describe various CPU scheduling algorithms
• Assess CPU scheduling algorithms based on scheduling criteria
• Explain the issues related to multiprocessor and multicore scheduling
• Describe various real-time scheduling algorithms
• Describe the scheduling algorithms used in the Windows, Linux, and Solaris operating systems
• Apply modeling and simulations to evaluate CPU scheduling algorithms

Basic Concepts
• Maximum CPU utilization obtained with multiprogramming
• CPU–I/O Burst Cycle: process execution consists of a cycle of CPU execution and I/O wait
• CPU burst followed by I/O burst
• CPU burst distribution is of main concern

Histogram of CPU-burst Times
• Large number of short bursts
• Small number of longer bursts

CPU Scheduler
• The CPU scheduler selects from among the processes in the ready queue, and allocates a CPU core to one of them
• Queue may be ordered in various ways
• CPU scheduling decisions may take place when a
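The remark in the excerpt above that the ready queue "may be ordered in various ways" can be made concrete by comparing two orderings of the same CPU bursts. The sketch below is an illustration under stated assumptions, not material from the slides: the function name and the burst lengths are hypothetical, all processes are assumed to arrive at time 0, and scheduling is non-preemptive.

```python
def average_waiting_time(bursts):
    """Average time each process waits before it starts running.

    bursts is the list of CPU burst lengths in the order the scheduler
    runs them. Assumes all processes arrive at time 0 and run to
    completion without preemption.
    """
    clock = 0
    total_wait = 0
    for burst in bursts:
        total_wait += clock   # this process waited for everything before it
        clock += burst
    return total_wait / len(bursts)


arrival_order = [24, 3, 3]               # first-come, first-served order
shortest_first = sorted(arrival_order)   # shortest-job-first order

print(average_waiting_time(arrival_order))   # 17.0
print(average_waiting_time(shortest_first))  # 3.0
```

Running the long burst first makes both short jobs wait behind it, while sorting by burst length moves the waiting onto the one job that can best absorb it.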

Sample Answers

Operating Systems (G53OPS) - Examination. Graham Kendall.

Question 1
The development of operating systems can be seen to be closely associated with the development of computer hardware. Describe the main developments of operating systems that occurred at each computer generation. (17 Marks)
George 2+ and George 3 were mainframe operating systems used on ICL mainframes. What are the main differences between the two operating systems? (8 Marks)

Question 2
With regard to process synchronisation, describe what is meant by race conditions. (5 Marks)
Describe two methods that allow mutual exclusion with busy waiting to be implemented. Ensure you state any problems with the methods you describe. (10 Marks)
Describe an approach of mutual exclusion that does not require busy waiting. (10 Marks)

Question 3
What is meant by pre-emptive scheduling? (3 marks)
Describe the following scheduling algorithms
• Non-preemptive, First Come First Served (FCFS)
• Round Robin (RR)
• Multilevel Feedback Queue Scheduling
How can RR be made to mimic FCFS? (15 marks)
The Shortest Job First (SJF) scheduling algorithm can be proven to produce the minimum average waiting time.

Question 4
Intuitively, an operating system that allows multiprogramming provides better CPU utilisation than a monoprogramming operating system. However, there are benefits in a monoprogramming operating system. Describe these benefits. (7 marks)
We can demonstrate, using a model, that multiprogramming does provide better CPU utilisation. Describe such a model. Use the model to show how we can predict CPU utilisation when we add extra memory. Graph paper is supplied for this question, should you need it. (18 marks)
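The excerpt does not say which model Question 4 has in mind. One common textbook choice, assumed here, is the probabilistic model in which each job spends a fraction p of its time waiting for I/O, the waits are independent, and the CPU is idle only when all n jobs in memory are waiting at once, giving utilisation 1 - p^n. The sketch below uses hypothetical function names and memory sizes to show how adding memory, and therefore jobs, changes the prediction.

```python
def cpu_utilisation(io_wait_fraction, n_jobs):
    """Predicted CPU utilisation under the assumed model 1 - p**n.

    io_wait_fraction (p) is the fraction of time one job waits for I/O.
    The model assumes the jobs wait independently of each other.
    """
    return 1.0 - io_wait_fraction ** n_jobs


def jobs_that_fit(total_memory_mb, os_memory_mb, job_memory_mb):
    """Number of equally sized jobs that fit beside the operating system."""
    return (total_memory_mb - os_memory_mb) // job_memory_mb


if __name__ == "__main__":
    p = 0.8  # hypothetical: each job waits for I/O 80% of the time
    for total in (512, 1024):  # hypothetical memory sizes in MB
        n = jobs_that_fit(total, os_memory_mb=128, job_memory_mb=96)
        print(total, "MB:", n, "jobs, utilisation", round(cpu_utilisation(p, n), 3))
```

The gain from each added job shrinks as n grows, which is why the model is useful for judging whether a memory upgrade is worth its cost.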

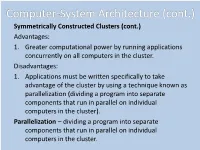

Computer-System Architecture (Cont.) Symmetrically Constructed Clusters (Cont.) Advantages: 1

Computer-System Architecture (cont.)
Symmetrically Constructed Clusters (cont.)
Advantages:
1. Greater computational power by running applications concurrently on all computers in the cluster.
Disadvantages:
1. Applications must be written specifically to take advantage of the cluster by using a technique known as parallelization (dividing a program into separate components that run in parallel on individual computers in the cluster).

[Figure: General structure of a clustered system: computers joined by interconnects and attached to a storage area network (SAN).]

Operating-System Structure
The operating system provides the environment within which programs are executed. Operating systems must have the ability to multiprogram. (A single program cannot, in general, keep either the CPU or the I/O devices busy at all times.) Single users frequently have multiple programs running. Multiprogramming increases CPU utilization by organizing jobs (code and data) so that the CPU always has one to execute. The operating system keeps several jobs in memory (on disk) simultaneously, which is called a job pool. This pool consists of all processes residing on disk awaiting allocation of main memory (to be executed).

[Figure: Memory layout for the multiprogramming system: operating system at address 0, followed by job 1 through job 5, up to 512M.]

The set of jobs in memory can be a subset of the jobs kept in the job pool. The operating system picks and begins to execute one of the jobs in memory. Eventually, the job may have to wait for some task, such as an I/O operation, to complete.

Billing the CPU Time Used by System Components on Behalf of VMs Boris Djomgwe Teabe, Alain Tchana, Daniel Hagimont

Billing the CPU Time Used by System Components on Behalf of VMs. Boris Djomgwe Teabe, Alain Tchana, Daniel Hagimont.

To cite this version: Djomgwe Teabe, Boris and Tchana, Alain-Bouzaïde and Hagimont, Daniel. Billing the CPU Time Used by System Components on Behalf of VMs. (2016) In: 13th IEEE International Conference on Services Computing (SCC 2016), 27 June 2016 - 2 July 2016 (San Francisco, CA, United States). pp. 307-315.

HAL Id: hal-01782591, https://hal.archives-ouvertes.fr/hal-01782591. Submitted on 2 May 2018.

This is an author-deposited version published in the Open Archive Toulouse Archive Ouverte (OATAO): http://oatao.univ-toulouse.fr/ Eprints ID: 18957. Link to this article: http://dx.doi.org/10.1109/SCC.2016.47