Autonomous Weapon Systems and International Humanitarian Law

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Managing the Human Weapon System: a Vision for an Air Force Human-Performance Doctrine

Calhoun: The NPS Institutional Archive DSpace Repository Faculty and Researchers Faculty and Researchers Collection 2009 Managing the Human Weapon System: A Vision for an Air Force Human-Performance Doctrine Tvaryanas, A.P. Tvaryanas, A.P., Brown, L., and Miller, N.L. "Managing the Human Weapon System: A Vision for an Air Force Human-Performance Doctrine", Air & Space Power Journal, pp. 34-41, Summer 2009. http://hdl.handle.net/10945/36508 Downloaded from NPS Archive: Calhoun In air combat, “the merge” occurs when opposing aircraft meet and pass each other. Then they usually “mix it up.” In a similar spirit, Air and Space Power Journal’s “Merge” articles present contending ideas. Readers are free to join the intellectual battlespace. Please send comments to [email protected] or [email protected]. Managing the Human Weapon System A Vision for an Air Force Human-Performance Doctrine LT COL ANTHONY P. TVARYANAS, USAF, MC, SFS COL LEX BROWN, USAF, MC, SFS NITA L. MILLER, PHD* The basic planning, development, organization and training of the Air Force must be well rounded, covering every modern means of waging air war. The Air Force doctrines likewise must be flexible at all times and entirely uninhibited by tradition. —Gen Henry H. “Hap” Arnold IN A RECENT paper on America’s Air Force, Gen T. Michael Moseley asserted that we are at a strategic crossroads as a consequence of global dynamics and shifts in the character of future warfare; he also noted that “today’s confluence of global [...] special operations forces’ declaration that “humans are more important than hardware” in asymmetric warfare.2 Consistent with this view, in January 2004, the deputy secretary of defense directed the Joint Staff to “develop the next generation of . -

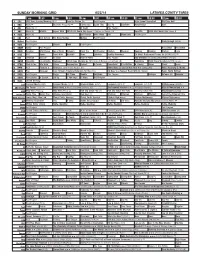

Sunday Morning Grid 6/22/14 Latimes.Com/Tv Times

SUNDAY MORNING GRID 6/22/14 LATIMES.COM/TV TIMES 7 am 7:30 8 am 8:30 9 am 9:30 10 am 10:30 11 am 11:30 12 pm 12:30 2 CBS CBS News Sunday Morning (N) Å Face the Nation (N) Paid Program High School Basketball PGA Tour Golf 4 NBC News Å Meet the Press (N) Å Conference Justin Time Tree Fu LazyTown Auto Racing Golf 5 CW News (N) Å In Touch Paid Program 7 ABC News (N) Wildlife Exped. Wild 2014 FIFA World Cup Group H Belgium vs. Russia. (N) SportCtr 2014 FIFA World Cup: Group H 9 KCAL News (N) Joel Osteen Mike Webb Paid Woodlands Paid Program 11 FOX Paid Joel Osteen Fox News Sunday Midday Paid Program 13 MyNet Paid Program Crazy Enough (2012) 18 KSCI Paid Program Church Faith Paid Program 22 KWHY Como Paid Program RescueBot RescueBot 24 KVCR Painting Wild Places Joy of Paint Wyland’s Paint This Oil Painting Kitchen Mexican Cooking Cooking Kitchen Lidia 28 KCET Hi-5 Space Travel-Kids Biz Kid$ News LinkAsia Healthy Hormones Ed Slott’s Retirement Rescue for 2014! (TVG) Å 30 ION Jeremiah Youssef In Touch Hour of Power Paid Program Into the Blue ›› (2005) Paul Walker. (PG-13) 34 KMEX Conexión En contacto Backyard 2014 Copa Mundial de FIFA Grupo H Bélgica contra Rusia. (N) República 2014 Copa Mundial de FIFA: Grupo H 40 KTBN Walk in the Win Walk Prince Redemption Harvest In Touch PowerPoint It Is Written B. Conley Super Christ Jesse 46 KFTR Paid Fórmula 1 Fórmula 1 Gran Premio Austria. -

International Treaties Signed by the State of Palestine

INTERNATIONAL TREATIES SIGNED BY THE STATE OF PALESTINE

| No. | Area | Name of Treaty and Date of its Adoption | Entry into force |
|---|---|---|---|
| 1 | Human Rights | Convention Against Torture and other Cruel, Inhuman or Degrading Treatment or Punishment (CAT), 10 December 1984 | 2 May 2014 |
| 2 | Human Rights | Convention on the Elimination of All Forms of Discrimination Against Women (CEDAW), 18 December 1979 | 2 May 2014 |
| 3 | Human Rights | Convention on the Political Rights of Women, 31 March 1953 | 2 April 2015 |
| 4 | Human Rights | Convention on the Rights of the Child, 20 November 1989 | 2 May 2014 |
| 5 | Human Rights | Convention on the Rights of Persons with Disabilities, 13 December 2006 | 2 May 2014 |
| 6 | Human Rights | International Covenant on Civil and Political Rights (ICCPR), 16 December 1966 | 2 July 2014 |
| 7 | Human Rights | International Convention on the Elimination of all Forms of Racial Discrimination, 7 March 1966 | 2 May 2014 |
| 8 | Human Rights | International Covenant on Economic, Social and Cultural Rights (ICESCR), 16 December 1966 | 2 July 2014 |
| 9 | Human Rights | Optional Protocol to the Convention of the Rights of the Child on the Involvement of Children in Armed Conflict, 25 May 2000 | 7 May 2014 |
| 10 | | Hague Convention (IV) respecting the Laws and Customs of War on Land and its annex: Regulations concerning the Laws and Customs of War on Land. The Hague, 18 October 1907 | - |
| 11 | | Geneva Convention (I) for the Amelioration of the Condition of the Wounded and Sick in Armed Forces in the Field, 12 August 1949 | 2 April 2014 |
| 12 | | Geneva Convention (II) for the Amelioration of the Condition of Wounded, Sick and Shipwrecked Members of Armed Forces at Sea, 12 August 1949 | 2 April 2014 |

Interna- 13. -

Swordplay Through the Ages Daniel David Harty Worcester Polytechnic Institute

Worcester Polytechnic Institute Digital WPI Interactive Qualifying Projects (All Years) Interactive Qualifying Projects April 2008 Swordplay Through The Ages Daniel David Harty Worcester Polytechnic Institute Drew Sansevero Worcester Polytechnic Institute Jordan H. Bentley Worcester Polytechnic Institute Timothy J. Mulhern Worcester Polytechnic Institute Follow this and additional works at: https://digitalcommons.wpi.edu/iqp-all Repository Citation Harty, D. D., Sansevero, D., Bentley, J. H., & Mulhern, T. J. (2008). Swordplay Through The Ages. Retrieved from https://digitalcommons.wpi.edu/iqp-all/3117 This Unrestricted is brought to you for free and open access by the Interactive Qualifying Projects at Digital WPI. It has been accepted for inclusion in Interactive Qualifying Projects (All Years) by an authorized administrator of Digital WPI. For more information, please contact [email protected]. IQP 48-JLS-0059 SWORDPLAY THROUGH THE AGES Interactive Qualifying Project Proposal Submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for graduation by Jordan Bentley, Daniel Harty, Timothy Mulhern, Drew Sansevero Date: 5/2/2008 Professor Jeffrey L. Forgeng, Major Advisor Keywords: 1. Swordplay 2. Historical Documentary Video 3. Higgins Armory Contents Abstract: -

Republic of Kazakhstan

Deposited on 26 November 2020 Republic of Kazakhstan Status of List of Reservations and Notifications This document contains the consolidated list of reservations and notifications made by Republic of Kazakhstan upon deposit of the instrument of ratification pursuant to Articles 28(5) and 29(1) of the Convention, and subsequent to that deposit. Article 2 – Interpretation of Terms Notification - Agreements Covered by the Convention Pursuant to Article 2(1)(a)(ii) of the Convention, Republic of Kazakhstan wishes the following agreements to be covered by the Convention:

| No. | Title | Other Contracting Jurisdiction | Original/Amending Instrument | Date of Signature | Date of Entry into Force |
|---|---|---|---|---|---|
| 1 | Convention between the Government of the Republic of Armenia and the Government of the Republic of Kazakhstan for the Avoidance of Double Taxation and the Prevention of Fiscal Evasion with respect to Taxes on Income and on Property | Armenia | Original | 06-11-2006 | 19-01-2011 |
| 2 | Convention between the Republic of Austria and the Republic of Kazakhstan with respect to Taxes on Income and on Capital | Austria | Original | 10-09-2004 | 01-03-2006 |
| 3 | Convention between the Government of the Republic of Azerbaijan and the Government of the Republic of Kazakhstan for the Avoidance of Double Taxation and the Prevention of Fiscal Evasion with respect to Taxes on Income and on Property | Azerbaijan | Original | 16-09-1996 | 07-05-1997 |
| | | | Amending Instrument (a) | 03-04-2017 | 27-04-2018¹ |
| 4 | Agreement between the Government of the Republic of Kazakhstan and the Government of the Republic - | | Original | 11-04-1997 | 13-12-1997 |

Adrian Maher

ADRIAN MAHER WRITER/DIRECTOR/SUPERVISING PRODUCER Los Angeles, CA Cell: (310) 922-3080 Email: [email protected] Website: www.maherproductions.com PROFESSIONAL EXPERIENCE – REALITY AND DOCUMENTARY TELEVISION More than two decades of experience as a writer/director of documentary television programs, print journalist, on-air reporter, book author and private investigator. Strong writing, directing, producing, voiceover, investigative, digital media, and managerial skills. UNSUNG HOLLYWOOD: HARLEM GLOBETROTTERS: (TV ONE NETWORK) (ASmith and Company) Wrote, Directed and Produced a one-hour episode on the history of the Harlem Globetrotters from their founding in Chicago in 1927 to their present-day status as one of the most recognizable sports brands in the world. SPLIT-SECOND DECISION: (MSNBC) (The Gurin Company) Wrote, Directed and Produced three one-hour documentaries about people caught in catastrophic situations and the instant decisions they make to survive. UNSUNG HOLLYWOOD: RICHARD ROUNDTREE: (TV ONE NETWORK) (ASmith and Company) Wrote and Produced a one-hour episode/biography on America’s first black-action movie star – Richard Roundtree – (star of “Shaft”) and the “blaxploitation” era in Hollywood films. TRIGGERS: WEAPONS THAT CHANGED THE WORLD (MILITARY CHANNEL) (Morningstar Entertainment) Wrote and Produced several episodes on history’s most fearsome weapons including “Gatling Guns,” “Tanks” and “Light Machine Guns.” -

A HRC WG 6 34 KAZ 2 Kazakhstan Annex E

Tables for UN Compilation on Kazakhstan I. Scope of international obligations1 A. International human rights treaties2 Status during previous cycle Action after review Not ratified/not accepted Ratification, accession or ICERD (1998) CRPD (2015) ICCPR -OP 2 succession ICESCR (2006) ICRMW/* ICCPR (2006) CEDAW (1998) CAT (1998) OP-CAT (2008) CRC (1994) OP-CRC-AC (2003) OP-CRC-SC (2001) CRPD (signature, 2008) ICPPED (2009) Complaints procedures, ICERD, art. 14 (2008) OP-ICESCR (signature, inquiries and 2010) OP-ICESCR (signature, urgent action3 2010) ICCPR, art. 41 ICCPR-OP 1 (2009) OP-CRC-IC OP-CEDAW, art. 8 (2001) ICRMW CAT, arts. 20 (1998), 21 OP-CRPD (signature, 2008) and 22 (2008) ICPPED, arts. 31 and 32 OP-CRPD (signature, 2008) Reservations and / or declarations Status during previous cycle Action after review Current Status ICCPR -OP 1 (Declaration, ICCPR -OP 1 (Declaration, art. 1, 2009) art. 1) OP-CAT (Declaration, art. OP-CAT (Declaration, art. 24.1, 2010) 24.1) OP-CRC-AC (Declaration, OP-CRC-AC (Declaration, art.3.2, minimum age of art.3.2, minimum age of recruitment at 19 years recruitment at 19 years) 2003) B. Other main relevant international instruments Status during previous cycle Action after review Not ratified Ratification, accession or Convention on the Convention against Rome Statute of the succession Prevention and Punishment Discrimination in Education International Criminal Court of the Crime of Genocide (2016) Conventions on stateless persons4 ILO Conventions Nos. 169 and 1895 Geneva Conventions of 12 August 1949 and Additional Protocols thereto6 Conventions on refugees7 Palermo Protocol8 ILO fundamental Conventions9 II. -

ICRC Statement -- Status of the Protocols Additional

United Nations General Assembly 73rd Session Sixth Committee General debate on the Status of the Protocols additional to the Geneva Conventions of 1949 and relating to the protection of victims of armed conflicts [Agenda item 83, to be considered in October 2018] Statement by the International Committee of the Red Cross (ICRC) October 2018 Mr / Madam Chair, On the occasion of the 40th anniversary of the Additional Protocols of 1977, the ICRC took several steps to promote the universalization and implementation of these instruments. Its initiatives included the publication of a policy paper on the impact and practical relevance of the Additional Protocols, raising the question of accession in its dialogue with States, and highlighting the relevance of these instruments through national and regional events. Among other steps taken, the ICRC wrote to States not yet party to the Additional Protocols encouraging them to adhere to these instruments. We reiterate our call to States that have not yet done so, to ratify the Additional Protocols and other key instruments of IHL. At the time of writing, 174 States are party to Additional Protocol I and 168 States are party to Additional Protocol II. Since our last intervention, Burkina Faso and Madagascar became States number 73 and 74 to ratify Additional Protocol III. In April 2018, Palestine made a declaration pursuant to Article 90 of Additional Protocol I making it the 77th State to accept the competence of the International Humanitarian Fact-Finding Commission. We welcome the fact that the number of States party to this and other key instruments of IHL has continued to grow. -

Palestine's Accession to Multilateral Treaties: Effective Circumvention Of

Palestine’s Accession to Multilateral Treaties: Effective Circumvention of the Statehood Question and its Consequences Shadi SAKRAN* & HAYASHI Mika** Abstract In the light of the question about the Palestinian statehood that divides the international community and the academic opinions, this note argues that the accession to multilateral treaties by Palestine has proven to be a clever way of circumventing this unsettled statehood question. The key to this effective circumvention is the depositary. Both in the treaties deposited with the UN Secretary-General and with a number of national governments, the instruments of accession by Palestine have been accepted by the depositaries without requiring or producing any clarification of the statehood question. The note will also show the legal and the practical consequences of the treaty accessions by Palestine. The legal consequences are different for different groups of States Parties. As a result of the treaty accession, (1) the rights and obligations of any given treaty arise between Palestine and States that do not explicitly oppose its accession, including those who do not recognize Palestine as a State; (2) the opposing States cannot deny the accession on behalf of all the States Parties, but can at least prevent the treaty relationship from arising between Palestine and themselves. The practical consequence of the treaty accession is that within such a treaty, and strictly for the purpose of that treaty, the accession allows Palestine to act like a State. Introduction The question of Palestinian statehood is deeply controversial, as it can be seen in the divided academic opinions1 and the divided attitudes of States.2 The Resolution * Doctoral student, Graduate School of International Cooperation Studies, Kobe University. -

Adoption of an Additional Distinctive Emblem

Volume 88 Number 186 March 2006 REPORTS AND DOCUMENTS Adoption of an Additional Distinctive Emblem On 12 and 13 September 2005, Switzerland opened informal consultations on the holding of a diplomatic conference necessary for the adoption of a Third Protocol Additional to the Geneva Conventions on the Emblem in which all States party to the Geneva Conventions were invited to take part. Meeting in Seoul from 16 to 18 November 2005, the Council of Delegates adopted by consensus a resolution in which the Council: welcomed the work achieved since the 28th International Conference, in particular by the Government of Switzerland in its capacity as depositary of the Geneva Conventions, resulting in the convening on December 5, 2005 of the diplomatic conference necessary for the adoption of the proposed Third Protocol Additional to the Geneva Conventions on the Emblem; urged National Societies to approach their respective governments in order to underline to them the necessity to settle the question of the emblem at the diplomatic conference through the adoption of the proposed draft third additional Protocol; requested the Standing Commission, the ICRC and the Federation as a matter of urgency to undertake the measures needed to give effect to the third Protocol after its adoption, especially with a view to ensuring the achievement as soon as possible of the Movement’s principle of universality.1 The Diplomatic Conference was convened by Switzerland and held in Geneva from 5 to 8 December 2005. International Review of the Red Cross 1 Council of Delegates, Seoul, 16–18 November 2005, Resolution 5: ‘‘Follow-up to Resolution 5 of the Council of Delegates in 2003, Emblem’’, International Review of the Red Cross, Vol. -

Summary of the Geneva Conventions of 1949 and Their Additional Protocols International Humanitarian Law April 2011

Summary of the Geneva Conventions of 1949 and Their Additional Protocols International Humanitarian Law April 2011 Overview: Protecting the Vulnerable in War International humanitarian law (IHL) is a set of rules that seek for humanitarian reasons to limit the effects of armed conflict. IHL protects persons who are not or who are no longer participating in hostilities and it restricts the means and methods of warfare. IHL is also known as the law of war and the law of armed conflict. A major part of international humanitarian law is contained in the four Geneva Conventions of 1949 that have been adopted by all nations in [...] Byzantine Empire and the Lieber Code used during the United States Civil War. The development of modern international humanitarian law is credited to the efforts of 19th century Swiss businessman Henry Dunant. In 1859, Dunant witnessed the aftermath of a bloody battle between French and Austrian armies in Solferino, Italy. The departing armies left a battlefield littered with wounded and dying men. Despite Dunant’s valiant efforts to mobilize aid for the soldiers, thousands died. In “A Memory of Solferino,” his book [...] The Red Cross and International Humanitarian Law The Red Cross and the Geneva Conventions were born when Henry Dunant witnessed the devastating consequences of war at a battlefield in Italy. In the aftermath of that battle, Dunant argued successfully for the creation of a civilian relief corps to respond to human suffering during conflict, and for rules to set limits on how war is waged. Inspired in part by her work in the Civil War, Clara Barton would later found the American Red Cross and also advocate for the U.S. -

The 29th International Conference of the Red Cross and Red Crescent, Geneva, 20–22 June 2006: Challenges and Outcome François Bugnion*

Volume 89 Number 865 March 2007 REPORTS AND DOCUMENTS The 29th International Conference of the Red Cross and Red Crescent, Geneva, 20–22 June 2006: challenges and outcome François Bugnion* 1. From the Diplomatic Conference on the emblem to the 29th International Conference of the Red Cross and Red Crescent On 8 December 2005, the Diplomatic Conference on the emblem, convened by the Swiss government as the depositary of the Geneva Conventions and their Additional Protocols, adopted by ninety-eight votes to twenty-seven, with ten abstentions, the Protocol additional to the Geneva Conventions of 12 August 1949, and relating to the Adoption of an Additional Distinctive Emblem (Protocol III).1 While it was regrettable that the international community became divided over the issue, the adoption of Protocol III was nevertheless an important success and marked a decisive step towards resolving a question that had long prevented the International Red Cross and Red Crescent Movement from reaching the universality to which it aspired and improving a situation that was perceived * François Bugnion is diplomatic advisor of the ICRC and was director for International Law and Co-operation within the Movement at the time of the Conference. 1 Final Act of the Diplomatic Conference on the adoption of the Third Protocol additional to the Geneva Conventions of 12 August 1949, and relating to the adoption of an Additional Distinctive Emblem (Protocol III), paragraphs 21 and 23. The Final Act of the Diplomatic Conference and Protocol III of 8 December 2005 were published in the International Review of the Red Cross, No.