Revolutionizing High Performance Computing: NVIDIA® Tesla™



Recommended publications


The Paramountcy of Reconfigurable Computing

Energy Efficient Distributed Computing Systems, Edited by Albert Y. Zomaya, Young Choon Lee. ISBN 978-0-471-90875-4 Copyright © 2012 Wiley, Inc. Chapter 18 The Paramountcy of Reconfigurable Computing Reiner Hartenstein

Abstract. Computers are very important for all of us. But brute force disruptive architectural developments in industry and threatening unaffordable operation cost by excessive power consumption are a massive future survival problem for our existing cyber infrastructures, which we must not surrender. The progress of performance in high performance computing (HPC) has stalled because of the "programming wall" caused by lacking scalability of parallelism. This chapter shows that Reconfigurable Computing is the silver bullet to obtain massively better energy efficiency as well as much better performance, also by the upcoming methodology of HPRC (high performance reconfigurable computing). We need a massive campaign for migration of software over to configware. Also because of the multicore parallelism dilemma, we anyway need to redefine programmer education. The impact is a fascinating challenge to reach new horizons of research in computer science. We need a new generation of talented innovative scientists and engineers to start the beginning second history of computing. This paper introduces a new world model.

18.1 Introduction In Reconfigurable Computing, e.g. by FPGA (Table 15), practically everything can be implemented which is running on traditional computing platforms. For instance, recently the historical Cray 1 supercomputer has been reproduced cycle-accurate binary-compatible using a single Xilinx Spartan-3E 1600 development board running at 33 MHz (the original Cray ran at 80 MHz) 0.

Nvidia Tesla Gpu Computing Processor Ushers in The

For further information, contact: Andrew Humber, NVIDIA Corporation, (408) 486 8138, [email protected]

FOR IMMEDIATE RELEASE: NVIDIA TESLA GPU COMPUTING PROCESSOR USHERS IN THE ERA OF PERSONAL SUPERCOMPUTING

New NVIDIA Tesla Solutions Bring Unprecedented, High-Density Parallel Processing to the HPC Market

“NVIDIA Tesla™ is going to make discovery of huge oil reserves possible through faster and more accurate interpretation of geophysical data.” (Steve Briggs, Headwave, Inc.)

“NVIDIA Tesla will give us a 100-fold increase in some of our programs, and this is on desktop machines where previously we would have had to run these calculations on a cluster.” (John Stone, University of Illinois Urbana-Champaign)

“NVIDIA Tesla has opened up completely new worlds for computational electromagnetics.” (Ryan Schneider, Acceleware)

SANTA CLARA, CA, JUNE 20, 2007: High-performance computing in fields like the geosciences, molecular biology, and medical diagnostics enables discoveries that transform billions of lives every day. Universities, research institutions, and companies in these and other fields face a daunting challenge: as their simulation models become exponentially complex, so does their need for vast computational resources. NVIDIA took a giant step in meeting this challenge with its announcement of a new class of processors based on a revolutionary new GPU. Under the NVIDIA® Tesla™ brand, NVIDIA will offer a family of GPU computing products that will place the power previously available only from supercomputers in the hands of every scientist and engineer. Today’s workstations will be transformed into “personal supercomputers.” “Today’s science is no longer confined to the laboratory; scientists employ computer simulations before a single physical experiment is performed.

A Personal Supercomputer for Climate Research

CSAIL Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology. A Personal Supercomputer for Climate Research. James C. Hoe, Chris Hill. In proceedings of SuperComputing'99, Portland, Oregon 1999, August. Computation Structures Group Memo 425. The Stata Center, 32 Vassar Street, Cambridge, Massachusetts 02139.

August 24, 1999. James C. Hoe, Lab for Computer Science, Massachusetts Institute of Technology, Cambridge, MA 02139, jho [email protected]. Chris Hill, Alistair Adcroft, Dept. of Earth, Atmospheric and Planetary Sciences, Massachusetts Institute of Technology, Cambridge, MA 02139, fcnh,[email protected]. To appear in Proceedings of SC'99.

This work was funded in part by grants from the National Science Foundation and NOAA and with funds from the MIT Climate Modeling Initiative[23]. The hardware development was carried out at the Laboratory for Computer Science, MIT and was funded in part by the Advanced Research Projects Agency of the Department of Defense under the Office of Naval Research contract N00014-92-J-1310 and Ft. Huachuca contract DABT63-95-C-0150. James C. Hoe is supported by an Intel Foundation Graduate Fellowship. The computational resources for this work were made available through generous donations from Intel Corporation. We acknowledge Arvind and John Marshall for their continuing support. We thank Andy Boughton and Larry Rudolph for their invaluable technical input.

Abstract. We describe and analyze the performance of a cluster of personal computers dedicated to coupled climate simulations.

Intellectual Personal Supercomputer for Solving R&D Problems

ISSN 2409-9066. Sci. innov. 2016, 12(5): 15-27 doi: https://doi.org/10.15407/scine12.05.015 Khimich, O.M., Molchanov, I.M., Mova, V.I., Nikolaichuk, O.O., Popov, O.V., Chistjakova, T.V., Jakovlev, M.F., Tulchinsky, V.G., and Yushchenko, R.A. Glushkov Institute of Cybernetics, the NAS of Ukraine, 40, Glushkov Av., Kyiv, 03187, Ukraine, tel.: +38 (044) 526-60-88, fax: +38 (044) 526-41-78

INTELLECTUAL PERSONAL SUPERCOMPUTER FOR SOLVING R&D PROBLEMS

New Ukrainian intelligent personal supercomputer of hybrid architecture «Inparcom-pg» has been developed for modeling in defense industry, engineering, construction, etc. Intelligent software for automated analysis and numeric solution of computational problems with approximate data has been developed. It has been used for implementation of simulation applications in construction, welding, and underground filtration.

Keywords: modeling, simulation, intelligent computer, hybrid architecture, computational mathematics, and approximate data.

Mathematical modeling and associated computer experiment is currently among the main tools for studying various natural phenomena and processes in society, economy, science and technology. Simulation significantly reduces the development time and cost of new objects in energy and resource-saving. It increases efficiency of real test planning and makes it possible to consider several variants to select the best.

[...] computer capabilities despite the multi-core processors. GPGPU technology (from General-Purpose Graphic Processing Units) meets the demand of accelerating calculations on multi-core computers for big numbers of similar arithmetic operations. It means general purpose computing on video cards. The approach has bumped the development of

Downloaded for Personal Non-Commercial Research Or Study, Without Prior Permission Or Charge

Bissland, Lesley (1996) Hardware and software aspects of parallel computing. PhD thesis http://theses.gla.ac.uk/3953/ Copyright and moral rights for this thesis are retained by the author. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the Author. The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the Author. When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given. Glasgow Theses Service http://theses.gla.ac.uk/ [email protected]

Hardware and Software aspects of Parallel Computing by Lesley Bissland. A thesis submitted to the University of Glasgow for the degree of Doctor of Philosophy in the Faculty of Science, Department of Chemistry, January 1996. © L. Bissland 1996

Abstract. Parallel computing has developed in an attempt to satisfy the constant demand for greater computational power than is available from the fastest processors of the time. This has evolved from parallelism within a single Central Processing Unit to thousands of CPUs working together. The development of both novel hardware and software for parallel multiprocessor systems is presented in this thesis. A general introduction to parallel computing is given in Chapter 1. This covers the hardware design concepts used in the field such as vector processors, array processors and multiprocessors. The basic principles of software engineering for parallel machines (i.e. decomposition, mapping and tuning) are also discussed.

Guide to Building Your Own Tesla Personal Supercomputer System

This guide is to help you build a Tesla Personal Supercomputer. If you have experience in building systems/workstations, you may want to build your own system. Otherwise, the easiest thing is to buy an off-the-shelf Tesla Personal Supercomputer from one of these resellers. As with building any system, it is at your own risk and responsibility. There are many possible components to choose from when you build such a system. NVIDIA provides general guidance, but cannot test every configuration and combination of components.

Minimum Specifications of Main Components. These minimum specifications are for folks who want to label their system a “Tesla Personal Supercomputer”. You can of course build a workstation with fewer Tesla GPUs in it. The minimums are: 3x Tesla C1060; quad-core CPU, 2.33 GHz (Intel or AMD); 12 GB of system memory (4 GB of system memory per Tesla C1060); Linux 64-bit or Windows XP 64-bit; system acoustics < 45 dBA; 1200 W power supply.

Example of Complete 4 Tesla C1060 System Configuration. This is a list of suggested components to build a 4x Tesla C1060 PSC. Several of these components, such as the memory, CPU, power supply, and case, can be substituted with an appropriate equivalent and suitable component. We do not certify any components for the PSC; this is left to the system builders.

4 Tesla C1060 Configuration. Motherboard: Tyan S7025. PCI-e bandwidth: 4x PCI-e x16 Gen2 slots. Tesla GPUs: 4x Tesla C1060. On-board graphics (works with Linux, Windows requires NVIDIA GPU in Graphics
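The minimum specifications quoted in the guide excerpt above can be read as a simple checklist. The following is a minimal sketch of such a check, not an NVIDIA tool: the `Build` record, its field names, and the helper functions are hypothetical, and the rule that memory scales at 4 GB per installed GPU beyond the 3-GPU minimum is an interpretation of the guide's "4 GB of system memory per Tesla C1060" note.

```python
from dataclasses import dataclass
from typing import List

# Minimum figures quoted in the guide excerpt above.
MIN_TESLA_GPUS = 3
MIN_CPU_CORES = 4
MIN_CPU_GHZ = 2.33
MEMORY_GB_PER_GPU = 4
MAX_NOISE_DBA = 45.0   # the guide asks for strictly less than 45 dBA
MIN_PSU_WATTS = 1200


@dataclass
class Build:
    """Hypothetical description of a candidate system (not an NVIDIA format)."""
    tesla_gpus: int
    cpu_cores: int
    cpu_ghz: float
    system_memory_gb: int
    os_is_64bit: bool
    noise_dba: float
    psu_watts: int


def required_memory_gb(tesla_gpus: int) -> int:
    """4 GB per Tesla C1060, never below the 12 GB implied by three GPUs."""
    return MEMORY_GB_PER_GPU * max(tesla_gpus, MIN_TESLA_GPUS)


def unmet_requirements(build: Build) -> List[str]:
    """Return the minimum specifications that the build does not meet."""
    problems = []
    if build.tesla_gpus < MIN_TESLA_GPUS:
        problems.append(f"needs at least {MIN_TESLA_GPUS} Tesla C1060 GPUs")
    if build.cpu_cores < MIN_CPU_CORES:
        problems.append("needs a quad-core (or larger) CPU")
    if build.cpu_ghz < MIN_CPU_GHZ:
        problems.append(f"needs a CPU clock of at least {MIN_CPU_GHZ} GHz")
    needed_gb = required_memory_gb(build.tesla_gpus)
    if build.system_memory_gb < needed_gb:
        problems.append(f"needs at least {needed_gb} GB of system memory")
    if not build.os_is_64bit:
        problems.append("needs a 64-bit Linux or Windows XP installation")
    if build.noise_dba >= MAX_NOISE_DBA:
        problems.append("system acoustics must stay below 45 dBA")
    if build.psu_watts < MIN_PSU_WATTS:
        problems.append(f"needs a power supply of at least {MIN_PSU_WATTS} W")
    return problems
```

Calling `unmet_requirements` on a record that matches the quoted minimums exactly (3 GPUs, quad-core 2.33 GHz CPU, 12 GB, 64-bit OS, under 45 dBA, 1200 W) returns an empty list, while a 4-GPU build with only 12 GB of memory is flagged under the per-GPU memory interpretation.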

Embedded Graphics Processing Units

Embedded Graphics Processing Units by Patrick H. Stakem (c) 2017. Number 18 in the Computer Architecture Series.

Table of Contents: Introduction; Author; The Architectures; The ALU; The FPU; The GPU; Graphics Data Structure; Graphic Operations on data; Massively Parallel Architectures; Supercomputer on a Module; Embedded Processors; CUDA; GPU computing; Massively Parallel Systems; Interconnection of CPU/GPU's and memory on a single chip; Embedded GPU Products; Nvidia

Supercomputer at Affordable Price

November 10, 2008. Supercomputer at Affordable Price. Supermicro Cost-effective Personal Supercomputer in Volume Production.

SAN JOSE, Calif., Nov 10, 2008 /PRNewswire-FirstCall via COMTEX News Network/ -- Super Micro Computer, Inc. (Nasdaq: SMCI), a leader in application-optimized, high performance server solutions, today announced the addition of the world's most affordable personal supercomputer to its SuperBlade(R) family. Supermicro's new 10-blade server system based on the SBI-7125C-T3 blade provides the industry's most cost-effective blade solution, while also being the greenest personal supercomputer and office computing solution. Based on Supermicro's Server Building Block Solutions(R) architecture, the SBI-7125C-T3 can also be optimized for 14-blade configurations which are ideal for HPC and datacenter applications. (Photo: http://www.newscom.com/cgi-bin/prnh/20081110/CLM907 )

"Our new SuperBlade(R) solution can be a very cost-effective supercomputer. Now our customers can affordably enable a personal supercomputer next to their desks with the same computing power previously only available via large server installations," said Charles Liang, CEO and president of Supermicro. "This optimized blade solution features 93%* power supply efficiency, innovative and highly efficient thermal and cooling system designs, and industry-leading system performance-per-watt (290+ GFLOPS/kW*), making it the greenest, most power-saving blade solution."

At an affordable price point, the highly efficient SuperBlade(R) minimizes operating costs to provide the best total cost of ownership (TCO) solution available. This solution will particularly enable customers with a limited budget who need high-performance computing. This supercomputer is ideal for scientists and researchers in life science, bioinformatics, physics, chemistry and similar fields of engineering and science.

22Fumrpy7imdq.Pdf —

Computing Machines. Torsten van den Berg, Elisabeth Bommes, Wolfgang K. Härdle, Alla Petukhina. Ladislaus von Bortkiewicz Chair of Statistics, Collaborative Risk Center 649 Economic Risk, Humboldt-Universität zu Berlin, Germany. Photographer: Paul Melzer. © 2016 by Torsten van den Berg, Elisabeth Bommes, Wolfgang Karl Härdle, Alla Petukhina. Computing Machines is licensed under a Creative Commons Attribution-ShareAlike 3.0 Unported License. See http://creativecommons.org/licenses/by-sa/3.0/ for more information. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. With friendly support of

Contents. Preface.

1 Mechanical Calculators. 1 Introduction. 2 Devices: 1 X x X; 2 Consul; 3 Superautomat SAL IIc; 4 Brunsviga 13 RK; 5 Melitta V/16; 6 MADAS A 37; 7 Walther DE 100.

2 Pocket Calculators. 1 Introduction. 2 Devices.

3 Personal Computers. 1 Introduction. 2 Devices: 1 PET; 2 Victor 9000; 3 Robotron A 5120; 4 5150; 5 ZX Spectrum; 6 People; 7 IIe; 8 VG 8020; 9 KC85/2; 10 CPC; 11 Robotron 1715; 12 C16; 13 ZX Spectrum Clone; 14 PS/2; 15 Macintosh Classic; 16 Amiga 500 Plus; 17 SPARCstation 10; 18 Indy; 19 Ultra 2; 20 Power Macintosh 8200/120; 21 iMac G3; 22 Power Macintosh G3; 23 Power Macintosh G4; 24 iMac G4

Nvidia Tesla the Personal Supercomputer

International Journal of Allied Practice, Research and Review. Website: www.ijaprr.com (ISSN 2350-1294). Nvidia Tesla The Personal Supercomputer. Sameer Ahmad1, Umer Amin2, Mr. Zubair M Paul3. 1Student, Department of CSE & SSM College of Engg. & Tech., India. 2Student, Department of CSE & SSM College of Engg. & Tech., India. 3Guide, Department of CSE & SSM College of Engg. & Tech., India.

Abstract: The NVIDIA® Tesla™ Personal Supercomputer is based on the new and important NVIDIA CUDA™ parallel computing architecture and powered by up to 900-960 parallel processing cores. The Tesla Personal Supercomputer is a desktop computer that is supported by NVIDIA Corporation. This Tesla Personal Computer is built by Dell, Lenovo and other companies. It has the ability to perform computations 250 times faster than a multi-CPU core PC or workstation. The CUDA™ parallel computing architecture enables developers to utilize C programming with NVIDIA GPUs to run the most complex computationally-intensive applications. CUDA is easy to learn and has become widely adopted by thousands of application developers worldwide to accelerate the most performance demanding applications. In November 2006, NVIDIA's Tesla architecture was introduced in the NVIDIA GeForce 8800 GPU. It supports parallel computing applications written in the C language, thereby enabling high performance. The Tesla graphics and computing architecture is available in two different series, GeForce 8-series GPUs and Quadro GPUs, for laptops, desktops, workstations, and servers. It also provides the processing architecture for the Tesla GPU computing platforms introduced in 2007 for high-performance computing.

Keywords: NVIDIA; GPU; CUDA; Supercomputer; Tesla Graphics.

I. INTRODUCTION. The Tesla Personal Supercomputer is a desktop computer that is backed by Nvidia and was built by Dell, Lenovo and other big companies.

Core-2018.Pdf

CORE 2018. A Publication of the Computer History Museum. iPhone 360 Feature. Reimagining Live Programming. Creating a Culture of Learning.

Cover: Detail of Steve Jobs, initial iPhone release, January 9, 2007. This page: Detail of IBM Simon, 1994. The world’s first smartphone had only screen-based keys, like the later iPhone. Discover more technologies and products that led to the iPhone on page 26.

MUSEUM UPDATES: Contributors; Chairman’s Letter; Reimagining Live Programming; Behind the Graphic Art of CHM Live; CHM Collections Exhibit Culture, Art & Design; Documenting the Stories of Our Fellows; Creating a Culture of Learning at CHM; Recent Artifact Donations; Franklin “Pitch” & Catherine H. Johnson; Lifetime Giving Society, Donors & Partners.

iPHONE 360 FEATURES: How the iPhone Took Off; Stacking the Deck: Choices that Enabled the iPhone App Store; From California to China and Beyond: iPhone in the Global Economy; A Downward Gaze.

From Big Names to Big Ideas: Reimagining Live Programming. Learn how CHM Live is expanding its live programming and offering audiences new ways to connect computer history to today’s technology-driven world.

Beyond Inclusion: Gaps, Glitches & Imaginative Failure in Computing. Computing is the result of choices made by people, whose own time, experiences, and biases

iPhone 360 Feature. On January 9, 2007, Steve Jobs announced three new products: a widescreen iPod, a revolutionary phone, and a “breakthrough internet commu-

Somersaults and Moon Landings: A Conversation with Margaret Hamilton. Excerpted from her oral history, Margaret Hamilton reflects on the Apollo 11 mission and the

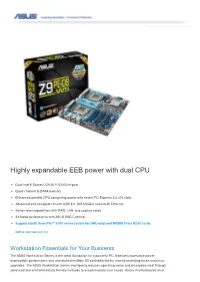

Highly Expandable EEB Power with Dual CPU

Highly expandable EEB power with dual CPU. Features: dual Intel® Socket LGA 2011/C602 chipset; quad channel 8-DIMM memory; enhanced parallel GPU computing power with seven PCI Express 3.0 x16 slots; advanced and complete I/O with USB 3.0, SATA 6Gb/s and Intel® Ethernet; server-level capabilities with RAID, LAN, and capture cards; 3X faster performance with ASUS SSD Caching; supports Intel® Xeon Phi™ 3100 series (active fan SKU only) and NVIDIA Tesla K20C cards.

Workstation Essentials for Your Business. The ASUS Workstation Series is the ideal foundation for a powerful PC. It delivers awesome power, dependable performance and unmatched multiple I/O scalability for the most demanding tasks and future upgrades. The ASUS Workstation Series intelligently reduces operating noise and dissipates heat through advanced and environmentally friendly methods to accommodate user needs. Series motherboards also bring you ultimate reliability and quality through our 24x24 initiative, which means 24-hour non-stop operation and a 24-month life cycle supply guarantee so you're not compelled to change computers often. Instead, let the ASUS Workstation Series improve the quality of your work and life.

ASUS Workstation Exclusive Features. Enhanced parallel GPU computing power with seven PCI Express 3.0 x16 slots: personal supercomputer-grade performance is achieved when working in tandem with discrete NVIDIA® CUDA technology, providing unprecedented return on investment. Users can count on up to four Tesla cards with dual Intel® Xeon® E5-2600 processors for intensive parallel computing and massive data handling capabilities, delivering nearly 4 teraflops of performance. This offers an excellent desktop replacement for cluster computing.