Lyrics-Based Analysis and Classification of Music

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Lyric Provinces in the English Renaissance

Lyric Provinces in the English Renaissance. Harold Toliver. Poets are by no means alone in being prepared to see new places in settled ways and to describe them in received images. Outer regions will be assimilated into dynasties, never mind the hostility of the natives. The ancient wilderness of the Mediterranean and certain biblical expectations of what burning bushes, gods, and shepherds shall exist everywhere encroach upon the deserts of Utah and California. Those who travel there see them and other new landscapes in terms of old myths of place and descriptive topoi; and their descriptions take on the structure, tonality, and nomenclature of the past, their language as much recollection as greeting. In Professor Toliver's view, the concern of the literary historian is with, in part, that ever renewed past as it is introduced under the new conditions that poets confront, and is, therefore, with the parallel movement of literary and social history. Literature, he reminds us, is inseparable from the rest of discourse (as the study of signs in the past decade has made clearer), but is, nevertheless, distinct from social history in that poets look less to common discourse for their models than to specific literary predecessors. Careful observers of place, they seek to bring what is distinct and valuable in it within some sort of verbal compass and relationship with the speaker. They bring with them, however, formulas and a loyalty to a cultural heritage that both complicate and confound the lyric address to place.

Canada Needs You Volume One

Canada Needs You Volume One A Study Guide Based on the Works of Mike Ford Written By OISE/UT Intern Mandy Lau Content Canada Needs You The CD and the Guide …2 Mike Ford: A Biography…2 Connections to the Ontario Ministry of Education Curriculum…3 Related Works…4 General Lesson Ideas and Resources…5 Theme One: Canada’s Fur Trade Songs: Lyrics and Description Track 2: Thanadelthur…6 Track 3: Les Voyageurs…7 Key Terms, People and Places…10 Specific Ministry Expectations…12 Activities…12 Resources…13 Theme Two: The 1837 Rebellion Songs: Lyrics and Description Track 5: La Patriote…14 Track 6: Turn Them Ooot…15 Key Terms, People and Places…18 Specific Ministry Expectations…21 Activities…21 Resources…22 Theme Three: Canadian Confederation Songs: Lyrics and Description Track 7: Sir John A (You’re OK)…23 Track 8: D’Arcy McGee…25 Key Terms, People and Places…28 Specific Ministry Expectations…30 Activities…30 Resources…31 Theme Four: Building the Wild, Wild West Songs: Lyrics and Description Track 9: Louis & Gabriel…32 Track 10: Canada Needs You…35 Track 11: Woman Works Twice As Hard…36 Key Terms, People and Places…39 Specific Ministry Expectations…42 Activities…42 Resources…43 Canada Needs You The CD and The Guide This study guide was written to accompany the CD “Canada Needs You – Volume 1” by Mike Ford. The guide is written for both teachers and students alike, containing excerpts of information and activity ideas aimed at the grade 7 and 8 level of Canadian history. The CD is divided into four themes, and within each, lyrics and information pertaining to the topic are included.

Five Challenges and Solutions in Online Music Teacher Education

FIVE CHALLENGES AND SOLUTIONS IN ONLINE MUSIC TEACHER EDUCATION Volume 5, No. 1 September 2007 David G. Hebert Boston University [email protected] “Nearly 600 graduate students?” As remarkable as it may sound, that is the projected student population for the online graduate programs in music education at Boston University School of Music by the end of 2007. With the rapid proliferation of online courses among mainstream universities in recent years, it is likely that more online music education programs will continue to emerge in the near future, which begs the question of what effects this new development will have on the profession. Can online education truly be of the same quality as a traditional face-to-face program? How is it possible to effectively manage such large programs, particularly at the doctoral level? For some experienced music educators, it may be quite difficult to set aside firmly entrenched reservations and objectively consider the new possibilities for teaching and research afforded by recent technology. Yet the future is already here, and nearly 600 music educators have seized the opportunity. Through online programs, the internet has become the latest tool for offering professional development to practicing educators who otherwise would not have access, particularly those currently engaged in full-time employment or residing in rural areas. Recognizing the new opportunities afforded by recent technological developments, Director of the Boston University School of Music, Professor Andre De Quadros and colleagues launched the nation’s first online doctoral program in music education in 2005.

Song Lyrics of the 1950s

Song Lyrics of the 1950s 1951 C’mon a my house by Rosemary Clooney Because of you by Tony Bennett Come on-a my house my house, I’m gonna give Because of you you candy Because of you, Come on-a my house, my house, I’m gonna give a There's a song in my heart. you Apple a plum and apricot-a too eh Because of you, Come on-a my house, my house a come on My romance had its start. Come on-a my house, my house a come on Come on-a my house, my house I’m gonna give a Because of you, you The sun will shine. Figs and dates and grapes and cakes eh The moon and stars will say you're Come on-a my house, my house a come on mine, Come on-a my house, my house a come on Come on-a my house, my house, I’m gonna give Forever and never to part. you candy Come on-a my house, my house, I’m gonna give I only live for your love and your kiss. you everything It's paradise to be near you like this. Because of you, (instrumental interlude) My life is now worthwhile, And I can smile, Come on-a my house my house, I’m gonna give you Christmas tree Because of you. Come on-a my house, my house, I’m gonna give you Because of you, Marriage ring and a pomegranate too ah There's a song in my heart. -

An Introduction to Music Studies Pdf, Epub, Ebook

AN INTRODUCTION TO MUSIC STUDIES PDF, EPUB, EBOOK Jim Samson,J. P. E. Harper-Scott | 310 pages | 31 Jan 2009 | CAMBRIDGE UNIVERSITY PRESS | 9780521603805 | English | Cambridge, United Kingdom An Introduction to Music Studies PDF Book To see what your friends thought of this book, please sign up. An analysis of sociomusicology, its issues; and the music and society in Hong Kong. Critical Entertainments: Music Old and New. Other Editions 6. The examination measures knowledge of facts and terminology, an understanding of concepts and forms related to music theory for example: pitch, dynamics, rhythm, melody , types of voices, instruments, and ensembles, characteristics, forms, and representative composers from the Middle Ages to the present, elements of contemporary and non-Western music, and the ability to apply this knowledge and understanding in audio excerpts from musical compositions. An Introduction to Music Studies by J. She has been described by the Harvard Gazette as "one of the world's most accomplished and admired music historians". The job market for tenure track professor positions is very competitive. You should have a passion for music and a strong interest in developing your understanding of music and ability to create it. D is the standard minimum credential for tenure track professor positions. Historical studies of music are for example concerned with a composer's life and works, the developments of styles and genres, e. Mus or a B. For other uses, see Musicology disambiguation. More Details Refresh and try again. Goodreads helps you keep track of books you want to read. These models were established not only in the field of physical anthropology , but also cultural anthropology. -

Lyrics for Songs of Our World

Lyrics for Songs of Our World Every Child Words and Music by Raffi and Michael Creber © 1995 Homeland Publishing From the album Raffi Radio Every child, every child, is a child of the universe Here to sing, here to sing, a song of beauty and grace Here to love, here to love, like a flower out in bloom Every girl and boy a blessing and a joy Every child, every child, of man and woman-born Fed with love, fed with love, in the milk that mother’s own A healthy child, healthy child, as the dance of life unfolds Every child in the family, safe and warm bridge: Dreams of children free to fly Free of hunger, and war Clean flowing water and air to share With dolphins and elephants and whales around Every child, every child, dreams of peace in this world Wants a home, wants a home, and a gentle hand to hold To provide, to provide, room to grow and to belong In the loving village of human kindness Lyrics for Songs of Our World Blue White Planet Words and music by Raffi, Don Schlitz © 1999 Homeland Publishing, SOCAN-New Hayes Music-New Don Songs, admin. By Carol Vincent and Associates, LLC, All rights reserved. From the album Country Goes Raffi Chorus: Blue white planet spinnin’ in space Our home sweet home Blue white planet spinnin’ in space Our home sweet home Home for the children you and me Home for the little ones yet to be Home for the creatures great and small Blue white planet Blue white planet (chorus) Home for the skies and the seven seas Home for the towns and the farmers’ fields Home for the trees and the air we breathe Blue white planet -

Elements of Sociology of Music in Today's Historical

AD ALTA: JOURNAL OF INTERDISCIPLINARY RESEARCH. ELEMENTS OF SOCIOLOGY OF MUSIC IN TODAY’S HISTORICAL MUSICOLOGY AND MUSIC ANALYSIS. KAROLINA KIZIŃSKA, Adam Mickiewicz University, ul. Szamarzewskiego 89A, Poznań, Poland. E-mail: [email protected] Abstract: In this article I try to show the incorporation of the elements of sociology of music by such disciplines as historical musicology and music analysis. To that end, I explain how sociology of music is understood, and how it is connected to critical theory, criticism or aesthetic autonomy. I cite some of the musicologists that wrote about doing analysis in context and broadening the research of musicology (e.g. Jim Samson, Joseph Kerman). I also present examples of the inclusion of sociology of music into historical musicology and music analysis – the approach of Richard Taruskin and Suzanne Cusick. The aim was to clarify some of the recent changes in writing about music, that seem to be closer today to cultural studies than classical musicology. ... national identity, and is not limited to ethnographic methods. Rather, sociomusicologists use a wide range of research methods and take a strong interest in observable behavior and musical interactions within the constraints of social structure. Sociomusicologists are more likely than ethnomusicologists to make use of surveys and economic data, for example, and tend to focus on musical practices in contemporary industrialized societies”. Classical musicology, and its way of emphasizing historiographic and analytical rather than sociological approaches to research, is the reason why sociomusicology was regarded as a small subdiscipline for a long time. But the increasing popularity of ethnomusicology and new musicology (as well as the emergence of interdisciplinary field of cultural studies), created a situation in which sociomusicology is not only

Toward a New Comparative Musicology

Analytical Approaches To World Music 2.2 (2013) 148-197. Toward a New Comparative Musicology. Patrick E. Savage (Department of Musicology, Tokyo University of the Arts) and Steven Brown (Department of Psychology, Neuroscience & Behaviour, McMaster University). We propose a return to the forgotten agenda of comparative musicology, one that is updated with the paradigms of modern evolutionary theory and scientific methodology. Ever since the field of comparative musicology became redefined as ethnomusicology in the mid-20th century, its original research agenda has been all but abandoned by musicologists, not least the overarching goal of cross-cultural musical comparison. We outline here five major themes that underlie the re-establishment of comparative musicology: (1) classification, (2) cultural evolution, (3) human history, (4) universals, and (5) biological evolution. Throughout the article, we clarify key ideological, methodological and terminological objections that have been levied against musical comparison. Ultimately, we argue for an inclusive, constructive, and multidisciplinary field that analyzes the world’s musical diversity, from the broadest of generalities to the most culture-specific particulars, with the aim of synthesizing the full range of theoretical perspectives and research methodologies available. Keywords: music, comparative musicology, ethnomusicology, classification, cultural evolution, human history, universals, biological evolution. This is a single-spaced version of the article. The official version with page numbers 148-197 can be viewed at http://aawmjournal.com/articles/2013b/Savage_Brown_AAWM_Vol_2_2.pdf. Comparative musicology is the academic discipline devoted to the comparative study of music. It looks at music (broadly defined) ... comparative musicology and its modern-day successor, ethnomusicology, is too complex to review here.

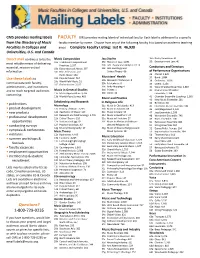

I:\My Drive\Mailing Labels\Mailing Labels

CMS provides mailing labels FACULTY CMS provides mailing labels of individual faculty. Each label is addressed to a specific from the Directory of Music faculty member by name. Choose from any of the following faculty lists based on academic teaching Faculties in Colleges and areas. Complete Faculty Listing: List B: 46,930 Universities, U.S. and Canada. Direct mail continues to be the Music Composition Jazz Studies 35h Radio/Television: 43 10a Traditional Compositional 29a History of Jazz: 1,694 35i Entertainment Law: 40 most reliable means of delivering 29b Jazz Theory and Analysis: 2, Practices: 2,397 114 Conductors and Directors essential, mission‐critical 10b Electroacoustic Music: 397 29c Jazz Sociology and information. 10c Film, Television, and Critical Theory: 66 of Performance Organizations Radio Music: 2 36 Choral: 2,528 80 Musicians' Health 10d Popular Music: 454 37 Band: 1,884 Use these labels to 30a Alexander Technique: 10e World Folk Music: 51 8 38 Orchestra: 1,036 communicate with faculty, 30b Feldenkrais: 0 10f Orchestration: 2,037 39 Opera: 1,259 administrators, and institutions 30c Body Mapping: 0 40 Vocal Chamber Ensemble: 1,084 and to reach targeted audiences Music in General Studies 30d Pilates: 1 41 Instrumental Chamber 30e Other: 10 concerning: 11a Music Appreciation: 3,765 Ensemble: 1,398 11b World Music Survey: 42 Chamber Ensemble Coaching: 1,3 463 Music and Practice 65 43 New Music Ensemble: 261 Scholarship and Research • publications in Religious Life 44 Bell Choir: 86 Musicology 31a Music in Christianity: 413 -

Copyright Matters

Copyright Matters The copyright clearance statement is included on the competition entry form. By completing and submitting the entry form, the quartet or the president/ team leader, on behalf of the chorus, is stating that to the best of their knowledge the arrangements the group is performing are legal arrangements; either they have been cleared through the copyright process, are original works, or are songs in the public domain. Generally, when you purchase music you can assume that appropriate permissions have been obtained. However, if you are not certain, it is acceptable to ask the music provider to verify that the arrangement has cleared the copyright approval process. Please be aware that lyrics, like the music itself, are copyright protected. Legal issues typically occur only when a performer makes musical or lyrical changes to a published arrangement without permission. Altering a few words to personalize the song for the performer is usually permissible; however, if the lyrical changes involve a parody, with very few exceptions a performer must obtain permission from the copyright holder to make such changes. Parody lyrics are defined by Jay Althouse in his book, Copyright: The Complete Guide for Music Educators, as follows: “Parody lyrics are lyrics which replace the original lyrics of a vocal work or are added to an instrumental work. In general, any parody lyric or the revision of a lyric that changes the integrity (character) of the work requires authorization from the copyright owner.” Copyright holders have the right to say “no” to any particular arrangement of their music, and they exercise that right frequently when it comes to parodies. -

Proceedings, the 57th Annual Meeting, 1981

PROCEEDINGS THE FIFTY-SEVENTH ANNUAL MEETING National Association of Schools of Music NUMBER 70 APRIL 1982 NATIONAL ASSOCIATION OF SCHOOLS OF MUSIC PROCEEDINGS OF THE 57th ANNUAL MEETING Dallas, Texas 1981 COPYRIGHT © 1982 ISSN 0190-6615 NATIONAL ASSOCIATION OF SCHOOLS OF MUSIC 11250 ROGER BACON DRIVE, NO. 5, RESTON, VA. 22090 All rights reserved including the right to reproduce this book or parts thereof in any form. CONTENTS Speeches Presented Music, Education, and Music Education Samuel Lipman 1 The Preparation of Professional Musicians—Articulation between Elementary/Secondary and Postsecondary Training: The Role of Competitions Robert Freeman 11 Issues in the Articulation between Elementary/Secondary and Postsecondary Training Kenneth Wendrich 19 Trends and Projections in Music Teacher Placement Charles Lutton 23 Mechanisms for Assisting Young Professionals to Organize Their Approach to the Job Market: What Techniques Should Be Imparted to Graduating Students? Brandon Mehrle 27 The Academy and the Marketplace: Cooperation or Conflict? Joseph W. Polisi 30 "Apples and Oranges": Office Applications of the Micro Computer Lowell M. Creitz 34 Microcomputers and Music Learning: The Development of the Illinois State University - Fine Arts Instruction Center, 1977-1981 David L. Shrader and David B. Williams 40 Reaganomics and Arts Legislation Donald Harris 48 Reaganomics and Art Education: Realities, Implications, and Strategies for Survival Frank Tirro 52 Creative Use of Institutional Resources: Summer Programs, Interim Programs, Preparatory Divisions, Radio and Television Programs, and Recordings Ray Robinson 56 Performance Competitions: Relationships to Professional Training and Career Entry Eileen T. Cline 63 Teaching Aesthetics of Music Through Performance Group Experiences David M. Smith 78 An Array of Course Topics for Music in General Education Robert R.

Money for Something: Music Licensing in the 21St Century

Money for Something: Music Licensing in the 21st Century. Updated February 23, 2021. Congressional Research Service, https://crsreports.congress.gov, R43984. Dana A. Scherer, Specialist in Telecommunications Policy. SUMMARY: Songwriters and recording artists are generally entitled to receive compensation for (1) reproductions, distributions, and public performances of the notes and lyrics they create (the musical works), as well as (2) reproductions, distributions, and certain digital public performances of the recorded sound of their voices combined with instruments (the sound recordings). The amount they receive, as well as their control over their music, depends on market forces, contracts between a variety of private-sector entities, and laws governing copyright and competition policy. Who pays whom, as well as who can sue whom for copyright infringement, depends in part on the mode of listening to music. Congress enacted several major updates to copyright laws in 2018 in the Orrin G. Hatch-Bob Goodlatte Music Modernization Act (MMA; P.L. 115-264). The MMA modified copyright laws related to the process of granting and receiving statutory licenses for the reproduction and distribution of musical works (known as “mechanical licenses”). The law set forth terms for the creation of a nonprofit “mechanical licensing collective” through which owners of copyrights in musical works could collect royalties from online music services. The law also changed the standards used by a group of federal administrative law judges, the Copyright Royalty Board, to set royalty rates for some statutory copyright licenses, as well as the standards used by a federal court to set rates for licenses to publicly perform musical works offered by two organizations representing publishers and composers, ASCAP and BMI.