CHR Grammars: a grammar formalism based upon CHR. To appear in Theory and Practice of Logic Programming (TPLP).

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Efficient Implementation of the Head-Corner Parser

Gertjan van Noord, Rijksuniversiteit Groningen

This paper describes an efficient and robust implementation of a bidirectional, head-driven parser for constraint-based grammars. This parser is developed for the OVIS system: a Dutch spoken dialogue system in which information about public transport can be obtained by telephone. After a review of the motivation for head-driven parsing strategies, and head-corner parsing in particular, a nondeterministic version of the head-corner parser is presented. A memoization technique is applied to obtain a fast parser. A goal-weakening technique is introduced, which greatly improves average case efficiency, both in terms of speed and space requirements. I argue in favor of such a memoization strategy with goal-weakening in comparison with ordinary chart parsers because such a strategy can be applied selectively and therefore enormously reduces the space requirements of the parser, while no practical loss in time-efficiency is observed. On the contrary, experiments are described in which head-corner and left-corner parsers implemented with selective memoization and goal weakening outperform "standard" chart parsers. The experiments include the grammar of the OVIS system and the Alvey NL Tools grammar. Head-corner parsing is a mix of bottom-up and top-down processing. Certain approaches to robust parsing require purely bottom-up processing. Therefore, it seems that head-corner parsing is unsuitable for such robust parsing techniques. However, it is shown how underspecification (which arises very naturally in a logic programming environment) can be used in the head-corner parser to allow such robust parsing techniques.

Modular Logic Grammars

Michael C. McCord, IBM Thomas J. Watson Research Center, P. O. Box 218, Yorktown Heights, NY 10598

ABSTRACT

This report describes a logic grammar formalism, Modular Logic Grammars, exhibiting a high degree of modularity between syntax and semantics. There is a syntax rule compiler (compiling into Prolog) which takes care of the building of analysis structures and the interface to a clearly separated semantic interpretation component dealing with scoping and the construction of logical forms. The whole system can work in either a one-pass mode or a two-pass mode. In the one-pass mode, logical forms are built directly during parsing through interleaved calls to semantics, added automatically by the rule compiler. In the two-pass mode, syntactic analysis trees are built automatically in the first pass, and then given to the (one-pass) semantic component.

[...] scoping of quantifiers (and more generally focalizers, McCord, 1981) when the building of logical forms is too closely bonded to syntax. Another disadvantage is just a general result of lack of modularity: it can be harder to develop and understand syntax rules when too much is going on in them.

The logic grammars described in McCord (1982, 1981) were three-pass systems, where one of the main points of the modularity was a good treatment of scoping. The first pass was the syntactic component, written as a definite clause grammar, where syntactic structures were explicitly built up in the arguments of the non-terminals. Word sense selection and slot-filling were done in this first pass, so that the output analysis trees were actu-

A Definite Clause Version of Categorial Grammar

Remo Pareschi, Department of Computer and Information Science, University of Pennsylvania, 200 S. 33rd St., Philadelphia, PA 19104, and Department of Artificial Intelligence and Centre for Cognitive Science, University of Edinburgh, 2 Buccleuch Place, Edinburgh EH8 9LW, Scotland. remo@linc.cis.upenn.edu

ABSTRACT

We introduce a first-order version of Categorial Grammar, based on the idea of encoding syntactic types as definite clauses. Thus, we drop all explicit requirements of adjacency between combinable constituents, and we capture word-order constraints simply by allowing subformulae of complex types to share variables ranging over string positions. We are in this way able to account for constructions involving discontinuous constituents. Such constructions are difficult to handle in the more traditional version of Categorial Grammar, which is based on propositional types and on the requirement of strict string adjacency between combinable constituents. We show then how, for this formalism, parsing can be efficiently implemented as theorem proving. [...] problem by adopting an intuitionistic treatment of implication, which has already been proposed elsewhere as an extension of Prolog for implementing hypothetical reasoning and modular logic programming.

1 Introduction

Classical Categorial Grammar (CG) [1] is an approach to natural language syntax where all linguistic information is encoded in the lexicon, via the assignment of syntactic types to lexical items. Such syntactic types can be viewed as expressions of an implicational calculus of propositions, where atomic propositions correspond to atomic types, and implicational propositions account for complex types.

Chapter 2 INTRODUCTION to LABORATORY ACTIVITIES

The laboratory activities introduced in this chapter and used elsewhere in the text are not required to understand definitional techniques and formal specifications, but we feel that laboratory practice will greatly enhance the learning experience. The laboratories provide a way not only to write language specifications but to test and debug them. Submitting specifications as a prototyping system will uncover oversights and subtleties that are not apparent to a casual reader. This laboratory approach also suggests that formal definitions of programming languages can be useful. The laboratory activities are carried out using Prolog. Readers not familiar with Prolog should consult Appendix A or one of the references on Prolog (see the further readings at the end of this chapter) before proceeding.

In this chapter we develop a "front end" for a programming language processing system. Later we use this front end for a system that checks the context-sensitive part of the Wren grammar and for prototype interpreters based on semantic specifications that provide implementations of programming languages. The front end consists of two parts:

1. A scanner that reads a text file containing a Wren program and builds a Prolog list of tokens representing the meaningful atomic components of the program.

2. A parser that matches the tokens to the BNF definition of Wren, producing an abstract syntax tree corresponding to the Wren program.

Our intention here is not to construct production level software but to formulate an understandable, workable, and correct language system.

Addison Wesley

Formal Syntax and Semantics of Programming Languages: A Laboratory Based Approach. Kenneth Slonneger, University of Iowa; Barry L. Kurtz, Louisiana Tech University.

Addison-Wesley Publishing Company. Reading, Massachusetts • Menlo Park, California • New York • Don Mills, Ontario • Wokingham, England • Amsterdam • Bonn • Sydney • Singapore • Tokyo • Madrid • San Juan • Milan • Paris

Senior Acquisitions Editor: Tom Stone. Assistant Editor: Kathleen Billus. Production Coordinator: Marybeth Mooney. Cover Designer: Diana C. Coe. Manufacturing Coordinator: Evelyn Beaton.

The procedures and applications presented in this book have been included for their instructional value. They have been tested with care but are not guaranteed for any particular purpose. The publisher does not offer any warranties or representations, nor does it accept any liabilities with respect to the programs or applications.

Library of Congress Cataloging-in-Publication Data: Slonneger, Kenneth. Formal syntax and semantics of programming languages: a laboratory based approach / Kenneth Slonneger, Barry L. Kurtz. p. cm. Includes bibliographical references and index. ISBN 0-201-65697-3. 1. Programming languages (Electronic computers)--Syntax. 2. Programming languages (Electronic computers)--Semantics. I. Kurtz, Barry L. II. Title. QA76.7.S59 1995 005.13'1--dc20 94-4203 CIP

Reproduced by Addison-Wesley from camera-ready copy supplied by the authors. Copyright © 1995 by Addison-Wesley Publishing Company, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the publisher. Printed in the United States of America. ISBN 0-201-65697-3 12345678910-MA-979695

Dedications: To my father, Robert Barry L.

Recovery, Convergence and Documentation of Languages

Recovery, Convergence and Documentation of Languages, by Vadim Zaytsev. September 14, 2010.

VRIJE UNIVERSITEIT. Recovery, Convergence and Documentation of Languages. ACADEMISCH PROEFSCHRIFT ter verkrijging van de graad Doctor aan de Vrije Universiteit Amsterdam, op gezag van de rector magnificus prof.dr. L.M. Bouter, in het openbaar te verdedigen ten overstaan van de promotiecommissie van de faculteit der Exacte Wetenschappen op woensdag 27 oktober 2010 om 15.45 uur in de aula van de universiteit, De Boelelaan 1105, door Vadim Valerievich Zaytsev, geboren te Rostov aan de Don, Rusland. Promotoren: prof.dr. R. Lämmel, prof.dr. C. Verhoef.

Dit onderzoek werd ondersteund door de Nederlandse Organisatie voor Wetenschappelijk Onderzoek via: This research has been sponsored by the Dutch Organisation of Scientific Research via: NWO 612.063.304 LPPR: Language-Parametric Program Restructuring.

Acknowledgements

Working on a PhD is supposed to be an endeavour completed in seclusion, but in practice one cannot survive without the help and support from others, fruitful scientific discussions, collaborative development of tools and papers and valuable pieces of advice. My work was supervised by Prof. Dr. Ralf Lämmel and Prof. Dr. Chris Verhoef, who often believed in me more than I did and were always open to questions and ready to give expert advice. They have made my development possible. LPPR colleagues (Jan Heering, Prof. Dr. Paul Klint, Prof. Dr. Mark van den Brand) have been a rare yet useful source of new research ideas. All thesis reading committee members have dedicated a lot of attention to my work and delivered exceptionally useful feedback on the late stage of the research: Prof.

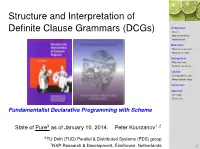

Fundamentalist Declarative Programming with Scheme

Fundamentalist Declarative Programming with Scheme: Structure and Interpretation of Definite Clause Grammars (DCGs). State of Pure³ as of January 10, 2014.

Peter Kourzanov (1, 2). 1: NXP Research & Development, Eindhoven, Netherlands. 2: TU Delft (TUD), Parallel & Distributed Systems (PDS) group.

Outline: Introduction (Vision; Why we want this?; Related work). Motivation (What we do not want; What we do want). Background (Why, why, why ...). Problem statement. Solution (Solving left-recursion; Meta-syntactic sugar). Conclusion. Appendix (Acronyms; References).

Abstract

Pure³ ≡ Declarative approach to Declarative parsing with Declarative tools.

DCGs is a technique that allows one to embed a parser for a context-sensitive language into logic programming, via Horn clauses. A carefully designed grammar/parser can be run forwards, backwards and sideways. In this talk we shall de-construct DCGs using syntax-rules and MINIKANREN, a library using the Revised⁵ Report on the Algorithmic Language Scheme (R5RS) and implementing a compact logic-programming system, keeping reversibility in mind. Parsing Expression Grammars (PEGs) is a related technique that like DCGs also suffers from the inability to express left-recursive grammars. We make a link between DCGs and PEGs by borrowing the mechanism from DCGs, adding meta-syntactic sugar from PEGs and propose a way to run possibly left-recursive parsers using either formalism in a pure, on-line fashion. Finally, we re-interpret DCGs as executable, bidirectional Domain-Specific Language (DSL) specifications and transformations, perhaps better suited for DSL design than R5RS syntax-rules.

Attribute Grammars and Their Applications

Krishnaprasad Thirunarayan, Metadata and Languages Laboratory, Department of Computer Science and Engineering, Wright State University, Dayton, OH-45435

INTRODUCTION

Attribute grammars are a framework for defining semantics of programming languages in a syntax-directed fashion. In this paper, we define attribute grammars, and then illustrate their use for language definition, compiler generation, definite clause grammars, design and specification of algorithms, etc. Our goal is to emphasize their role as a tool for design, formal specification and implementation of practical systems, so our presentation is example rich.

BACKGROUND

The lexical structure and syntax of a language are normally defined using regular expressions and context-free grammars respectively (Aho et al., 2007, Chapters 3-4). Knuth (1968) introduced attribute grammars to specify static and dynamic semantics of a programming language in a syntax-directed way. Let G = (N, T, P, S) be a context-free grammar for a language L (Aho et al., 2007). N is the set of non-terminals. T is the set of terminals. P is the set of productions. Each production is of the form A ::= α, where A ∈ N and α ∈ (N ∪ T)*. S ∈ N is the start symbol. An attribute grammar AG is a triple (G, A, AR), where G is a context-free grammar for the language, A associates each grammar symbol X ∈ N ∪ T with a set of attributes, and AR associates each production R ∈ P with a set of attribute computation rules (Paakki, 1995). A(X), where X ∈ (N ∪ T), can be further partitioned into two sets: synthesized attributes S(X) and inherited attributes I(X).
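The definition of synthesized attributes above can be made concrete with a small sketch. The binary-numeral grammar, the class names `Bit` and `Bits`, and the attribute name `val` are illustrative assumptions, not taken from the paper; the sketch shows only synthesized attributes (values computed from a node's children) and leaves inherited attributes out.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical grammar, chosen for illustration only:
#   Bits ::= Bits Bit | Bit
#   Bit  ::= "0" | "1"


@dataclass
class Bit:
    char: str  # the terminal "0" or "1"


@dataclass
class Bits:
    prefix: Optional["Bits"]  # None for the production Bits ::= Bit
    last: Bit


def val(node) -> int:
    """Synthesized attribute `val`: computed bottom-up from the children."""
    if isinstance(node, Bit):
        return int(node.char)                      # Bit.val = 0 or 1
    if node.prefix is None:
        return val(node.last)                      # Bits.val = Bit.val
    return 2 * val(node.prefix) + val(node.last)   # Bits.val = 2 * Bits1.val + Bit.val


def parse(text: str) -> Bits:
    """Build the left-recursive parse tree for a non-empty bit string."""
    if not text or any(c not in "01" for c in text):
        raise ValueError("expected a non-empty string of 0s and 1s")
    tree = Bits(None, Bit(text[0]))
    for c in text[1:]:
        tree = Bits(tree, Bit(c))
    return tree


print(val(parse("1101")))  # 13
```

Each branch of `val` corresponds to one attribute computation rule attached to one production, which is the role the set AR plays in the triple (G, A, AR).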

Recovery, Convergence and Documentation of Languages Zaytsev, V.V

VU Research Portal. Recovery, Convergence and Documentation of Languages. Zaytsev, V.V. 2010.

Document version: Publisher's PDF, also known as Version of record. Link to publication in VU Research Portal.

Citation for published version (APA): Zaytsev, V. V. (2010). Recovery, Convergence and Documentation of Languages.

General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain. • You may freely distribute the URL identifying the publication in the public portal.

Take down policy: If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. E-mail address: [email protected]

Download date: 25. Sep. 2021

VRIJE UNIVERSITEIT. Recovery, Convergence and Documentation of Languages. ACADEMISCH PROEFSCHRIFT ter verkrijging van de graad Doctor aan de Vrije Universiteit Amsterdam, op gezag van de rector magnificus prof.dr. L.M. Bouter, in het openbaar te verdedigen ten overstaan van de promotiecommissie van de faculteit der Exacte Wetenschappen op woensdag 27 oktober 2010 om 15.45 uur in de aula van de universiteit, De Boelelaan 1105, door Vadim Valerievich Zaytsev, geboren te Rostov aan de Don, Rusland. Promotoren: prof.dr.

Declarative Tabling in Prolog Using Multi-Prompt Delimited Control

More declarative tabling in Prolog using multi-prompt delimited control. Samer Abdallah ([email protected]), Jukedeck Ltd. August 28, 2017. arXiv:1708.07081v2 [cs.PL] 24 Aug 2017

Abstract

Several Prolog implementations include a facility for tabling, an alternative resolution strategy which uses memoisation to avoid redundant duplication of computations. Until relatively recently, tabling has required either low-level support in the underlying Prolog engine, or extensive program transformation (de Guzman et al., 2008). An alternative approach is to augment Prolog with low level support for continuation capturing control operators, particularly delimited continuations, which have been investigated in the field of functional programming and found to be capable of supporting a wide variety of computational effects within an otherwise declarative language. This technical report describes an implementation of tabling in SWI Prolog based on delimited control operators for Prolog recently introduced by Schrijvers et al. (2013). In comparison with a previous implementation of tabling for SWI Prolog using delimited control (Desouter et al., 2015), this approach, based on the functional memoising parser combinators of Johnson (1995), stays closer to the declarative core of Prolog, requires less code, and is able to deliver solutions from systems of tabled predicates incrementally (as opposed to finding all solutions before delivering any to the rest of the program). A collection of benchmarks shows that a small number of carefully targeted optimisations yields performance within a factor of about 2 of the optimised version of Desouter et al.'s system currently included in SWI Prolog.

1 Introduction and background

Tabling, or memoisation (Michie, 1968) is a well known technique for speeding up computations by saving and reusing the results of earlier subcomputations instead of repeating them.
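The basic idea of saving and reusing results of earlier subcomputations can be sketched in a few lines. This is a plain memo table in Python, not the report's delimited-control implementation, and it does not cover what makes Prolog tabling harder (multiple solutions and recursive calls to a goal that is still being evaluated). The helper `make_fib` and its call counter are hypothetical names introduced for illustration.

```python
def make_fib(use_table: bool):
    """Return a Fibonacci function plus a function reporting how many calls it made.

    With use_table=True, results are stored in a dictionary and reused,
    which is the memoisation idea in its simplest form.
    """
    table = {}   # maps argument -> previously computed result
    calls = 0    # counts every invocation, including table hits

    def fib(n: int) -> int:
        nonlocal calls
        calls += 1
        if use_table and n in table:
            return table[n]              # reuse an earlier subcomputation
        result = n if n < 2 else fib(n - 1) + fib(n - 2)
        if use_table:
            table[n] = result            # save the result for later calls
        return result

    def call_count() -> int:
        return calls

    return fib, call_count


plain_fib, plain_calls = make_fib(use_table=False)
tabled_fib, tabled_calls = make_fib(use_table=True)

print(plain_fib(20), plain_calls())    # same answer, many calls
print(tabled_fib(20), tabled_calls())  # same answer, far fewer calls
```

Both versions return the same value; only the number of calls differs, which is the saving that tabling aims for.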

DEFINITE CLAUSE TRANSLATION GRAMMARS by Harvey Abramson Technical Report 84-3 April 1984

Definite Clause Translation Grammars. Harvey Abramson, Department of Computer Science, University of British Columbia, Vancouver, B.C., Canada. Technical Report 84-3, April 1984.

ABSTRACT

In this paper we introduce Definite Clause Translation Grammars, a new class of logic grammars which generalizes Definite Clause Grammars and which may be thought of as a logical implementation of Attribute Grammars. Definite Clause Translation Grammars permit the specification of the syntax and semantics of a language: the syntax is specified as in Definite Clause Grammars; but the semantics is specified by one or more semantic rules in the form of Horn clauses attached to each node of the parse tree (automatically created during syntactic analysis), and which control traversal(s) of the parse tree and computation of attributes of each node. The semantic rules attached to a node constitute, therefore, a local data base for that node. The separation of syntactic and semantic rules is intended to promote modularity, simplicity and clarity of definition, and ease of modification as compared to Definite Clause Grammars, Metamorphosis Grammars, and Restriction Grammars.

1. Introduction

A grammar is a finite way of specifying a language which may consist of an infinite number of "sentences". A grammar is a logic grammar if its rules can be represented as clauses of first order predicate logic, and particularly, as Horn clauses. Such logic grammars can conveniently be implemented by the logic programming language Prolog: grammar rules are translated into Prolog rules which can then be executed for either recognition of sentences of the language specified, or (with some care) for generating sentences of the language specified.

Definite Clause Grammars with Parse Trees: Extension for Prolog

Definite Clause Grammars with Parse Trees: Extension for Prolog Falco Nogatz University of Würzburg, Department of Computer Science, Am Hubland, 97074 Würzburg, Germany [email protected] Dietmar Seipel University of Würzburg, Department of Computer Science, Am Hubland, 97074 Würzburg, Germany [email protected] Salvador Abreu LISP, Department of Computer Science, University of Évora, Portugal [email protected] Abstract Definite Clause Grammars (DCGs) are a convenient way to specify possibly non-context-free grammars for natural and formal languages. They can be used to progressively build a parse tree as grammar rules are applied by providing an extra argument in the DCG rule’s head. In the simplest way, this is a structure that contains the name of the used nonterminal. This extension of a DCG has been proposed for natural language processing in the past and can be done automatically in Prolog using term expansion. We extend this approach by a meta-nonterminal to specify optional and sequences of nonterminals, as these structures are common in grammars for formal, domain-specific languages. We specify a term expansion that represents these sequences as lists while preserving the grammar’s ability to be used both for parsing and serialising, i.e. to create a parse tree by a given source code and vice-versa. We show that this mechanism can be used to lift grammars specified in extended Backus–Naur form (EBNF) to generate parse trees. As a case study, we present a parser for the Prolog programming language itself based only on the grammars given in the ISO Prolog standard which produces corresponding parse trees.
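The idea of building a parse tree whose nodes carry the name of the nonterminal used can be sketched outside Prolog as follows. This is a simplified Python analogue, not the authors' term expansion: it has no backtracking, it only parses (it cannot serialise a tree back to text), and the toy grammar and the helpers `terminal` and `seq` are assumptions made for illustration. Each nonterminal takes the remaining tokens and returns a tree together with the tokens left over, loosely mirroring the pair of list arguments a DCG rule is translated into.

```python
def terminal(name, words):
    """Nonterminal that accepts one token from `words` and labels it with `name`."""
    def parse(tokens):
        if tokens and tokens[0] in words:
            return (name, tokens[0]), tokens[1:]
        return None  # no match
    return parse


def seq(name, *parts):
    """Nonterminal that applies `parts` in order and wraps their trees under `name`."""
    def parse(tokens):
        children = []
        rest = tokens
        for part in parts:
            result = part(rest)
            if result is None:
                return None
            child, rest = result
            children.append(child)
        return (name, *children), rest
    return parse


# Hypothetical toy grammar:
#   sentence    --> noun_phrase, verb_phrase.
#   noun_phrase --> det, noun.
#   verb_phrase --> verb, noun_phrase.
det = terminal("det", {"the", "a"})
noun = terminal("noun", {"parser", "grammar"})
verb = terminal("verb", {"reads", "builds"})
noun_phrase = seq("noun_phrase", det, noun)
verb_phrase = seq("verb_phrase", verb, noun_phrase)
sentence = seq("sentence", noun_phrase, verb_phrase)


def parse_sentence(text):
    """Return the parse tree, or None if the text is not a complete sentence."""
    result = sentence(text.split())
    if result is None or result[1]:   # failure, or unconsumed tokens remain
        return None
    return result[0]


print(parse_sentence("the parser reads a grammar"))
```

The tree is assembled as a side effect of applying the rules, so the grammar definitions themselves stay free of tree-building code, which is the convenience the abstract attributes to adding the extra argument automatically.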