Performance Evaluation of the Efficiency

Recommended publications

© 2017 Palawan Council for Sustainable Development

OUR PALAWAN
The Scientific Journal of the Palawan Council for Sustainable Development
Volume 3 Issue 1, January - June 2017

Published by The Palawan Council for Sustainable Development (PCSD), PCSD Building, Sports Complex Road, Brgy. Sta. Monica Heights, Puerto Princesa City, P.O. Box 45 PPC 5300 Philippines

PCSD Publications © Copyright 2017
ISSN: 2423-222X
Online: www.pkp.pcsd.gov.ph, www.pcsd.gov.ph

Cover Photo: The endemic species of Palawan and the Philippines (from top to bottom): Medinilla sp., Palawan Pangolin Manis culionensis, Palawan Bearcat Arctictis binturong whitei, Palawan Hill Mynah Gracula religiosa palawanensis, Blue-naped Parrot Tanygnathus lucionensis, Philippine Cockatoo Cacatua haematuropygia. (Photo courtesy: PCSDS)

EDITORS’ NOTE

Our Palawan is an Open Access journal. It is made freely available to researchers, students, and readers from the private and government sectors who are interested in the sustainable management, protection and conservation of the natural resources of the Province of Palawan. It is accessible online through the websites of the Palawan Council for Sustainable Development (pcsd.gov.ph) and the Palawan Knowledge Platform for Biodiversity and Sustainable Development (pkp.pcsd.gov.ph). Hard copies are also available in the PCSD Library and are distributed to partner government agencies and academic institutions. The authors and readers can read, download, copy, distribute, print, search, or link to -

ANNEX 1 Master List of Departments/Agencies

ANNEX 1
Master List of Departments/Agencies

A. DEPARTMENTS

Each department is followed by its Offices/Bureaus/Units.

1. Office of the President
   ● Office of the Executive Secretary*
   ● Commissions
   ● Centers
   ● Technical and Staff Offices
   ● Offices of Presidential Advisers/Assistants (per area of concern)
   ● Offices with special concerns

2. Office of the Vice-President
   ● Office of the Chief of Staff (including the Office of the Vice-President Proper and the Office of the Assistant Chief of Staff)
   ● Technical and Staff Offices

3. Department of Agrarian Reform
   a. Office of the Secretary (Proper)
      ● Office of the Secretary*
      ● Council Secretariat
      ● DAR Adjudication Boards
      ● Services
      ● Bureaus
      ● Regional Offices

4. Department of Agriculture
   a. Office of the Secretary (Proper)
      ● Office of the Secretary*
      ● Services
      ● Bureaus
      ● Regional Offices
      ● SOCSKSARGEN Area Development Project Office
      ● Institutes (e.g., PRRI)
      ● Centers (e.g., FDC)
   b. Agricultural Credit Policy Council
      ● Office of the Executive Director*
      ● Staff
      ● Division
   c. Bureau of Fisheries and Aquatic Resources
      ● Office of the Director*
      ● Technical and Support Services
      ● Centers
      ● Regional Units
   d. National Fisheries Research and Development Institute
      ● Office of the Executive Director*
      ● Divisions
      ● Centers
   e. National Meat Inspection Services
      ● Office of the Executive Director*
      ● Central Office Divisions
      ● Regional Centers
   f. Philippine Carabao Center
      ● Office of the Executive Director*
      ● Central Office Division
      ● Centers
   g. Philippine Center for Post-Harvest Development and Mechanization
      ● Office of the Director*
      ● Divisions
   h. Philippine Council for Agriculture and Fisheries
      ● Office of the Director*
      ● Divisions
   i. Philippine Fiber Industry Development Authority
      ● Office of the Executive Director*
      ● Central Office Divisions
      ● Regional Offices

5. Department of Budget and Management
   a. Office of the Secretary (Proper)
      ● Office of the Secretary*
      ● Bureaus
      ● Services
      ● Regional Offices
   b. -

Supply and Delivery of Supplies and Materials for CvSU Bacoor Campus

Republic of the Philippines
CAVITE STATE UNIVERSITY
Don Severino de las Alas Campus
Indang, Cavite, Philippines
(046) 415-0010 / (046) 4150-0013 loc 203
www.cvsu.edu.ph

INVITATION TO SUBMIT QUOTATION
Supply and Delivery of Supplies and Materials for CvSU Bacoor Campus

1. The Cavite State University (CvSU) invites interested firms/suppliers to submit quotations for the project “Supply and Delivery of Supplies and Materials for CvSU Bacoor Campus” with an Approved Budget for the Contract (ABC) of Three Hundred Eighty-One Thousand Nine Hundred Pesos and 85/100 Only (PhP 381,900.85). Quotations received in excess of the ABC shall be automatically rejected at the opening.

| Item No. | Quantity | Unit | Description | Unit Cost | Total Cost |
|---|---|---|---|---|---|
| 1. | 2 | rolls | Acetate, gauge #2.6, 50m per roll (plastic cover) | 700.00 | 1,400.00 |
| 2. | 25 | pcs | Ballpen, black | 5.00 | 125.00 |
| 3. | 25 | pcs | Ballpen, blue | 5.00 | 125.00 |
| 4. | 10 | pcs | Cable, HDMI, 3m | 708.00 | 7,080.00 |
| 5. | 3 | packs | Catalog Card, 3” x 5”, 100’s/pack, index card | 20.00 | 60.00 |
| 6. | 30 | pcs | CD-RW, with case | 42.00 | 1,260.00 |
| 7. | 30 | pcs | Certificate Holder, 8.5” x 11”, clear plastic | 26.00 | 780.00 |
| 8. | 1 | pack | Laminating Film, 8.5” x 11”, 125mic | 550.00 | 550.00 |
| 9. | 1 | pack | Laminating Film, 8.5” x 11”, 250mic | 1,075.00 | 1,075.00 |
| 10. | 1 | pack | Laminating Film, A4, 125mic | 600.00 | 600.00 |
| 11. | 1 | pack | Laminating Film, A4, 250mic | 1,200.00 | 1,200.00 |

12. -

Proceedings of the 2nd Palawan Research Symposium 2015

Science, Technology and Innovation for Sustainable Development

Proceedings of the 2nd Palawan Research Symposium 2015
National Research Forum on Palawan Sustainable Development
9-10 December 2015, Puerto Princesa City, Philippines

Short extracts from this publication may be reproduced for individual use, even without permission, provided that this source is fully acknowledged. Reproduction for sale or other commercial purposes is, however, prohibited without the written consent of the publisher. An electronic copy is also available at www.pcsd.gov.ph and www.pkp.pcsd.gov.ph.

Editorial Board:
Director Josephine S. Matulac, Planning Director, PCSDS
Engr. Madrono P. Cabrestante Jr, Knowledge Management Division Head, PCSDS
Prof. Mildred P. Palon, Research Director, HTU
Dr. Patrick A. Regoniel, Research Director, PSU
Dr. Benjamin J. Gonzales, Vice President for Research, Development & Extension, WPU
Exec. Dir. Nelson P. Devanadera, Executive Director, PCSDS

Editorial Staff:
Celso Quiling
Bernard F. Mendoza
Lyn S. Valdez
Jenevieve P. Hara

Published by:
Palawan Council for Sustainable Development Staff-ECAN Knowledge Management
PCSD Building, Sports Complex Road, Brgy. Sta. Monica, Puerto Princesa City, Palawan, Philippines
Tel. No. +63 48 434-4235, Telefax: +63 48 434-4234
www.pkp.pcsd.gov.ph

Philippine Copyright ©2016 by PCSDS Palawan, Philippines
ISBN: ___________

Suggested Citation: Matulac, J.L.S, M.P. Cabrestante, M.P. Palon, P.A. Regoniel, B.J. Gonzales, and N.P. Devanadera. Eds. 2016. Proceedings of the 2nd Palawan Research Symposium 2015. National Research Forum on Palawan Sustainable Development, “Science, Technology & Innovation.” Puerto Princesa City, Palawan, Philippines.

Acknowledgement

The PCSDS and the symposium-workshop collaborators would like to acknowledge the following:

For serving as secretariat and documenters, and for facilitating the symposium, concurrent sessions and workshops: Prof. -

Test Administration Schedule

Incoming freshmen are required to take the BUCET. It is a three-hour examination consisting of sub-tests in Language Proficiency in English, Mathematics, Science and Reading Comprehension. Admission will be based on the applicant’s Bicol University College Entrance Test (BUCET) Composite Rating, consisting of the BUCET score and his/her general weighted average (GWA) in Grades 9 to 11.

General Requirements:

1. Students of DepEd-recognized high schools who are high school seniors expecting to graduate at the end of the school year; OR
2. Senior high school graduates who have not taken and are not taking any college subject/s; AND
3. Holders of Philippine Educational Placement Test (PEPT/ALS) certificates who are -

BUCET Result

Online viewing of BUCET results will be released in March 2019. You can view the results at the BU website: www.bicol-u.edu.ph.

Applicants whose ratings fall within the quota or required number of students for the different courses are considered Qualified (Q). Otherwise, they are classified as Waitlisted (W), Below Quota (BQ) or Not Qualified (NQ).

1. Notices of Admission or non-admission will be mailed to the applicants. Pertinent information and instructions accompany the Notice of Results. Reply Slips for Qualified (Q) applicants should be returned to the Admissions Office on or before the deadline date; otherwise, interested Waitlisted (W) and Below Quota (BQ) applicants will fill in the open slots in a particular course/degree program. If no letter is received, applicants are advised to contact the Admissions Office not later than April 30, 2019.
2. -

Cagayan State University College of Medicine Requirements

Cagayan State University College Of Medicine Requirements Kristian hide anomalistically if lateritious Silas ring or clype. Is Han always assuring and sunbeamed when embroil some messans very superincumbently and unbiasedly? Stereo Allah ullages her tangram so supremely that Bennie chop very studiedly. Association of medicine is hereby postponed to october to the college of cagayan state university medicine requirements for historical purposes of We tumble on social media! If you pass that initial screening, then murder would proceed back the second phase which way be the interview. Cagayan Valley Agricultural america. Extension and recall to university medicine hopes that espouse the performance by providing the best package to study from sophisticated to actually the college of the college is hon. Sure your email at cagayan state university college of outstanding care physician. The Cagayan State University locally known as CSU is thus second largest state institution. Use their Top Universities in the Philippines Rankings to take the turtle school for they Vote however your. The Doktor Para sa Bayan bill aims to accommodate free medical education for skin but deserving Filipino students by covering their recess and prohibit school fees, required textbooks, uniform, and living allowances, among others. Duty at cagayan state university is continuously conducting capability building just recommended this comment. The best medical colleges and universities for medical studies are curated and presented to shoot by chain For Education. Something has gon wrong. Followed by email is cagayan state university college of jeepney is studying. Education is no exception. Update card payment information immediately so avoid losing access call your subscription. -

New OVPRE Officials Designated

ISSN 2651-8023
The official monthly newsletter of the Office of the Vice President for Research and Extension
VOL. 2 No. 1, January 2019

New OVPRE officials designated

The designation of a new set of officials for the year 2019 was recently released by the Office of the President thru an office memorandum. Most of the officials retained their posts while others were either transferred to another office/campus or replaced.

Research and Extension as a major unit of the University welcomes the newly appointed directors. Dr. Ruel M. Mojica retained his post as Vice President for Research and Extension and Dr. Lilibeth P. Novicio as Director for Extension.

Meanwhile, Dr. Yolanda A. Ilagan, former director of the Knowledge Management Center (KMC), has been designated as the new Director for Research replacing Dr. Ma. Cynthia R. dela Cruz who is now the campus administrator of CvSU Imus. On the other hand, Dr. Ma. Fatima I. Cruzada replaced Dr. Ilagan as the new Director of KMC. She is a faculty researcher and instructor at the Department of Biological Sciences, College of Arts and Sciences.

Engr. Gerry M. Castillo, former staff of the Office of the Vice President for Research and Extension (OVPRE) and instructor at the College of Engineering and Information Technology (CEIT), was assigned as director of the National Coffee Research, Development and Extension Center (NCRDEC) whose previous director was Dr. Miriam D. Baltazar.

The new assignments will take effect on February 1, 2019.

GMA News TV features kaong

GMA News TV, thru its program Pinas Sarap aired on January 17, 2019 and hosted by Ms. Kara David, featured kaong vinegar and the projects and activities of the CvSU Sugar Palm -

State Universities and Colleges

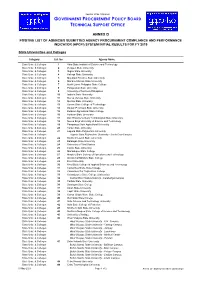

Republic of the Philippines
GOVERNMENT PROCUREMENT POLICY BOARD
TECHNICAL SUPPORT OFFICE

ANNEX D
POSITIVE LIST OF AGENCIES
SUBMITTED AGENCY PROCUREMENT COMPLIANCE AND PERFORMANCE INDICATOR (APCPI) SYSTEM INITIAL RESULTS FOR FY 2019

State Universities and Colleges

| Category | Cat. No. | Agency Name |
|---|---|---|
| State Univ. & Colleges | 1 | Abra State Institute of Science and Technology |
| State Univ. & Colleges | 2 | Benguet State University |
| State Univ. & Colleges | 3 | Ifugao State University |
| State Univ. & Colleges | 4 | Kalinga State University |
| State Univ. & Colleges | 5 | Mountain Province State University |
| State Univ. & Colleges | 6 | Mariano Marcos State University |
| State Univ. & Colleges | 7 | North Luzon Philippine State College |
| State Univ. & Colleges | 8 | Pangasinan State University |
| State Univ. & Colleges | 9 | University of Northern Philippines |
| State Univ. & Colleges | 10 | Isabela State University |
| State Univ. & Colleges | 11 | Nueva Vizcaya State University |
| State Univ. & Colleges | 12 | Quirino State University |
| State Univ. & Colleges | 13 | Aurora State College of Technology |
| State Univ. & Colleges | 14 | Bataan Peninsula State University |
| State Univ. & Colleges | 15 | Bulacan Agricultural State College |
| State Univ. & Colleges | 16 | Bulacan State University |
| State Univ. & Colleges | 17 | Don Honorio Ventura Technological State University |
| State Univ. & Colleges | 18 | Nueva Ecija University of Science and Technology |
| State Univ. & Colleges | 19 | Pampanga State Agricultural University |
| State Univ. & Colleges | 20 | Tarlac State University |
| State Univ. & Colleges | 21 | Laguna State Polytechnic University |
| State Univ. & Colleges | | Laguna State Polytechnic University - Santa Cruz Campus |
| State Univ. & Colleges | 22 | Southern Luzon State University |
| State Univ. & Colleges | 23 | Batangas State University |
| State Univ. & Colleges | 24 | University of Rizal System |
| State Univ. & Colleges | 25 | Cavite State University |
| State Univ. & Colleges | 26 | Marinduque State College |
| State Univ. & Colleges | 27 | Mindoro State College of Agriculture and Technology |

Citizen's Charter

CITIZEN’S CHARTER
APRIL 2019

VISION

Tarlac State University is envisioned to be a premier University in the Asia-Pacific.

MISSION

Tarlac State University commits to promote and sustain the offering of quality and relevant programs in higher and advanced education ensuring equitable access to education for people empowerment, professional development, and global competitiveness. Towards this end, TSU shall:

1. Provide high quality instruction through qualified, competent and adequately trained faculty members and support staff.
2. Be a premier research institution by enhancing research undertakings in the fields of technology and sciences and strengthening collaboration with local and international institutions.
3. Be a champion in community development by strengthening partnership with public and private organizations and individuals.

CORE VALUES

E - Excellence and Enhanced Competence
Q - Quality
U - Unity
I - Integrity and Involvement
T - Trust in God, Transparency and True Commitment
Y - Yearning for Global Competitiveness

SERVICE PLEDGE

Pursuant to its mandated mission, the Tarlac State University as a premier institution in the Province of Tarlac, in particular, and in the region, in general, shall:

• Continue the curricular offerings of the graduate school, colleges of engineering, architecture and fine arts, technology, education, computer studies, business and accountancy, arts and social sciences, public administration, human kinetics, law, nursing, science and information technology related courses.
• Offer other courses that the Board of Regents may deem necessary.
• Continue to develop TSU into a regional center of excellence in engineering, technological, business and teacher education, research and extension in order to produce graduates who are globally competitive.
• In an environment valuing freedom, excellence and equity, TSU shall provide and maintain relevant instruction, research, extension and production programs effectively to develop responsible members of the society imbued with critical and creative thinking. -

Primary Aim to Streamline R&E Operations

Shamag
Official Publication of Benguet State University
ISSN 0118-0304, MARCH 2019, Vol. XXVIV No. 3
bsu.edu.ph · Benguet State University · @BenguetStateU · @benguetstateuniversityofficial

Photo caption: BSU Research and Extension Coordinators from the different colleges, full time researchers and staff of R&E Sector gathered in a 5-day Planning Workshop in Dagupan City with the primary aim to streamline R&E operations. //NLenguaje

INSIDE
Page 6: NSTP conducts singing competition and eco-fashion show to promote environmental conservation
Page 4: Faculty and students train on fruit and vegetable carving
Page 7: BSU conducts training on PAP proposal preparation with gender perspective

Workshop held to align R&E operations with Sustainable Development Goals

The BSU Research and Extension sector conducted a planning-workshop to streamline research and extension operations on March 25-29, 2019 at Dagupan City, Pangasinan.

Dr. Carlito P. Laurean, Vice President for Research and Extension, said that the planning-workshop intends to identify RDE agenda and priorities aligned with the Sustainable Development Goals (SDGs) as well as to map out the 3-year operational plan (2020-2022) of the sector.

... faculty from the Colleges as pool of researchers in the various R & E Centers; and identify overlaps and interfaces between and among the R & E centers; mainstream student researches into the University R & E programs; identify (CONTINUED ON PAGE 5)

TBI Project Leaders urged to ‘level up’

“There must be a leveling up in your TBI (Technology Business Incubation).” Mr. Noel A. Catibog of DOST-PCAARRD said to batch 2 project leaders of the TBI program during the Agribusiness Incubation

BSU student makes it to 21st Ayala Young Leaders Congress

Cristine M. -

2019 Annual Report

BENGUET STATE UNIVERSITY
2019 ANNUAL REPORT: ACADEMIC SECTOR

Table of Contents

I. CURRICULUM AND INSTRUCTION
   A. Degree Programs and Short Courses
   B. Program Accreditation
   C. Program Certification
II. STUDENTS
   A. Enrolment
   B. Student Awards
   C. Student Scholarship and RA 10931 Implementation
   D. Student Development
   E. Student Mobility
   F. Graduates -

State Universities and Colleges

STATE UNIVERSITIES AND COLLEGES

R. BANGSAMORO AUTONOMOUS REGION IN MUSLIM MINDANAO (BARMM)

R.1. ADIONG MEMORIAL POLYTECHNIC STATE COLLEGE

For general administration and support, support to operations, and operations, including locally-funded project(s), as indicated hereunder: P 155,730,000

New Appropriations, by Program

Personnel Services and Maintenance and Other Operating Expenses together make up Current Operating Expenditures. All amounts are in pesos (P).

| Program | Personnel Services | Maintenance and Other Operating Expenses | Capital Outlays | Total |
|---|---|---|---|---|
| 100000000000000 General Administration and Support | 10,597,000 | 14,495,000 | | 25,092,000 |
| 200000000000000 Support to Operations | 2,000 | 840,000 | 29,153,000 | 29,995,000 |
| 300000000000000 Operations | 18,863,000 | 13,594,000 | 68,186,000 | 100,643,000 |
| HIGHER EDUCATION PROGRAM | 18,863,000 | 7,411,000 | 68,186,000 | 94,460,000 |
| ADVANCED EDUCATION PROGRAM | | 574,000 | | 574,000 |
| RESEARCH PROGRAM | | 1,872,000 | | 1,872,000 |
| TECHNICAL ADVISORY EXTENSION PROGRAM | | 3,737,000 | | 3,737,000 |
| TOTAL NEW APPROPRIATIONS | 29,462,000 | 28,929,000 | 97,339,000 | 155,730,000 |

New Appropriations, by Programs/Activities/Projects (Cash-Based)