Techniques for Accelerating Intensity-Based Rigid Image Registration

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

241E1SB/00 Philips LCD Monitor with Smarttouch Controls

Philips LCD monitor with SmartTouch controls 24"w E-line Full HD 241E1SB Big Full-HD display for great viewing experience Enjoy more with this attractive 16:9 Full HD 1080p quality display ! With DVI and VGA inputs, Auto format, SmartContrast and Glossy finish, the 241E1 is a compelling choice for all your needs Great picture quality • Full HD LCD display, 1920x1080p resolution • 16:9 display for best widescreen gaming and video • 5ms fast response time • SmartContrast 25000:1 for incredible rich black details • DVI signal input for full HD performance with HDCP Design that complements any interior • Glossy finish to enhance your decor Environmentally friendly green design • Energy Star for energy efficiency and low power consumption • Eco-friendly materials meets major International standards Great convenience • Dual input accepts both analog VGA and digital DVI signals • Ultra-modern SmartTouch controls • Auto picture format control switching in wide and 4:3 format LCD monitor with SmartTouch controls 241E1SB/00 24"w E-line Full HD Highlights Full HD 1920x1080p LCD display SmartContrast ratio 25000:1 exceed the standard. For example, in sleep This display has a resolution that is referred to mode Energy Star 5.0 requires less than 1watt as Full HD. With state-of-the-art LCD screen power consumption, whereas Philips monitors technology it has the full high-definition consume less than 0.5watts. Further details can widescreen resolution of 1920 x 1080p. It allows be obtained from www.energystar.gov the best possible picture quality from any format of HD input signal. It produces brilliant flicker- Dual input free pictures with optimum brightness and superb colors. -

190V1SB/00 Philips LCD Widescreen Monitor

Philips LCD widescreen monitor 19"w V-line 1440 x 900/Format 16:10 190V1SB Widescreen display offering good value With features like DVI and VGA inputs, SmartContrast and Energy efficiency, the 190V1 offers good value. High-quality picture • WXGA+, wide format 1440 x 900 resolution for sharper display • 5 ms fast response time • SmartContrast: For incredible rich black details Great convenience • Dual input accepts both analogue VGA and digital DVI signals • Easy picture format control switching between wide and 4:3 formats • Screen tilts to your ideal, individualised viewing angle Environmentally friendly green design • Eco-friendly materials that meet international standards • Complies with RoHS standards of care for the environment • EPEAT Silver ensures lower impact on environment LCD widescreen monitor 190V1SB/00 19"w V-line 1440 x 900/Format 16:10 Highlights Specifications Eco-friendly materials and back again to match the display's aspect ratio Picture/Display With sustainability as a strategic driver of its with your content; it allows you to work with wide • LCD panel type: TFT-LCD business, Philips is committed to using eco-friendly documents without scrolling or viewing widescreen • Panel Size: 19 inch/48.1 cm materials across its new product range. Lead-free media in the widescreen mode and gives you • Aspect ratio: 16:10 materials are used across the range. All body plastic distortion-free, native mode display of 4:3 ratio • Pixel pitch: 0.285 x 0.285 mm parts, metal chassis parts and packing material uses content. • Brightness: 250 cd/m² 100% recyclable material. A substantial reduction in • SmartContrast: 20000:1 mercury content in lamps has been achieved. -

Panasonic BT-LH1710 Operating Instructions

Operating Instructions LCD Video Monitor Model No. BT-LH1760P Model No. BT-LH1760E Model No. BT-LH1710P Model No. BT-LH1710E Before operating this product, please read the instructions carefully and save this manual for future use. F1008T0 -P D ENGLISH Printed in Japan VQT1Z04 Read this first ! (for BT-LH1760P/1710P) CAUTION: In order to maintain adequate ventilation, do not install or place this unit in a bookcase, built-in cabinet or any other confined space. To prevent risk of electric shock or fire hazard due to overheating, ensure that curtains and any other materials do not obstruct the ventilation. CAUTION: TO REDUCE THE RISK OF FIRE OR SHOCK HAZARD AND ANNOYING INTERFERENCE, USE THE RECOMMENDED ACCESSORIES ONLY. CAUTION: This apparatus can be operated at a voltage in the range of 100 - 240 V AC. Voltages other than 120 V are not intended for U.S.A. and Canada. CAUTION: Operation at a voltage other than 120 V AC may require the use of a different AC plug. Please contact either a local or foreign Panasonic authorized service center for assistance in selecting an alternate AC plug. ■ THIS EQUIPMENT MUST BE GROUNDED To ensure safe operation, the three-pin plug must be inserted only into a standard three-pin power outlet CAUTION: which is effectively grounded through normal • Keep the temperature inside the rack to household wiring. Extension cords used with the between 41°F to 95°F (5°C to 35°C). equipment must have three cores and be correctly • Bolt the rack securely to the floor so that it wired to provide connection to the ground. -

Video Data Processing Method and Device for Display Adaptive Video Playback

(19) TZZ ¥¥_T (11) EP 2 983 354 A1 (12) EUROPEAN PATENT APPLICATION (43) Date of publication: (51) Int Cl.: 10.02.2016 Bulletin 2016/06 H04N 5/44 (2006.01) G06F 3/14 (2006.01) G09G 5/00 (2006.01) H04N 21/4363 (2011.01) (2011.01) (21) Application number: 15001639.2 H04N 21/44 (22) Date of filing: 02.06.2015 (84) Designated Contracting States: (72) Inventors: AL AT BE BG CH CY CZ DE DK EE ES FI FR GB • Oh, Hyunmook GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO 137-893 Seoul (KR) PL PT RO RS SE SI SK SM TR • Suh, Jongyeul Designated Extension States: 137-893 Seoul (KR) BA ME Designated Validation States: (74) Representative: Katérle, Axel MA Wuesthoff & Wuesthoff Patentanwälte PartG mbB (30) Priority: 08.08.2014 US 201462034792 P Schweigerstraße 2 81541 München (DE) (71) Applicant: LG Electronics Inc. Yeongdeungpo-gu Seoul 150-721 (KR) (54) VIDEO DATA PROCESSING METHOD AND DEVICE FOR DISPLAY ADAPTIVE VIDEO PLAYBACK (57) A video data processing method and/ device for including electro optical transfer function (EOTF) type display adaptive video playback are disclosed. The video information for identifying EOTF supported by the sink data processing method includes transmitting display in- device, receiving video data from the source device via formation indicating capabilities of a sink device to a the interface, and playing the received video data back. source device via an interface, the display information EP 2 983 354 A1 Printed by Jouve, 75001 PARIS (FR) EP 2 983 354 A1 Description BACKGROUND OF THE INVENTION 5 Field of the Invention [0001] The present invention relates to processing of video data and, more particularly, to a video data processing method and device for display adaptive video playback. -

Digital Image Prep for Displays

Digital Image Prep for Displays Jim Roselli ISF-C, ISF-R, HAA Tonight's Agenda • A Few Basics • Aspect Ratios • LCD Display Technologies • Image Reviews Light Basics We view the world in 3 colors Red, Green and Blue We are Trichromatic beings We are more sensitive to Luminance than to Color Our eyes contain: • Light 4.5 million cones (color receptors) 90 million rods (lumen receptors) Analog vs. Digital Light Sample • Digitize the Photon Light Sample Light Sample DSLR’s Canon 5D mkII and Nikon D3x 14 bits per color channel (16,384) Color channels (R,G & B) • Digital Capture Digital Backs Phase One 16 bits per channel (65,536) Color channels (R,G & B) Square Pixels Big pixels have less noise … Spatial Resolution Round spot vs. Square pixel • Size Matters Square Pixels • What is this a picture of ? Aspect Ratio Using Excel’s “Greatest Common Divisor” function • The ratio of the width to =GCD(x,y) xRatio = (x/GCD) the height of the image yRatio = (y/GCD) on a display screen Early Television • How do I calculate this ? Aspect Ratio 4 x 3 Aspect Ratio • SAR - Storage Aspect Ratio • DAR – Display Aspect Ratio • PAR – Pixel Aspect Ratio Aspect Ratio Device Width(x) Heigth(y) GCD xRa5o yRa5o Display 4096 2160 16 256 135 Display 1920 1080 120 16 9 • Examples Display 1280 720 80 16 9 Display 1024 768 256 4 3 Display 640 480 160 4 3 Aspect Ratio Device Width(x) Heigth(y) GCD xRa5o yRa5o Nikon D3x 6048 4032 2016 3 2 • Examples Canon 5d mkII 4368 2912 1456 3 2 Aspect Ratio Device Width(x) Heigth(y) GCD xRa5o yRa5o iPhone 1 480 320 160 3 2 HTC Inspire 800 480 160 5 3 • Examples Samsung InFuse 800 480 160 5 3 iPhone 4 960 640 320 3 2 iPad III 2048 1536 512 4 3 Motorola Xoom Tablet 1280 800 160 8 5 Future Aspect Ratio Device Width(x) Heigth(y) GCD xRa5o yRa5o Display 2048 1536 512 4 3 Display 3840 2160 240 16 9 Display 7680 4320 480 16 9 Aspect Ratio 4K Format Aspect Ratio • Don’t be scared … Pan D3x Frame . -

3. Visual Attention

Brunel UNIVERSITY WEST LONDON INTELLIGENT IMAGE CROPPING AND SCALING A thesis submitted for the degree of Doctor of Philosophy by Joerg Deigmoeller School of Engineering and Design th 24 January 2011 1 Abstract Nowadays, there exist a huge number of end devices with different screen properties for watching television content, which is either broadcasted or transmitted over the internet. To allow best viewing conditions on each of these devices, different image formats have to be provided by the broadcaster. Producing content for every single format is, however, not applicable by the broadcaster as it is much too laborious and costly. The most obvious solution for providing multiple image formats is to produce one high- resolution format and prepare formats of lower resolution from this. One possibility to do this is to simply scale video images to the resolution of the target image format. Two significant drawbacks are the loss of image details through downscaling and possibly unused image areas due to letter- or pillarboxes. A preferable solution is to find the contextual most important region in the high-resolution format at first and crop this area with an aspect ratio of the target image format afterwards. On the other hand, defining the contextual most important region manually is very time consuming. Trying to apply that to live productions would be nearly impossible. Therefore, some approaches exist that automatically define cropping areas. To do so, they extract visual features, like moving areas in a video, and define regions of interest (ROIs) based on those. ROIs are finally used to define an enclosing cropping area. -

(12) United States Patent (10) Patent No.: US 7,257,776 B2 Bailey Et Al

US007257776B2 (12) United States Patent (10) Patent No.: US 7,257,776 B2 Bailey et al. (45) Date of Patent: Aug. 14, 2007 (54) SYSTEMS AND METHODS FORSCALING A 5,838,969 A 11/1998 Jacklin et al. GRAPHICAL USER INTERFACE 5,842,165 A 11/1998 Raman et al. ACCORDING TO DISPLAY DIMIENSIONS 5,854,629 A 12/1998 Redpath AND USINGA TERED SIZING SCHEMATO 6,061,653 A 5, 2000 Fisher et al. DEFINE DISPLAY OBJECTS 6,081,816 A * 6/2000 Agrawal ..................... 715,521 6,125,347 A 9, 2000 Cote et al. 6, 192,339 B1 2, 2001 Cox (75) Inventors: Richard St. Clair Bailey, Bellevue, 6,233,559 B1 5/2001 Balakrishnan WA (US); Stephen Russell Falcon, 6,310,629 B1 10/2001 Muthusamy et al. Woodinville, WA (US); Dan Banay, 6,434,529 B1 8, 2002 Walker et al. Seattle, WA (US) 6,456.305 B1* 9/2002 Qureshi et al. ............. T15,800 6,456,974 B1 9, 2002 Baker et al. (73) Assignee: Microsoft Corporation, Redmond, WA (US) (Continued) OTHER PUBLICATIONS (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 “Winamp 3 Preview”.http://www.mp3 newswire.net/stories/2001/ U.S.C. 154(b) by 286 days. winamp3.htm. May 23, 2001. (Continued) (21) Appl. No.: 10/072,393 Primary Examiner Tadesse Hailu (22) Filed: Feb. 5, 2002 Assistant Examiner Michael Roswell (74) Attorney, Agent, or Firm—Lee & Hayes PLLC (65) Prior Publication Data US 2003/O146934 A1 Aug. 7, 2003 (57) ABSTRACT Systems and methods are described for Scaling a graphical (51) Int. -

Property Name Definition / Semantics Ebucore Ebucore Implementation

EBUCore implementation hints and Property Name Definition / Semantics EBUcore examples EBU Core in mets:dmdSec[dataObject] The title of an item contained in a dataObject may differ, either slightly or wholly, from the title of the Version and/or title Work/Variant to which it is linked hierarchically. In particular, title/dc:title where an incomplete physical product of the Version has been acquired and been catalogued as an item The broad media type of the item contained in a dataObject (e.g., film, video, audio, optical, digital file). Recording this high-level <format><medium typeLabel="digital" physical description information will enable simple searching for only film, video, format/medium/@typeLabel /></format> digital, etc. elements rather than searching by all possible formats and carriers. The physical carrier storing the digital file.For digital files, it is most important for users to immediately identify the file <description description[@typeLabel=“specificCarrierType“]/ specific carrier type container or wrapper (MXF, MOV, DPX, etc.) Institutions should typeLabel=“specificCarrierType“><dc:descript dc:description develop standard lists of terms to indicate the specific carrier ion>DCDM</dc:description></description> type or refer to authoritative existing lists. Description of the preservation or access status of the description[@typeLabel=“accessStatus“]/ preservation/access status dataObject, for example Master, Viewing, etc. Select term from a dc:description controlled list. Additional information specific to the item contained in the description[@typeLabel=“comment“]/ supplementary information dataObject dc:description Enter the numerical measurement indicating the size of the file size format/fileSize[@unit="GB"] digital asset’s file(s), in KB, MB, GB, or TB. -

(Tech 3264) to Ebu-Tt Subtitle Files

TECH 3360 EBU-TT, PART 2 MAPPING EBU STL (TECH 3264) TO EBU-TT SUBTITLE FILES VERSION 1.0 SOURCE: SP/MIM – XML SUBTITLES Geneva May 2017 There are blank pages throughout this document. This document is paginated for two sided printing Tech 3360 - Version 1.0 EBU-TT Part 2 - EBU STL Mapping to EBU-TT Conformance Notation This document contains both normative text and informative text. All text is normative except for that in the Introduction, any section explicitly labelled as ‘Informative’ or individual paragraphs which start with ‘Note:’ Normative text describes indispensable or mandatory elements. It contains the conformance keywords ‘shall’, ‘should’ or ‘may’, defined as follows: ‘Shall’ and ‘shall not’: Indicate requirements to be followed strictly and from which no deviation is permitted in order to conform to the document. ‘Should’ and ‘should not’: Indicate that, among several possibilities, one is recommended as particularly suitable, without mentioning or excluding others. OR indicate that a certain course of action is preferred but not necessarily required. OR indicate that (in the negative form) a certain possibility or course of action is deprecated but not prohibited. ‘May’ and ‘need not’: Indicate a course of action permissible within the limits of the document. Default identifies mandatory (in phrases containing “shall”) or recommended (in phrases containing “should”) values that can, optionally, be overwritten by user action or supplemented with other options in advanced applications. Mandatory defaults must be supported. The support of recommended defaults is preferred, but not necessarily required. Informative text is potentially helpful to the user, but it is not indispensable and it does not affect the normative text. -

Welcome to Xtend - the Simplest Display Scaler

docs.xgasoft.com Welcome to Xtend - The Simplest Display Scaler Copyright © XGASOFT, All Rights Reserved Display scalers: everyone needs one, and Xtend is the last one you'll ever need! Xtend automatically and intelligently resizes your games and applications to ll any window or display, desktop or mobile, with pixel precision and no black bars. It's easy to use and comes pre-congured to suit most projects, while also oering deep customization and powerful camera functions for even the most challenging scaling needs. Features Setupless How to use Xtend? Add it to your project. Done. Seriously. (But you can always customize it if you want to!) Universal Xtend supports multiple scaling modes for dierent needs. No matter your project, there's a mode for you! Such as: Linear - For desktop-style applications that need 1:1 screen real estate Aspect - For games and interactive applications that need to ll the screen while preserving a base play area Axis - For horizontal or vertical splitscreen Pixel - For retro art styles that demand pixel perfection at any shape and size All modes also support DPI scaling for windowed applications, ensuring consistent physical size across any screen resolution and pixel density. Copyright © XGASOFT, All Rights Reserved Optimal Xtend only runs when there's a change in viewport resolution. Your app's performance is uncompromised! Powerful Still not enough? Xtend includes additional functions for managing multiple view cameras, all with their own scaling modes, and drawing regular content relative to display scale. It's the complete package! Helpful Take a peek under the hood at any time with built-in debug statistics and hint boxes. -

220E1SB/00 Philips LCD Monitor

Philips LCD monitor 22" w E-line 21.6" w Display Full HD 220E1SB Full HD 16:9 display with glossy design Enjoy more with this Vista-ready Full HD 1080p quality display! The 220E1 comes with an attractive glossy finish and easy-to-use features — all in a budget-friendly package. Great picture quality • Full HD LCD display, 1920 x 1080p resolution • 5 ms fast response time • SmartContrast 8000:1 for rich black details Design that complements any interior • Glossy finish to enhance your decor Great convenience • Auto picture format control switching in wide and 4:3 format LCD monitor 220E1SB/00 22" w E-line 21.6" w Display Full HD Specifications Highlights Picture/Display Power Full HD 1920 x 1080p LCD display • LCD panel type: TFT-LCD • Power LED indicator: Operation - green, Standby/ This display has a resolution that is referred to as Full • Panel Size: 21.6 inch/54.86 cm sleep - Amber HD. With state-of-the-art LCD screen technology it • Aspect ratio: 16:9 • Power supply: Built-in, 100-240 VAC, 50/60 Hz has the full high-definition widescreen resolution of • Pixel pitch: 0.248 x 0.248 mm • On mode: 40 W (typical) 1920 x 1080p. It allows the best possible picture • Viewing angle: 170º (H)/160º (V), @ C/R > 10 • Off mode: < 0.5 W quality from any format of HD input signal. It • Brightness: 300 cd/m² produces brilliant flicker-free pictures with optimum • SmartContrast: 8000:1 Dimensions brightness and superb colours. This vibrant and • Contrast ratio (typical): 1000:1 • Product with stand (mm): 512 x 374 x 205 mm sharp image will provide you with an enhanced • Response time (typical): 5 ms • Product without stand (mm): 453 x 570 x 140 mm viewing experience. -

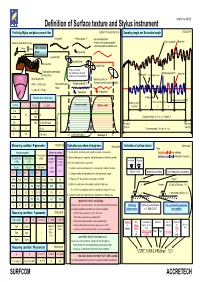

Definition of Surface Texture and Stylus Instrument

Definition of Surface texture and Stylus instrument Confirm to ISO’02 ISO4287:’97 Profile by Stylus and phase correct filter ISO4287:’97 and ISO3274:’96 Sampling length and Evaluation length Total profile Primary profile P Form deviation profile =Mean line for roughness profile Primary profile P Mean line Measure perpendicular to lay =Waviness profile on old DIN & JIS X-axis Z-axis Stylus method probe P-parameter λs profile filter λc profile filter Real surface λf profile filter Phase correct filter Traced profile perpendicular 50% transmission at cutoff Roughness profile R Profile peak Mean line to real surface No phase shift / low distortion Top of profile peak Stylus tip geometry Waviness profile W θ ° ・Stylus deformation Roughness profile R (Filtered center line waviness profile) θ=60 ( or 90°) cone ・Noise rtip rtip=2µm( or 5, 10µm) R-parameter W-parameter 100% Selection of λs & Stylus tip rtip Profile valley Profile element width Xs Sampling length lr Bottom of profile valley Roughness profile λc (mm) λc/λs rtip (µm) Waviness profile = Cutoff λc lr lr lr lr 0.08 30 2.5 2 50% 0.25 100 Evaluation length ln = n×lr (n: Default 5) 0.8 300 2 (5 at Rz >3µm) Pre travel Post travel lp (λ /2) lp (λ /2) 2.5 8 5 or 2 c Traversing length Lt = Lp + Ln + Lp c Transmission 0 8 25 10, 5 or 2 λs Cutoff (Wavelength) λc λc Wavelength λ λf ISO4288:’96 Measuring condition: R-parameter Evaluation procedure of roughness ISO4288:’96 Indication of surface texture ISO1302:’02 Note.: Non-periodic profile Measuring condition 1.