Projecting the Future Individual Contributions of NHL

Recommended publications

-

2021 District 41 Inter-League Rules

2021 District 41 Inter-League Rules

Objective: Promote, develop, supervise, and voluntarily assist in all lawful ways, the interest of those who will participate in Little League Baseball. District 41 leagues participating in Inter-league play will adhere to the same rules for the 2021 Season.

General: The “2021 Official Regulations and Playing Rules of Little League Baseball” will be strictly enforced, except those rules adopted by these Bylaws. All Managers, Coaches and Umpires shall familiarize themselves with all rules contained in the 2021 Official Regulations and Playing Rules of Little League Baseball (Blue Book/LL app). All Managers, Coaches and Umpires shall familiarize themselves with District 41 2021 Inter-league Bylaws.

District 41 Division Bylaws
• Tee-Ball Division:
  • Teams can either use the tee or coach pitch.
  • Each field must have a tee at their field.
  • A Tee-ball game is 60 minutes maximum.
  • Each team will bat the entire roster each inning. Official scores or standings shall not be maintained.
  • Each batter will advance one base on a ball hit to an infielder or outfielder, with a maximum of two bases on a ball hit past an outfielder. The last batter of each inning will clear the bases and run as if a home run and teams will switch sides.
  • No stealing of bases or advancing on overthrows.
  • A coach from the team on offense will place/replace the balls on the batting tee.
  • On defense, all players shall be on the field. There shall be five/six (if using a catcher) infield positions. The remaining players shall be positioned in the outfield. -

NCAA Statistics Policies

Statistics POLICIES AND GUIDELINES

CONTENTS
Introduction, 3
  NCAA Statistics Compilation Guidelines, 3
  First Year of Statistics by Sport, 4
  School Code, 4
Countable Opponents, 5
  Definition, 5
  Non-Countable Opponents, 5
  Sport Implementation, 5
Rosters, 6
  Head Coach Determination, 6
  Co-Head Coaches, 7 -

An Introductory Guide to Advanced Hockey Stats

SHOT METRICS: AN INTRODUCTORY GUIDE TO ADVANCED HOCKEY STATS by Mike McLaughlin Patrick McLaughlin July 2013 Copyright © Left Wing Lock, Inc. 2013 All Rights Reserved ABSTRACT A primary goal of analysis in hockey and fantasy hockey is the ability to use statistics to accurately project the future performance of individual players and teams. Traditional hockey statistics (goals, assists, +/-, etc.) are limited in their ability to achieve this goal, due in large part to their non-repeatability. One alternative approach to hockey analysis would use puck possession as its fundamental metric. That is, if a player or team is dominant, that dominance should be reflected in the amount of time in which they possess the puck. Unfortunately, the NHL neither tracks nor publishes data related to puck possession. In spite of this lack of data, there are methods that can be used to track puck possession. The purpose of this document is to introduce hockey fans (and fantasy hockey managers) to the topic of Shot Metrics. Briefly, Shot Metrics involves the use of NHL shot data to analyze individual players and teams. The shot data is used as a proxy for puck possession. Essentially, teams that are able to shoot the puck more often are doing so because they are more frequently in possession of the puck. It turns out that teams that are able to consistently outshoot their opponents typically end up winning games and performing well in the playoffs [1]. Thus, shot data can play an integral role in the way the game of hockey is analyzed. -
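The abstract above treats shot data as a stand-in for puck possession. As a rough illustration only, one simple way to express that idea is the share of all shots that belong to one side. The sketch below assumes a hypothetical `ShotTotals` record and a `shot_share` helper; neither name comes from the guide, and the guide's own metrics may be defined differently.

```python
from dataclasses import dataclass


@dataclass
class ShotTotals:
    """Hypothetical record of shots for and against one team or player."""
    shots_for: int
    shots_against: int


def shot_share(totals: ShotTotals) -> float:
    """Return the fraction of all recorded shots taken by the team in question.

    Assumption: a higher share is read as more time in possession,
    following the proxy argument in the abstract.
    """
    total = totals.shots_for + totals.shots_against
    if total == 0:
        raise ValueError("no shots recorded, so the share is undefined")
    return totals.shots_for / total


if __name__ == "__main__":
    # Made-up numbers for illustration, not real NHL data.
    game = ShotTotals(shots_for=30, shots_against=20)
    print(f"shot share: {shot_share(game):.3f}")
```

A share above 0.5 means the side outshot its opponent over the sample, which is the situation the abstract associates with winning more often.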

P18 3.E$S 4 Layout 1

THURSDAY, APRIL 6, 2017 SPORTS Nations turn to Plan B for hockey at Olympics with no NHL NEW YORK: The morning after the NHL announced it wasn’t going to the 2018 Olympics, some Americans playing in Europe started wondering if they should keep their schedules open for next February. “Myself and couple other Americans, Deron Quint and Dave Leggio, were joking around to not make any plans over the Olympic break next year because [...] is true even in European professional leagues. The result in coming months may be nations navigating a wild set of complications in putting their Olympic teams together. If Patrick Kane, Jonathan Quick, Jack Eichel and Auston Matthews aren’t available, USA Hockey will likely look to Americans playing Europe to fill the bulk [...] player pool “as deep as it has ever been,” and executive Jim Johannson - who could be tasked with putting the team together - said the US will “have 25 great stories on the ice in South Korea and will go to the Olympics with medal expectations.” GOLD-MEDAL EXPECTATIONS Two-time defending Olympic champi- [...] Renney said. In a recent interview, Renney said Hockey Canada has already pursued its Plan B and will be nimble enough to adjust to any changes to rules concerning eligible players. Two-time Canadian Olympic gold-medal winner Jonathan Toews expects top junior and college and a lot of European players to make up Canada’s roster. [...] that. Assuming European leagues do give players permission or stop their seasons, the player pool for the U.S., Canada and other countries could grow if potential borderline NHL free agents choose to go abroad next season for a chance to play in the Olympics. Russia is likely to be the gold medal -

Brandon Wheat Kings

JUNIOR PLAYERS WHO HAVE PREVIOUSLY PARTICIPATED Brandon Kamloops Moose Jaw Regina CIS Schael Higson Luc Smith Noah Gregor Josh Mahura Tyson Baillie - Alberta Sam Steel Stephane Legault - Alberta Calgary Kelowna Portland Rhett Rachinski - Alberta Tyler Mrkonjic Konrad Belcourt Skyler McKenzie Seattle Nolan Volcan World Junior Edmonton Kootenay Red Deer Carter Hart Patrick Dea Troy Murray Jared Freadrich Swift Current Tyson Jost Trey Fix-Wolansky Lane Zablocki Jordan Papirny Medicine Hat Everett Ryan Jevne Vancouver Matt Fonteyne Tyler Benson Carter Hart PROFESSIONAL PLAYERS WHO HAVE PREVIOUSLY PARTICIPATED Arizona Dallas New York Rangers Vancouver Kevin Connauton Austin Fyten Tanner Glass Derek Dorsett Shane Doan Ales Hemsky Nick Holden Winnipeg Jordan Martinook Edmonton Ottawa Eric Comrie Boston Jordan Eberle Clarke MacArthur Brenden Kichton Jake Debrusk Andrew Ference Dion Phaneuf JC Lipon Joe Morrow Mark Letestu Troy Rutkowski AHL Buffalo David Musil Zach Smith Travis Ewanyk Tyler Ennis Dillon Simpson Zack Stortini TJ Foster Taylor Fedun Florida Philadelphia Brett Ponich Calgary Alex Petrovic Jordan Weal Jaynen Rissling Matt Frattin Mark Pysyk San Jose Harrison Ruopp Kent Simpson Brent Regner David Schlemko Brandon Troock Ladislav Smid Los Angeles St. Louis Joshua Winquist Kris Versteeg Tom Gilbert Jay Bouwmeester Olympians - Men Carolina Matt Greene Kyle Brodziak Jay Bouwmeester - Canada Keegan Lowe Minnesota Magnus Paajarvi Jarome Iginla - Canada Cam Ward Devan Dubnyk Scott Upshall Chris Pronger - Canada Chicago Jared Spurgeon Nail -

Hockey Pools for Profit: a Simulation Based Player Selection Strategy

HOCKEY POOLS FOR PROFIT: A SIMULATION BASED PLAYER SELECTION STRATEGY Amy E. Summers B.Sc., University of Northern British Columbia, 2003 A PROJECT SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE in the Department of Statistics and Actuarial Science © Amy E. Summers 2005 SIMON FRASER UNIVERSITY Fall 2005 All rights reserved. This work may not be reproduced in whole or in part, by photocopy or other means, without the permission of the author. APPROVAL Name: Amy E. Summers Degree: Master of Science Title of project: Hockey Pools for Profit: A Simulation Based Player Selection Strategy Examining Committee: Dr. Richard Lockhart Chair Dr. Tim Swartz Senior Supervisor Simon Fraser University Dr. Derek Bingham Supervisor Simon Fraser University Dr. Gary Parker External Examiner Simon Fraser University Date Approved: SIMON FRASER UNIVERSITY PARTIAL COPYRIGHT LICENCE The author, whose copyright is declared on the title page of this work, has granted to Simon Fraser University the right to lend this thesis, project or extended essay to users of the Simon Fraser University Library, and to make partial or single copies only for such users or in response to a request from the library of any other university, or other educational institution, on its own behalf or for one of its users. The author has further granted permission to Simon Fraser University to keep or make a digital copy for use in its circulating collection. The author has further agreed that permission for multiple copying of this work for scholarly purposes may be granted by either the author or the Dean of Graduate Studies. -

Sabermetrics: the Past, the Present, and the Future

Sabermetrics: The Past, the Present, and the Future Jim Albert February 12, 2010 Abstract This article provides an overview of sabermetrics, the science of learning about baseball through objective evidence. Statistics and baseball have always had a strong kinship, as many famous players are known by their famous statistical accomplishments such as Joe DiMaggio’s 56-game hitting streak and Ted Williams’ .406 batting average in the 1941 baseball season. We give an overview of how one measures performance in batting, pitching, and fielding. In baseball, the traditional measures are batting average, slugging percentage, and on-base percentage, but modern measures such as OPS (on-base percentage plus slugging percentage) are better in predicting the number of runs a team will score in a game. Pitching is a harder aspect of performance to measure, since traditional measures such as winning percentage and earned run average are confounded by the abilities of the pitcher’s teammates. Modern measures of pitching such as DIPS (defense independent pitching statistics) are helpful in isolating the contributions of a pitcher that do not involve his teammates. It is also challenging to measure the quality of a player’s fielding ability, since the standard measure of fielding, the fielding percentage, is not helpful in understanding the range of a player in moving towards a batted ball. New measures of fielding have been developed that are useful in measuring a player’s fielding range. Major League Baseball is measuring the game in new ways, and sabermetrics is using this new data to find better measures of player performance. -
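The abstract defines OPS as on-base percentage plus slugging percentage. A minimal sketch of that arithmetic follows, using the standard definitions of the two components (the abstract itself does not spell them out). The function names and the sample stat line are hypothetical and chosen only for illustration.

```python
def on_base_percentage(hits: int, walks: int, hit_by_pitch: int,
                       at_bats: int, sac_flies: int) -> float:
    """Standard OBP: times on base divided by the plate appearances that count."""
    denominator = at_bats + walks + hit_by_pitch + sac_flies
    if denominator == 0:
        raise ValueError("no qualifying plate appearances")
    return (hits + walks + hit_by_pitch) / denominator


def slugging_percentage(total_bases: int, at_bats: int) -> float:
    """Standard SLG: total bases per at-bat."""
    if at_bats == 0:
        raise ValueError("no at-bats")
    return total_bases / at_bats


def ops(obp: float, slg: float) -> float:
    """OPS as described in the abstract: on-base percentage plus slugging percentage."""
    return obp + slg


if __name__ == "__main__":
    # Made-up season line, not a real player's statistics.
    obp = on_base_percentage(hits=150, walks=50, hit_by_pitch=0,
                             at_bats=500, sac_flies=0)
    slg = slugging_percentage(total_bases=250, at_bats=500)
    print(f"OBP={obp:.3f} SLG={slg:.3f} OPS={ops(obp, slg):.3f}")
```

Keeping the two components as separate functions makes it easy to compare each of them, and their sum, against runs scored, which is the comparison the abstract refers to.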

20 0124 Bridgeport Bios

BRIDGEPORT SOUND TIGERS: COACHES BIOS BRENT THOMPSON - HEAD COACH Brent Thompson is in his seventh season as head coach of the Bridgeport Sound Tigers, which also marks his ninth year in the New York Islanders organization. Thompson was originally hired to coach the Sound Tigers on June 28, 2011 and led the team to a division title in 2011-12 before being named assistant coach of the Islanders for two seasons (2012-14). On May 2, 2014, the Islanders announced Thompson would return to his role as head coach of the Sound Tigers. He is 246-203-50 in 499 career regular-season games as Bridgeport's head coach. Thompson became the Sound Tigers' all-time winningest head coach on Jan. 28, 2017, passing Jack Capuano with his 134th career victory. Prior to his time in Bridgeport, Thompson served as head coach of the Alaska Aces (ECHL) for two years (2009-11), winning the Kelly Cup Championship in 2011. During his two seasons as head coach in Alaska, Thompson amassed a record of 83-50-11 and won the John Brophy Award as ECHL Coach of the Year in 2011 after leading the team to a record of 47-22-3. Thompson also served as a player/coach with the CHL’s Colorado Eagles in 2003-04 and was an assistant with the AHL’s Peoria Rivermen from 2005-09. Before joining the coaching ranks, Thompson enjoyed a 14-year professional playing career from 1991-2005, which included 121 NHL games and more than 900 professional contests. The Calgary, AB native was originally drafted by the Los Angeles Kings in the second round (39th overall) of the 1989 NHL Entry Draft. -

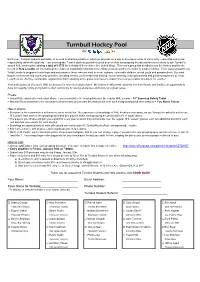

Turnbull Hockey Pool For

Turnbull Hockey Pool for

Each year, Turnbull students participate in several fundraising initiatives, which we promote as a way to develop a sense of community, leadership and social responsibility within the students. Last year's grade 7 and 8 students put forth a great deal of effort campaigning friends and family members to join Turnbull's annual NHL hockey pool, raising a total of $1750 for a charity of their choice (the United Way). This year's group has decided to run the hockey pool for the benefit of Help Lesotho, an international development organization working in the AIDS-ravaged country of Lesotho in southern Africa.

From www.helplesotho.org: "Help Lesotho’s programs foster hope and motivation in those who are most in need: orphans, vulnerable children, at-risk youth and grandmothers. Our work targets root causes and community priorities, including literacy, youth leadership training, school twinning, child sponsorship and gender programming. Help Lesotho is an effective, sustainable organization that is working at the grass-roots level to support the next generation of leaders in Lesotho."

Your participation in this year's NHL hockey pool is very much appreciated. We believe it will provide students and their friends and families an opportunity to have fun together while giving back to their community by raising awareness and funds for a great cause.

Prizes:
> Grand Prize awarded to contestant whose team accumulates the most points over the regular NHL season = 10" Samsung Galaxy Tablet
> Monthly Prizes awarded to the contestants whose teams accumulate the most points over each designated period (see website) = Two Movie Passes

How it Works:
> Everyone in the community is welcome to join in on the fun. -

By Vicky Waltz Photographs by Asia Kepka

By Vicky Waltz Photographs by Asia Kepka Winners By now, the Terrier icemen’s 2009 national championship victory is the stuff of legend. Down by two goals with less than a minute left in the April 11 NCAA finals in Washington, D.C., they not only put the puck into the net twice more to tie, but scored in sudden-death overtime to bring the championship trophy back to Comm. Ave. for the first time since 1995. (See story on page 9.) The come-from-behind triumph against Ohio’s Miami University set a new school record for wins in a season - thirty-five, the most by any team since Michigan in the 1996–1997 season - and helped to send Matt Gilroy (MET’09), John McCarthy (CAS’09), and Colin Wilson (CAS’11) to the National Hockey League. But men’s hockey wasn’t the only Terrier team to score big this year. The women’s basketball team capped off its best season in BU history with an undefeated America East Conference season, the men’s and women’s soccer teams captured the America East regular season and tournament, and women’s lacrosse and softball both took home America East titles - the fifth in a row for BU’s lacrosse players. Wait, there’s more: runners from the men’s and women’s track and field and cross-country teams and two members of the men’s swimming and diving team competed at the NCAA championships in their sport, and two Terrier women - basketball forward Jesyka Burks-Wiley (CAS’09) and lacrosse midfielder Sarah Dalton (CGS’07, CAS’09) - were named the America East Player of the Year for their sports. -

2009-2010 Colorado Avalanche Media Guide

Upgrade your speed. Available only in select areas. Choice of connection speeds:
• For always-on Internet households, wide-load data transfers and multi-HD video downloads.
• For HD movies, video chat, content sharing and frequent multi-tasking.
• For real-time movie streaming, gaming and fast music downloads.
• For basic Internet browsing, shopping and e-mail.
qwest.com/avs

Qwest Connect: Service not available in all areas. Connection speeds are based on sync rates. Download speeds will be up to 15% lower due to network requirements and may vary for reasons such as customer location, websites accessed, Internet congestion and customer equipment. Fiber-optics exists from the neighborhood terminal to the Internet. Speed tiers of 7 Mbps and lower are provided over fiber optics in selected areas only. Requires compatible modem. Subject to additional restrictions and subscriber agreement. All trademarks are the property of their respective owners. Copyright © 2009 Qwest. All Rights Reserved.

TABLE OF CONTENTS
Joe Sakic, 2-3
Avalanche Directory, 4
GM’s, Coaches
FRANCHISE RECORD BOOK
All-Time Record, 134-135 -

Tarvaris Jackson Can't Get Hit 12 Times This Week If He's Going to Make It Through the Game

Tarvaris Jackson can't get hit 12 times this week if he's going to make it through the game. (AP Photo/Elaine Thompson) (AP) Marshawn Lynch has struggled to find room to run this season. (AP Photo/Elaine Thompson) (ASSOCIATED PRESS) Tony Romo wasn't great last week against the Eagles. (AP Photo/Michael Perez) (AP) DeMarcus Ware is one of the most dangerous pass rushers in the NFL. (AP Photo/Marcio Jose Sanchez, File) (ASSOCIATED PRESS) Miles Austin was quiet last week, but the Seahawks will have their hands full with him on Sunday. (AP Photo/Sharon Ellman) (AP)

Seahawks at Cowboys: 5 things to watch

WHAT: Seattle Seahawks (2-5) at Dallas Cowboys (3-4) (Week 9)
WHEN: 10 a.m. PT Sunday
WHERE: Cowboys Stadium, Dallas, Texas
TV/RADIO: FOX (channel 13 in Seattle) / 710 AM, 97.3 FM

What to watch for

1. Protect the pectoral

Tarvaris Jackson is likely to play Sunday, so expect him to start at quarterback for the Seahawks. But don’t be too surprised if he can’t withstand the same volume and ferocity of hits we’ve become accustomed to seeing. Coach Pete Carroll indicated Friday that while Jackson is healthy enough to play, he’s still a ways from being back to where he was pre-injury. “He does not feel great,” Carroll said Friday. “He’s barely making it through practice.” Of course, “barely” making it is still making it, and with the way Charlie Whitehurst has performed this season, it’s likely that the Seahawks will do whatever they can to keep Jackson on the field.