Chapter 22 Tensor Algebras, Symmetric Algebras and Exterior

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

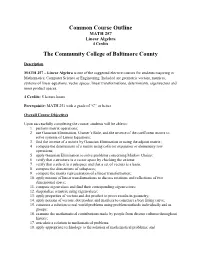

Common Course Outline MATH 257 Linear Algebra 4 Credits

Common Course Outline MATH 257 Linear Algebra 4 Credits The Community College of Baltimore County Description MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces. 4 Credits: 5 lecture hours Prerequisite: MATH 251 with a grade of “C” or better Overall Course Objectives Upon successfully completing the course, students will be able to: 1. perform matrix operations; 2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations; 3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix; 4. compute the determinant of a matrix using cofactor expansion or elementary row operations; 5. apply Gaussian Elimination to solve problems concerning Markov Chains; 6. verify that a structure is a vector space by checking the axioms; 7. verify that a subset is a subspace and that a set of vectors is a basis; 8. compute the dimensions of subspaces; 9. compute the matrix representation of a linear transformation; 10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space; 11. compute eigenvalues and find their corresponding eigenvectors; 12. diagonalize a matrix using eigenvalues; 13. apply properties of vectors and dot product to prove results in geometry; 14. apply notions of vectors, dot product and matrices to construct a best fitting curve; 15. construct a solution to real world problems using problem methods individually and in groups; 16. -

Package 'Einsum'

Package ‘einsum’ May 15, 2021 Type Package Title Einstein Summation Version 0.1.0 Description The summation notation suggested by Einstein (1916) <doi:10.1002/andp.19163540702> is a concise mathematical notation that implicitly sums over repeated indices of n-dimensional arrays. Many ordinary matrix operations (e.g. transpose, matrix multiplication, scalar product, 'diag()', trace etc.) can be written using Einstein notation. The notation is particularly convenient for expressing operations on arrays with more than two dimensions because the respective operators ('tensor products') might not have a standardized name. License MIT + file LICENSE Encoding UTF-8 SystemRequirements C++11 Suggests testthat, covr RdMacros mathjaxr RoxygenNote 7.1.1 LinkingTo Rcpp Imports Rcpp, glue, mathjaxr R topics documented: einsum, einsum_package. einsum: Einstein Summation. Description: Einstein summation is a convenient and concise notation for operations on n-dimensional arrays. Usage: einsum(equation_string, ...) einsum_generator(equation_string, compile_function = TRUE) Arguments: equation_string: a string in Einstein notation where arrays are separated by ’,’ and the result is separated by ’->’. For example "ij,jk->ik" corresponds to a standard matrix multiplication. Whitespace inside the equation_string is ignored. Unlike the equivalent functions in Python, einsum() only supports the explicit mode. This means that the equation_string must contain ’->’. ...: the arrays that are combined. All arguments are converted to arrays with -
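
To make the explicit-mode notation described above concrete, here is a minimal sketch in pure Python. It is not the R package's implementation: the function name `einsum` is reused only for illustration, the helpers `shape_of` and `get` are hypothetical, and arrays are assumed to be rectangular nested lists.

```python
from itertools import product


def shape_of(a):
    """Shape of a rectangular nested list (assumed non-empty at every level)."""
    shape = []
    while isinstance(a, list):
        shape.append(len(a))
        a = a[0]
    return tuple(shape)


def get(a, idx):
    """Read the entry of nested list `a` at the multi-index `idx`."""
    for i in idx:
        a = a[i]
    return a


def einsum(equation, *arrays):
    """Illustrative explicit-mode Einstein summation over nested lists."""
    equation = equation.replace(" ", "")  # whitespace is ignored
    if "->" not in equation:
        raise ValueError("explicit mode only: the equation must contain '->'")
    lhs, out = equation.split("->")
    terms = lhs.split(",")
    if len(terms) != len(arrays):
        raise ValueError("number of index groups must match number of arrays")

    # Record the extent of every index label and check consistency.
    sizes = {}
    for term, arr in zip(terms, arrays):
        shp = shape_of(arr)
        if len(shp) != len(term):
            raise ValueError("index group does not match array rank")
        for label, n in zip(term, shp):
            if sizes.setdefault(label, n) != n:
                raise ValueError("inconsistent size for index " + label)

    # Labels that do not appear in the output are summed over.
    summed = [label for label in sizes if label not in out]

    def entry(out_idx):
        env = dict(zip(out, out_idx))
        total = 0
        for s_idx in product(*(range(sizes[l]) for l in summed)):
            env.update(zip(summed, s_idx))
            term_prod = 1
            for term, arr in zip(terms, arrays):
                term_prod *= get(arr, [env[l] for l in term])
            total += term_prod
        return total

    def build(prefix, remaining):
        # Build the nested output list one output index at a time.
        if not remaining:
            return entry(prefix)
        return [build(prefix + (i,), remaining[1:])
                for i in range(sizes[remaining[0]])]

    return build((), out)


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
matmul = einsum("ij,jk->ik", A, B)   # matrix multiplication
transpose = einsum("ij->ji", A)      # transpose
trace = einsum("ii->", A)            # trace (scalar result)
```

The nested loops make the cost of every summed index explicit, which is the main reason to prefer a library routine for anything beyond small examples.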

Equivalence of A-Approximate Continuity for Self-Adjoint

Equivalence of A-Approximate Continuity for Self-Adjoint Expansive Linear Maps. Sz. Gy. Révész$^{a,1}$, A. San Antolín$^{b,*,2}$. $^a$A. Rényi Institute of Mathematics, Hungarian Academy of Sciences, Budapest, P.O.B. 127, 1364 Hungary. $^b$Departamento de Matemáticas, Universidad Autónoma de Madrid, 28049 Madrid, Spain. arXiv:math/0703349v2 [math.CA] 7 Sep 2007. Abstract: Let $A : \mathbb{R}^d \to \mathbb{R}^d$, $d \ge 1$, be an expansive linear map. The notion of A-approximate continuity was recently used to give a characterization of scaling functions in a multiresolution analysis (MRA). The definition of A-approximate continuity at a point x (or, equivalently, the definition of the family of sets having x as point of A-density) depends on the expansive linear map A. The aim of the present paper is to characterize those self-adjoint expansive linear maps $A_1, A_2 : \mathbb{R}^d \to \mathbb{R}^d$ for which the respective concepts of $A_\mu$-approximate continuity ($\mu = 1, 2$) coincide. These we apply to analyze the equivalence among dilation matrices for a construction of systems of MRA. In particular, we give a full description for the equivalence class of the dyadic dilation matrix among all self-adjoint expansive maps. If the so-called “four exponentials conjecture” of algebraic number theory holds true, then a similar full description follows even for general self-adjoint expansive linear maps, too. Key words: A-approximate continuity, multiresolution analysis, point of A-density, self-adjoint expansive linear map. $^1$ Supported in part in the framework of the Hungarian-Spanish Scientific and Technological Governmental Cooperation, Project # E-38/04. -

Lecture Notes in One File

Phys 6124 zipped! World Wide Quest to Tame Math Methods. Predrag Cvitanović, January 2, 2020. Overview: "The career of a young theoretical physicist consists of treating the harmonic oscillator in ever-increasing levels of abstraction." (Sidney Coleman) I am leaving the course notes here, not so much for the notes themselves, which cannot be understood on their own without the (unrecorded) live lectures, but for the hyperlinks to various source texts you might find useful later on in your research. We change the topics covered year to year, in the hope that they reflect better what a graduate student needs to know. This year’s experiment is the two weeks dedicated to data analysis. Let me know whether you would have preferred the more traditional math methods fare, like Bessels and such. If you have taken this course live, you might have noticed a pattern: Every week we start with something obvious that you already know, let mathematics lead us on, and then suddenly end up someplace surprising and highly non-intuitive. And indeed, in the last lecture (that never took place), we turn Coleman on his head, and abandon harmonic potential for the inverted harmonic potential, “spatiotemporal cats” and chaos, arXiv:1912.02940. Contents: 1 Linear algebra: Homework HW1; 1.1 Literature; 1.2 Matrix-valued functions; 1.3 A linear diversion; 1.4 Eigenvalues and eigenvectors; 1.4.1 Yes, but how do you really do it?; References; Exercises. 2 Eigenvalue problems: Homework HW2; 2.1 Normal modes; 2.2 Stable/unstable manifolds -

Notes on Manifolds

Notes on Manifolds. Justin H. Le, Department of Electrical & Computer Engineering, University of Nevada, Las Vegas. [email protected] August 3, 2016. 1 Multilinear maps. A tensor T of order r can be expressed as the tensor product of r vectors: $T = u_1 \otimes u_2 \otimes \cdots \otimes u_r$ (1). We herein fix r = 3 whenever it eases exposition. Recall that a vector $u \in U$ can be expressed as the combination of the basis vectors of U. Transform these basis vectors with a matrix A, and if the resulting vector $u'$ is equivalent to $uA$, then the components of u are said to be covariant. If $u' = A^{-1}u$, i.e., the vector changes inversely with the change of basis, then the components of u are contravariant. By Einstein notation, we index the covariant components of a tensor in subscript and the contravariant components in superscript. Just as the components of a vector u can be indexed by an integer i (as in $u_i$), tensor components can be indexed as $T_{ijk}$. Additionally, as we can view a matrix to be a linear map $M : U \to V$ from one finite-dimensional vector space to another, we can consider a tensor to be a multilinear map $T : V^{*r} \times V^s \to \mathbb{R}$, where $V^s$ denotes the s-th-order Cartesian product of vector space V with itself and likewise for its algebraic dual space $V^*$. In this sense, a tensor maps an ordered sequence of vectors to one of its (scalar) components. Just as a linear map satisfies $M(a_1u_1 + a_2u_2) = a_1M(u_1) + a_2M(u_2)$, we call an r-th-order tensor multilinear if it satisfies $T(u_1, \dots, a_1v_1 + a_2v_2, \dots, u_r) = a_1T(u_1, \dots, v_1, \dots, u_r) + a_2T(u_1, \dots, v_2, \dots, u_r)$ (2) for scalars $a_1$ and $a_2$. -
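
The multilinearity condition (2) can be checked numerically for the case r = 3. The sketch below is an illustration only: it assumes a tensor built as the product of three coordinate vectors as in (1), evaluated as the map $(x, y, z) \mapsto \sum_{ijk} T_{ijk} x_i y_j z_k$, and the helper names `outer3`, `apply3`, and `dot` are hypothetical. Integer entries keep the comparison exact.

```python
def dot(v, w):
    """Ordinary dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(v, w))


def outer3(u1, u2, u3):
    """Components T[i][j][k] = u1[i] * u2[j] * u3[k] of u1 (x) u2 (x) u3."""
    return [[[a * b * c for c in u3] for b in u2] for a in u1]


def apply3(T, x, y, z):
    """Evaluate the trilinear map: sum over i, j, k of T[i][j][k] x[i] y[j] z[k]."""
    return sum(T[i][j][k] * x[i] * y[j] * z[k]
               for i in range(len(x))
               for j in range(len(y))
               for k in range(len(z)))


u1, u2, u3 = [1, 2], [3, 4], [5, 6]
T = outer3(u1, u2, u3)

x, z = [1, -1], [0, 1]
v1, v2 = [2, 0], [1, 3]
a1, a2 = 2, -3

# Left-hand side of (2): a linear combination placed in the second slot.
combo = [a1 * p + a2 * q for p, q in zip(v1, v2)]
lhs = apply3(T, x, combo, z)

# Right-hand side of (2): the same combination taken outside the map.
rhs = a1 * apply3(T, x, v1, z) + a2 * apply3(T, x, v2, z)
```

For a tensor of this product form, the value also factors as a product of three dot products, which gives an independent check on `apply3`.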

Lectures on Pure Spinors and Moment Maps

LECTURES ON PURE SPINORS AND MOMENT MAPS. E. MEINRENKEN. Contents: 1. Introduction; 2. Volume forms on conjugacy classes; 3. Clifford algebras and spinors; 4. Linear Dirac geometry; 5. The Cartan-Dirac structure; 6. Dirac structures; 7. Group-valued moment maps; References. 1. Introduction. This article is an expanded version of notes for my lectures at the summer school on ‘Poisson geometry in mathematics and physics’ at Keio University, Yokohama, June 5–9, 2006. The plan of these lectures was to give an elementary introduction to the theory of Dirac structures, with applications to Lie group valued moment maps. Special emphasis was given to the pure spinor approach to Dirac structures, developed in Alekseev-Xu [7] and Gualtieri [20]. (See [11, 12, 16] for the more standard approach.) The connection to moment maps was made in the work of Bursztyn-Crainic [10]. Parts of these lecture notes are based on a forthcoming joint paper [1] with Anton Alekseev and Henrique Bursztyn. I would like to thank the organizers of the school, Yoshi Maeda and Guiseppe Dito, for the opportunity to deliver these lectures, and for a greatly enjoyable meeting. I also thank Yvette Kosmann-Schwarzbach and the referee for a number of helpful comments. 2. Volume forms on conjugacy classes. We will begin with the following FACT, which at first sight may seem quite unrelated to the theme of these lectures: FACT. Let G be a simply connected semi-simple real Lie group. Then every conjugacy class in G carries a canonical invariant volume form. -

21. Orthonormal Bases

21. Orthonormal Bases. The canonical/standard basis $e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix},\ e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix},\ \dots,\ e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$ has many useful properties. • Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$. • The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$. This is summarized by $e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j \end{cases}$, where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix. Given column vectors v and w, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix. The outer product on the standard basis vectors is interesting. Set $\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$, $\dots$, $\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$. In short, $\Pi_i$ is the diagonal square matrix with a 1 in the ith diagonal position and zeros everywhere else. -
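
The properties listed above are easy to confirm on small cases. The following sketch uses plain Python lists and 0-based indices, so `basis(n, 0)` plays the role of $e_1$; the helper names `basis`, `dot`, and `outer` are illustrative choices, not taken from the source.

```python
def basis(n, i):
    """Standard basis vector of length n with a 1 in position i (0-based)."""
    return [1 if k == i else 0 for k in range(n)]


def dot(v, w):
    """Inner product v^T w."""
    return sum(a * b for a, b in zip(v, w))


def outer(v, w):
    """Outer product v w^T as a list of rows."""
    return [[a * b for b in w] for a in v]


n = 3
e = [basis(n, i) for i in range(n)]

# Kronecker delta: e_i^T e_j is 1 when i == j and 0 otherwise.
gram = [[dot(e[i], e[j]) for j in range(n)] for i in range(n)]

# Pi_i = e_i e_i^T has a single 1 on the diagonal.
projectors = [outer(e[i], e[i]) for i in range(n)]

# Adding all the Pi_i entrywise recovers the identity matrix.
total = [[sum(P[r][c] for P in projectors) for c in range(n)]
         for r in range(n)]
```

Here `gram` is the matrix of all pairwise inner products, so it coincides with the identity matrix, matching the remark that the Kronecker delta gives the entries of the identity.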

What's in a Name? the Matrix As an Introduction to Mathematics

St. John Fisher College Fisher Digital Publications Mathematical and Computing Sciences Faculty/Staff Publications Mathematical and Computing Sciences 9-2008 What's in a Name? The Matrix as an Introduction to Mathematics Kris H. Green St. John Fisher College, [email protected] Follow this and additional works at: https://fisherpub.sjfc.edu/math_facpub Part of the Mathematics Commons How has open access to Fisher Digital Publications benefited you? Publication Information Green, Kris H. (2008). "What's in a Name? The Matrix as an Introduction to Mathematics." Math Horizons 16.1, 18-21. Please note that the Publication Information provides general citation information and may not be appropriate for your discipline. To receive help in creating a citation based on your discipline, please visit http://libguides.sjfc.edu/citations. This document is posted at https://fisherpub.sjfc.edu/math_facpub/12 and is brought to you for free and open access by Fisher Digital Publications at St. John Fisher College. For more information, please contact [email protected]. What's in a Name? The Matrix as an Introduction to Mathematics Abstract In lieu of an abstract, here is the article's first paragraph: In my classes on the nature of scientific thought, I have often used the movie The Matrix to illustrate the nature of evidence and how it shapes the reality we perceive (or think we perceive). As a mathematician, I usually field questions related to the movie whenever the subject of linear algebra arises, since this field is the study of matrices and their properties. So it is natural to ask, why does the movie title reference a mathematical object? Disciplines Mathematics Comments Article copyright 2008 by Math Horizons. -

Multivector Differentiation and Linear Algebra 17th Santaló

Multivector differentiation and Linear Algebra. 17th Santaló Summer School 2016, Santander. Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge. [email protected], www-sigproc.eng.cam.ac.uk/ s jl 23 August 2016. Overview: The Multivector Derivative. Examples of differentiation wrt multivectors. Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. Functional Differentiation: very briefly... Summary. The Multivector Derivative: Recall our definition of the directional derivative in the a direction, $a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}$. We now want to generalise this idea to enable us to find the derivative of F(X), in the A ‘direction’, where X is a general mixed grade multivector (so F(X) is a general multivector valued function of X). Let us use ∗ to denote taking the scalar part, ie $P * Q \equiv \langle PQ \rangle$. Then, provided A has same grades as X, it makes sense to define: $A * \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}$. -

The Mechanics of the Fermionic and Bosonic Fields: an Introduction to the Standard Model and Particle Physics

The Mechanics of the Fermionic and Bosonic Fields: An Introduction to the Standard Model and Particle Physics. Evan McCarthy. Phys. 460: Seminar in Physics, Spring 2014. Aug. 27, 2014. 1. Introduction 2. The Standard Model of Particle Physics 2.1. The Standard Model Lagrangian 2.2. Gauge Invariance 3. Mechanics of the Fermionic Field 3.1. Fermi-Dirac Statistics 3.2. Fermion Spinor Field 4. Mechanics of the Bosonic Field 4.1. Spin-Statistics Theorem 4.2. Bose Einstein Statistics 5. Conclusion. 1. Introduction. While Quantum Field Theory (QFT) is a remarkably successful tool of quantum particle physics, it is not used as a strictly predictive model. Rather, it is used as a framework within which predictive models - such as the Standard Model of particle physics (SM) - may operate. The overarching success of QFT lends it the ability to mathematically unify three of the four forces of nature, namely, the strong and weak nuclear forces, and electromagnetism. Recently substantiated further by the prediction and discovery of the Higgs boson, the SM has proven to be an extraordinarily proficient predictive model for all the subatomic particles and forces. The question remains, what is to be done with gravity - the fourth force of nature? Within the framework of QFT theoreticians have predicted the existence of yet another boson called the graviton. For this reason QFT has a very attractive allure, despite its limitations. According to QFT the gravitational force is attributed to the interaction between two gravitons, however when applying the equations of General Relativity (GR) the force between two gravitons becomes infinite! Results like this are nonsensical and must be resolved for the theory to stand. -

Introduction to Quantum Field Theory

Utrecht Lecture Notes, Masters Program Theoretical Physics, September 2006. INTRODUCTION TO QUANTUM FIELD THEORY by B. de Wit, Institute for Theoretical Physics, Utrecht University. Contents: 1 Introduction. 2 Path integrals and quantum mechanics. 3 The classical limit. 4 Continuous systems. 5 Field theory. 6 Correlation functions. 6.1 Harmonic oscillator correlation functions; operators. 6.2 Harmonic oscillator correlation functions; path integrals. 6.2.1 Evaluating $G_0$. 6.2.2 The integral over $q_n$. 6.2.3 The integrals over $q_1$ and $q_2$. 6.3 Conclusion. 7 Euclidean Theory. 8 Tunneling and instantons. 8.1 The double-well potential. 8.2 The periodic potential. 9 Perturbation theory. 10 More on Feynman diagrams. 11 Fermionic harmonic oscillator states. 12 Anticommuting c-numbers. 13 Phase space with commuting and anticommuting coordinates and quantization. 14 Path integrals for fermions. 15 Feynman diagrams for fermions. 16 Regularization and renormalization. 17 Further reading. 1 Introduction. Physical systems that involve an infinite number of degrees of freedom are usually described by some sort of field theory. Almost all systems in nature involve an extremely large number of degrees of freedom. A droplet of water contains of the order of $10^{26}$ molecules and while each water molecule can in many applications be described as a point particle, each molecule has itself a complicated structure which reveals itself at molecular length scales. To deal with this large number of degrees of freedom, which for all practical purposes is infinite, we often regard a system as continuous, in spite of the fact that, at certain distance scales, it is discrete. -

28. Exterior Powers

28. Exterior powers. 28.1 Desiderata; 28.2 Definitions, uniqueness, existence; 28.3 Some elementary facts; 28.4 Exterior powers $\bigwedge^i f$ of maps; 28.5 Exterior powers of free modules; 28.6 Determinants revisited; 28.7 Minors of matrices; 28.8 Uniqueness in the structure theorem; 28.9 Cartan's lemma; 28.10 Cayley-Hamilton Theorem; 28.11 Worked examples. While many of the arguments here have analogues for tensor products, it is worthwhile to repeat these arguments with the relevant variations, both for practice, and to be sensitive to the differences. 1. Desiderata. Again, we review missing items in our development of linear algebra. We are missing a development of determinants of matrices whose entries may be in commutative rings, rather than fields. We would like an intrinsic definition of determinants of endomorphisms, rather than one that depends upon a choice of coordinates, even if we eventually prove that the determinant is independent of the coordinates. We anticipate that Artin's axiomatization of determinants of matrices should be mirrored in much of what we do here. We want a direct and natural proof of the Cayley-Hamilton theorem. Linear algebra over fields is insufficient, since the introduction of the indeterminate x in the definition of the characteristic polynomial takes us outside the class of vector spaces over fields. We want to give a conceptual proof for the uniqueness part of the structure theorem for finitely-generated modules over principal ideal domains. Multi-linear algebra over fields is surely insufficient for this. 2. Definitions, uniqueness, existence. Let R be a commutative ring with 1.
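
As a small illustration of why determinants make sense over a commutative ring and not only over a field, the sketch below evaluates the Leibniz permutation expansion, which uses only addition and multiplication and never divides. This is a standard formula swapped in for illustration, not the exterior-power construction the chapter develops; the function names `sign` and `det` are hypothetical, and the entries are assumed to be Python integers (any type supporting `+`, `*`, and multiplication by the integers 1 and -1 would do).

```python
from itertools import permutations


def sign(perm):
    """Sign of a permutation, computed by counting inversions."""
    inversions = sum(1
                     for i in range(len(perm))
                     for j in range(i + 1, len(perm))
                     if perm[i] > perm[j])
    return -1 if inversions % 2 else 1


def det(m):
    """Division-free determinant of a square matrix via the Leibniz expansion."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        term = sign(perm)
        for row, col in enumerate(perm):
            term = term * m[row][col]
        total = total + term
    return total


d2 = det([[1, 2], [3, 4]])
d2_swapped = det([[3, 4], [1, 2]])   # same rows, exchanged
d3 = det([[2, 0, 1], [1, 3, 2], [1, 1, 1]])
```

The expansion has n! terms, so it is only practical for small matrices. Exchanging two rows flips the sign of the result, which is the alternating behaviour that exterior powers are designed to capture.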