Machine Learning

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

UCL Council Member Handbook

COUNCIL MEMBER HANDBOOK Last updated August 2021 Table of Contents: Preface; 1. A brief history of UCL; 2. Council’s Powers and Responsibilities; 3. The Role of the Office for Students; 4. How Council Operates; 5. Council Membership; 6. UCL Council Members’ Role; 7. Duties and Responsibilities of Council Members; 8. The Chair of Council’s Role and Responsibilities; 9. Roles and Responsibilities of the Secretary to Council; 10. Confidentiality; 11. Personal Liability of Council Members -

Undergraduate Prospectus 2021 Entry

Undergraduate 2021 Entry Prospectus Image captions p15 p30–31 p44 p56–57 – The Marmor Homericum, located in the – Bornean orangutan. Courtesy of USO – UCL alumnus, Christopher Nolan. Courtesy – Students collecting beetles to quantify – Students create a bespoke programme South Cloisters of the Wilkins Building, depicts Homer reciting the Iliad to the – Saltburn Mine water treatment scheme. of Kirsten Holst their dispersion on a beach at Atlanterra, incorporating both arts and science and credits accompaniment of a lyre. Courtesy Courtesy of Onya McCausland – Recent graduates celebrating at their Spain with a European mantis, Mantis subjects. Courtesy of Mat Wright religiosa, in the foreground. Courtesy of Mat Wright – Community mappers holding the drone that graduation ceremony. Courtesy of John – There are a number of study spaces of UCL Life Sciences Front cover captured the point clouds and aerial images Moloney Photography on campus, including the JBS Haldane p71 – Students in a UCL laboratory. Study Hub. Courtesy of Mat Wright – UCL Portico. Courtesy of Matt Clayton of their settlements on the peripheral slopes – Students in a Hungarian language class p32–33 Courtesy of Mat Wright of José Carlos Mariátegui in Lima, Peru. – The Arts and Sciences Common Room – one of ten languages taught by the UCL Inside front cover Courtesy of Rita Lambert – Our Student Ambassador team help out in Malet Place. The mural on the wall is p45 School of Slavonic and East European at events like Open Days and Graduation. a commissioned illustration for the UCL St Paul’s River – Aerial photograph showing UCL’s location – Prosthetic hand. Courtesy of UCL Studies. -

Document Title

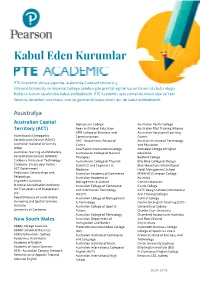

Accepting Institutions PTE Academic is accepted worldwide by thousands of institutions, including prestigious educational institutions such as Stanford University, Harvard University and Imperial College London. PTE Academic is also accepted by the governments of Australia and New Zealand for visa and immigration applications. Australia Australian Capital Alphacrucis College Australian Pacific College Territory (ACT) Apex Institute of Education Australian Pilot Training Alliance APM College of Business and Australian Vocational Learning Australasian Osteopathic Communication Centre Accreditation Council (AOAC) ARC - Accountants Resource Australis Institute of Technology Australian National University Centre and Education (ANU) Asia Pacific International College Avondale College of Higher Australian Nursing and Midwifery Australasian College of Natural Education Accreditation Council (ANMAC) Therapies Bedford College Canberra Institute of Technology Australasian College of Physical Billy Blue College of Design Canberra. Create your future - Scientists and Engineers in Blue Mountains International ACT Government Medicine Hotel Management School Endeavour Scholarships and Australian Academy of Commerce (BMIHMS) Campion College Fellowships Australian Academy of Australia Engineers Australia Management & Science Carrick Education National Accreditation Authority Australian College of Commerce Castle College for Translators and Interpreters and Information Technology CATC Design School (Commercial Ltd (ACCIT) Arts Training -

New Research Offers Hope for Parents of Picky Eaters

UCL/Weight Concern Press Release Embargo: 00.01 hours Eastern Standard Time/05.00 hours British Summer Time, Monday 30 September 2013 NEW RESEARCH OFFERS HOPE FOR PARENTS OF PICKY EATERS An intervention developed by UCL psychologists significantly increases consumption of fruit and vegetables commonly disliked among picky young children, new research has found. The research, published in the Journal of the Academy of Nutrition and Dietetics, showed that in a randomised controlled trial involving 450 young children, a new method of taste exposure significantly increased the proportion of children willing to try new foods and to continue eating them. In the trial of the method with over 200 families, toddlers showed a 61% increase in their liking of a specific vegetable, and the amount of the vegetable they were willing to eat trebled. A new pack called Tiny Tastes - based on this method - has been developed by UCL in partnership with Weight Concern, a charitable organisation set up by a group of academics and clinicians to combat the epidemic of obesity sweeping the UK by supporting and empowering people to live a healthy lifestyle. Mealtimes can frequently be a battle-ground for many parents, with up to 40 per cent of toddlers becoming picky eaters at some point during childhood, with more than a quarter refusing food every day. In addition, despite the importance of fruit and vegetables in a healthy diet, only 20 per cent of children actually eat the recommended five portions a day, according to the 2010 Health Survey for England. Vegetables are among children’s most disliked foods, and vegetable intake consistently falls short of dietary guidelines. -

Management/Analysis Tools for Reviews • James Thomas, EPPI-Centre • Ethan Balk, Brown University • Nancy Owens, Covidence • Martin Morris, McGill Library

Management/Analysis Tools for Reviews • James Thomas, EPPI-Centre • Ethan Balk, Brown University • Nancy Owens, Covidence • Martin Morris, McGill Library KTDRR and Campbell Collaboration Research Evidence Training Session 3: April 17, 2019 Copyright © 2018 American Institutes for Research (AIR). All rights reserved. No part of this presentation may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from AIR. Submit copyright permissions requests to the AIR Publications Copyright and Permissions Help Desk at [email protected]. Users may need to secure additional permissions from copyright holders whose work AIR included after obtaining permission as noted to reproduce or adapt materials for this presentation. Agenda 3:00 – 3:05: Introduction 3:05 – 3:25: EPPI-Reviewer, James Thomas 3:25 – 3:45: Abstrackr, Ethan Balk 3:45 – 4:05: Covidence, Nancy Owens 4:05 – 4:25: Rayyan, Martin Morris 4:25 – 4:30: Wrap-up, Evaluation 2 A brief introduction to EPPI-Reviewer James Thomas KTDRR and Campbell Collaboration Research Evidence Training: Management/Analysis Tools for Reviews April 17 2019 James Thomas – [email protected] Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) Social Science Research Unit UCL Institute of Education University College London 3 Outline • A very brief history of EPPI-Reviewer • The design principles of EPPI-Reviewer • Outline of the structure -

Review 2011 1 Research

LONDON’S GLOBAL UNIVERSITY Review 2011 Highlights 2011 Walking on Mars © Angeliki Kapoglou Over summer 2011, UCL Communications held a The winning entry was by Angeliki Kapoglou (UCL Space photography competition, open to all students, calling for & Climate Physics), who was selected to serve as a member images that demonstrated how UCL students contribute of an international crew on the Mars Desert Research Station, to society as global citizens. The term ‘education for global which simulates the Mars environment in the Utah desert. citizenship’ encapsulates all that UCL does to enable Researchers at the station work to develop key knowledge students to respond to the intellectual, social and personal needed to prepare for the human exploration of Mars. challenges that they will encounter throughout their future careers and lives. The runners-up and other images of UCL life can be seen at: www.flickr.com/uclnews Contents Research 2 Follow UCL news www.ucl.ac.uk Health 5 Insights: a fortnightly email summary Global 8 of news, comment and events: www.ucl.ac.uk/news/insights Teaching & Learning 11 Events calendar: Enterprise 14 www.events.ucl.ac.uk Highlights 2011 17 Twitter: @uclnews UCL Council White Paper 2011–2021 YouTube: UCLTV Community 21 In images: www.flickr.com/uclnews Finance & Investment 25 SoundCloud: Awards & Appointments 30 www.soundcloud.com/uclsound iTunes U: People 36 http://itunes.ucl.ac.uk Leadership 37 UCL – London’s Global University Our vision Our values • An outstanding institution, recognised as one of the world’s -

Simcha Jong Leiden University, Science Based Business, Faculty of Science Snellius Building, Niels Bohrweg 1, 2333 CA Leiden, Room 102 [email protected]

Simcha Jong Leiden University, Science Based Business, Faculty of Science Snellius Building, Niels Bohrweg 1, 2333 CA Leiden, Room 102 [email protected] ACADEMIC APPOINTMENTS LEIDEN UNIVERSITY, The Netherlands 2016-present Professor and Director, Science Based Business UNIVERSITY COLLEGE LONDON, United Kingdom 2006-present - Visiting Professor, UCL School of Management (2017-present) - Faculty Chair, UCL Health Care Management Initiative (2014-2016) - University Lecturer in Management Science and Innovation (2006-16) HARVARD UNIVERSITY, Cambridge, MA 2015-2016 - Visiting Assistant Professor in Global Health and Population, TH Chan School of Public Health - Member of Global Health Systems Cluster UNIVERSITY OF CALIFORNIA AT BERKELEY, Berkeley, CA 2004 Visiting scholar, Department of Sociology EDUCATION EUROPEAN UNIVERSITY INSTITUTE, Florence, Italy 2002-2007 PhD in Social and Political Sciences Dissertation title: Scientific Communities and the Birth of New Industries. UNIVERSITY OF CALIFORNIA AT BERKELEY, Berkeley, CA 1999-2000 Exchange student Haas School of Business (1999-2000) UNIVERSITY OF CAMBRIDGE, UK 2001-2002 MPhil in Management Studies (distinction level) UNIVERSITY COLLEGE, UNIVERSITY OF UTRECHT 1998-2001 BA in Social Sciences (cum laude) UNIVERSITY OF AMSTERDAM 1997-1998 ‘Propaedeusis’ degrees in Economics and Law SELECTED PUBLICATIONS Atun, R [et al, including Jong, S], Diabetes in sub-Saharan Africa: from clinical care to health policy, 2017. The Lancet Diabetes & Endocrinology 5(8), 622-67 Lin, R-T, C-K Lin, DC Christiani, I Kawachi, Y Cheng, S Verguet, S Jong, 2017. The impact of the introduction of new recognition criteria for overwork-related cardiovascular and cerebrovascular diseases: a cross-country comparison, Nature Scientific Reports 7, 167. Hassan S., H Huang, K Warren, B Mahdavi, D Smith, S Jong, SS Farid, 2016. -

Postdoctoral Research Fellow London School of Economics and Political Science Department of Management

MISLAV RADIC Postdoctoral Research Fellow London School of Economics and Political Science Department of Management Education 2016-2020 Cass Business School, City, University of London PhD in Management 2015-2016 Cass Business School, City, University of London MRes in Management 2013-2014 Faculty of Economics and Business, University of Zagreb MA in Management (Magna cum Laude) 2009-2013 Faculty of Economics and Business, University of Zagreb BA in Economics Experience 2020-current London School of Economics and Political Science Postdoctoral Research Fellow 2015-current World Economic Forum Global Shaper 2015-2019 Cass Business School, City, University of London PhD Candidate, Teaching and Research Assistant 2014-2019 Faculty of Economics and Business, University of Zagreb Teaching and Research Assistant Dissertation Exploring the Organizational Implications of Privatization Supervisors: Davide Ravasi, Sebastien Mena, & Cliff Oswick Examiners: Michael Smets (University of Oxford) & Daniel Beunza (Cass Business School) Research interests Organizational Change; CSR; Employment Relations; Organizational Identity; Sensemaking; Hybrid Organizing; Privatization; Qualitative Research Method Publications • Glavas, A., & Radic, M. (2019). Corporate Social Responsibility: An Overview from an Organizational and Psychological Perspective. Oxford Research Encyclopedia of Psychology. • Radic, M., Ravasi, D., & Munir, K. (2021). Privatization: Implications of Public vs Private Ownership. Journal of Management, 47(6), 1596-1629. Working -

Sessions for Sunday, May 05 343

Sessions for Sunday, May 05 Sunday, 08:00 AM - 09:30 AM Sunday, 08:00 AM - 09:30 AM, Piscataway Track: Energy Supply Chains Invited Session: Energy Market Coordination and Analytics 343 Chair(s): Kai Pan 093-0367 Data-Driven Predictive Model for Residential Building Energy Optimization Meysam Rabiee, Student, University of Oregon, United States Shaya Sheikh, Assistant Professor, New York Institute of Technology, United States We utilize a hybrid heuristic and data mining technique to develop an energy consumption and feature selection model for residential buildings. We use weather and energy consumption data from several zip codes in Austin, Texas to validate the predictive model. The presented approach can be extended to other climate zones. 093-0784 Death Spiral in Solar Power Markets Fariba Farajbakhsh, Student, University of Texas Dallas, United States Metin Cakanyildirim, Professor, University of Texas Dallas, United States Higher solar power penetration is environmentally desirable, but can increase electricity prices. Having environmental and social responsibilities, regulators face challenges with setting prices. We provide a revenue maximization formulation to reveal the connection (death spiral) between rate increases and solar penetration, and extend this formulation to mitigate price increases. 093-1119 An Analytical Comparison of Electricity Market Designs in the Presence of Renewables Yashar Ghiassi-Farrokhfal, Assistant Professor, Rotterdam School of Management, Netherlands Rodrigo Crisostomo Pereira Belo, Associate Professor, Rotterdam School of Management, Netherlands Mohammad-Reza Hesamzadeh, Associate Professor, Royal Institute of Technology (Kth), Sweden Existing electricity markets are not designed to handle large scale unpredictability. Hence, their efficiency might extensively deteriorate as more solar/wind power is introduced. -

Teaching and Learning in Higher Education Ed

Teaching and Learning in Higher Education, ed. Jason P. Davies and Norbert Pachler. ‘… an admirable testament to UCL’s ambition to foster innovative, evidence-based and thoughtful approaches to teaching and learning. There is much to learn from here.’— Professor Karen O’Brien, Head of the Humanities Division, University of Oxford ‘Research and teaching’ is a typical response to the question, ‘What are universities for?’ For most people, one comes to mind more quickly than the other. Most undergraduate students will think of teaching, while PhD students will think of research. University staff will have similarly varied reactions depending on their roles. Emphasis on one or the other has also changed over time according to governmental incentives and pressure. For some decades, higher education has been bringing the two closer together, to the point of them overlapping, by treating students as partners and finding ways of having them learn through undertaking research. Drawing on a range of examples from across the disciplines, this collection demonstrates how one research-rich university, University College London (UCL), has set up initiatives to raise the profile of teaching and give it parity with research. It explains what staff and students have done to create an environment in which students can learn by discovery, through research-based education. ‘… an exemplary text of its kind, offering much to dwell on to all interested in advancing university education.’— Ronald Barnett, Emeritus Professor of Higher Education, University College London Institute of Education Dr Jason P. Davies is a Senior Teaching Fellow at the UCL Arena Centre. -

London's Global University

LONDON’S GLOBAL UNIVERSITY ACADEMIC BOARD Wednesday 13th May 2015 MINUTES PRESENT: President and Provost (Chair) Ms Wendy Appleby, Ms Nicola Arnold, Dr Simon Banks, Mr Francis Brako, Dame Nicola Brewer, Ms Annabel Brown, Ms Jane Burns, Professor Stephen Caddick, Dr Ben Campkin, Ms Sue Chick, Professor Lucie Clapp, Ms Charlotte Croffie, Professor Izzat Darwazeh, Professor Julio D. Davila, Ms Eleanor Day, Dr Sally Day, Dr Rachele De Felice, Dr Rosaline Duhs, Dr Melanie Ehren, Dr Caroline Essex, Professor Susan Evans, Ms Ava Fatah gen. Schieck, Dr Martin Fry, Professor Mary Fulbrook, Mr Philip Gardner, Dr Hugh Goodacre, Dr Lesley Gourlay, Dr George Grimble, Dr Paul Groves, Professor Valerie Hazan, Professor Jenny Head, Professor Michael Heinrich, Dr Evangelos Himonides, Dr John Hurst, Ms Liz Jones, Ms Lina Kamenova, Mr Rex Knight, Mr Lukmaan Kolia, Professor Susanne Kord, Professor Jan Kubik, Dr Sarabajaya Kumar, Dr Andrew Logsdail, Dr Helga Lúthersdóttir, Professor Raymond MacAllister, Professor Sandy MacRobert, Dr Merle Mahon, Professor Genise Manuwald, Dr Lilijana Marjanovic-Halburd, Professor Charles Marson, Dr Helen Matthews, Ms Fiona McClement, Dr Saladin Meckled-Garcia, Dr Jenny Mindell, Dr John Mitchell, Dr Richard Mole, Dr Caroline Newton, Professor Martin Oliver, Professor Norbert Pachler, Professor Alan Penn, Dr Brent Pilkey, Mr Mike Rowson, Professor Tom Salt, Professor Elizabeth Shepherd, Professor Richard R Simons, Professor Lucia Sivilotti, Dr Hazel Smith, Dr Fiona Strawbridge, Professor Stephen Smith, Professor Sacha Stern, Ms Emanuela Tilley, Mr Simon To, Professor Derek Tocher, Professor Jonathan Wolff, Professor Steve Wood. In attendance: Ms Clare Goudy, Mr Dominique Fourniol (Media), Dr Patty Kostkova, Mr Derfel Owen (Secretary), Ms Chandan Shah.