DCIG Analysts Will Speak on Ransomware and Cloud File Services at Nasuni Cloudbound2020

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Nasuni Cloud File Services™ the Modern, Cloud-Based Platform to Store, Protect, Synchronize, and Collaborate on Files Across All Locations at Scale

Data Sheet: Nasuni Cloud File Services™. The modern, cloud-based platform to store, protect, synchronize, and collaborate on files across all locations at scale.

Solution Overview

Nasuni® Cloud File Services™ enables organizations to store, protect, synchronize and collaborate on files across all locations at scale. Licensed as stand-alone or bundled services, the Nasuni platform modernizes primary and archive file storage, file backup, and disaster recovery, while offering advanced capabilities for multi-site file synchronization. It is also the first platform that automatically reduces costs as files age so data never has to be migrated again.

With Nasuni, IT gains a more scalable, affordable, manageable, and highly available file infrastructure that meets cloud-first objectives and eliminates tedious NAS migrations and forklift upgrades. End users gain limitless capacity for group shares, project directories, and home drives; LAN-speed access to files in any edge location; and fast recovery of files to any point in time.

What is Nasuni?

Nasuni Cloud File Services is a software-defined platform that leverages the low cost, scalability, and durability of Amazon Simple Storage Service (Amazon S3) and Nasuni’s cloud-based UniFS® global file system to transform file storage.

Just like device-based file systems were needed to make disk storage usable for storing files, Nasuni UniFS overcomes obstacles inherent in Amazon S3 related to latency, file retrieval, and organization to make object storage usable for storing files, with key features like:

• Fast access to files in any edge location.
• Legacy application support, without app rewrites.
• Familiar hierarchical folder structure for fast search.
• Unified platform for primary and archive file storage

Nasuni for Business Continuity

+1.857.444.8500 Solution Brief nasuni.com [email protected]

Nasuni for Business Continuity

Modern Cloud File Services Enables Remote Access to Critical File Assets and Remote Administration of File Infrastructure Anytime, Anywhere

A key part of business continuity is maintaining access to critical business content no matter what happens. Files are the fastest-growing business content in almost every industry. Providing remote file access, then, is a first step toward supporting a “Work From Anywhere” model that can sustain business operations through one-off hardware failures, local outages, regional office disasters, or global pandemics.

A remotely accessible file infrastructure that enables remote work must also be remotely manageable. IT departments must be able to provision new file storage capacity, create file shares, map drives, recover data, and more without needing skilled administrators “on the ground” in a datacenter or back office.

Consolidation of Primary File Data in Cloud Storage with Fast, Edge Access

Highly resilient file storage is a fundamental building block for continuous file access. Nasuni stores the “gold copies” of all file data in cloud object storage such as Azure Blob, Amazon S3, or Google Cloud Storage instead of legacy block storage. This approach leverages the superior durability, scalability, and availability of object storage to provision limitless file sharing capacity, at substantially lower cost.

Nasuni Edge Appliances – lightweight virtual machines that cache copies of just the frequently accessed files from cloud storage – can be deployed for as many offices as needed to give workers access

Summary of Nasuni Capabilities for Business Continuity and Remote Work

• Remote File Access Anywhere, Anytime through standard drive mappings or Web browser access
• Object Storage Durability, Scalability, and Economics using Azure, AWS, and Google cloud storage
• Built-in Backup with Better RPO/RTO with continuous file versioning to cloud storage
• Rapid, Low-Cost DR that restores file shares in <15 minutes without

Nasuni for Pharmaceuticals

+1.857.444.8500 Solution Brief nasuni.com [email protected]

Nasuni for Pharmaceuticals

Accelerate Research Collaboration with the Power of the Cloud

Now more than ever, pharmaceutical companies need to collaborate efficiently across research and development centers, manufacturing facilities, and regulatory management centers. Critical research and manufacturing processes generate terabytes of unstructured data that needs to be shared quickly and protected securely.

For IT, this requirement puts a tangible stress on traditionally siloed storage hardware and network bandwidth resources. Supporting unstructured data sharing forces IT to add storage capacity at an unpredictable rate, then bolt on expensive networking capabilities. Yet failing to keep up can impact both business and research progress. Long delays waiting for research files and manufacturing data to

Nasuni Cloud File Storage for Pharmaceuticals

Major pharmaceutical companies have solved their unstructured data storage, sharing, security, and protection challenges with Nasuni.

Immutable Storage in the Cloud

Nasuni enables pharmaceutical companies to consolidate all their file data in cloud object storage (e.g. Microsoft Azure Blob Storage, Amazon S3, Google Cloud Storage) instead of trapping it in silos of traditional, on-premises file servers or Network-Attached Storage (NAS). Free from physical device constraints, the combination of Nasuni and cloud object storage offers limitless, scalable capacity at lower costs than on-premises storage.

• Faster Collaboration between global research and manufacturing locations
• Unlimited Cloud Storage On-Demand in Microsoft Azure, AWS, IBM Cloud, Google Cloud or others eliminates on-premises NAS & file servers
• 50% Cost Savings when compared to traditional NAS & backup solutions
• End-to-End Encryption secures research and manufacturing data

Egnyte + Alberici Case Study

Customer Success

From the Office to the Project Site, How Alberici Uses Egnyte to Keep Teams in Sync

"Egnyte has opened new doors of possibilities where we didn’t have access out in the field previously. The VDC and BIM teams utilize Egnyte to sync huge files offline. They have been our largest proponents of Egnyte." - Ron Borror, Director, IT Infrastructure

AT A GLANCE

• $2B+ Annual Revenue
• 17 Regional Offices
• 100 Average Number of Projects

Introduction

Solving complex challenges on aggressive timelines is in the DNA of Alberici Corporation, a diversified construction company that works in industrial and commercial markets globally. When longtime client General Motors Company called on Alberici for an urgent design-build request to transform an 86,000 square-foot warehouse into an emergency ventilator manufacturing facility during the COVID-19 pandemic, they answered the call. Within just two weeks, the transformed facility produced its first ventilator and, within a month, it was making 500 life-saving devices a day.

Drawing on their more than 100 years of experience successfully completing complex projects like the largest hydroelectric plant on the Ohio River, automotive assembly plants for leading car manufacturers, and SSM Health Saint Louis University Hospital, Alberici was ready to act fast. Engineering News-Record recently ranked Alberici as the 31st-largest construction company in the United States with annual revenues of $2B. Nearly 80 percent of Alberici’s business is generated by repeat clients, a testament to their service and quality and the foundation for consistent growth.

www.egnyte.com | © 2020 by Egnyte Inc. All rights reserved.

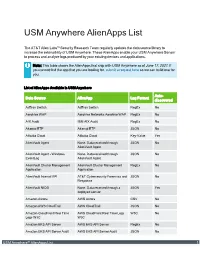

USM Anywhere Alienapps List

USM Anywhere AlienApps List The AT&T Alien Labs™ Security Research Team regularly updates the data source library to increase the extensibility of USM Anywhere. These AlienApps enable your USM Anywhere Sensor to process and analyze logs produced by your existing devices and applications. Note: This table shows the AlienApps that ship with USM Anywhere as of June 17, 2021. If you cannot find the app that you are looking for, submit a request here so we can build one for you. List of AlienApps Available in USM Anywhere Auto- Data Source AlienApp Log Format discovered AdTran Switch AdTran Switch RegEx No Aerohive WAP Aerohive Networks Aerohive WAP RegEx No AIX Audit IBM AIX Audit RegEx No Akamai ETP Akamai ETP JSON No Alibaba Cloud Alibaba Cloud Key-Value Yes AlienVault Agent None. Data received through JSON No AlienVault Agent AlienVault Agent - Windows None. Data received through JSON No EventLog AlienVault Agent AlienVault Cluster Management AlienVault Cluster Management RegEx No Application Application AlienVault Internal API AT&T Cybersecurity Forensics and JSON No Response AlienVault NIDS None. Data received through a JSON Yes deployed sensor Amazon Aurora AWS Aurora CSV No Amazon AWS CloudTrail AWS CloudTrail JSON No Amazon CloudFront Real Time AWS CloudFront Real Time Logs W3C No Logs W3C W3C Amazon EKS API Server AWS EKS API Server RegEx No Amazon EKS API Server Audit AWS EKS API Server Audit JSON No USM Anywhere™ AlienApps List 1 List of AlienApps Available in USM Anywhere (Continued) Auto- Data Source AlienApp Log Format discovered -

File Servers: Is It Time to Say Goodbye? Unstructured Data Is the Fastest-Growing Segment of Data in the Data Center

File Servers: Is It Time to Say Goodbye?

Unstructured data is the fastest-growing segment of data in the data center. A significant portion of unstructured data is the data that users create through office productivity and other specialized applications. User data also often represents the bulk of the organization’s intellectual property. Traditionally, user data is stored on either file servers or network-attached storage (NAS) systems, which IT tries to locate solely at a primary data center. Initially, the problem that IT faced with storing unstructured data was keeping up with its growth, which leads to file server or NAS sprawl. Now the problem IT faces in storing file data is that users are no longer located in a single primary headquarters. The distribution of employees exacerbates file server sprawl. It also makes collaboration on the data between locations almost impossible. IT has tried various workarounds like routing everyone to a single server via a virtual private network (VPN), which leads to inconsistent connections and unacceptable performance. Users rebel and implement workarounds like consumer file sync and share, which puts corporate data at risk. Organizations are looking to the cloud for alternatives. Still, most cloud solutions are either attempts to harden file sync and share or are cloud-only NAS implementations that don’t allow for cloud latency.

Nasuni: A File Services Platform Built for the Cloud

Nasuni is a global file system that enables distributed organizations to work together as if they were all in a single office. It leverages the cloud as a primary storage point but integrates on-premises edge appliances, often running as virtual machines, to overcome cloud latency issues.

Nasuni and AWS for Gaming Studios

+1.857.444.8500 Solution Brief nasuni.com [email protected]

Nasuni and AWS for Gaming Studios

Nasuni and AWS Provide the Best Cloud File Solution for the Booming Gaming Industry

The global gaming market is expected to grow to $257B by 2025 from $151B in 2020, pushing game studios to continually strive to enhance the gamer’s experience more rapidly than their competitors by launching new games and extending existing franchises. Complicating their mission is the need to support multiple, diverse consoles and platforms, such as Sony PlayStation, Microsoft Xbox, Nintendo Switch, and Windows PC, along with mobile devices.

While well-known code management tools exist for developers to check in and check out code segments, challenges exist for distributing large ISO game builds or compressed build artifacts quickly and efficiently to remote locations for testing, slowing down the entire development

collaborating on large ‘unstructured’ game concept files, designs, and prototypes requires cumbersome syncing tools, which can slow down the initial ideation process.

Nasuni + AWS Cloud File Storage

Nasuni backed by Amazon S3 replaces on-site file servers that need continual maintenance, backup, and synchronizing to remote locations. Nasuni provides cloud file storage specifically optimized for large, packaged files such as ISO images, compressed archives, and other development distributions along with other unstructured files required to bring a new game to market.

By moving files out of legacy file servers and into a cloud solution, game studios will have infinitely scalable, dynamic cloud file storage to meet the demands of the largest gaming builds and production pipelines, accelerating development and shortening QA cycles.

NASUNI AT A GLANCE

• Unlimited file storage backed by Amazon S3
• Optimized for large game build files
• Near-zero cloud latency for end users
• Global file synchronization and file lock
• Built-in backup with unlimited snapshots
• Extensive file protocol support
• Disaster recovery in minutes

A Review on Cloud Computing Security

Oluyinka. I. Omotosho, International Journal of Computer Science and Mobile Computing, Vol. 8, Issue 9, September 2019, pg. 245-257. Available online at www.ijcsmc.com. A Monthly Journal of Computer Science and Information Technology. ISSN 2320–088X. Impact Factor: 6.199.

A Review on Cloud Computing Security

Oluyinka. I. Omotosho* *Corresponding Author: O. I. Omotosho. Email: [email protected] Department of Computer Science and Engineering, Ladoke Akintola University of Technology, Ogbomoso, Oyo State, Nigeria

I. INTRODUCTION

The term cloud computing is commonly used to describe any work done on a computer, mobile, or other device where the data, and possibly the application being used, reside not on the device but on an unspecified device elsewhere on the Internet. The basic premise of cloud computing is that consumers (individuals, industry, government, academia and so on) pay for IT services from cloud service providers (CSP). Services offered in cloud computing are generally based on three standard models (Infrastructure as a Service, Platform as a Service, and Software as a Service) defined by the National Institute of Standards and Technology (NIST). The cloud exists to resolve the problems of managing stored data: either the required capacity was limited by the infrastructure of the business, or excess capacity led to wasted capital. Apart from major factors such as the initial capital and the service-fix cost, the effort of patching, managing, and upgrading the internal infrastructure is a huge obstacle to a firm's development and mobility.

IBM Software-Defined Storage Guide

Front cover IBM Software-Defined Storage Guide Larry Coyne Joe Dain Eric Forestier Patrizia Guaitani Robert Haas Christopher D. Maestas Antoine Maille Tony Pearson Brian Sherman Christopher Vollmar Redpaper International Technical Support Organization IBM Software-Defined Storage Guide July 2018 REDP-5121-02 Note: Before using this information and the product it supports, read the information in “Notices” on page vii. Third Edition (July 2018) © Copyright International Business Machines Corporation 2016, 2018. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents Notices . vii Trademarks . viii Preface . ix Authors. ix Now you can become a published author, too . xi Comments welcome. xii Stay connected to IBM Redbooks . xii Chapter 1. Why software-defined storage . 1 1.1 Introduction to software-defined architecture . 2 1.2 Introduction to software-defined storage. 3 1.3 Introduction to Cognitive Storage Management . 7 Chapter 2. Software-defined storage. 9 2.1 Introduction to SDS . 10 2.2 SDS overview . 11 2.2.1 SDS supports emerging as well as traditional IT consumption models . 12 2.2.2 Required SDS capabilities . 14 2.2.3 SDS Functions . 15 2.3 SDS Data-access protocols . 16 2.3.1 Block I/O . 16 2.3.2 File I/O . 17 2.3.3 Object Storage . 17 2.4 SDS reference architecture. 17 2.5 Ransomware Considerations . 18 Chapter 3. IBM SDS product offerings . 19 3.1 SDS architecture . 20 3.1.1 SDS control plane . 23 3.1.2 SDS data plane. -

IBM Cloud Object Storage and Nasuni Enterprise File Service Technology Speed Collaboration with Faster, More Flexible Storage and File Sharing

IBM Cloud Solution Brief

IBM Cloud Object Storage and Nasuni enterprise file service technology

Speed collaboration with faster, more flexible storage and file sharing

Video, image, audio, Internet of Things (IoT) data: enterprises face explosive growth in the number and size of files that users need to access, collaborate over and store. Network attached storage (NAS) or storage area network (SAN) arrays and existing backup, disaster recovery (DR), distributed file system and recovery technologies struggle to meet business requirements for speed, agility and always-on availability. As a result, users are turning to third-party enterprise file sync-and-share (EFSS) solutions that can add cost and complexity and make it difficult for IT to enforce security policies.

IBM® Cloud Object Storage provides virtually unlimited scalability and industry-leading availability and reliability to facilitate consistent enterprisewide data flow. Nasuni Corp. provides a cloud-scale EFSS solution that helps you store, protect, access and manage fast-growing unstructured data. Combined, Nasuni innovation and IBM Cloud Object Storage offer a unified file

Benefits

• Consolidates storage and file sync-and-share into one global namespace for accelerated access and virtually unlimited capacity
• Avoids the cost of separate backup and disaster recovery technology
• Transforms workflow and data exchange to encourage greater innovation, efficiency and collaboration
• Helps streamline and simplify administration for both file management and the storage system

On I/O Performance and Cost Efficiency of Cloud Storage: A Client's Perspective

Louisiana State University, LSU Digital Commons. LSU Doctoral Dissertations, Graduate School, 11-4-2019.

On I/O Performance and Cost Efficiency of Cloud Storage: A Client's Perspective. Binbing Hou, Louisiana State University and Agricultural and Mechanical College.

Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Part of the Computer and Systems Architecture Commons, and the Data Storage Systems Commons.

Recommended Citation: Hou, Binbing, "On I/O Performance and Cost Efficiency of Cloud Storage: A Client's Perspective" (2019). LSU Doctoral Dissertations. 5090. https://digitalcommons.lsu.edu/gradschool_dissertations/5090

This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please [email protected].

ON I/O PERFORMANCE AND COST EFFICIENCY OF CLOUD STORAGE: A CLIENT'S PERSPECTIVE. A Dissertation Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Philosophy in The Department of Computer Science and Engineering by Binbing Hou, B.S., Wuhan University of Technology, 2011; M.S., Huazhong University of Science and Technology, 2014. December 2019.

Acknowledgments

At the end of the doctorate program, I would like to express my deepest gratitude to many people. It is their great help and support that make this dissertation possible. First of all, I would like to thank my advisor, Dr. Feng Chen, for his continuous support.

Everything You Need to Know About Object Storage

STORAGE: Managing the Information That Drives the Enterprise. May 2016, Vol. 15, No. 3.

In this issue:

• Editor's Note / Castagna: Data storage growing faster than capacity … for now
• Storage Revolution / Toigo: Hyper-consolidated is the future
• Snapshot 1: Need for more capacity driving most NAS purchases
• Cloud Backup: Tap the cloud to ease backup burdens
• Snapshot 2: Users favor venerable NFS and 10 Gig for new NAS systems
• Hot Spots / Sinclair: When simple storage isn’t so simple
• Hadoop: Big data requires big storage
• Read-Write / Matchett: The sun rises on transformational cloud storage services

Everything you need to know about object storage: Object storage is moving out of the cloud to nudge NAS out of enterprise file storage.

Editor's Letter, Rich Castagna: Data storage growing faster than capacity

Not to be confused with bitcoins, each of these coins (developed at the University of Southampton in the UK) is said to be capable of holding 360 TB of data on a disk that appears to be only a bit larger than a quarter. That’s a lot of data in a small space, and it makes that 32 GB USB flash drive on your keychain seem pretty puny.