Towards Network-Level Efficiency for Cloud Storage Services

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Uila Supported Apps

Uila Supported Applications and Protocols, updated Oct 2020

| Application/Protocol Name | Full Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 1337x.org | Bittorrent tracker search engine |
| 139mail | 139mail is a chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal |
| 24ora.com | Aruban news portal |
| 24sata.hr | Croatian news portal |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) systems. |
| 24ur.com | Slovenian news portal |
| 2ch.net | Japanese adult videos web site |
| 2Shared | 2shared is an online space for sharing and storage. |

Compress/Decompress Encrypt/Decrypt

Windows Compress/Decompress WinZip Standard WinZip Pro Compressed Folders Zip and unzip files instantly with 64-bit, best-in-class software ENHANCED! Compress MP3 files by 15 - 20 % on average Open and extract Zipx, RAR, 7Z, LHA, BZ2, IMG, ISO and all other major compression file formats Open more files types as a Zip, including DOCX, XLSX, PPTX, XPS, ODT, ODS, ODP, ODG,WMZ, WSZ, YFS, XPI, XAP, CRX, EPUB, and C4Z Use the super picker to unzip locally or to the cloud Open CAB, Zip and Zip 2.0 Methods Convert other major compressed file formats to Zip format Apply 'Best Compression' method to maximize efficiency automatically based on file type Reduce JPEG image files by 20 - 25% with no loss of photo quality or data integrity Compress using BZip2, LZMA, PPMD and Enhanced Deflate methods Compress using Zip 2.0 compatible methods 'Auto Open' a zipped Microsoft Office file by simply double-clicking the Zip file icon Employ advanced 'Unzip and Try' functionality to review interrelated components contained within a Zip file (such as an HTML page and its associated graphics). Windows Encrypt/Decrypt WinZip Standard WinZip Pro Compressed Folders Apply encryption and conversion options, including PDF conversion, watermarking and photo resizing, before, during or after creating your zip Apply separate conversion options to individual files in your zip Take advantage of hardware support in certain Intel-based computers for even faster AES encryption Administrative lockdown of encryption methods and password policies Check 'Encrypt' to password protect your files using banking-level encryption and keep them completely secure Secure sensitive data with strong, FIPS-197 certified AES encryption (128- and 256- bit) Auto-wipe ('shred') temporarily extracted copies of encrypted files using the U.S. -

Nasuni Cloud File Services™ the Modern, Cloud-Based Platform to Store, Protect, Synchronize, and Collaborate on Files Across All Locations at Scale

Data Sheet: Nasuni Cloud File Services™

The modern, cloud-based platform to store, protect, synchronize, and collaborate on files across all locations at scale.

Solution Overview

Nasuni® Cloud File Services™ enables organizations to store, protect, synchronize and collaborate on files across all locations at scale. Licensed as stand-alone or bundled services, the Nasuni platform modernizes primary and archive file storage, file backup, and disaster recovery, while offering advanced capabilities for multi-site file synchronization. It is also the first platform that automatically reduces costs as files age so data never has to be migrated again.

With Nasuni, IT gains a more scalable, affordable, manageable, and highly available file infrastructure that meets cloud-first objectives and eliminates tedious NAS migrations and forklift upgrades. End users gain limitless capacity for group shares, project directories, and home drives; LAN-speed access to files in any edge location; and fast recovery of files to any point in time.

What is Nasuni?

Nasuni Cloud File Services is a software-defined platform that leverages the low cost, scalability, and durability of Amazon Simple Storage Service (Amazon S3) and Nasuni’s cloud-based UniFS® global file system to transform file storage.

Just like device-based file systems were needed to make disk storage usable for storing files, Nasuni UniFS overcomes obstacles inherent in Amazon S3 related to latency, file retrieval, and organization to make object storage usable for storing files, with key features like:

• Fast access to files in any edge location.
• Legacy application support, without app rewrites.
• Familiar hierarchical folder structure for fast search.
• Unified platform for primary and archive file storage …

Lock-Free Collaboration Support for Cloud Storage Services With

Lock-Free Collaboration Support for Cloud Storage Services with Operation Inference and Transformation

Jian Chen, Minghao Zhao, and Zhenhua Li, Tsinghua University; Ennan Zhai, Alibaba Group Inc.; Feng Qian, University of Minnesota - Twin Cities; Hongyi Chen, Tsinghua University; Yunhao Liu, Michigan State University & Tsinghua University; Tianyin Xu, University of Illinois Urbana-Champaign

https://www.usenix.org/conference/fast20/presentation/chen

This paper is included in the Proceedings of the 18th USENIX Conference on File and Storage Technologies (FAST ’20), February 25–27, 2020, Santa Clara, CA, USA. 978-1-939133-12-0. Open access to the Proceedings of the 18th USENIX Conference on File and Storage Technologies (FAST ’20) is sponsored by …

Abstract

This paper studies how today’s cloud storage services support collaborative file editing. As a tradeoff for transparency/user-friendliness, they do not ask collaborators to use version control systems but instead implement their own heuristics for handling conflicts, which however often lead to unexpected …

Pattern 1: Losing updates. Alice is editing a file. Suddenly, her file is overwritten by a new version from her collaborator, Bob. Sometimes, Alice can even lose her edits on the older version. [All studied cloud storage services]

Pattern 2: Conflicts despite coordination. Alice coordinates her edits with Bob through emails to avoid conflicts by enforcing a sequential order. [All studied …]

Photos Copied" Box

Our photos have never been as scattered as they are now... Do you know where your photos are? Digital Photo Roundup Checklist www.theswedishorganizer.com Online Storage Edition Let's Play Digital Photo Roundup! Congrats on making the decision to start organizing your digital photos! I know the task can seem daunting, so hopefully this handy checklist will help get you moving in the right direction. LET'S ORGANIZE! To start organizing your digital photos, you must first gather them all into one place, so that you'll be able to sort and edit your collection. Use this checklist to document your family's online storage accounts (i.e. where you have photos saved online), and whether they are copied onto your Master hub (the place where you are saving EVERYTHING). It'll make the gathering process a whole lot easier if you keep a record of what you have already copied and what is still to be done. HERE'S HOW The services in this checklist are categorized, so that you only need to print out what applies to you. If you have an account with the service listed, simply check the "Have Account" box. When you have copied all the photos, check the "Photos Copied" box. Enter your login credentials under the line between the boxes for easy retrieval. If you don't see your favorite service on the list, just add it to one of the blank lines provided after each category. Once you are done, you should find yourself with all your digital images in ONE place, and when you do, check back on the blog for tools to help you with the next step in the organizing process. -

Nasuni for Business Continuity

Solution Brief: Nasuni for Business Continuity

+1.857.444.8500 · nasuni.com · [email protected]

Modern Cloud File Services Enables Remote Access to Critical File Assets and Remote Administration of File Infrastructure Anytime, Anywhere

A key part of business continuity is maintaining access to critical business content no matter what happens. Files are the fastest-growing business content in almost every industry. Providing remote file access, then, is a first step toward supporting a “Work From Anywhere” model that can sustain business operations through one-off hardware failures, local outages, regional office disasters, or global pandemics.

A remotely accessible file infrastructure that enables remote work must also be remotely manageable. IT departments must be able to provision new file storage capacity, create file shares, map drives, recover data, and more without needing skilled administrators “on the ground” in a datacenter or back office.

Consolidation of Primary File Data in Cloud Storage with Fast, Edge Access

Highly resilient file storage is a fundamental building block for continuous file access. Nasuni stores the “gold copies” of all file data in cloud object storage such as Azure Blob, Amazon S3, or Google Cloud Storage instead of legacy block storage. This approach leverages the superior durability, scalability, and availability of object storage to provision limitless file sharing capacity, at substantially lower cost.

Nasuni Edge Appliances – lightweight virtual machines that cache copies of just the frequently accessed files from cloud storage – can be deployed for as many offices as needed to give workers access …

Summary of Nasuni Capabilities for Business Continuity and Remote Work

• Remote File Access Anywhere, Anytime through standard drive mappings or Web browser
• Object Storage Durability, Scalability, and Economics using Azure, AWS, and Google cloud storage
• Built-in Backup with Better RPO/RTO with continuous file versioning to cloud storage
• Rapid, Low-Cost DR that restores file shares in <15 minutes without …

Nasuni for Pharmaceuticals

Solution Brief: Nasuni for Pharmaceuticals

+1.857.444.8500 · nasuni.com · [email protected]

Accelerate Research Collaboration with the Power of the Cloud

Now more than ever, pharmaceutical companies need to collaborate efficiently across research and development centers, manufacturing facilities, and regulatory management centers. Critical research and manufacturing processes generate terabytes of unstructured data that needs to be shared quickly, and protected securely.

For IT, this requirement puts a tangible stress on traditionally siloed storage hardware and network bandwidth resources. Supporting unstructured data sharing forces IT to add storage capacity at an unpredictable rate, then bolt on expensive networking capabilities. Yet failing to keep up can impact both business and research progress. Long delays waiting for research files and manufacturing data to …

Nasuni Cloud File Storage for Pharmaceuticals

Major pharmaceutical companies have solved their unstructured data storage, sharing, security, and protection challenges with Nasuni.

Immutable Storage in the Cloud

Nasuni enables pharmaceutical companies to consolidate all their file data in cloud object storage (e.g. Microsoft Azure Blob Storage, Amazon S3, Google Cloud Storage) instead of trapping it in silos of traditional, on-premises file servers or Network-Attached Storage (NAS). Free from physical device constraints, the combination of Nasuni and cloud object storage offers limitless, scalable capacity at lower costs than on-premises storage.

Key benefits

• Faster Collaboration between global research and manufacturing locations
• Unlimited Cloud Storage On-Demand in Microsoft Azure, AWS, IBM Cloud, Google Cloud or others eliminates on-premises NAS & file servers
• 50% Cost Savings when compared to traditional NAS & backup solutions
• End-to-End Encryption secures research and manufacturing data

Chapter 14 This Presentation Covers

Basic Operating Systems, Chapter 14

This presentation covers:
• Staying Current
• Basic Operating Systems Overview
• Command Prompts

Qualities of a Good Technician

“Soft skills” as they are known across many industries are essential

Staying Current

Benefits of staying current include:
• (1) understanding and troubleshooting the latest technologies
• (2) recommending upgrades or solutions to customers
• (3) saving time troubleshooting (and time is money)
• (4) being someone considered for a promotion

A variety of methods to stay current include:
• Subscribe to a magazine or an online magazine
• Subscribe to a news list that gives you an update in your email
• Join or attend association meetings
• Register for and attend a seminar
• Attend an online webinar
• Take a class
• Read books
• Talk to your department peers and supervisor

Basic Operating Systems Overview

Basic Operating Systems
• Computers require software to operate
• An operating system (OS) is software that coordinates the interaction between hardware and any software applications and the interaction between a user and the computer
• An operating system can be a graphical user interface (GUI) or a command line interface, or both
• It is responsible for handling file and disk management

Popular Operating Systems
• Microsoft Windows
• Apple macOS
• Linux
• Chrome OS
• Android (mobile)
• Apple iOS (mobile)

32- vs 64-bit Operating Systems

Windows 7 Editions

Windows 10 Editions

End-of-Life Concerns
• Serious consequences if an operating system or application has reached its …

Mobile Cloud Storage

Context Awareness. MobileHCI 2014, Sept. 23–26, 2014, Toronto, ON, CA

Mobile Cloud Storage: A Contextual Experience

Karel Vandenbroucke, iMinds-MICT-Ghent University, Ghent, Belgium, [email protected]; Denzil Ferreira*, Jorge Goncalves*, Vassilis Kostakos*, University of Oulu, Oulu, Finland, {dteixeir;jgoncalv;vassilis}@ee.oulu.fi; Katrien De Moor, ITEM-NTNU, Trondheim, Norway, [email protected]

ABSTRACT

In an increasingly connected world, users access personal or shared data, stored “in the cloud” (e.g., Dropbox, Skydrive, iCloud) with multiple devices. Despite the popularity of cloud storage services, little work has focused on investigating cloud storage users’ Quality of Experience (QoE), in particular on mobile devices. Moreover, it is not clear how users’ context might affect QoE. We conducted an online survey with 349 cloud service users to gain insight into their usage and affordances. In a 2-week follow-up study, we monitored mobile cloud service usage on tablets and smartphones, in real-time using a mobile-based Experience Sampling Method (ESM) questionnaire.

… anywhere, at any time and from any device. However, one unyielding disadvantage of these lightweight, mobile devices is their limited storage capacity, which also limits their possible uses. It is also more common to find services and applications that work across different mobile devices and platforms. As a result, the related user behavior and usage patterns have become more complex and a range of problems has emerged. One recurring challenge is to connect multiple devices that have vastly different characteristics and requirements and how to synchronize the stored data across these devices in an effortless way. With recent improvements on network speed, reliability …

Egnyte + Alberici Case Study

Customer Success: From the Office to the Project Site, How Alberici Uses Egnyte to Keep Teams in Sync

"Egnyte has opened new doors of possibilities where we didn’t have access out in the field previously. The VDC and BIM teams utilize Egnyte to sync huge files offline. They have been our largest proponents of Egnyte." (Ron Borror, Director, IT Infrastructure)

AT A GLANCE

• $2B+ Annual Revenue
• 17 Regional Offices
• 100 Average Number of Projects

Introduction

Solving complex challenges on aggressive timelines is in the DNA of Alberici Corporation, a diversified construction company that works in industrial and commercial markets globally.

When longtime client General Motors Company called on Alberici for an urgent design-build request to transform an 86,000 square-foot warehouse into an emergency ventilator manufacturing facility during the COVID-19 pandemic, they answered the call. Within just two weeks, the transformed facility produced its first ventilator and, within a month, it was making 500 life-saving devices a day.

Drawing on their more than 100-years of experience successfully completing complex projects like the largest hydroelectric plant on the Ohio River, automotive assembly plants for leading car manufacturers, and SSM Health Saint Louis University Hospital, Alberici was ready to act fast.

Engineering News-Record recently ranked Alberici as the 31st-largest construction company in the United States with annual revenues of $2B. Nearly 80 percent of Alberici’s business is generated by repeat clients, a testament to their service and quality and the foundation for consistent growth.

www.egnyte.com | © 2020 by Egnyte Inc. All rights reserved.

A Survey on Cloud Storage

1764 JOURNAL OF COMPUTERS, VOL. 6, NO. 8, AUGUST 2011 A Survey on Cloud Storage Jiehui JU1,4 1.School of Information and Electronic Engineering, Zhejiang University of Science and Technology,Hangzhou,China Email: [email protected] Jiyi WU2,3, Jianqing FU3, Zhijie LIN1,3, Jianlin ZHANG2 2. Key Lab of E-Business and Information Security, Hangzhou Normal University, Hangzhou,China 3.School of Computer Science and Technology, Zhejiang University,Hangzhou,China 4.Key Lab of E-business Market Application Technology of Guangdong Province, Guangdong University of Business Studies, Guangzhou 510320, China Email: [email protected]; [email protected]; [email protected]; [email protected] Abstract— Cloud storage is a new concept come into being simultaneously with cloud computing, and can be divided into public cloud storage, private cloud storage and hybrid cloud storage. This article gives a quick introduction to cloud storage. It covers the key technologies in Cloud Computing and Cloud Storage. Google GFS massive data storage system and the popular open source Hadoop HDFS were detailed introduced to analyze the principle of Cloud Storage technology. As an important technology area and research direction, cloud storage is becoming a hot research for both academia and industry session. The future valuable research works were summarized at the end. Index Terms—Cloud Storage, Distributed File System, Research Status, survey I. INTRODUCTION As we all known disk storage is one of the largest expenditure in IT projects. ComputerWorld estimates that storage is responsible for almost 30% of capital expenditures as the average growth of data approaches Figure 1. -

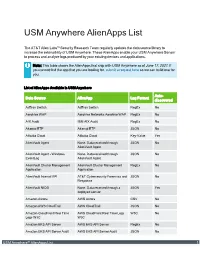

USM Anywhere Alienapps List

USM Anywhere AlienApps List The AT&T Alien Labs™ Security Research Team regularly updates the data source library to increase the extensibility of USM Anywhere. These AlienApps enable your USM Anywhere Sensor to process and analyze logs produced by your existing devices and applications. Note: This table shows the AlienApps that ship with USM Anywhere as of June 17, 2021. If you cannot find the app that you are looking for, submit a request here so we can build one for you. List of AlienApps Available in USM Anywhere Auto- Data Source AlienApp Log Format discovered AdTran Switch AdTran Switch RegEx No Aerohive WAP Aerohive Networks Aerohive WAP RegEx No AIX Audit IBM AIX Audit RegEx No Akamai ETP Akamai ETP JSON No Alibaba Cloud Alibaba Cloud Key-Value Yes AlienVault Agent None. Data received through JSON No AlienVault Agent AlienVault Agent - Windows None. Data received through JSON No EventLog AlienVault Agent AlienVault Cluster Management AlienVault Cluster Management RegEx No Application Application AlienVault Internal API AT&T Cybersecurity Forensics and JSON No Response AlienVault NIDS None. Data received through a JSON Yes deployed sensor Amazon Aurora AWS Aurora CSV No Amazon AWS CloudTrail AWS CloudTrail JSON No Amazon CloudFront Real Time AWS CloudFront Real Time Logs W3C No Logs W3C W3C Amazon EKS API Server AWS EKS API Server RegEx No Amazon EKS API Server Audit AWS EKS API Server Audit JSON No USM Anywhere™ AlienApps List 1 List of AlienApps Available in USM Anywhere (Continued) Auto- Data Source AlienApp Log Format discovered