A Quick Prototyping Tool for Serious Games with Real Time Physics

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

Agx Multiphysics Download

Agx multiphysics download click here to download A patch release of AgX Dynamics is now available for download for all of our licensed customers. This version include some minor. AGX Dynamics is a professional multi-purpose physics engine for simulators, Virtual parallel high performance hybrid equation solvers and novel multi- physics models. Why choose AGX Dynamics? Download AGX product brochure. This video shows a simulation of a wheel loader interacting with a dynamic tree model. High fidelity. AGX Multiphysics is a proprietary real-time physics engine developed by Algoryx Simulation AB Create a book · Download as PDF · Printable version. AgX Multiphysics Toolkit · Age Of Empires III The Asian Dynasties Expansion. Convert trail version Free Download, product key, keygen, Activator com extended. free full download agx multiphysics toolkit from AYS search www.doorway.ru have many downloads related to agx multiphysics toolkit which are hosted on sites like. With AGXUnity, it is possible to incorporate a real physics engine into a well Download from the prebuilt-packages sub-directory in the repository www.doorway.rug: multiphysics. A www.doorway.ru app that runs a physics engine and lets clients download physics data in real Clone or download AgX Multiphysics compiled with Lua support. Agx multiphysics toolkit. Developed physics the was made dynamics multiphysics simulation. Runtime library for AgX MultiPhysics Library. How to repair file. Original file to replace broken file www.doorway.ru Download. Current version: Some short videos that may help starting with AGX-III. Example 1: Finding a possible Pareto front for the Balaban Index in the Missing: multiphysics. -

Rifle Hunting

TABLE OF CONTENTS
Hunting and Outdoor Skills Member Manual

ACKNOWLEDGEMENTS

A. Introduction to Hunting
1. History of Hunting
2. Why We Hunt
3. Hunting Ethics
4. Hunting Laws and Regulations
5. Hunter and Landowner Relations
6. Wildlife Management and the Hunter
7. Careers in Hunting, Shooting Sports and Wildlife Management

B. Types of Hunting
1. Hunting with a Rifle
2. Hunting with a Shotgun
3. Hunting with a Handgun
4. Hunting with a Muzzleloading
5. Bowhunting
6. Hunting with a Camera

C. Outdoor and Hunting Equipment
1. Use of Map and Compass
2. Using a GPS
3. Choosing and Using Binoculars
4. Hunting Clothing
5. Cutting Tools

D. Getting Ready for the Hunt
1. Planning the Hunt
2. The Hunting Camp
3. Firearm Safety for the Hunter
4. Survival in the Outdoors

E. Hunting Skills and Techniques
1. Recovering Game
2. Field Care and Processing of Game
3. Hunting from Stands and Blinds
4. Stalking Game Animals
5. Hunting with Dogs

F. Popular Game Species
1. Hunting Rabbits and Hares
2. Hunting Squirrels
3. Hunting White-tailed Deer
4. Hunting Ring-necked Pheasants
5. Hunting Waterfowl
6. Hunting Wild Turkeys

ACKNOWLEDGEMENTS
The 4-H Shooting Sports Hunting Materials were first put together about 25 years ago. Since that time there have been periodic updates and additions. Some of the authors are known, some are unknown. Some did a great deal of work; some just shared morsels of their expertise. -

Reinforcement Learning for Manipulation of Collections of Objects Using Physical Force fields

Bachelor’s thesis
Czech Technical University in Prague, Faculty of Electrical Engineering (F3), Department of Control Engineering
Reinforcement learning for manipulation of collections of objects using physical force fields
Dominik Hodan
Supervisor: doc. Ing. Zdeněk Hurák, Ph.D.
Field of study: Cybernetics and Robotics
May 2020

BACHELOR’S THESIS ASSIGNMENT

I. Personal and study details
Student's name: Hodan Dominik
Personal ID number: 474587
Faculty / Institute: Faculty of Electrical Engineering
Department / Institute: Department of Control Engineering
Study program: Cybernetics and Robotics

II. Bachelor’s thesis details
Bachelor’s thesis title in English: Reinforcement learning for manipulation of collections of objects using physical force fields
Bachelor’s thesis title in Czech: Posilované učení pro manipulaci se skupinami objektů pomocí fyzikálních silových polí

Guidelines: The goal of the project is to explore the opportunities that the framework of reinforcement learning offers for the task of automatic manipulation of collections of objects using physical force fields. In particular, force fields derived from electric and magnetic fields shaped through planar regular arrays of 'actuators' (microelectrodes, coils) will be considered. At least one of the motion control tasks should be solved:
1. Feedback-controlled distribution shaping. For example, it may be desired that a collection of objects initially concentrated in one part of the work arena is finally distributed uniformly all over the surface.
2. Feedback-controlled mixing, in which collections of objects of two or several types (colors), initially separated, are blended.
3. Feedback-controlled Brownian motion, in which every object in the collection travels (pseudo)randomly all over the surface.

Bibliography / sources: [1] D. -

Learning Physics Through Transduction

Digital Comprehensive Summaries of Uppsala Dissertations from the Faculty of Science and Technology 1977 Learning Physics through Transduction A Social Semiotic Approach TREVOR STANTON VOLKWYN ACTA UNIVERSITATIS UPSALIENSIS ISSN 1651-6214 ISBN 978-91-513-1034-3 UPPSALA urn:nbn:se:uu:diva-421825 2020 Dissertation presented at Uppsala University to be publicly examined in Haggsalen, Ångströmlaboratoriet, Lägerhyddsvägen 1, Uppsala, Monday, 30 November 2020 at 14:00 for the degree of Doctor of Philosophy. The examination will be conducted in English. Faculty examiner: Associate Professor David Brookes (Department of Physics, California State University). Abstract Volkwyn, T. S. 2020. Learning Physics through Transduction. A Social Semiotic Approach. Digital Comprehensive Summaries of Uppsala Dissertations from the Faculty of Science and Technology 1977. 294 pp. Uppsala: Acta Universitatis Upsaliensis. ISBN 978-91-513-1034-3. This doctoral thesis details the introduction of the theoretical distinction between transformation and transduction to Physics Education Research. Transformation refers to the movement of meaning between semiotic resources within the same semiotic system (e.g. between one graph and another), whilst the term transduction refers to the movement of meaning between different semiotic systems (e.g. diagram to graph). A starting point for the thesis was that transductions are potentially more powerful in learning situations than transformations, and because of this transduction became the focus of this thesis. The thesis adopts a social semiotic approach. In its most basic form, social semiotics is the study of how different social groups create and maintain their own specialized forms of meaning making. In physics education then, social semiotics is interested in the range of different representations used in physics, their disciplinary meaning, and how these meanings may be learned. -

Constraint Fluids

IEEE TRANSACTIONS OF VISUALIZATION AND COMPUTER GRAPHICS
Constraint Fluids
Kenneth Bodin, Claude Lacoursière, Martin Servin

Abstract: We present a fluid simulation method based on Smoothed Particle Hydrodynamics (SPH) in which incompressibility and boundary conditions are enforced using holonomic kinematic constraints on the density. This formulation enables systematic multiphysics integration in which interactions are modeled via similar constraints between the fluid pseudo-particles and impenetrable surfaces of other bodies. These conditions embody Archimedes' principle for solids and thus buoyancy results as a direct consequence. We use a variational time stepping scheme suitable for general constrained multibody systems we call SPOOK. Each step requires the solution of only one Mixed Linear Complementarity Problem (MLCP) with very few inequalities, corresponding to solid boundary conditions. We solve this MLCP with a fast iterative method. Overall stability is vastly improved in comparison to

are now widely spread in various fields of science and engineering [11]–[13]. Point and particle methods are simple and versatile, making them attractive for animation purposes. They are also common across computer graphics applications, such as storage and visualization of large-scale data sets from 3D-scans [14], 3D modeling [15], volumetric rendering of medical data such as CT-scans [16], and for voxel based haptic rendering [17], [18]. The lack of explicit connectivity is a strength because it results in simple data structures. But it is also a weakness since the connection to the triangle surface paradigm in computer graphics requires additional computing, and because neighboring particles have to be found. -
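The density that the constraints act on is, in standard SPH, a kernel-weighted sum over neighboring particles. The following is a minimal sketch of that sum only, not the paper's solver: the 2D poly6 kernel, the brute-force neighbor loop, the function names, and the constraint form rho/rho0 - 1 = 0 are all assumptions chosen for illustration.

```python
import math


def poly6_2d(r, h):
    """2D poly6 smoothing kernel (an assumed choice); zero outside radius h."""
    if r >= h:
        return 0.0
    return 4.0 / (math.pi * h ** 8) * (h * h - r * r) ** 3


def sph_density(positions, masses, h):
    """Density at each particle as a kernel-weighted sum over all particles.

    Brute force O(n^2); a real implementation would use a neighbor search.
    """
    densities = []
    for xi, yi in positions:
        rho = 0.0
        for (xj, yj), mj in zip(positions, masses):
            rho += mj * poly6_2d(math.hypot(xi - xj, yi - yj), h)
        densities.append(rho)
    return densities


def density_constraint(rho, rho0):
    """Hypothetical constraint value: zero when the density equals rho0."""
    return rho / rho0 - 1.0


if __name__ == "__main__":
    pts = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5)]
    rho = sph_density(pts, [1.0, 1.0, 1.0], h=1.0)
    print([round(density_constraint(r, rho0=2.0), 3) for r in rho])
```

An incompressibility solver would then drive each constraint value toward zero at every time step; how that is done (here, the MLCP solve the abstract mentions) is outside the scope of this sketch.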

Nanoscience Education

The Molecular Workbench Software: An Innovative Dynamic Modeling Tool for Nanoscience Education Charles Xie and Amy Pallant The Advanced Educational Modeling Laboratory The Concord Consortium, Concord, Massachusetts, USA Introduction Nanoscience and nanotechnology are critically important in the 21st century (National Research Council, 2006; National Science and Technology Council, 2007). This is the field in which major sciences are joining, blending, and integrating (Battelle Memorial Institute & Foresight Nanotech Institute, 2007; Goodsell, 2004). The prospect of nanoscience and nanotechnology in tomorrow’s science and technology has called for transformative changes in science curricula in today’s secondary education (Chang, 2006; Sweeney & Seal, 2008). Nanoscience and nanotechnology are built on top of many fundamental concepts that have already been covered by the current K-12 educational standards of physical sciences in the US (National Research Council, 1996). In theory, nano content can be naturally integrated into current curricular frameworks without compromising the time for traditional content. In practice, however, teaching nanoscience and nanotechnology at the secondary level can turn out to be challenging (Greenberg, 2009). Although nanoscience takes root in basic physical sciences, it requires a higher level of thinking based on a greater knowledge base. In many cases, this level is not limited to knowing facts such as how small a nanometer is or what the structure of a buckyball molecule looks like. Most importantly, it centers on an understanding of how things work in the nanoscale world and, for the nanotechnology part, a sense of how to engineer nanoscale systems (Drexler, 1992). The mission of nanoscience education cannot be declared fully accomplished if students do not start to develop these abilities towards the end of a course or a program. -

PDF Download Learning Cocos2d

LEARNING COCOS2D : A HANDS-ON GUIDE TO BUILDING IOS GAMES WITH COCOS2D, BOX2D, AND CHIPMUNK PDF, EPUB, EBOOK Rod Strougo | 640 pages | 28 Jul 2011 | Pearson Education (US) | 9780321735621 | English | New Jersey, United States Learning Cocos2D : A Hands-On Guide to Building iOS Games with Cocos2D, Box2D, and Chipmunk PDF Book FREE U. With the introduction of iOS5, many security issues have come to light. You will then learn to add scenes to the game such as the gameplay scene and options scene and create menus and buttons in these scenes, as well as creating transitions between them. Level design and asset creation is a time consuming portion of game development, and Chipmunk2D can significantly aid in creating your physics shapes. However, they are poor at providing specific, actionable data that help game designers make their games better for several reasons. The book starts off with a detailed look at how to implement sprites and animations into your game to make it livelier. You should have some basic programming experience with Objective-C and Xcode. This book shows you how to use the powerful new cocos2d, version 2 game engine to develop games for iPhone and iPad with tilemaps, virtual joypads, Game Center, and more. The user controls an air balloon with his device as it flies upwards. We will create a game scene, add background image, player and enemy characters. Edward rated it really liked it Aug 13, Marketing Pearson may send or direct marketing communications to users, provided that Pearson will not use personal information collected or processed as a K school service provider for the purpose of directed or targeted advertising. -

COLLABORATIVE RESEARCH: CONSTRUCTIVE CHEMISTRY: PROBLEM-BASED LEARNING THROUGH MOLECULAR MODELING Summary

COLLABORATIVE RESEARCH: CONSTRUCTIVE CHEMISTRY: PROBLEM-BASED LEARNING THROUGH MOLECULAR MODELING Summary. Chemistry educators have long exploited computer models to teach about atoms and molecules. In traditional instruction, students use existing models created by developers or instructors to learn what is intended, usually in a passive way. Almost a decade ago, NSF sponsored a workshop on molecular visualization for science education in which participants specified that “students should create their own visualizations as a means of interacting with visualization software.” The lack of efficient visualization authoring tools, however, has limited the progress of this agenda. It was not until recently that molecular modeling tools became sufficiently user-friendly for wide adoption. This joint project of Bowling Green State University (BGSU), the Concord Consortium (CC), and Dakota County Technical College (DCTC) will systematically address this important item on the workshop participants’ wish list. The project team will develop and examine a technology-based pedagogy that challenges students to create their own molecular simulations to test hypotheses, answer questions, or solve problems. To implement this pedagogy, the team will produce a set of innovative curriculum materials, called “Constructive Chemistry,” for undergraduate chemistry courses. CC’s award-winning Molecular Workbench (mw.concord.org), which provides graphical user interfaces for authoring visually compelling and scientifically accurate interactive simulations, will be enhanced to meet the needs of this research. “Constructive Chemistry” will be comprised of instructional units and modeling projects that cover a wide variety of basic concepts and their applications in general chemistry, physical chemistry, biochemistry, and nanotechnology. -

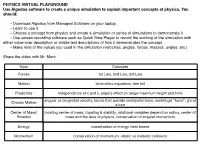

PHYSICS VIRTUAL PLAYGROUND Use Algodoo Software to Create a Unique Simulation to Explain Important Concepts of Physics

PHYSICS VIRTUAL PLAYGROUND
Use Algodoo software to create a unique simulation to explain important concepts of physics.

You should:
- Download Algodoo from Managed Software on your laptop.
- Learn to use it.
- Choose a concept from physics and create a simulation or series of simulations to demonstrate it.
- Use screen recording software such as QuickTime Player to record the working of the simulation with either voice-over description or visible text descriptions of how it demonstrates the concept.
- Make note of the values you used in the simulation (velocities, angles, forces, masses, etc.).

Share the video with Mr. Mont.

Topic: Concepts
Forces: 1st Law, 2nd Law, 3rd Law
Motion: kinematics equations, free fall
Projectiles: independence of x and y, angle’s effect on range, maximum height and time
Circular Motion: angular vs tangential velocity, forces that provide centripetal force, centrifugal “force”, g’s of a turn
Center of Mass/Rotation: locating center of mass, toppling & stability, rotational variables depend on radius, center of mass and the laws of physics, conservation of angular momentum
Energy: conservation of energy, heat losses
Momentum: conservation of momentum, elastic vs inelastic collisions

GRADE: ACCURACY, CLARITY, STYLE

Grade A
Accuracy: The voice-over or text based description is detailed and correctly explains the concept. The simulation is a valid demonstration of the concept.
Clarity: The voice-over or text based description provides an extremely clear and easy to understand explanation of the concept. The simulation clearly demonstrates the concept.
Style: The simulation has been put together with care, is well-designed, tastefully colored and engaging.

Grade B
Accuracy: The voice-over or text based description correctly explains the concept.
Clarity: The voice-over or text based description provides a clear
Style: The simulation is well- -
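For the "angle’s effect on range, maximum height and time" concept in the topic list, the values recorded from a simulation can be checked against the ideal no-drag formulas. The sketch below assumes level ground, no air resistance, and g = 9.81 m/s²; the function name and the sample launch speed are illustrative and not part of the assignment.

```python
import math

G = 9.81  # assumed gravitational acceleration in m/s^2


def projectile(speed, angle_deg, g=G):
    """Ideal projectile launched from level ground, ignoring air resistance."""
    theta = math.radians(angle_deg)
    vx = speed * math.cos(theta)  # horizontal velocity stays constant
    vy = speed * math.sin(theta)  # vertical velocity is changed by gravity
    flight_time = 2.0 * vy / g
    return {
        "range": vx * flight_time,
        "max_height": vy ** 2 / (2.0 * g),
        "time": flight_time,
    }


if __name__ == "__main__":
    for angle in (15, 30, 45, 60, 75):
        r = projectile(20.0, angle)
        print(f"{angle:2d} deg: range={r['range']:.2f} m, "
              f"height={r['max_height']:.2f} m, time={r['time']:.2f} s")
```

Complementary angles such as 30° and 60° give the same range but different heights and flight times, which is a convenient thing to demonstrate side by side in a simulation.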

Evaluating and Developing Physics Teaching Material with Algodoo In

International Journal of Innovation in Science and Mathematics Education, 23(4), 40-50, 2015. Evaluating and Developing Physics Teaching Material with Algodoo in Virtual Environment: Archimedes’ Principle. Harun Çelik (a), Uğur Sarı (a), Untung Nugroho Harwanto (b). Corresponding author: [email protected] (a) Elementary Science Education, Education Faculty, Kırıkkale University, Turkey; (b) Physics Teacher, Surya Institute, Tangerang, Indonesia. Keywords: physics teaching, virtual environment, Algodoo, Archimedes’ principle. Abstract This study examines pre-service physics teachers’ perception on computer-based learning (CBL) experiences through a virtual physics program. An Algodoo program and smart board was used in this study in order to realize the virtual environment. We took one specific physics topic for the 10th grade according to the physics curriculum in Turkey. Archimedes’ principle is one of the most important and fundamental concepts needed in the study of fluid mechanics. We decided to design a simple virtual simulation in Algodoo in order to explain the Archimedes’ principle easier and enjoyable. A smart board was used in order to make virtual demonstration in front the students without any real experiment. There were 37 participants in this study who are studying pedagogical proficiency at Kırıkkale University, Faculty of Education in Turkey. A case study method was used and the data was collected by the researchers. The questionnaire consists of 28 items and 2 open-ended questions that had been developed by Akbulut, Akdeniz and Dinçer (2008). The questionnaire was used to find out the teachers’ perceptions toward Algodoo for explaining the Archimedes’ principles. The result of this research recommends that using the simulation program in physics teaching has a positive impact and can improve the students’ understanding. -
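Archimedes’ principle, the topic of the simulation described above, states that the buoyant force equals the weight of the displaced fluid. As a small worked check, a sketch under stated assumptions: g = 9.81 m/s², uniform densities, a body at rest, and function names and sample values that are illustrative rather than taken from the study.

```python
G = 9.81  # assumed gravitational acceleration in m/s^2


def buoyant_force(fluid_density, displaced_volume, g=G):
    """Buoyant force in newtons: the weight of the displaced fluid."""
    return fluid_density * displaced_volume * g


def submerged_fraction(object_density, fluid_density):
    """Fraction of a body's volume below the surface at rest.

    A body denser than the fluid sinks, so the fraction is capped at 1.
    """
    return min(1.0, object_density / fluid_density)


if __name__ == "__main__":
    water = 1000.0   # kg/m^3
    wood = 600.0     # kg/m^3, illustrative value
    volume = 0.002   # m^3, illustrative value
    fraction = submerged_fraction(wood, water)
    weight = wood * volume * G
    lift = buoyant_force(water, fraction * volume)
    print(f"submerged fraction: {fraction:.2f}")
    print(f"weight: {weight:.2f} N, buoyant force: {lift:.2f} N")
```

For the floating block the two printed forces agree, which is the equilibrium condition a simulation of a floating body should reproduce.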

Student-Generated Representations in Algodoo While Solving a Physics Problem

Uppsala University. Project in physics and astronomy, 15 hp. Student-generated representations in Algodoo while solving a physics problem. Oskar Bengtz, supervised by Bor Gregorcic and Elias Euler. June 19, 2018.

Summary (Sammanfattning) Physics teachers often face the difficult task of representing abstract, well-defined properties of the physical world (such as forces or energies) for students in ways that go beyond equations, graphs, or diagrams. In this study I look at two cases of university students solving a physics problem, using the digital program Algodoo on a large touch screen, to investigate how students naturally use such technology to recreate these representations themselves. I find that when students create scenes in Algodoo, they prioritize retaining a certain degree of likeness to the physical world, which goes beyond the formal treatment of problems that students may have been taught in physics instruction. Furthermore, when the students use physics equations in solving the problem, they appear to use Algodoo as an answer key to check whether their numerical solution, worked out on a conventional whiteboard, is correct. In this way, as has been suggested in previous research, I see how Algodoo and similar digital learning environments function as a bridge between the students' conceptual understanding of the physical world and the more formal, mathematics-based description used in physics.

Abstract Physics teachers are often faced with the difficult task of representing abstract, formally-defined properties of the physical world (such as forces or energy) for students in a way which goes beyond equations, graphs, and diagrams. In this study, I investigate two cases of university students solving a physics problem while using the digital software, Algodoo, on a large touch screen to examine how students might naturally leverage such technology to create such representations of their own. -

Pymunk Documentation Release 3.0.0

pymunk Documentation
Release 3.0.0
Victor Blomqvist
April 27, 2015

Contents
1 Getting Started
2 The pymunk Vision
3 Contact & Support
4 Contents
4.1 Readme
4.2 News
4.3 Installation
4.4 API Reference
4.5 Examples
4.6 Tutorials
4.7 Advanced
5 Indices and tables
Python Module Index

pymunk is an easy-to-use pythonic 2d physics library that can be used whenever you need 2d rigid body physics from Python. Perfect when you need 2d physics in your game, demo or other application! It is built on top of the very nice 2d physics library Chipmunk.

CHAPTER 1 Getting Started
To get started quickly take a look in the Readme; it contains a summary of the most important things and is quick to read. When done, it's a good idea to take a look at the included Examples, read the Tutorials and take a look in the API Reference.

CHAPTER 2 The pymunk Vision
“Make 2d physics easy to include in your game”
It is (or is striving to be):
• Easy to use - It should be easy to use, no complicated stuff should be needed to add physics to your game/program.
• “Pythonic” - It should not be visible that a c-library (chipmunk) is in the bottom, it should feel like a python library (no strange naming, OO, no memory handling and more)
• Simple to build & install - You shouldn’t need to have a zillion of libraries installed to make it install, or do a lot of command line tricks.