Nanoscience Education

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Agx Multiphysics Download

A patch release of AgX Dynamics is now available for download for all of our licensed customers. This version includes some minor. AGX Dynamics is a professional multi-purpose physics engine for simulators, Virtual parallel high performance hybrid equation solvers and novel multi-physics models. Why choose AGX Dynamics? Download AGX product brochure. This video shows a simulation of a wheel loader interacting with a dynamic tree model. High fidelity. AGX Multiphysics is a proprietary real-time physics engine developed by Algoryx Simulation AB. AgX Multiphysics Toolkit · Age Of Empires III The Asian Dynasties Expansion. Convert trial version Free Download, product key, keygen, Activator com extended. free full download agx multiphysics toolkit from AYS search www.doorway.ru have many downloads related to agx multiphysics toolkit which are hosted on sites like. With AGXUnity, it is possible to incorporate a real physics engine into a well Download from the prebuilt-packages sub-directory in the repository www.doorway.rug: multiphysics. A www.doorway.ru app that runs a physics engine and lets clients download physics data in real Clone or download AgX Multiphysics compiled with Lua support. Agx multiphysics toolkit. Developed physics the was made dynamics multiphysics simulation. Runtime library for AgX MultiPhysics Library. How to repair file. Original file to replace broken file www.doorway.ru Download. Current version: Some short videos that may help starting with AGX-III. Example 1: Finding a possible Pareto front for the Balaban Index in the Missing: multiphysics.

Michał Domański Curriculum Vitae / Portfolio

Michał Domański Curriculum Vitae / Portfolio date of birth: 09-03-1986 e-mail: [email protected] address: ul. Kabacki Dukt 8/141, 02-798 Warsaw, Poland tel. +48 608 629 046 Skype: rein4ce I am fascinated by the world of science and programming, I love experimenting with the latest technologies, and I have a great interest in virtual reality, robotics and the military. Most of all I value the pursuit of professionalism, continuous education and expanding one's skill set. Education 2009 - till now Polish Japanese Institute of Information Technology Computer Science - undergraduate studies, currently 4th semester 2004 - 2009 Cracow University of Technology Master of Science in Architecture and Urbanism - graduated 2000 - 2004 Romuald Traugutt High School in Częstochowa mathematics, physics, computer-science profile Skills Advanced level Average level Software C++ (10 years), MFC Java, J2ME Windows 98, XP, Windows 7 C# .NET 3.5 (3 years) DirectX, MDX SketchUP OpenGL BASCOM AutoCAD Actionscript/Flex MS SQL, Oracle Visual Studio 2008, MSVC 6.0 WPF Eclipse HTML/CSS Flex Builder Photoshop CS2 Additional skills: Good understanding of design patterns and ability to work with complex projects Strong problem solving skills Excellent work organisation and teamwork coordination Eagerness to learn any new technology Languages: Polish, English (proficiency), German (basic) For as long as I can remember, I have been interested in computers. Through many years of self-education and studying many projects I have gained insight and experience in designing and programming professional level software. I did extensive research in the game programming domain, analyzing game engines such as Quake, Half-Life and Source Engine, through which I have learned how to structure and develop efficient systems while implementing industry-standard best practices.

Learning Physics Through Transduction

Digital Comprehensive Summaries of Uppsala Dissertations from the Faculty of Science and Technology 1977 Learning Physics through Transduction A Social Semiotic Approach TREVOR STANTON VOLKWYN ACTA UNIVERSITATIS UPSALIENSIS ISSN 1651-6214 ISBN 978-91-513-1034-3 UPPSALA urn:nbn:se:uu:diva-421825 2020 Dissertation presented at Uppsala University to be publicly examined in Haggsalen, Ångströmlaboratoriet, Lägerhyddsvägen 1, Uppsala, Monday, 30 November 2020 at 14:00 for the degree of Doctor of Philosophy. The examination will be conducted in English. Faculty examiner: Associate Professor David Brookes (Department of Physics, California State University). Abstract Volkwyn, T. S. 2020. Learning Physics through Transduction. A Social Semiotic Approach. Digital Comprehensive Summaries of Uppsala Dissertations from the Faculty of Science and Technology 1977. 294 pp. Uppsala: Acta Universitatis Upsaliensis. ISBN 978-91-513-1034-3. This doctoral thesis details the introduction of the theoretical distinction between transformation and transduction to Physics Education Research. Transformation refers to the movement of meaning between semiotic resources within the same semiotic system (e.g. between one graph and another), whilst the term transduction refers to the movement of meaning between different semiotic systems (e.g. diagram to graph). A starting point for the thesis was that transductions are potentially more powerful in learning situations than transformations, and because of this transduction became the focus of this thesis. The thesis adopts a social semiotic approach. In its most basic form, social semiotics is the study of how different social groups create and maintain their own specialized forms of meaning making. In physics education then, social semiotics is interested in the range of different representations used in physics, their disciplinary meaning, and how these meanings may be learned.

Constraint Fluids

IEEE TRANSACTIONS OF VISUALIZATION AND COMPUTER GRAPHICS Constraint Fluids Kenneth Bodin, Claude Lacoursière, Martin Servin Abstract—We present a fluid simulation method based on Smoothed Particle Hydrodynamics (SPH) in which incompressibility and boundary conditions are enforced using holonomic kinematic constraints on the density. This formulation enables systematic multiphysics integration in which interactions are modeled via similar constraints between the fluid pseudo-particles and impenetrable surfaces of other bodies. These conditions embody Archimedes' principle for solids and thus buoyancy results as a direct consequence. We use a variational time stepping scheme suitable for general constrained multibody systems we call SPOOK. Each step requires the solution of only one Mixed Linear Complementarity Problem (MLCP) with very few inequalities, corresponding to solid boundary conditions. We solve this MLCP with a fast iterative method. Overall stability is vastly improved in comparison to ... are now widely spread in various fields of science and engineering [11]–[13]. Point and particle methods are simple and versatile, making them attractive for animation purposes. They are also common across computer graphics applications, such as storage and visualization of large-scale data sets from 3D-scans [14], 3D modeling [15], volumetric rendering of medical data such as CT-scans [16], and for voxel based haptic rendering [17], [18]. The lack of explicit connectivity is a strength because it results in simple data structures. But it is also a weakness since the connection to the triangle surface paradigm in computer graphics requires additional computing, and because neighboring particles have to be found.
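To make the idea of a constraint on the density concrete, here is a minimal Python sketch of a standard SPH density estimate followed by a per-particle density constraint. It is an illustration under stated assumptions, not the paper's implementation: the poly6 smoothing kernel, the constraint form `rho / rho0 - 1`, and all function names are choices made for this example, and the SPOOK stepper and MLCP solve mentioned in the abstract are not shown.

```python
import math

def poly6(r, h):
    """Poly6 smoothing kernel in 3D (an assumed kernel choice for this sketch)."""
    if r >= h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h**9) * (h * h - r * r) ** 3

def densities(positions, mass, h):
    """SPH density estimate: rho_i = sum_j m * W(|x_i - x_j|, h), including j == i."""
    return [
        sum(mass * poly6(math.dist(pi, pj), h) for pj in positions)
        for pi in positions
    ]

def density_constraint(rho, rho0):
    """Assumed constraint form g_i = rho_i / rho0 - 1; zero when the fluid is at rest density."""
    return [r / rho0 - 1.0 for r in rho]

# Hypothetical usage: three particles on a line, two of them close together.
particles = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (2.0, 0.0, 0.0)]
rho = densities(particles, mass=1.0, h=1.0)
rest_density = rho[2]  # treat the isolated particle's density as the rest density
print(density_constraint(rho, rest_density))
```

A solver built on this would drive each `g_i` toward zero at every step. The quadratic neighbor loop is only for readability and reflects the point made above that neighboring particles have to be found.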

Apple IOS Game Development Engines P

SWE578 2012S Apple IOS Game Development Engines Abstract—iOS (formerly called iPhone OS) is Apple's mobile operating system that is used on the company's mobile device series such as iPhone, iPod Touch and iPad, which have become quite popular since the first iPhone launched. More than 100,000 of the titles in the App Store are games. Many of the games in the store are 2D and 3D games, and it can be said that in order to develop a complicated 3D graphical game, using game engines is inevitable. I. INTRODUCTION With their unique features such as multitouch screen, accelerometer and graphics capabilities, iOS devices have become one of the most distinctive mobile game platforms. More than 100,000 of the titles in the App Store are games. The low development cost and ease of publishing make for a very strange but new development opportunity for developers.[2] Game production is a quite ... section we make comparison and draw our conclusion. II. GAME ENGINE ANATOMY A common misconception is that a game engine only draws the graphics that we see on the screen. This is of course wrong, for a game engine is a collection of interacting software that together makes a single unit that runs an actual game. The drawing process is one of the subsystems that could be labeled as the rendering engine[3]. Game engines provide visual development tools in addition to software components. These tools are provided in an integrated development environment in order to create games rapidly. Game developers attempt to "pre-invent the wheel" elements while creating games such as graphics, sound, physics and AI functions.

COLLABORATIVE RESEARCH: CONSTRUCTIVE CHEMISTRY: PROBLEM-BASED LEARNING THROUGH MOLECULAR MODELING Summary

COLLABORATIVE RESEARCH: CONSTRUCTIVE CHEMISTRY: PROBLEM-BASED LEARNING THROUGH MOLECULAR MODELING Summary. Chemistry educators have long exploited computer models to teach about atoms and molecules. In traditional instruction, students use existing models created by developers or instructors to learn what is intended, usually in a passive way. Almost a decade ago, NSF sponsored a workshop on molecular visualization for science education in which participants specified that “students should create their own visualizations as a means of interacting with visualization software.” The lack of efficient visualization authoring tools, however, has limited the progress of this agenda. It was not until recently that molecular modeling tools became sufficiently user-friendly for wide adoption. This joint project of Bowling Green State University (BGSU), the Concord Consortium (CC), and Dakota County Technical College (DCTC) will systematically address this important item on the workshop participants’ wish list. The project team will develop and examine a technology-based pedagogy that challenges students to create their own molecular simulations to test hypotheses, answer questions, or solve problems. To implement this pedagogy, the team will produce a set of innovative curriculum materials, called “Constructive Chemistry,” for undergraduate chemistry courses. CC’s award-winning Molecular Workbench (mw.concord.org), which provides graphical user interfaces for authoring visually compelling and scientifically accurate interactive simulations, will be enhanced to meet the needs of this research. “Constructive Chemistry” will be comprised of instructional units and modeling projects that cover a wide variety of basic concepts and their applications in general chemistry, physical chemistry, biochemistry, and nanotechnology.

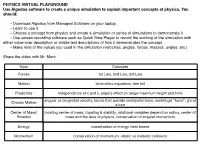

PHYSICS VIRTUAL PLAYGROUND Use Algodoo Software to Create a Unique Simulation to Explain Important Concepts of Physics

PHYSICS VIRTUAL PLAYGROUND Use Algodoo software to create a unique simulation to explain important concepts of physics. You should:

- Download Algodoo from Managed Software on your laptop.
- Learn to use it.
- Choose a concept from physics and create a simulation or series of simulations to demonstrate it.
- Use screen recording software such as Quick Time Player to record the working of the simulation with either voice-over description or visible text descriptions of how it demonstrates the concept.
- Make note of the values you used in the simulation (velocities, angles, forces, masses, etc.)

Share the video with Mr. Mont.

| Topic | Concepts |
| --- | --- |
| Forces | 1st Law, 2nd Law, 3rd Law |
| Motion | kinematics equations, free fall |
| Projectiles | independence of x and y, angle’s effect on range, maximum height and time |
| Circular Motion | angular vs tangential velocity, forces that provide centripetal force, centrifugal “force”, g’s of a turn |
| Center of Mass/Rotation | locating center of mass, toppling & stability, rotational variables depend on radius, center of mass and the laws of physics, conservation of angular momentum |
| Energy | conservation of energy, heat losses |
| Momentum | conservation of momentum, elastic vs inelastic collisions |

| GRADE | ACCURACY | CLARITY | STYLE |
| --- | --- | --- | --- |
| A | The voice-over or text based description is detailed and correctly explains the concept. The simulation is a valid demonstration of the concept. | The voice-over or text based description provides an extremely clear and easy to understand explanation of the concept. The simulation clearly demonstrates the concept. | The simulation has been put together with care, is well-designed, tastefully colored and engaging. |
| B | The voice-over or text based description correctly explains the concept. | The voice-over or text based description provides a clear ... | The simulation is well- ... |

Design of Interventions for Instructional Reform in Software Development Education for Competency Enhancement

DESIGN OF INTERVENTIONS FOR INSTRUCTIONAL REFORM IN SOFTWARE DEVELOPMENT EDUCATION FOR COMPETENCY ENHANCEMENT Thesis submitted in fulfillment of the requirements for the Degree of DOCTOR OF PHILOSOPHY By Sanjay Goel Department of Computer Science & Engineering and Information Technology JAYPEE INSTITUTE OF INFORMATION TECHNOLOGY A-10, SECTOR-62, NOIDA, INDIA April, 2010 Copyright JAYPEE INSTITUTE OF INFORMATION TECHNOLOGY, NOIDA March, 2010 ALL RIGHTS RESERVED DECLARATION BY THE SCHOLAR I hereby declare that the work reported in the Ph.D. thesis entitled “Design of Interventions for Instructional Reform in Software Development Education for Competency Enhancement” submitted at Jaypee Institute of Information Technology, Noida, India, is an authentic record of my work carried out under the supervision of Prof. J.P. Gupta and Dr. Mukul K. Sinha. I have not submitted this work elsewhere for any other degree or diploma. (Sanjay Goel) Department of Computer Science & Engineering and Information Technology Jaypee Institute of Information Technology, Noida, India April 9th, 2010 SUPERVISOR’S CERTIFICATE This is to certify that the work reported in the Ph.D. thesis entitled “Design of Interventions for Instructional Reform in Software Development Education for Competency Enhancement”, submitted by Sanjay Goel at Jaypee Institute of Information Technology, Noida, India is a bona fide record of his original work carried out under our supervision.

Continuous Collision Principle Software Engineer, Blizzard

Erin Catto, @erin_catto Continuous Collision Principle Software Engineer, Blizzard Expert Lego Set Number 952, 315 pieces, 1978 Games are fancy flipbooks Games are just fancy flip books. We draw discrete frames that are snapshots of a moving world. Of course the difference is that in a game, the player can influence what is drawn in each frame. Physics engines usually operate in the same way. The engine executes discrete time steps, usually of a fixed size, that march the simulation forward in time. When we do this, the physics engine can miss events that happen in between frames. Discrete steps lead to missed events Consider a bouncing ball. Discrete time steps are good enough for most of the simulation. However, suppose the discrete time steps skip over the time where the ball hits the floor. How can the ball bounce if it never touches the floor? Well, it won't, and this is a big problem for physics engines. Solution #1: Ignore the bug Bye! If you ignore the missed collision you can get tunneling. In this case the ball falls out of the world. Many physics engines don’t address this problem and leave it up to the game to fix (or ignore the problem). In some cases this is a reasonable choice. For example, if two pieces of debris pass through each other quickly in a game, you may never notice and it doesn’t affect the outcome of the game. Solution #2: Make the floor thicker You can prevent missed collisions by using more forgiving geometry. In this case I made the floor thicker to catch the ball.
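The tunneling problem and the thicker-floor workaround can be reproduced in a few lines. The following Python sketch is an illustration only and is not taken from the talk: the function name, the constant falling speed (no gravity or bounce), and every number are assumptions chosen so that one fixed time step carries the ball farther than a thin floor is thick.

```python
def ball_hits_floor(y0, speed, dt, steps, floor_thickness):
    """Step a point ball downward with fixed time steps and test for overlap
    with a floor slab that occupies heights [-floor_thickness, 0].

    Returns True if any discrete sample of the ball lands inside the slab.
    """
    y = y0
    for _ in range(steps):
        y -= speed * dt  # discrete update: the ball jumps speed * dt per step
        if -floor_thickness <= y <= 0.0:
            return True  # overlap detected at this sample
    return False  # every sample was above or below the slab: the ball tunneled

# Hypothetical numbers: at 30 units/s and 60 steps/s the ball moves 0.5 per step.
thin = ball_hits_floor(y0=1.2, speed=30.0, dt=1 / 60, steps=10, floor_thickness=0.1)
thick = ball_hits_floor(y0=1.2, speed=30.0, dt=1 / 60, steps=10, floor_thickness=1.0)
print("thin floor catches ball:", thin)
print("thick floor catches ball:", thick)
```

With these values the samples fall at roughly 0.7, 0.2 and -0.3, so the 0.1-thick floor is skipped while the 1.0-thick floor contains a sample. The thicker floor only helps while the per-step displacement stays smaller than the slab.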

The History of Video Games and Game Development: 2D

Jani Ylönen THE HISTORY OF VIDEO GAMES AND GAME DEVELOPMENT: A COMPARISON OF 2D GAME ENGINES UNIVERSITY OF JYVÄSKYLÄ DEPARTMENT OF COMPUTER SCIENCE AND INFORMATION SYSTEMS 2014 ABSTRACT Ylönen, Jani The history of video games and game development – a comparison of 2D game engines Jyväskylä: University of Jyväskylä, 2014, 92 p. Information Systems Science, Master's thesis Supervisor: Puuronen, Seppo The history of video games began in the late 1940s, and in the 2010s video games are the fastest-growing sector of the entertainment industry, both in Finland and worldwide. As technology has advanced, games and their development have changed as well. Games have become larger and more spectacular, but at the same time development costs and development times have grown. Mobile devices such as smartphones and tablets, together with digital distribution, changed the industry in the 2000s and have once again made it possible for small studios to succeed with simple game ideas. The evolution of game development tools has made creating video games easier and faster, and with the simplest game engines games can be built even without programming. This theoretical-conceptual thesis examines, on the basis of the literature, the history of video games, the changes in their development, and general-purpose game development tools. The study clarifies the purpose of the interfaces and game engines used in development, and presents the five game engines most popular among game developers in 2014. For the development tools selected for closer examination, the criterion used was the capability for platform-independent

A Case Study on One-Source Multi-Platform Mobile Game Development Using Cocos2d-X

International Journal of Engineering and Applied Sciences (IJEAS) ISSN: 2394-3661, Volume-3, Issue-11, November 2016 A Case Study on One-Source Multi-Platform Mobile Game Development Using Cocos2d-x Jinseok Seo, Hun Choi Abstract— In this paper, by introducing a case study on development of a first-person shooter game “Biosis” playable in both iOS and Android platforms, we present guidelines for developing one-source multi-platform mobile games using cocos2d-x game engine. This paper also describes the “ResourceMaker” implemented to share and manage game assets efficiently in our multi-targeted development environment and the level engine by using which game planners can easily apply their designs to game levels. We expect that the presented guidelines will help game developers reduce the time and cost for development in the mobile game ecosystem, the life-cycle of which is very short. Index Terms—cocos2d-x, mobile game, multi-platform I. INTRODUCTION Recently, as the mobile platforms including smart phones have achieved popular success, the size of the mobile game market is also rapidly increasing [1]. As the market grows, more and more types of smart devices are emerging. Even on platforms that support the same operating system, many types of devices with different screen resolutions are being announced. Therefore, it is inevitable that the cost required to develop a game for various platforms and display types as described above is greatly increased. ... of "ResourceMaker", a tool developed for efficient game resource sharing and management. This chapter also describes the level engine implemented to reflect the game designers’ intention freely. Finally, Chapter V concludes the paper.

Evaluating and Developing Physics Teaching Material with Algodoo in

International Journal of Innovation in Science and Mathematics Education, 23(4), 40-50, 2015. Evaluating and Developing Physics Teaching Material with Algodoo in Virtual Environment: Archimedes’ Principle Harun Çelik (a), Uğur Sarı (a), Untung Nugroho Harwanto (b) Corresponding author: [email protected] (a) Elementary Science Education, Education Faculty, Kırıkkale University, Turkey (b) Physics Teacher, Surya Institute, Tangerang, Indonesia Keywords: physics teaching, virtual environment, Algodoo, Archimedes’ principle. Abstract This study examines pre-service physics teachers’ perception of computer-based learning (CBL) experiences through a virtual physics program. The Algodoo program and a smart board were used in this study to realize the virtual environment. We took one specific physics topic for the 10th grade according to the physics curriculum in Turkey. Archimedes’ principle is one of the most important and fundamental concepts needed in the study of fluid mechanics. We decided to design a simple virtual simulation in Algodoo in order to explain Archimedes’ principle in an easier and more enjoyable way. A smart board was used to give a virtual demonstration in front of the students without any real experiment. There were 37 participants in this study, who were studying pedagogical proficiency at Kırıkkale University, Faculty of Education in Turkey. A case study method was used and the data was collected by the researchers. The questionnaire consists of 28 items and 2 open-ended questions that had been developed by Akbulut, Akdeniz and Dinçer (2008). The questionnaire was used to find out the teachers’ perceptions toward Algodoo for explaining Archimedes’ principle. The result of this research suggests that using the simulation program in physics teaching has a positive impact and can improve the students’ understanding.
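As a small worked illustration of the principle that such a simulation demonstrates, the Python sketch below computes the buoyant force on a body and the fraction of a floating body that sits below the surface. It is not taken from the study or from Algodoo; the function names and the densities in the usage lines are assumptions for this example.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed standard value)

def buoyant_force(fluid_density, displaced_volume, g=G):
    """Archimedes' principle: the buoyant force equals the weight of the displaced fluid.

    fluid_density in kg/m^3, displaced_volume in m^3, result in newtons.
    """
    return fluid_density * displaced_volume * g

def submerged_fraction(object_density, fluid_density):
    """Fraction of a body's volume below the surface at equilibrium.

    A floating body displaces its own weight of fluid, so the fraction is the
    density ratio; a body denser than the fluid sinks and is fully submerged.
    """
    return min(1.0, object_density / fluid_density)

# Hypothetical usage: a wooden block (500 kg/m^3) and an aluminium block (2700 kg/m^3) in water.
print(submerged_fraction(500.0, 1000.0))
print(submerged_fraction(2700.0, 1000.0))
print(buoyant_force(1000.0, 0.001))  # one litre of displaced water
```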