AUTHOR Webnet 97 World Conference of the WWW

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Perl Baseless Myths & Startling Realities

http://xkcd.com/224/

Perl Baseless Myths & Startling Realities by Tim Bunce, February 2008

Parrot and Perl 6 portion incomplete due to lack of time (not lack of myths!)

Realities
- I'm positive about Perl
- Not negative about other languages
- Pick any language well suited to the task
- Good developers are always most important, whatever language is used

DISPEL myths, UPDATE about Perl

Who am I?
- Tim Bunce
- Author of the Perl DBI module
- Using Perl since 1991
- Involved in the development of Perl 5
- “Pumpkin” for 5.4.x maintenance releases (Perl 5.4.x, 1997-1998)
- Living on the west coast of Ireland
- http://blog.timbunce.org

~ Myths ~

http://www.bleaklow.com/blog/2003/08/new_perl_6_book_announced.html

- Perl is dead
- Perl is hard to read / test / maintain
- Perl 6 is killing Perl 5

Another myth: Perl is slow: http://www.tbray.org/ongoing/When/200x/2007/10/30/WF-Results

Perl 5
- Perl 5 isn’t the new kid on the block
- Perl is 21 years old
- Perl 5 is 14 years old
- A mature language with a mature culture

How many times Microsoft has changed developer technologies in the last 14 years...

You can guess where that's leading... From “The State of the Onion 10” by Larry Wall, 2006 http://www.perl.com/pub/a/2006/09/21/onion.html?page=3

Buzz != Jobs
- Perl5 hasn’t been generating buzz recently
- It’s just getting on with the job
- Lots of jobs, just not all in web development

Web developers tend to have a narrow focus.

Selling on Amazon Guide to XML

Selling on Amazon Guide to XML

Editor’s Note: The XML Help documentation contains general information about using XML on Amazon. There are differences in using XML for various Amazon websites, based on differences in the features and functionality available on those sites. Some of the product categories in the XML Help are not available for merchants on some Amazon websites. If a product category is available to merchants on a particular Amazon website, then the XSD files for that category are valid for that Amazon website as well.

Contents

1. XML Overview
- What is XML?
- Why Use XML?
- Prerequisite
- Using Amazon Marketplace Web Service for XML Integration
- Using XML to send catalog information
- Using XML to process orders

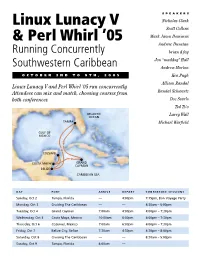

Linux Lunacy V & Perl Whirl

Linux Lunacy V & Perl Whirl ’05, Running Concurrently

Southwestern Caribbean, October 2nd to 9th, 2005

Linux Lunacy V and Perl Whirl ’05 run concurrently. Attendees can mix and match, choosing courses from both conferences.

SPEAKERS: Nicholas Clark, Scott Collins, Mark Jason Dominus, Andrew Dunstan, brian d foy, Jon “maddog” Hall, Andrew Morton, Ken Pugh, Allison Randal, Randal Schwartz, Doc Searls, Ted Ts’o, Larry Wall, Michael Warfield

| DAY | PORT | ARRIVE | DEPART | CONFERENCE SESSIONS |
|---|---|---|---|---|
| Sunday, Oct 2 | Tampa, Florida | - | 4:00pm | 7:15pm, Bon Voyage Party |
| Monday, Oct 3 | Cruising The Caribbean | - | - | 8:30am – 5:00pm |
| Tuesday, Oct 4 | Grand Cayman | 7:00am | 4:00pm | 4:00pm – 7:30pm |
| Wednesday, Oct 5 | Costa Maya, Mexico | 10:00am | 6:00pm | 6:00pm – 7:30pm |
| Thursday, Oct 6 | Cozumel, Mexico | 7:00am | 6:00pm | 6:00pm – 7:30pm |
| Friday, Oct 7 | Belize City, Belize | 7:30am | 4:30pm | 4:30pm – 8:00pm |
| Saturday, Oct 8 | Cruising The Caribbean | - | - | 8:30am – 5:00pm |
| Sunday, Oct 9 | Tampa, Florida | 8:00am | - | |

Seminars at a Glance

You may choose any combination of full-, half-, or quarter-day seminars for a total of two-and-one-half (2.5) days’ worth of sessions. The conference fee is $995 and includes ...

Regular Expression Mastery (half day). Speaker: Mark Jason Dominus. Almost everyone has written a regex that failed to match something they wanted it to, or that matched something they thought it shouldn’t, and ...

Programming with Iterators and Generators (half day). Speaker: Mark Jason Dominus. Sometimes you’ll write a function that takes too long to run because it produces too much useful information.

When Geeks Cruise

COMMUNITY | Geek Cruise: Linux Lunacy

Linux Lunacy, Perl Whirl, MySQL Swell: Open Source technologists on board

When Geeks Cruise

If you are on one of those huge cruising ships and, instead of middle-aged ladies sipping cocktails, you spot a bunch of T-shirt touting, nerdy looking guys hacking on their notebooks in the lounges, chances are you are witnessing a “Geek Cruise”. BY ULRICH WOLF

Neil Baumann, of Palo Alto, California, has been organizing geek cruises since 1999 (http://www.geekcruises.com/). Neil always finds enough open source and programming celebrities to hold sessions on Linux, Perl, PHP and other topics dear to geeks.

Open Source Celebs on the Med

I was lucky enough to get on board the first Geek Cruise on the Mediterranean, scaring the nerds to death with my ...

... and practical tips on application development – not only for Perl developers but for anyone interested in programming.

Perl: Present and (Distant?) Future

In contrast, Allison Randal’s tutorials on Parrot Assembler and Perl6 features were hardcore. Thank goodness Larry Wall summed up all the major details on Perl6 in a brilliant lecture that was rich with metaphors and bursting with information.

The dedicated Linux track comprised a meager spattering of six lectures, and though there was something to suit everyone’s taste, the whole thing tended to lack detail. Ted Ts’o spent a long time talking about the Ext2 and Ext3 file systems, criticizing ReiserFS along the way, but had very little to say about network file systems, an increasingly vital topic. Developers were treated to a lecture on developing shared libraries, and admins enjoyed sessions on Samba and hetero- ...

Minimal Perl for UNIX and Linux People

Minimal Perl For UNIX and Linux People, by Tim Maher. Manning, Greenwich (74° w. long.)

For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact: Special Sales Department, Manning Publications Co., Cherokee Station, PO Box 20386, New York, NY 10021. Fax: (609) 877-8256. email: [email protected]

©2007 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps.

Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end.

Manning Publications Co., 209 Bruce Park Avenue, Greenwich, CT 06830. Copyeditor: Tiffany Taylor. Typesetters: Denis Dalinnik, Dottie Marsico. Cover designer: Leslie Haimes.

ISBN 1-932394-50-8. Printed in the United States of America. 1 2 3 4 5 6 7 8 9 10 – VHG – 10 09 08 07 06

To Yeshe Dolma Sherpa, whose fortitude, endurance, and many sacrifices made this book possible. To my parents, Gloria Grady Washington and William N. Maher, who indulged my early interests in literature. To my limbic system, with gratitude for all the good times we’ve had together.

Your FREE Google Advertising Strategy to Boost E-Commerce Sales

Your FREE Google Advertising Strategy To Boost E-commerce Sales

Strategic Marketing Content from the experts at

Table of Contents

- Introduction
- Google Shopping: Google Shopping Ads are product-based ads that use your product listings instead of keywords to target consumers.
- Google Display Network & Custom Intent Audiences: Google’s custom intent audience option gives advertisers the ability to target individuals who are already actively searching and in-market for products or services relevant to their business.
- Google Paid Search: Paid search, commonly referred to as PPC (pay-per-click) advertising, is the most popular campaign type for Google Ads. Paid search ads operate on a pay-per-click model, meaning you only pay for the ad when an individual clicks on it.
- Google Dynamic Remarketing: Setting up a dynamic product remarketing campaign allows you to display your product or brand to a recent visitor of your site, increasing the chance they come back and make a purchase.
- The Strategy: Think of the strategy as a funnel, the top being a broad audience where most of your transactions have less of a chance to take place. As the funnel becomes narrower, the audience you have there will get you closer and closer to a sale!
- About Us: We are all really good people.

Follow this Google advertising strategy and you start to see more sales right away!

Having Issues?
- Are you having issues driving traffic to your ecommerce site?
- Or, do you receive decent ...

Google’s digital advertising platform, Google Ads (formerly Google AdWords), provides a variety of options for promoting your ecommerce business and products.

In this guide you will learn valuable information on how to boost your ecommerce sales with the help of shopping, display, search and remarketing ads all ...

Programming Perl (Nutshell Handbooks) Randal L. Schwartz

Programming Perl (Nutshell Handbooks) by Randal L. Schwartz, Larry Wall

Description: About the Author

Randal L. Schwartz is a two-decade veteran of the software industry. He is skilled in software design, system administration, security, technical writing, and training. Randal has coauthored the "must-have" standards: Programming Perl, Learning Perl, Learning Perl for Win32 Systems, and Effective Perl Programming, and is a regular columnist for WebTechniques, PerformanceComputing, SysAdmin, and Linux magazines. He is also a frequent contributor to the Perl newsgroups, and has moderated comp.lang.perl.announce since its inception. His offbeat humor and technical mastery have reached legendary proportions worldwide (but he probably started some of those legends himself).

Google Shopping Feed

Best Practice Guide: Google Shopping Feed

Contents

I. INTRODUCTION

II. KEY FEED SPECS AND REQUIREMENTS

1. Product ID [id]
2. Title [title]
3. Description [description]
4. Link [link]
5. Image link [image_link]
6. Google product category [google_product_category]
7. Product type [product_type]
8. Mobile link [mobile_link]
9. Additional image link [additional_image_link]
10. Availability [availability]
11. Price [price]
12. Availability date [availability_date]
13. Sales price [sale_price]
14. Sales price effective date [sales_price_date]
15. Cost of goods sold (COGS) [cost_of_goods_sold]
16. Unique product identifiers [gtin], [mpn], [brand]
17. Item group ID [item_group_id]
18. Condition [condition]
19. Color [color]
20. Gender [gender]
21. Age group [age_group]
22. Material [material]
23. Pattern [pattern]
24. Size [size]
25. Size type [size_type]
26. Size system [size_system]
27. Shipping [shipping]
28. Shipping weight [shipping_weight]
29. Shipping label [shipping_label]
30. Shipping size [shipping_length], [shipping_width], [shipping_height]
31. Handling times [min_handling_time], [max_handling_time]
32. Tax [tax], [tax_category]
33. Product combination labels [multipack], [is_bundle]
34. Adult labels [adult]
35. Ads redirect [ads_redirect]
36. Shopping campaigns custom labels [custom_label_X]
37. Unit prices [unit_pricing_measure], [unit_pricing_base_measure]
38. Energy labels [energy_efficiency_class]
39. Merchant promotions [promotion_id]
40. Destination and time specifics [expiration_date], [excluded_destination], [included_destination]
41. Installment [installment]
42. Loyalty Points [loyalty_points]

III. BEST PRACTICES FOR YOUR GOOGLE SHOPPING FEED

IV. GOOGLE AND PRODUCTSUP

www.productsup.com [email protected]

The London School of Economics and Political Science Computational

The London School of Economics and Political Science Computational Consumption: Social Media and the construction of Digital Consumers Cristina Alaimo A thesis submitted to the Department of Management of the London School of Economics for the degree of Doctor of Philosophy, London, July 2014 1 Declaration I certify that the thesis I have presented for examination for the PhD degree of the London School of Economics and Political Science is solely my own work other than where I have clearly indicated that it is the work of others (in which case the extent of any work carried out jointly by me and any other person is clearly identified in it). The copyright of this thesis rests with the author. Quotation from it is permitted, provided that full acknowledgement is made. This thesis may not be reproduced without my prior written consent. I warrant that this authorisation does not, to the best of my belief, infringe the rights of any third party. I declare that my thesis consists of 85,609 words (including footnotes but excluding bibliography and appendices). Statement of use of third party for editorial help I can confirm that my thesis was copy edited for conventions of language, spelling and grammar by Jonathan A. Sutcliffe. 2 Abstract The abundance of social data and the constant development of new models of personalized suggestions are rewriting the way in which consumption is experienced. Not only are consumers now immersed in an information mediated context - decoupled from physical and socio-cultural constrains - but they also experience other consumers and themselves differently, embracing the prescriptions of a technological medium made by algorithmic suggestions and software instructions. -

Product Listing Ads for Beginners by Elizabeth Marsten

Product Listing Ads for Beginners By Elizabeth Marsten www.portent.com

Legal, Notes and Other Stuff

© 2013, The Written Word, Inc. d/b/a Portent, Inc. and Elizabeth Marsten. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. Click here to read the license. That’s a fancy way of saying: please don’t steal from me. It’s not cool.

If you like this book, you might want to check out Elizabeth’s posts at the Portent blog www.portent.com. If you want to talk to Elizabeth, you can reach her on Twitter @ebkendo or by email at [email protected]

Who is this book for? Those new to Product Listing Ads and/or the world of product feeds and Google Shopping in general. Especially those who are looking for some step by step and best practice guidance.

Table of Contents

- What are PLAs?
- What You Need
- Getting Started
  - AdWords
  - Merchant Center
  - Webmaster Tools
  - Product Feed
- Tracking Progress
- Troubleshooting

What are PLAs? How do they work, where do they show?

Google rolled out Product Listing Ads (PLAs) in early 2011. PLAs show paid ads with images, price and sometimes promotional text in 3-6 ad blocks depending on the query and available results on both a typical SERP and on Google Shopping SERPs. Prior to October 2012, results on the main page of a Google Shopping SERP were organic (and free) generated by product feeds submitted by merchants through Google Merchant Center and through crawls performed by googlebot during regular web crawls of sites in the Google index.

Perl 6 Essentials by Allison Randal, Dan Sugalski, Leopold Tötsch

Perl 6 Essentials

By Allison Randal, Dan Sugalski, Leopold Tötsch

Publisher: O'Reilly. Pub Date: June 2003. ISBN: 0-596-00499-0. Pages: 208. Slots: 1.

Perl 6 Essentials is the first book that offers a peek into the next major version of the Perl language. Written by members of the Perl 6 core development team, the book covers the development not only of Perl 6 syntax but also Parrot, the language-independent interpreter developed as part of the Perl 6 design strategy. This book is essential reading for anyone interested in the future of Perl. It will satisfy their curiosity and show how changes in the language will make it more powerful and easier to use.

- Copyright
- Preface
  - How This Book Is Organized
  - Font Conventions
  - We'd Like to Hear from You
  - Acknowledgments
- Chapter 1. Project Overview
  - Section 1.1. The Birth of Perl 6
  - Section 1.2. In the Beginning . . .
  - Section 1.3. The Continuing Mission
- Chapter 2. Project Development
  - Section 2.1. Language Development
  - Section 2.2. Parrot Development
- Chapter 3. Design Philosophy
  - Section 3.1. Linguistic and Cognitive Considerations
  - Section 3.2. Architectural Considerations
- Chapter 4. Syntax
  - Section 4.1. Variables
  - Section 4.2. Operators
  - Section 4.3. Control Structures
  - Section 4.4. Subroutines
  - Section 4.5. Classes and Objects
  - Section 4.6.

Perl Baseless Myths & Startling Realities

http://xkcd.com/224/ Perl Baseless Myths & Startling Realities by Tim Bunce, July 2008 Prefer ‘Good Developers’ over ‘Good Languages’ “For all program aspects investigated, the performance variability that derives from differences among programmers of the same language—as described by the bad-to-good ratios—is on average as large or larger than the variability found among the different languages.” — An empirical comparison of C, C++, Java, Perl, Python, Rexx, and Tcl. IEEE Computer Journal October 2000 Who am I? - Tim Bunce - Author of the Perl DBI module - Using Perl since 1991 - Involved in the development of Perl 5 - “Pumpkin” for 5.4.x maintenance releases - http://blog.timbunce.org ~ Myths ~ ~ Myths ~ - Perl is dead - Perl is hard to read / test / maintain - Perl 6 is killing Perl 5 ~ Myths ~ - Perl is dead - Perl is hard to read / test / maintain - Perl 6 is killing Perl 5 Perl 5 - Perl 5 isn’t the new kid on the block - Perl is 21 years old - Perl 5 is 14 years old - A mature language with a mature culture Buzz != Jobs - Perl5 hasn’t been generating buzz recently - It’s just getting on with the job - Lots of jobs - - just not all in web development Guess the Languages “web developer” Yes, Perl is growing more slowly than others but these are just “web developer” jobs “software engineer” Perl is mentioned in many more software engineer/developer jobs. “foo developer” Perl is the primary focus of more developer jobs. Want a fun new job? Become a Perl developer! Massive Module Market - Large and vibrant developer community - Over 15,000 distributions (58,000 modules) - Over 6,700 ‘authors’ (who make releases) - One quarter of all CPAN distributions have been updated in the last 4 months! - Half of all updated in the last 17 months! Top Modules -Many gems, including..