On-Line Multi-Class Segmentation of Side-Scan Sonar Imagery Using an Autonomous Underwater Vehicle

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Chesapeake Yacht Sales

FROM THE QUARTERDECK, MARCH 2012. [...] Championships at Lake Eustis. I’ve seen no results at this time. In addition, several of our Cruisers have been down in the Sunshine State (despite George Sadler’s report of rain in Key Largo). Thanks to all for carrying the FBYC burgee beyond our home waters. Registration for Junior Week began on Feb. 1st. Beginning Opti was at the limit of capacity within 42 hours of going live on the website. Other aspects of our Junior program are in high demand as well. Race teams are filling or have filled and the numbers for Opti Development Team are so high that another coaching position for that team is justified. I’m looking forward to Junior Week as two of my [...] The Welcome Cruisers event is scheduled for March 24th. This is the kick off gathering for the cruising season and is expected to set the tone for events to follow. We look forward to an exciting collection of outings including some activities planned around the Op Sail venture in the summer of 2012. Those of us involved in FBYC Board meetings have witnessed some changes for this year. I’m specifically referring to the conference call system we have put in place. At the last meeting seven people were remote calling in from Vermont to Florida. This system allows folks who do not live in the Richmond [...] The Ullman Sails Seminar “Unlocking the Race Course” took place on Feb. 15th. The event was very well attended. Of the 133 attendees, 50 were non-members of FBYC. -

The Physician's Guide to Diving Medicine

THE PHYSICIAN'S GUIDE TO DIVING MEDICINE. Edited by Charles W. Shilling, Catherine B. Carlston, and Rosemary A. Mathias. Undersea Medical Society, Bethesda, Maryland. PLENUM PRESS • NEW YORK AND LONDON. Library of Congress Cataloging in Publication Data. Main entry under title: The Physician's guide to diving medicine. Includes bibliographies and index. 1. Submarine medicine. 2. Diving, Submarine-Physiological aspects. I. Shilling, Charles W. (Charles Wesley) II. Carlston, Catherine B. III. Mathias, Rosemary A. IV. Undersea Medical Society. [DNLM: 1. Diving. 2. Submarine Medicine. WD 650 P577] RC1005.P49 1984 616.9'8022 84-14817 ISBN-13: 978-1-4612-9663-8 e-ISBN-13: 978-1-4613-2671-7 DOI: 10.1007/978-1-4613-2671-7 This limited facsimile edition has been issued for the purpose of keeping this title available to the scientific community. 10 9 8 7 6 5 4 ©1984 Plenum Press, New York. A Division of Plenum Publishing Corporation, 233 Spring Street, New York, N.Y. 10013. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, microfilming, recording, or otherwise, without written permission from the Publisher. Contributors: The contributors who authored this book are listed alphabetically below. Their names also appear in the text following contributed chapters or sections. N. R. Anthonisen, M.D., Ph.D., Professor of Medicine, University of Manitoba, Winnipeg, Manitoba, Canada. Arthur J. Bachrach, Ph.D., Director, Environmental Stress Program, Naval Medical Research Institute, Bethesda, Maryland. C. Gresham Bayne. -

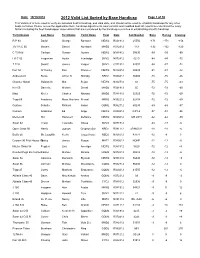

2012 Valid List Sorted by Base Handicap

Date: 10/19/2012 2012 Valid List Sorted by Base Handicap Page 1 of 30 This Valid List is to be used to verify an individual boat's handicap, and valid date, and should not be used to establish handicaps for any other boats not listed. Please review the application form, handicap adjustments, boat variants and modified boat list reports to understand the many factors, including the fleet handicapper observations, that are considered by the handicap committee in establishing a boat's handicap. Yacht Design Last Name First Name Yacht Name Fleet Date Sail Number Base Racing Cruising R P 90 David George Rambler NEW2 R021912 25556 -171 -171 -156 J/V I R C 66 Meyers Daniel Numbers MHD2 R012912 119 -132 -132 -120 C T M 66 Carlson Gustav Aurora NEW2 N081412 50095 -99 -99 -90 I R C 52 Fragomen Austin Interlodge SMV2 N072412 5210 -84 -84 -72 T P 52 Swartz James Vesper SMV2 C071912 52007 -84 -87 -72 Farr 50 O' Hanley Ron Privateer NEW2 N072412 50009 -81 -81 -72 Andrews 68 Burke Arthur D Shindig NBD2 R060412 55655 -75 -75 -66 Chantier Naval Goldsmith Mat Sejaa NEW2 N042712 03 -75 -75 -63 Ker 55 Damelio Michael Denali MHD2 R031912 55 -72 -72 -60 Maxi Kiefer Charles Nirvana MHD2 R041812 32323 -72 -72 -60 Tripp 65 Academy Mass Maritime Prevail MRN2 N032212 62408 -72 -72 -60 Custom Schotte Richard Isobel GOM2 R062712 60295 -69 -69 -57 Custom Anderson Ed Angel NEW2 R020312 CAY-2 -57 -51 -36 Merlen 49 Hill Hammett Defiance NEW2 N020812 IVB 4915 -42 -42 -30 Swan 62 Tharp Twanette Glisse SMV2 N071912 -24 -18 -6 Open Class 50 Harris Joseph Gryphon Soloz NBD2 -

Deep Sea Dive Ebook Free Download

DEEP SEA DIVE PDF, EPUB, EBOOK Frank Lampard | 112 pages | 07 Apr 2016 | Hachette Children's Group | 9780349132136 | English | London, United Kingdom Deep Sea Dive PDF Book Zombie Worm. Marrus orthocanna. Deep diving can mean something else in the commercial diving field. They can be found all over the world. Depth at which breathing compressed air exposes the diver to an oxygen partial pressure of 1. Retrieved 31 May Diving medicine. Arthur J. Retrieved 13 March Although commercial and military divers often operate at those depths, or even deeper, they are surface supplied. Minimal visibility is still possible far deeper. The temperature is rising in the ocean and we still don't know what kind of an impact that will have on the many species that exist in the ocean. Guiel Jr. His dive was aborted due to equipment failure. Smithsonian Institution, Washington, DC. Depth limit for a group of 2 to 3 French Level 3 recreational divers, breathing air. Underwater diving to a depth beyond the norm accepted by the associated community. Limpet mine Speargun Hawaiian sling Polespear. Michele Geraci [42]. Diving safety. Retrieved 19 September All of these considerations result in the amount of breathing gas required for deep diving being much greater than for shallow open water diving. King Crab. Atrial septal defect Effects of drugs on fitness to dive Fitness to dive Psychological fitness to dive. The bottom part which has the pilot sphere inside. List of diving environments by type Altitude diving Benign water diving Confined water diving Deep diving Inland diving Inshore diving Muck diving Night diving Open-water diving Black-water diving Blue-water diving Penetration diving Cave diving Ice diving Wreck diving Recreational dive sites Underwater environment. -

'The Last of the Earth's Frontiers': Sealab, the Aquanaut, and the US

‘The Last of the earth’s frontiers’: Sealab, the Aquanaut, and the US Navy’s battle against the sub-marine. Rachael Squire, Department of Geography, Royal Holloway, University of London. Submitted in accordance with the requirements for the degree of PhD, University of London, 2017. Declaration of Authorship: I, Rachael Squire, hereby declare that this thesis and the work presented in it is entirely my own. Where I have consulted the work of others, this is always clearly stated. Signed: Rachael Squire. Date: 9.5.17. Contents: Declaration; Abstract; Acknowledgements; List of figures; List of abbreviations; Preface: Charting a course: From the Bay of Gibraltar to La Jolla Submarine Canyon; The Sealab Prayer; Chapter 1: Introducing Sealab; 1.0 Introduction; 1.1 Empirical and conceptual opportunities; 1.2 Thesis overview; 1.3 People and projects: a glossary of the key actors in Sealab; Chapter 2: Geography in and on the sea: towards an elemental geopolitics of the sub-marine; 2.0 Introduction; 2.1 The sea in geography -

Palaestra-FINAL-PUBLICATION.Pdf

Forum of Sport, Physical Education, and Recreation for Those With Disabilities. PALAESTRA. Wheelchair Rugby: “Really Believe in Yourself and You Can Reach Your Goals” page 20. www.Palaestra.com Vol. 27, No. 3 | Fall 2013. Therapy on the Water: Universal Access Sailing at Boston’s Community Boating. Gary C. du Moulin (Genzyme), Marcin Kunicki, Charles Zechel (Community Boating Inc.). Introduction: Since 1946, the mission of Community Boating, Inc. (CBI), the nation’s oldest community sailing organization, has been the advancement of the sport of sailing by minimizing economic and physical obstacles. In addition, CBI enhances the community by offering access to sailing as a vehicle to empower its members to develop independence and self-confidence, improve communication, and foster teamwork. Members also acquire a deeper understanding of community spirit and the power of volunteerism. Founded in 2006, in cooperation with the Massachusetts Department of Conservation and Recreation (DCR), the Executive Office of Public and Private Partnerships, and the corporate sponsorship of Genzyme, a biotechnology company the Universal Access Pro- [...] Sailing and Disability: A Philosophy for Therapy: While the sport is less well known as a therapeutic activity, sailing engenders all the physical and psychological components important to the rehabilitative process (McCurdy, 1991; Burke, 2010). The benefits of this therapeutic and recreational rehabilitative activity can offer the experience of adventure, mobility, and freedom. Improvement in motor skills and coordination, self-confidence, and pride through accomplishment are but a few of the goals that can be achieved (Hough & Paisley, 2008; Groff, Lundberg, & Zabriskie, 2009; Burke, 2010). Instead of acting as the passive beneficiaries of sailing activities, people with disabilities can be direct participants where social interaction and teamwork are promoted in the environment of a sailboat’s cockpit. -

Future Vision for Autonomous Ocean Observations

REVIEW, published: 08 September 2020, doi: 10.3389/fmars.2020.00697. Future Vision for Autonomous Ocean Observations. Christopher Whitt1*, Jay Pearlman2, Brian Polagye3, Frank Caimi4, Frank Muller-Karger5, Andrea Copping6, Heather Spence7, Shyam Madhusudhana8, William Kirkwood9, Ludovic Grosjean10, Bilal Muhammad Fiaz10, Satinder Singh10, Sikandra Singh10, Dana Manalang11, Ananya Sen Gupta12, Alain Maguer13, Justin J. H. Buck14, Andreas Marouchos15, Malayath Aravindakshan Atmanand16, Ramasamy Venkatesan16, Vedachalam Narayanaswamy16, Pierre Testor17, Elizabeth Douglas18, Sebastien de Halleux18 and Siri Jodha Khalsa19. 1 JASCO Applied Sciences, Dartmouth, NS, Canada, 2 FourBridges, Port Angeles, WA, United States, 3 Department of Mechanical Engineering, Pacific Marine Energy Center, University of Washington, Seattle, WA, United States, 4 Harbor Branch Oceanographic Institute, Florida Atlantic University, Fort Pierce, FL, United States, 5 College of Marine Science, University of South Florida, St. Petersburg, FL, United States, 6 Pacific Northwest National Laboratory, Seattle, WA, United States, 7 AAAS Science and Technology Policy Fellowship, Water Power Technologies Office, United States Department of Energy, Washington, DC, United States, 8 Center for Conservation Bioacoustics, Cornell Lab of Ornithology, Cornell University, Ithaca, NY, United States, 9 Monterey Bay Aquarium Research Institute, Moss Landing, CA, United States, 10 OceanX [...] Edited by: Ananda Pascual, Mediterranean Institute for Advanced [...] -

To My Friends in the UK I Seem to Have Promised Steve and Simon That I Would Write an Article on Tuning a Sonar for Your Website

To My Friends in the UK I seem to have promised Steve and Simon that I would write an article on tuning a Sonar for your website. Since “tuning” alone is not enough to be useful, here are some of my thoughts on a number of factors that may improve upwind performance. I hope you find at least some of this helpful. Further, in an effort to counteract the current wave of American tribalism, I welcome any requests for clarification, or other discussion, either on your website forum or by email to [email protected]. Sheeting and Steering-Mainsheet and jib sheet trim are the most critical controls. In moderate conditions mainsheet wants to be trimmed so the upper leech telltale stalls about half the time. In light air you may want it to flow most of the time to maximize speed and get the keel working. In very heavy air you may not be able to keep it from flowing full time, which also helps drive through waves. Traveler should be adjusted so boom is on the centerline unless you’re overpowered. Every small puff opens the main leech and requires an immediate trim to restore the leech to its critical setting. Every lull requires an ease. Jib should be sheeted so all three luff telltales break at the same time but jib upper leech telltale should absolutely never stall. We do not cleat the jib sheet while sailing upwind, but always work to perfect its trim. In very light air I like to heel the boat to give some shape to the sails. -

Underwater Optical Imaging: Status and Prospects

Underwater Optical Imaging: Status and Prospects. Jules S. Jaffe, Kad D. Moore, Scripps Institution of Oceanography • La Jolla, California USA. John McLean, Arete Associates • Tucson, Arizona USA. Michael R Strand, Naval Surface Warfare Center • Panama City, Florida USA. Introduction: As any backyard stargazer knows, one simply has to look up at the sky on a cloudless night to see light whose origin was quite a long time ago. Here, due to the fact that the mean scattering and absorption lengths are greater in size than the observable universe, one can record light from stars whose origin occurred around the time of the big bang. Unfortunately for oceanographers, the opacity of sea water to light far exceeds these intergalactic limits, making the job of collecting optical images in the ocean a difficult task. Although recent advances in optical imaging technology have permitted researchers working in this area to accomplish projects which could only be dreamed of [...] vision, and photography". Subsequent to that, there have been several books which have summarized a technical understanding of underwater imaging such as Mertens' book entitled "In Water Photography" (Mertens, 1970) and a monograph edited by Ferris Smith (Smith, 1984). An interesting conference which took place in 1970 resulted in the publication of several papers on optics of the sea, including the air water interface and the in water transmission and imaging of objects (Agard, 1973). In this decade, several books by Russian authors have appeared which treat these problems either in the context of underwater vision theory (Dolin and Levin, 1991) or imaging through scattering media (Zege et al., 1991). -

US SAILING FUNCTIONAL CLASSIFICATION SYSTEM & PROCEDURES MANUAL 2013—2016 Effective—1 January 2013

US SAILING FUNCTIONAL CLASSIFICATION SYSTEM & PROCEDURES MANUAL 2013–2016. Effective: 1 January 2013. Originally Published: May 2013. US Sailing, Member of the International Paralympic Committee. PO Box 1260, 15 Maritime Drive, Portsmouth, RI 02871-0907. Phone: 1-401-683-0800 / Toll free: 1-800-USSAIL (1-800-877-2451). Fax: 401-683-0840. E-Mail: [email protected]. Roger H Strube, MD – April 2013. Contents: Introduction; Appendix to Introduction – Glossary of Medical Terminology; Joint Movement Definitions: Neck, Shoulder, Elbow, Wrist -

Oakcliff Sailing Center 2013 Sailing & Events Calendar

OAKCLIFF SAILING CENTER 2013 SAILING & EVENTS CALENDAR January Tues Wed Thu Fri Sat Sun Mon Tue Wed Thu Fri Sat Sun Mon Tue Wed Thu Fri Sat Sun Mon Tue Wed Thu Fri Sat Sun Mon Tues Wed Thurs 2013 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 Holiday, ETC New Years OFF OFF OFF OFF OFF OFF OFF OFF OFF OFF OFF OFF OFF Martin Luther King Day Acorn /Sapling Seminars Match Race SM 40 Shields Melges Reg. Key West Race Week IRC Classics Laser DR Sched Rose Bowl NSPS - US SAILING - Clearwater FL Chicago Boat Show NYYC -Mystic Award for Hunt Bill/ Sonar Jay's Sked Jag Cup E-22Miami College Coaches seminar ON SITE US Sailing Youth World Qualifier Miami OCR Olympic Classes regatta February Fri Sat Sun Mon Tue Wed Thu Fri Sat Sun Mon Tue Wed Thu Fri Sat Sun Mon Tue Wed Thu Fri Sat Sun Mon Tues Wed Thurs Fri 2013 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 Holiday, ETC SKI Weekend? Valentine's Day President's Day YRA Rules Seminar at American YC Acorn /Sapling Seminars Match Race RC Judge Seminar? Match Race Need min. 2 to help w/ SM 40 Shields Melges IRC Classics Laser SEAW SEAW SEAW SEAW DR Sched Umpire for Mex? Miami Boat Show IYRUS -NYYC Heineken Regatta on Heineken Bill/ Sonar Umpire for Mex? Jay's Sked Jag Cup Miami Weekends in Annapolis for Feb. -

International J/24 Class Association

NOTICE OF RACE 2016 Sonar Atlantic Coast Championship Noroton Viper Open NOROTON YACHT CLUB Darien, CT June 4-5, 2016 1 RULES 1.2 The Regatta will be governed by the rules as defined in the Racing Rules of Sailing (RRS). 1.3 Racing rules listed below will be changed as indicated. The changes will appear in full in the Sailing Instructions. The Sailing Instructions may also change other racing rules. a. RRS 63.7 will be replaced with: "If there is any conflict between the Sailing Instructions and this notice of race, the Sailing Instructions shall apply." b. RRS 61 will be changed to require a boat to inform a race committee finish boat immediately after finishing of its intention to protest another boat and provide the sail number of the protested boat. c. The US Sailing Prescriptions to rules 60, 63.2 and 63.4 will be deleted. 2 ADVERTISING Advertising is restricted as regulated by ISAF Regulation 20, Advertising Code. 3 ELIGIBILITY AND ENTRIES 4 The event is open to all competitors who meet the Sonar Class Association eligibility requirements (Article B12.00) or the Viper 640 Class Association rules. 4.2 Sonars: Eligible boats may enter by completing registration and paying the entry fee to Sonar Fleet 1. You can register online at the Sonar Class Association website at www.sonar.org. You may also obtain a registration form online, and mail it to: Bruce McArthur 123 Harbor Drive #209 Stamford, CT 06902 Phone: (203) 655-6665 Vipers: Eligible boats may enter using the Regatta Network link on the Viper website.