Automated Vehicle Electronic Control Unit (ECU) Sensor Location Using Feature-Vector Based Comparisons

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Bluecat™ 300 Brochure

Your LSI engine emission control just got easier! The BlueCAT™ 300 is a Retrofit Emissions Control System which has been verified by the California Air Resources Board (CARB) for installation on uncontrolled gaseous-fueled Large Spark-Ignition (LSI) engines. BlueCAT™ 300 systems control exhaust emissions and noise from industrial forklift trucks, floor care machinery, aerial lifts, ground support equipment, and other spark-ignition rich-burn (stoichiometric) engines. Nett Technologies’ BlueCAT™ 300 practically eliminates all of the major exhaust pollutants: Carbon Monoxide (CO) and Oxides of Nitrogen (NOx) emissions are reduced by over 90% and Hydrocarbons (HC) by over 80%. BlueCAT™ 300 three-way catalytic converters consist of a high-performance emissions control catalyst and an advanced electronic Air/Fuel Ratio Controller. The devices work together to optimize engine operation, fuel economy and control emissions. The controller also reduces fuel consumption and increases engine life. Nett's BlueCAT™ 300 catalytic muffler replaces the OEM muffler simplifying installation and saving time. The emission control catalyst is built into the muffler and its size is selected based on the displacement of the engine. Thousands of direct-fit designs are available for all makes/models of forklifts and other equipment. The BlueCAT™ 300 catalytic muffler matches or surpasses the noise attenuation performance of the original muffler with the addition of superior emissions reduction performance. Sold and supported globally, Nett Technologies Inc., develops and manufactures proprietary catalytic solutions that use the latest in diesel oxidation catalyst (DOC), diesel particulate filter (DPF), selective catalytic reduction (SCR), engine electronics, stationary engine silencer, exhaust system and exhaust gas dilution technologies. -

Engine Control Unit

Engine Control Unit. João Filipe Ferreira Vicente. Dissertation submitted for obtaining the degree in Master of Electronic Engineering, Instituto Superior Técnico. Abstract: This paper describes the design of a fully programmable, low cost ECU based on a standard electronic circuit based on a dsPIC30f6012A for the Honda CBR600 F4i engine used in the Formula Student IST car. The ECU must make use of all the temperature, pressure, position and speed sensors as well as the original injectors and ignition coils that are already available on the F4i engine. The ECU must provide the user access to all the maps and allow their full customization simply by connecting it to a PC. This will provide the user with the capability to adjust the engine’s performance to its needs quickly and easily. Keywords: Electronic Fuel Injection, Engine Control Unit, Formula Student. I. Introduction: This project is part of the Formula Student project being developed at Instituto Superior Técnico that for the European series of the Formula Student competition. The car used (Figure 1) has a fibreglass body and uses a Honda F4i engine taken from the Honda CBR 600. Figure 1 - FST03. II. Electronic Fuel Injection: The growing concern of fuel economy and lower emissions means that Electronic Fuel Injection (EFI) systems can be seen on most of the cars being sold today. EFI systems provide comfort and reliability to the driver by ensuring a perfect engine start under most conditions while lessening the impact on the environment by lowering exhaust gas emissions and providing a perfect combustion of the air-fuel -

05 NG Engine Technology.Pdf

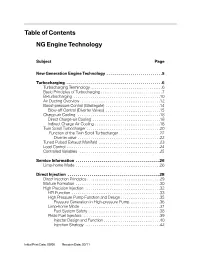

Table of Contents: NG Engine Technology. New Generation Engine Technology; Turbocharging; Turbocharging Terminology; Basic Principles of Turbocharging; Bi-turbocharging; Air Ducting Overview; Boost-pressure Control (Wastegate); Blow-off Control (Diverter Valves); Charge-air Cooling; Direct Charge-air Cooling; Indirect Charge Air Cooling; Twin Scroll Turbocharger; Function of the Twin Scroll Turbocharger; Diverter valve; Tuned Pulsed Exhaust Manifold; Load Control; Controlled Variables; Service Information; Limp-home Mode; Direct Injection; Direct Injection Principles; Mixture Formation; High Precision Injection; HPI Function; High Pressure Pump Function and Design; Pressure Generation in High-pressure Pump; Limp-home Mode; Fuel System Safety; Piezo Fuel Injectors; Injector Design and Function; Injection Strategy; Piezo Element; Injector Adjustment; Injector Control and Adaptation; Injector Adaptation; Optimization; HDE Fuel Injection; VALVETRONIC III; Phasing; Masking; Combustion Chamber Geometry; VALVETRONIC Servomotor; Function. Initial Print Date: 09/06. Revision Date: 03/11. NG Engine Technology. Model: All from 2007. Production: All. After completion of this module you will be able to: • Understand the technology used on BMW turbo engines • Understand basic turbocharging principles • Describe the benefits of twin Scroll Turbochargers • Understand the basics of second generation of direct injection (HPI) • Describe the benefits of HDE solenoid type direct injection • Understand the main differences between VALVETRONIC II and VALVETRONIC III. New Generation Engine Technology: In 2005, the first of the new generation 6-cylinder engines was introduced as the N52. -

Design of the Electronic Engine Control Unit Performance Test System of Aircraft

Aerospace. Article. Design of the Electronic Engine Control Unit Performance Test System of Aircraft. Seonghee Kho 1 and Hyunbum Park 2,*. 1 Department of Defense Science & Technology-Aeronautics, Howon University, 64 Howondae 3gil, Impi, Gunsan 54058, Korea. 2 School of Mechanical Convergence System Engineering, Kunsan National University, 558 Daehak-ro, Miryong-dong, Gunsan 54150, Korea. * Correspondence: Tel.: +82-(0)63-469-4729. Abstract: In this study, a real-time engine model and a test bench were developed to verify the performance of the EECU (electronic engine control unit) of a turbofan engine. The target engine is a DGEN 380 developed by the Price Induction company. The functional verification of the test bench was carried out using the developed test bench. An interface and interworking test between the test bench and the developed EECU was carried out. After establishing the verification test environments, the startup phase control logic of the developed EECU was verified using the real-time engine model which modeled the startup phase test data with SIMULINK. Finally, it was confirmed that the developed EECU can be used as a real-time engine model for the starting section of performance verification. Keywords: test bench; EECU (electronic engine control unit); turbofan engine. Citation: Kho, S.; Park, H. Design of the Electronic Engine Control Unit Performance Test System of Aircraft. Aerospace 2021, 8, 158. https://doi.org/10.3390/aerospace8060158. 1. Introduction: The EECU is a very important component in aircraft engines, and the verification test for numerous items should be carried out in its development process. Since it takes a lot of time and cost to carry out such verification test using an actual engine, and an expensive engine may be damaged or a safety hazard may occur, the simulator which virtually generates the same signals with the actual engine is essential [1]. -

Outboard Protection

Get Premium Outboard Protection. For True Peace of Mind. Passport Premier offers comprehensive, long-term engine package protection for your new or pre-owned vessel. Even entire engine assemblies are replaced if necessary. So you can enjoy your time on the water, knowing you are covered against costly repairs for years to come. Passport Premier lets you head out with confidence: • Long-term coverage on over 120 major engine parts • Covers overheating, even detonation, lots more • Repair reimbursement includes parts and labor • Locking in now offers assurance against inflation • Protection cost can be rolled into your boat financing • All benefits are transferable for higher resale value With expert service at any manufacturer authorized facility and plan management by Brunswick, a top U.S. boat and engine seller, it’s coverage you can truly count on. Comprehensive Extended Protection Benefits Non-Defective Engine Breakdown Claim Payment Benefits Service Assist Engine Sensor Failures Carbonized Rings Lubricants Hoses On-water towing Pick Up/delivery Thermostat Failures Heat Collapsed Rings Coolants Engine Tuning Hoist/lift-out Lake Test Overheating* Scored Pistons Belts Taxes Haul Out Sea Trial Preignition Scored Cylinders Spark Plugs Shop Supplies Dockside repair call Detonation Heat Cracked Heads Clamps Haul Out Burnt Valves Warped Heads Filters Transfer Provision Bent Valves Heat Cracked Block Tuliped Valves All service contract plan benefits transferable on new boats – *Any overheating conditions created by raw water pump and/or impeller -

SURVEY on MULTI POINT FUEL INJECTION (MPFI) ENGINE Deepali Baban Allolkar*1, Arun Tigadi 2 & Vijay Rayar3 *1 Department of Electronics and Communication, KLE Dr

ISSN: 2277-9655 [Allolkar* et al., 7(5): May, 2018] Impact Factor: 5.164 IC™ Value: 3.00 CODEN: IJESS7 IJESRT INTERNATIONAL JOURNAL OF ENGINEERING SCIENCES & RESEARCH TECHNOLOGY SURVEY ON MULTI POINT FUEL INJECTION (MPFI) ENGINE Deepali Baban Allolkar*1, Arun Tigadi 2 & Vijay Rayar3 *1 Department of Electronics and Communication, KLE Dr. M S Sheshgiri College of Engineering and Technology, India. 2,3Assistant Professor, Department of Electronics and Communication, KLE Dr. M S Sheshgiri College of Engineering and Technology, India. DOI: 10.5281/zenodo.1241426 ABSTRACT The Multi Point Fuel Injection (MPFI) is a system or method of injecting fuel into internal combustion engine through multi ports situated on intake valve of each cylinder. It delivers an exact quantity of fuel in each cylinder at the right time. The amount of air intake is decided by the car driver by pressing the gas pedal, depending on the speed requirement. The air mass flow sensor near throttle valve and the oxygen sensor in the exhaust sends signal to Electronic control unit (ECU). ECU determines the air fuel ratio required, hence the pulse width. Depending on the signal from ECU the injectors inject fuel right into the intake valve. Thus the multi-point fuel injection technology uses individual fuel injector for each cylinder, there is no gas wastage over time. It reduces the fuel consumption and makes the vehicle more efficient and economical. KEYWORDS: Multi point fuel injection (MPFI), Cylinder, Gas pedal, Throttle valve, Electronic control Unit (ECU). I. INTRODUCTION Petrol engines used carburetor for supplying the air fuel mixture in correct ratio but fuel injection replaced carburetors from the 1980s onward. -
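The chain described in this abstract (measured air, required air-fuel ratio, injector pulse width) can be illustrated with a short Python sketch. It is not taken from the paper: the function name, its parameters, the constant injector flow rate, and the neglect of injector opening delay are all simplifying assumptions made here for illustration.

```python
def injector_pulse_width_ms(air_mass_mg, target_afr, injector_flow_mg_per_ms):
    """Estimate how long an injector must stay open for one intake event.

    Hypothetical helper: assumes a constant injector flow rate and ignores
    injector opening delay, fuel pressure changes, and wall wetting.
    """
    if air_mass_mg < 0:
        raise ValueError("air mass must not be negative")
    if target_afr <= 0 or injector_flow_mg_per_ms <= 0:
        raise ValueError("air-fuel ratio and injector flow must be positive")
    # Fuel mass needed to reach the requested air-fuel ratio (mass of air / mass of fuel).
    fuel_mass_mg = air_mass_mg / target_afr
    # Time the injector must stay open to deliver that fuel mass.
    return fuel_mass_mg / injector_flow_mg_per_ms


# Example: 294 mg of air at an air-fuel ratio of 14.7 needs 20 mg of fuel,
# which a 4 mg/ms injector delivers in about 5 ms.
pulse_ms = injector_pulse_width_ms(294.0, 14.7, 4.0)
```

A production ECU would typically look these quantities up in calibrated maps and correct them with sensor feedback; the sketch only shows the proportional relationship between air mass, air-fuel ratio, and pulse width.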

Research Article Development of an Integrated Cooling System Controller for Hybrid Electric Vehicles

Hindawi Journal of Electrical and Computer Engineering Volume 2017, Article ID 2605460, 9 pages https://doi.org/10.1155/2017/2605460 Research Article Development of an Integrated Cooling System Controller for Hybrid Electric Vehicles Chong Wang,1 Qun Sun,1 and Limin Xu2 1 School of Mechanical and Automotive Engineering, Liaocheng University, Liaocheng, China 2 School of International Education, Liaocheng University, Liaocheng, China Correspondence should be addressed to Chong Wang. Received 14 January 2017; Accepted 15 March 2017; Published 10 April 2017 Academic Editor: Ephraim Suhir Copyright © 2017 Chong Wang et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. A hybrid electrical bus employs both a turbo diesel engine and an electric motor to drive the vehicle in different speed-torque scenarios. The cooling system for such a vehicle is particularly power costing because it needs to dissipate heat from not only the engine, but also the intercooler and the motor. An electronic control unit (ECU) has been designed with a single chip computer, temperature sensors, DC motor drive circuit, and optimized control algorithm to manage the speeds of several fans for efficient cooling using a nonlinear fan speed adjustment strategy. Experiments suggested that the continuous operating performance of the ECU is robust and capable of saving 15% of the total electricity comparing with ordinary fan speed control method. 1. Introduction A hybrid electrical vehicle (HEV) employs both a turbo diesel engine and an electric motor to drive the vehicle in different speed-torque scenarios. An effective thermomanagement [...] according to water temperature. Some recent studies paid more attention to the nonlinear engine and radiator thermocharacteristics and the corresponding nonlinear PWM control techniques [6–9], which so far has not yielded mass produced equipment. -

Review Paper on Vehicle Diagnosis with Electronic Control Unit

Volume 3, Issue 2, February – 2018 International Journal of Innovative Science and Research Technology ISSN No: 2456-2165 Review Paper on Vehicle Diagnosis with Electronic Control Unit. Rucha Pupala (Mechanical, Sinhgad Institute of Technology and Science, Pune, India) and Jalaj Shukla (Mechanical, Sinhgad Institute of Technology and Science, Pune, India). Abstract: An Automobile vehicle is prone to various faults due to more complex integration of electro-mechanical components. Due to the increasing stringency of emission norms improved and advanced electronic systems have been widely used. When different faults occur it is very difficult for a technician who does not have sufficient knowledge to detect and repair the electronic control system. However such services in the after sales network are crucial to the brand value of automotive manufacturer and client satisfaction. Development of a fast, reliable and accurate intelligent system for fault diagnosis of automotive engine is greatly urged. In this paper a new approach to Off-Board diagnostic system for automotive [...] stringency of exhaust gas legislation, the electronically controlled system has been widely used in engines for performance optimization of the engine as well as vehicle propelled by the engine [1]. The faults in the automotive engine may lead to increased emissions and more fuel consumption with engine damage. These effects can be prevented if faults are detected and treated in timely manner. A number of fault monitoring and diagnostic methods have been developed for automotive applications. The existing systems typically implement fault detection to indicate that something is wrong in the monitored system, fault separation to determine the exact location of the fault i.e., the component which is faulty, and fault identification to determine the magnitude of the fault. -

Virtual Sensor for Automotive Engine to Compensate for the Map, Engine Speed Sensors Faults

Virtual Sensor For Automotive Engine To Compensate For The Map, Engine Speed Sensors Faults By Sohaub S.Shalalfeh Ihab Sh.Jaber Ahmad M.Hroub Supervisor: Dr. Iyad Hashlamon Submitted to the College of Engineering In partial fulfillment of the requirements for the degree of Bachelor degree in Mechatronics Engineering Palestine Polytechnic University March- 2016 Palestine Polytechnic University Hebron –Palestine College of Engineering and Technology Mechanical Engineering Department Project Name Virtual sensor for automotive engine to compensate for the map, engine speed sensors faults Project Team Sohaub S.shalalfeh Ihab Sh.Jaber Ahmad M.Hroub According to the project supervisor and according to the agreement of the testing committee members, this project is submitted to the Department of Mechanical Engineering at College of Engineering in partial fulfillments of the requirements of the Bachelor’s degree. Supervisor Signature ………………………….. Committee Member Signature ……………………… ……………………….. …………………… Department Head Signature ………………………………… I Dedication To our parents. To all our teachers. To all our friends. To all our brothers and sisters. To Palestine Polytechnic University. Acknowledgments We could not forget our families, who stood by us, with their support, love and care for our whole lives; they were with us with their bodies and souls, believed in us and helped us to accomplish this project. We would like to thank our amazing teachers at Palestine Polytechnic University, to whom we would carry our gratitude our whole life. Special thanks -

Closed Loop Engine Management System

Information Sheet #105 CLOSED-LOOP CONTROL SYSTEMS ON GASEOUS GENERATORS. Buckeye Power Sales: Reliable Power Professionals Since 1947. Frequently the engine used to drive the generator in a standby or prime power generator system is a 4-stroke spark ignition (SI) engine. While many smaller portable generators use SI engines fueled by gasoline, the majority of SI engine driven generators above 10kW are fitted with SI engines fueled by gaseous fuel, either natural gas (NG), or liquid petroleum gas (LPG). The majority of gaseous powered SI engines within a generator system are frequently referred to as having a Closed-Loop Engine Control System. In understanding necessary maintenance required to maintain optimum operation and performance of an SI engine using a closed-loop system, it is important to be aware of all the components within the system, their functions, and the advantages of a closed-loop system. 1.0 WHAT IS A CLOSED-LOOP SYSTEM: The term “loop” in a control system refers to the path taken through various components to obtain a desired output. Used in conjunction with the word “closed” it refers to sensors measuring actual output along the path against required output. The various outputs measured along the path, or loop, are referred to as feedback signals. In a closed-loop system, the feedback along the path constantly enables the engine control system to adjust and ensure the right output is maintained as variations in ambient temperature, load, altitude, and humidity influence combustion and required output. So, in brief, closed-loop systems employ sensors in the loop to constantly provide feedback so the ECU can adjust inputs to obtain the required output. -
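The feedback idea summarized in this excerpt can be sketched in a few lines of Python. This is a toy illustration, not the control law of any real engine controller: the plant model (output equals command plus a constant disturbance), the integral gain `ki`, and all names are assumptions introduced here.

```python
def run_closed_loop(target, disturbance, steps=200, ki=0.2):
    """Drive a toy plant to `target` using integral feedback.

    Assumed plant: measured output = controller command + disturbance.
    The disturbance stands in for effects such as load or ambient changes.
    """
    command = 0.0
    output = command + disturbance
    for _ in range(steps):
        # Feedback signal: required output minus measured output.
        error = target - output
        # The controller nudges its command in proportion to the error.
        command += ki * error
        # The toy plant responds to the new command.
        output = command + disturbance
    return output


# The loop settles on the target even though the controller never
# measures the disturbance directly; it only sees the output error.
settled = run_closed_loop(target=1.0, disturbance=-0.3)
```

With this plant the error shrinks by a factor of `1 - ki` on each pass, so any gain between 0 and 1 converges; an open-loop controller issuing a fixed command would instead stay offset by the full disturbance.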

Design of Electronically Controlled Fuel Injection System for Carburetor Based Engine

IJRET: International Journal of Research in Engineering and Technology eISSN: 2319-1163 | pISSN: 2321-7308 DESIGN OF ELECTRONICALLY CONTROLLED FUEL INJECTION SYSTEM FOR CARBURETOR BASED ENGINE Abhishek Kumar1, Abhijeet Kumar2, Ujjwal Ashish3, Ashok B4 1B.Tech (EEE), 3rd year, VIT University, Vellore, India 2B.Tech (ECE), 3rd year, VIT University, Vellore, India 3B.Tech (EEE), 3rd year, VIT University, Vellore, India 4Assistant Professor, SMBS, VIT University, Vellore, India Abstract: In the modern world, automotive electronics play an important role in the manufacturing of any passenger car. Automotive electronics consists of advanced sensors, control units, and “mechatronic” actuators, which make up increasingly complex, networked vehicle systems. Electronic fuel injection (EFI) is the most common example of automotive electronics application in Powertrain. An EFI system is basically developed for the control of injection timing and fuel quantity for better fuel efficiency and power output. In this paper, we will explain various types of fuel injection system that are used most commonly nowadays and will also explain the various parameters considered when calculating the base fuel quantity at runtime (using the speed density method). We will explain the major difference between speed density method and alpha-n method and in the end, we will also show the MATLAB/Simulink model of fuel injection system for Single cylinder four stroke engine. Keywords: Air Fuel Ratio, Fuel Injection System, Speed Density Method, Engine Management System 1. INTRODUCTION Engine management system (EMS) is an essential part of any vehicle, which controls and monitors the engine. [...] The primary difference between carburetors and fuel injection system is that fuel injection atomizes through a -

Engine Control Unit MS 12 Engine Control Unit MS 12

Bosch Motorsport | Engine Control Unit MS 12. www.bosch-motorsport.com • 12 injection output stages • For piezo injectors • 78 data inputs. The MS 12 is the high-end ECU for Diesel engines. This ECU offers 12 Piezo injection power stages for use in up to a 12 cylinder engine. Various engine and chassis parameters can be measured with a high number of input channels. All measured data can be transferred via FireWire interface to an optional flash card data logger. Gear box control strategies are optional. Optional function packages available. Interface to Bosch Data Logging System. Max. vibration: Vibration profile 1 (see Appendix or www.bosch-motorsport.com). Technical Specifications. Application: Engine layout: Max. 12 cyl.; Injector type: Piezo injectors; Control strategy: Quantity based; Injection timing: 2 pilot injections, 1 main injection, 1 post injection; Turbo boost control (incl. VTG): Single or Twin-Turbo; Lambda measurement; Traction control; Launch control; Gear cut for sequential gearbox; Gearbox control; Speed limiter. Mechanical Data: Aluminum housing; 5 connectors in motorsport technology with high pin density, 242 pins; Each connector individually filtered; Vibration damped circuit boards; 8 housing fixation points; Size: 240 x 200 x 57 mm; Protection Classification: IP67 to DIN 40050, Section 9, Issue 2008; Weight: 2,500 g; Temperature range: -20 to 85°C. AS 6-18-35 SB. Mating Connector III: F 02U 000 475-01, AS 6-18-35 SC. Electrical Data: Power consumption w/o inj.: Approx. 5 W at 14 V; Power consumption at 6,500 rpm: Max.