Computational Star-Tracking Compensation in Astrophotography



Recommended publications


Name: NAAP – the Rotating Sky 1/11

Name: The Rotating Sky – Student Guide I. Background Information Work through the explanatory material on The Observer, Two Systems – Celestial, Horizon, the Paths of Stars, and Bands in the Sky. All of the concepts that are covered in these pages are used in the Rotating Sky Explorer and will be explored more fully there. II. Introduction to the Rotating Sky Simulator • Open the Rotating Sky Explorer The Rotating Sky Explorer consists of a flat map of the Earth, Celestial Sphere, and a Horizon Diagram that are linked together. The explanations below will help you fully explore the capabilities of the simulator. • You may click and drag either the celestial sphere or the horizon diagram to change your perspective. • A flat map of the earth is found in the lower left which allows one to control the location of the observer on the Earth. You may either drag the map cursor to specify a location, type in values for the latitude and longitude directly, or use the arrow keys to make adjustments in 5° increments. You should practice dragging the observer to a few locations (North Pole, intersection of the Prime Meridian and the Tropic of Capricorn, etc.). • Note how the Earth Map, Celestial Sphere, and Horizon Diagram are linked together. Grab the map cursor and slowly drag it back and forth vertically changing the observer’s latitude. Note how the observer’s location is reflected on the Earth at the center of the Celestial Sphere (this may occur on the back side of the earth out of view). • Continue changing the observer’s latitude and note how this is reflected on the horizon diagram. -

The Definitive Guide to Shooting Hypnotic Star Trails

The Definitive Guide to Shooting Hypnotic Star Trails www.photopills.com Mark Gee proves everyone can take contagious images 1 Feel free to share this eBook © PhotoPills December 2016 Never Stop Learning A Guide to the Best Meteor Showers in 2016: When, Where and How to Shoot Them How To Shoot Truly Contagious Milky Way Pictures Understanding Golden Hour, Blue Hour and Twilights 7 Tips to Make the Next Supermoon Shine in Your Photos MORE TUTORIALS AT PHOTOPILLS.COM/ACADEMY Understanding How To Plan the Azimuth and Milky Way Using Elevation The Augmented Reality How to find How To Plan The moonrises and Next Full Moon moonsets PhotoPills Awards Get your photos featured and win $6,600 in cash prizes Learn more+ Join PhotoPillers from around the world for a 7 fun-filled days of learning and adventure in the island of light! Learn More Index introduction 1 Quick answers to key Star Trails questions 2 The 21 Star Trails images you must shoot before you die 3 The principles behind your idea generation (or diverge before you converge) 4 The 6 key Star Trails tips you should know before start brainstorming 5 The foreground makes the difference, go to an award-winning location 6 How to plan your Star Trails photo ideas for success 7 The best equipment for Star Trails photography (beginner, advanced and pro) 8 How to shoot single long exposure Star Trails 9 How to shoot multiple long exposure Star Trails (image stacking) 10 The best star stacking software for Mac and PC (and how to use it step-by-step) 11 How to create a Star Trails vortex (or -

A Guide to Smartphone Astrophotography National Aeronautics and Space Administration

A Guide to Smartphone Astrophotography. National Aeronautics and Space Administration. Dr. Sten Odenwald, NASA Space Science Education Consortium, Goddard Space Flight Center, Greenbelt, Maryland. Cover designs and editing by Abbey Interrante. Cover illustrations. Front: Aurora (Elizabeth Macdonald), moon (Spencer Collins), star trails (Donald Noor), Orion nebula (Christian Harris), solar eclipse (Christopher Jones), Milky Way (Shun-Chia Yang), satellite streaks (Stanislav Kaniansky), sunspot (Michael Seeboerger-Weichselbaum), sun dogs (Billy Heather). Back: Milky Way (Gabriel Clark). Two front cover designs are provided with this book. To conserve toner, begin document printing with the second cover. This product is supported by NASA under cooperative agreement number NNH15ZDA004C. Table of Contents: Introduction; How to use this book; 1.0 Light Pollution; 2.0 Cameras

To Photographing the Planets, Stars, Nebulae, & Galaxies

Astrophotography Primer: Your FREE Guide to photographing the planets, stars, nebulae, & galaxies. Akira Fujii. Everyone loves to look at pictures of the universe beyond our planet; Astronomy Picture of the Day (apod.nasa.gov) is one of the most popular websites ever. And many people have probably wondered what it would take to capture photos like that with their own cameras. The good news is that astrophotography can be incredibly easy and inexpensive. Even point-and-shoot cameras and cell phones can capture breathtaking skyscapes, as long as you pick appropriate subjects. On the other hand, astrophotography can also be incredibly demanding. Close-ups of tiny, faint nebulae and galaxies require expensive equipment and lots of time, patience, and skill. Between those extremes, there’s a huge amount that you can do with a digital SLR or a simple webcam. The key to astrophotography is to have realistic expectations, and to pick subjects that are appropriate to your equipment, and vice versa. To help you do that, we’ve collected four articles from the 2010 issue of SkyWatch, Sky & Telescope’s annual magazine. Every issue of SkyWatch includes a how-to guide to astrophotography and visual observing as well as a summary of the year’s best astronomical events. You can order the latest issue at SkyandTelescope.com/skywatch. In the last analysis, astrophotography is an art form. It requires the same skills as regular photography: visualization, planning, framing, experimentation, and a bit of luck.

Making Star Trail Images During Winter

Making Star Trail Images during Winter By Jeff Kowalke What I will be covering… • Preparation – Equipment, Scouting, Timing • What Camera Settings to Use • How to Focus • How to create compositions • Setup • Post Processing Remember, this is how I do it! • There are countless ways to do things. The items that we talk about today are just how I’ve figured out how to do them. • Most photographers, including myself, are always looking for better ways to accomplish tasks so, if after this presentation, you come up with a way to do something better, please share! That includes any aspect of my talk tonight! Equipment • Clothing – Dress for the weather! • Camera • Canon 70D with Canon EF-S 10-18mm f/4.5-5.6 IS STM • Differences between fast and slow lens • Great Guide for Astrophotography and Equipment • Lonely Speck – How to pick a Lens for Milky Way Photography - http://www.lonelyspeck.com/lenses-for-milky-way-photography./ • Slower lens = star trails • Battery Pack • Contains two batteries = more time out taking star trails, even with the cold • Extra batteries • Tripod • Appreciate my lighter tripod that I hike around with to find a good spot • Intervalometer – extra batteries • Storm Cover • Micro-fiber cloths Misc. Equipment • Tarp • Driving – Car + Mummy Bag or Chair + Tarp + Mummy Bag • Hiking – Tarp + Mummy Bag • Handwarmers – keeping lens and battery pack warm • iPad / Book • Bivvy Bag • Loupe Before you leave checklist • Bundled up? • All extra batteries for camera + intervalometer in pocket near your body – keeps them warm until -

An Approach to Ground Based Space Surveillance of Geostationary On-Orbit Servicing Operations

Acta Astronautica 112 (2015) 56–68 Contents lists available at ScienceDirect Acta Astronautica journal homepage: www.elsevier.com/locate/actaastro An approach to ground based space surveillance of geostationary on-orbit servicing operations Robert (Lauchie) Scott a, Alex Ellery b a Defence R&D Canada, 3701 Carling Ave, Ottawa, Ontario, Canada K1A 0Z4 b Carleton University, Department of Aerospace and Mechanical Engineering, Ottawa, Ontario, Canada article info abstract Article history: On Orbit Servicing (OOS) is a class of dual-use robotic space missions that could potentially Received 5 November 2014 extend the life of orbiting satellites by fuel replenishment, repair, inspection, orbital Received in revised form maintenance or satellite repurposing, and possibly reduce the rate of space debris generation. 5 February 2015 OOS performed in geostationary orbit poses a unique challenge for the optical space Accepted 7 March 2015 surveillance community. Both satellites would be performing proximity operations in tight Available online 18 March 2015 formation flight with separations less than 500 m making atmospheric seeing (turbulence) a Keywords: challenge to resolving a geostationary satellite pair when viewed from the ground. The two Satellite tracking objects would appear merged in an image as the resolving power of the telescope and Space surveillance detector, coupled with atmospheric seeing, limits the ability to resolve the two objects. This Space situational awareness poses an issue for obtaining orbital data for conjunction flight safety or, in matters pertaining On-orbit servicing Differential angles tracking to space security, inferring the intent and trajectory of an unexpected object perched very close to one's satellite asset on orbit. -

Cheryl Wamboldt Volume 11 Issue 1

Editor: Cheryl Wamboldt Volume 11 Issue 1 October 2017 That old law about "an eye for an eye" leaves everybody blind. Rev. Martin Luther King Jr. (Activist, 1929-1968) Executive 2017/2018 President's Message Our new season has started well. We had a very successful fundraiser. I especially want to thank Cheryl Swain for all her hard work and planning for the event. Thank you to the membership for great support for the bake sale and print raffle. We hope everyone has enjoyed and gained some knowledge from our speakers. There are several outing locations coming up this fall so I hope you are able to go along to some. It is a good opportunity to get new images and get to know other members. If you have any ideas for outing locations please speak to Bob Jones or Lawrence Robson. We hope you will find the upcoming meetings informative and interesting. Please speak to anyone on the executive if you have questions regarding submitting images, prints or any other photography or club questions. Heather Halfyard, co-president Our special thanks to Cheryl Swain for taking a dream and making it a reality. Great job, Cheryl, as the Craft Show was a great success. Also special thanks to the hard workers behind the scenes. On the next pages I have shared photos of the Craft Show. Artisan Craft Sale I would like to take this opportunity to say thank you to everyone for their participation in helping to make this event a success!! You all came through in spades for the Print Raffle and Bake Sale table with your generous donations and all your efforts to package and label your baked goods. -

Dave Morrow's Night Sky Photography Guides

Dave Morrow’s Night Sky Photography Guides This guide contains PDF copies of my Milky Way, Star Trails & Northern Lights Photography Guides, which can be found on my website. PDFs allow you to easily take the guides out shooting with you, by downloading them to your phone. Many photographers have asked for a complete resource, so I also wrote a book on the topic, which goes into even more detail. Start with this guide & if you want to learn more, download the ebook. START HERE | LEARN PHOTOGRAPHY | VIEW PHOTOS | WORKSHOPS & TOURS www.DaveMorrowPhotography.com Page 1 My 170 page eBook, Photograph the Night Sky, teaches every skill, technique and workflow for Milky Way, Northern Lights, Moon, Star Trail and Night Sky Photography. You Can Download Photograph the Night Sky for 10$ I also provide Star & Night Sky Photography Workshops & Tours. Enjoy the Guide & Happy Shooting, Dave TABLE OF CONTENTS Click the titles & jump to specific sections. Milky Way Photography Guide 4 Star Photography Camera Equipment 4 Minimum Requirements 4 Planning Your Night Photography Shoot 6 Moon Phase, Dark Skies & Weather 6 Learn The Photographer’s Ephemeris & Google Earth 7 Locate the Milky Way - Stellarium 8 Focusing Your Lens - Milky Way & Night Photography 8 Focusing Your Lens at Night - Camera Technique 9 Method 1: Preset Your Focus Point During the Day 9 Camera Settings - Milky Way & Star Photography 10 Exposure Time Settings - Milky Way Photography 12 The 500 Rule Equation & Exposure Chart 14 Step By Step - Calculating the -
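The "500 Rule" named in the contents above is a widely used rule of thumb for the longest exposure that keeps stars looking like points rather than short trails. The excerpt does not spell the equation out, so the sketch below assumes the usual form, 500 divided by the full-frame-equivalent focal length; the function name and parameters are hypothetical and not taken from the guide.

```python
def max_exposure_seconds(focal_length_mm, crop_factor=1.0, rule_constant=500.0):
    """Rule-of-thumb longest exposure (seconds) before stars visibly trail.

    Assumed form of the "500 Rule": constant / (focal length * crop factor).
    The constant is a convention, not a physical limit; some photographers
    use a smaller value for high-resolution sensors.
    """
    if focal_length_mm <= 0 or crop_factor <= 0:
        raise ValueError("focal length and crop factor must be positive")
    return rule_constant / (focal_length_mm * crop_factor)


# A 24 mm lens on a full-frame body, and a 16 mm lens on a 1.6x crop body.
full_frame_limit = max_exposure_seconds(24)
crop_body_limit = max_exposure_seconds(16, crop_factor=1.6)
print(f"24 mm full frame: {full_frame_limit:.1f} s")
print(f"16 mm on 1.6x crop: {crop_body_limit:.1f} s")
```

Both cases land near 20 seconds, so the result is best treated as a starting point to verify by zooming in on a test frame.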

Photographing the Night Sky DSLR Astrophotography

Photographing the Night Sky DSLR Astrophotography Phil North FODCC Agenda • Introduction • Moonlit Landscapes • Night Sky Photography – Milky Way – Star Trails – Time Lapse • Some Examples • Coffee Break • Photoshop Techniques 26 October 2015 © Phil North FODC 2 What is Astrophotography • Astrophotography is a large sub-discipline in amateur astronomy and is the process of producing photographs of objects in the universe and large areas of the sky. • It can be done with standard photography equipment to specialised equipment requiring significant financial investment. • Astrophotography is relatively easy in the beginning stages but becomes difficult at advanced levels. • Advance level requires use of telescopes, tracking mounts and specialised CCD cameras 26 October 2015 © Phil North FODC 3 Types of Astrophotography • Deep space – images which are taken with use of a telescope of objects beyond our own solar system. – These are those stunning images you see of distant galaxies and nebulae, and this is the most technical and hardest form of astrophotography. • Wide Field – this is astrophotography that is taken with a DSLR camera and wide angled lens. – These are the images you see that include a starry sky or star trails above a landscape. This is the most accessible form of astrophotography, and are the kind I practice and will be talking about. – Time-lapse Astrophotography – is just an extension on Wide Field Astrophotography. The only difference is you take lots of exposures over time and then combine the frames to make a time-lapse video. The same technique can be used to make a star trail image. 26 October 2015 © Phil North FODC 4 What You Need • A camera that has manual exposure mode • A bulb setting for exposures longer than 30s • A remote control or a shutter release cable in order to minimise shaking the camera when taking the pictures. -
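The slides above say that many exposures can be combined into a single star trail image but do not say how. One common approach, assumed here, is a "lighten" blend that keeps the brightest value seen at each pixel across all frames. The sketch below uses small nested lists as stand-ins for grayscale images; `lighten_stack` is a hypothetical helper, not part of any stacking program.

```python
def lighten_stack(frames):
    """Combine equally sized grayscale frames by keeping the brightest value per pixel."""
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    # Start from a copy of the first frame so the inputs are not modified.
    stacked = [row[:] for row in frames[0]]
    for frame in frames[1:]:
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all frames must share the same dimensions")
        for y in range(height):
            for x in range(width):
                if frame[y][x] > stacked[y][x]:
                    stacked[y][x] = frame[y][x]
    return stacked


# Three tiny 1x4 "frames" in which a star drifts one pixel per exposure.
frames = [
    [[200, 10, 10, 10]],
    [[10, 200, 10, 10]],
    [[10, 10, 200, 10]],
]
trail = lighten_stack(frames)
print(trail)
```

Because the dark sky background never exceeds the star's brightness, the star's successive positions accumulate into a continuous trail while the background stays dark.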

Astrophotography Handbook for DSLR Cameras

Astrophotography Handbook for DSLR Cameras Michael K. Miller Oak Ridge, TN Quick Start Guide The three most common forms of wide-angle astrophotography with DSLR cameras adjust red values DLSR cameras Lens Modes ISO, Post as necessary for RAW preferred NR noise reduction aperture, processing proper exposure shutter speed Star trails Full frame better Wide angle M mode, ISO 400, Combine Point N for circles 16-50mm Manual focus Widest f-stop, frames in Remote shutter e.g., 24 mm at in 30 min.- 2h Photoshop release (hold or lock (16 mm for a crop in multiple 20 -30s down release) Liveview, (see slide 30) sensor camera) shots (camera on CL or intervalometer set NR OFF continuous low), Star trails on 20s (LONG) or BULB setting for exposure time intervalometer Stars/Milky Full frame better Wide angle M mode, ISO 400-1600, Normal Way Remote shutter 16-50mm Manual focus Widest f-stop, release e.g., 24 mm at in 10-20s max. (16 mm for a crop Liveview, Single shot, S Stars sensor camera) NR ON The moon Crop factor Longest focal Spot meter, ISO 200, Normal cameras better length: Spot focus, f/5.6, Remote shutter 400-500mm Auto focus ~1/1000s, Moon release on moon Single shot, S The explanations for these settings are discussed in the following slides Tripod is required for all these celestial objects; remove lens filters, use lens hoods 2 Types of Astrophotography Wide-field, or landscape, astrophotography - photographs of the night sky revealing the stars and galaxies, including the Milky Way, that are acquired with DSLR and other cameras with wide-angle lenses with focal lengths shorter than roughly 35 mm. -

Exploring Time

Exploring Time Learn How to Create Your Own Stunning Time-lapses Dr. Mike Shaw Bryan Peterson School of Photography [email protected] Last Week – Ramped Time-Lapses You will learn: • What is a time-lapse • What equipment is needed, best camera settings • How to create your input files • How to assemble your input files into time-lapses • How to cope with things that often go wrong • Advanced methods of assembling time-lapses • Advanced time-lapse subjects (day-to-night, astro, etc.) • How to include motion Week 4 – Advanced Topics You will learn: • What is a time-lapse • What equipment is needed, best camera settings • How to create your input files • How to assemble your input files into time-lapses • How to cope with things that often go wrong • Advanced methods of assembling time-lapses • Advanced time-lapse subjects (day-to-night, astro, etc.) • How to include motion Advanced time-lapse topics Methods Starbursts Smoothing Subjects Milky Way Aurora Borealis Moonrise Motion Slide 3D Starburst time-lapse “Shooting Star” Time-lapses 1) Sequence of starry sky images can be used to make a star trail image 2) Star trail option in StarStax: “comet mode” 3) Option: “Save intermediate files” 4) Use intermediate files to create a shooting star time-lapse! Use StarStax to convert input files original images Starburst star trail image “comet mode” intermediate files Simply use these files for time-lapse! Can combine original/starbust images Advanced time-lapse topics Methods Starbursts Smoothing Subjects Milky Way Aurora Borealis Moonrise -

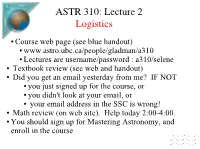

ASTR 310: Lecture 2 Logistics

ASTR 310: Lecture 2 Logistics ● Course web page (see blue handout) ● www.astro.ubc.ca/people/gladman/a310 ● Lectures are username/password : a310/selene ● Textbook review (see web and handout) ● Did you get an email yesterday from me? IF NOT ● you just signed up for the course, or ● you didn't look at your email, or ● your email address in the SSC is wrong! ● Math review (on web site). Help today 2:00-4:00. ● You should sign up for Mastering Astronomy, and enroll in the course Mastering Astronomy intro www.masteringastronomy.com New Student Access code in book or from bookstore or online (see course web page) MAKE SURE your UBC ID goes in Join course : a310ubc2011sep Assignments 2 and 3, due 6 AM Monday Assign. 1 not for credit, do this weekend. ASTR 310 The routine 1) Make note of readings for next class See course web page for the page number DO the readings, then look for the quiz 2) The Mastering Astronomy quiz goes live 2 PM (on each day there is a lecture) 1) Reading quiz appears at end of each class This is the material to be done NEXT lecture 2) Due at *6 AM*; this due time will be the norm... 3) I adapt lectures to places you have troubles (this is called “Just in Time Teaching”) Why do we do this? On the questionnaire, many students said something like: “ I've heard that if you do the readings and keep up this is a course that is not too hard to get a good grade in.” This is CORRECT! We've actually SHOWN this makes a big difference! Comparison using two methods for this course.