“How to Be the Steward of Your Idea, W/Affectiva's Rana El Kaliouby”

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

CITY MOOD How Does Your City Feel?

CITY MOOD: how does your city feel? Luisa Fabrizi. Interaction Design, One-year Master, 15 Credits. Master Thesis, August 2014. Supervisor: Jörn Messeter. Thesis Project I, Interaction Design Master 2014, Malmö University. Luisa Fabrizi [email protected] INDEX: 01. INDEX; 02. ABSTRACT; 03. INTRODUCTION; 04. METHODOLOGY; 05. THEORETICAL FRAMEWORK; 06. DESIGN PROCESS; 07. GENERAL CONCLUSIONS; 08. FURTHER IMPLEMENTATIONS; 09. APPENDIX. 02. -

ARCHITECTS of INTELLIGENCE for Xiaoxiao, Elaine, Colin, and Tristan ARCHITECTS of INTELLIGENCE

ARCHITECTS OF INTELLIGENCE: THE TRUTH ABOUT AI FROM THE PEOPLE BUILDING IT. MARTIN FORD. For Xiaoxiao, Elaine, Colin, and Tristan. Copyright © 2018 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. Acquisition Editors: Ben Renow-Clarke. Project Editor: Radhika Atitkar. Content Development Editor: Alex Sorrentino. Proofreader: Safis Editing. Presentation Designer: Sandip Tadge. Cover Designer: Clare Bowyer. Production Editor: Amit Ramadas. Marketing Manager: Rajveer Samra. Editorial Director: Dominic Shakeshaft. First published: November 2018. Production reference: 2201118. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78913-151-2. www.packt.com Contents: Introduction; A Brief Introduction to the Vocabulary of Artificial Intelligence; How AI Systems Learn; Yoshua Bengio; Stuart J. -

“Excavating AI” Re-Excavated: Debunking a Fallacious Account of the JAFFE Dataset Michael Lyons

“Excavating AI” Re-excavated: Debunking a Fallacious Account of the JAFFE Dataset. Michael Lyons. To cite this version: Michael Lyons. “Excavating AI” Re-excavated: Debunking a Fallacious Account of the JAFFE Dataset. 2021. hal-03321964. HAL Id: hal-03321964 https://hal.archives-ouvertes.fr/hal-03321964 Preprint submitted on 18 Aug 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution - NonCommercial - NoDerivatives 4.0 International License. Michael J. Lyons, Ritsumeikan University. Abstract: Twenty-five years ago, my colleagues Miyuki Kamachi and Jiro Gyoba and I designed and photographed JAFFE, a set of facial expression images intended for use in a study of face perception. In 2019, without seeking permission or informing us, Kate Crawford and Trevor Paglen exhibited JAFFE in two widely publicized art shows. In addition, they published a nonfactual account of the images in the essay “Excavating AI: The Politics of Images in Machine Learning Training Sets.” The present article recounts the creation of the JAFFE dataset and unravels each of Crawford and Paglen’s fallacious statements. -

Playing God? Towards Machines That Deny Their Maker

Lecture with Prof. Rosalind Picard, Massachusetts Institute of Technology (MIT) Playing God? Towards Machines that Deny Their Maker 22. April 2016 14:15 UniS, Hörsaal A003 CAMPUS live VBG Bern Institut für Philosophie Christlicher Studentenverein Christliche Hochschulgruppe Länggassstrasse 49a www.campuslive.ch/bern www.bern.vbg.net 3000 Bern 9 www.philosophie.unibe.ch 22. April 2016 14:15 UniS, Hörsaal A003 (Schanzeneckstrasse 1, Bern) Lecture with Prof. Rosalind Picard (MIT) Playing God? Towards Machines that Deny their Maker "Today, technology is acquiring the capability to sense and respond skilfully to human emotion, and in some cases to act as if it has emotional processes that make it more intelligent. I'll give examples of the potential for good with this technology in helping people with autism, epilepsy, and mental health challenges such as anxiety and depression. However, the possibilities are much larger: A few scientists want to build computers that are vastly superior to humans, capable of powers beyond reproducing their own kind. What do we want to see built? And, how might we make sure that new affective technologies make human lives better?" Professor Rosalind W. Picard, Sc.D., is founder and director of the Affective Computing Research Group at the Massachusetts Institute of Technology (MIT) Media Lab where she also chairs MIT's Mind+Hand+Heart initiative. Picard has co-founded two businesses, Empatica, Inc. creating wearable sensors and analytics to improve health, and Affectiva, Inc. delivering software to measure and communicate emotion through facial expression analysis. She has authored or co-authored over 200 scientific articles, together with the book Affective Computing, which was instrumental in giving rise to the field by that name. -

Membership Information

ACM 1515 Broadway New York, NY 10036-5701 USA The CHI 2002 Conference is sponsored by ACM’s Special Interest Group on Computer-Human Interaction (ACM SIGCHI). ACM, the Association for Computing Machinery, is a major force in advancing the skills and knowledge of Information Technology (IT) professionals and students throughout the world. ACM serves as an umbrella organization offering its 78,000 members a variety of forums in order to fulfill its members’ needs, the delivery of cutting-edge technical information, the transfer of ideas from theory to practice, and opportunities for information exchange. Providing high quality products and services, world-class journals and magazines; dynamic special interest groups; numerous “main event” conferences; tutorials; workshops; local special interest groups and chapters; and electronic forums, ACM is the resource for lifelong learning in the rapidly changing IT field. The scope of SIGCHI consists of the study of the human-computer interaction process and includes research, design, development, and evaluation efforts for interactive computer systems. The focus of SIGCHI is on how people communicate and interact with a broadly-defined range of computer systems. SIGCHI serves as a forum for the exchange of ideas among computer scientists, human factors scientists, psychologists, social scientists, system designers, and end users. Over 4,500 professionals work together toward common goals and objectives. Membership Information Sponsored by ACM’s Special Interest Group on Computer-Human -

Toward Machines with Emotional Intelligence

Toward Machines with Emotional Intelligence Rosalind W. Picard MIT Media Laboratory Abstract For half a century, artificial intelligence researchers have focused on giving machines linguistic and mathematical-logical reasoning abilities, modeled after the classic linguistic and mathematical-logical intelligences. This chapter describes new research that is giving machines (including software agents, robotic pets, desktop computers, and more) skills of emotional intelligence. Machines have long been able to appear as if they have emotional feelings, but now machines are also being programmed to learn when and how to display emotion in ways that enable the machine to appear empathetic or otherwise emotionally intelligent. Machines are now being given the ability to sense and recognize expressions of human emotion such as interest, distress, and pleasure, with the recognition that such communication is vital for helping machines choose more helpful and less aggravating behavior. This chapter presents several examples illustrating new and forthcoming forms of machine emotional intelligence, highlighting applications together with challenges to their development. 1. Introduction Why would machines need emotional intelligence? Machines do not even have emotions: they don’t feel happy to see us, sad when we go, or bored when we don’t give them enough interesting input. IBM’s Deep Blue supercomputer beat Grandmaster and World Champion Garry Kasparov at chess without feeling any stress during the game, and without any joy at its accomplishment, -

Affectiva RANA EL KALIOUBY

STE Episode Transcript: Affectiva RANA EL KALIOUBY: A lip corner pull, which is pulling your lips outwards and upwards – what we call a smile – is action unit 12. The face is one of the most powerful ways of communicating human emotions. We're on this mission to humanize technology. I was spending more time with my computer than I did with any other human being – and this computer, it knew a lot of things about me, but it didn't have any idea of how I felt. There are hundreds of different types of smiles. There's a sad smile, there's a happy smile, there's an “I'm embarrassed” smile – and there's definitely an “I'm flirting” smile. When we're face to face, when we actually are able to get these nonverbal signals, we end up being kinder people. The algorithm tracks your different facial expressions. I really believe this is going to become ubiquitous. It's going to be the standard human-machine interface. Any technology in human history is neutral. It's how we decide to use it. There's a lot of potential where this thing knows you so well, it can help you become a better version of you. But that same data in the wrong hands could be manipulated to exploit you. CATERINA FAKE: That was computer scientist Rana el Kaliouby. She invented an AI tool that can read the expression on your face and know how you’re feeling in real time. With this software, computers can read our signs of emotion – happiness, fear, confusion, grief – which paves the way for a future where technology is more human, and therefore serves us better. -

Affective Computing and Autism

Affective Computing and Autism. RANA EL KALIOUBY (a), ROSALIND PICARD (a), AND SIMON BARON-COHEN (b). (a) Massachusetts Institute of Technology, Cambridge, Massachusetts 02142-1308, USA; (b) University of Cambridge, Cambridge CB3 0FD, United Kingdom. ABSTRACT: This article highlights the overlapping and converging goals and challenges of autism research and affective computing. We propose that a collaboration between autism research and affective computing could lead to several mutually beneficial outcomes, from developing new tools to assist people with autism in understanding and operating in the socioemotional world around them, to developing new computational models and theories that will enable technology to be modified to provide an overall better socioemotional experience to all people who use it. This article describes work toward this convergence at the MIT Media Lab, and anticipates new research that might arise from the interaction between research into autism, technology, and human socioemotional intelligence. KEYWORDS: autism; Asperger syndrome (AS); affective computing; affective sensors; mindreading software. AFFECTIVE COMPUTING AND AUTISM Autism is a set of neurodevelopmental conditions characterized by social interaction and communication difficulties, as well as unusually narrow, repetitive interests (American Psychiatric Association 1994). Autism spectrum conditions (ASC) comprise at least four subgroups: high-, medium-, and low-functioning autism (Kanner 1943) and Asperger syndrome (AS) (Asperger 1991; Frith 1991). Individuals with AS have average or above average IQ and no language delay. In the other three autism subgroups there is invariably some degree of language delay, and the level of functioning is indexed by overall IQ. Individuals diagnosed on the autistic spectrum often exhibit a “triad of strengths”: good attention to detail, deep, narrow interest, and islets of ability (Baron-Cohen 2004). -

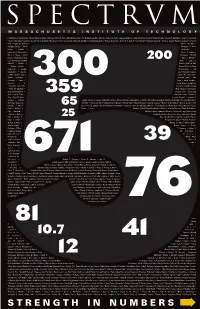

Download a PDF of This Issue

Hal Abelson • Edward Adams • Morris Adelman • Edward Adelson • Khurram Afridi • Anuradha Agarwal • Alfredo Alexander-Katz • Marilyne Andersen • Polina Olegovna Anikeeva • Anuradha M Annaswamy • Timothy Antaya • George E. Apostolakis • Robert C. Armstrong • Elaine V. Backman • Lawrence Bacow • Emilio Baglietto • Hari Balakrishnan • Marc Baldo • Ronald G. Ballinger • George Barbastathis • William A. Barletta • Steven R. H. Barrett • Paul I. Barton • Moungi G. Bawendi • Martin Z. Bazant • Geoffrey S. Beach • Janos M. Beer • Angela M. Belcher • Moshe Ben-Akiva • Hansjoerg Berger • Karl K. Berggren • A. Nihat Berker • Dimitri P. Bertsekas • Edmundo D. Blankschtein • Duane S. Boning • Mary C. Boyce • Kirkor Bozdogan • Richard D. Braatz • Rafael Luis Bras • John G. Brisson • Leslie Bromberg • Elizabeth Bruce • Joan E. Bubluski • Markus J. Buehler • Cullen R. Buie • Vladimir Bulovic • Mayank Bulsara • Tonio Buonassisi • Jacopo Buongiorno • Oral Buyukozturk • Jianshu Cao • Christopher Caplice • JoAnn Carmin • John S. Carroll • W. Craig Carter • Frederic Casalegno • Gerbrand Ceder • Ivan Celanovic • Sylvia T. Ceyer • Ismail Chabini • Anantha P. Chandrakasan • Gang Chen • Sow-Hsin Chen • Wai K. Cheng • Yet-Ming Chiang • Sallie W. Chisholm • Nazli Choucri • Chryssostomidis Chryssostomos • Shaoyan Chu • Jung-Hoon Chun • Joel P. Clark • Robert E. Cohen • Daniel R. Cohn • Clark K. Colton • Patricia Connell • Stephen R. Connors • Chathan Cooke • Charles L. Cooney • Edward F. Crawley • Mary Cummings • Christopher C. Cummins • Kenneth R. Czerwinski • Munther A. Dahleh • Rick Lane Danheiser • Ming Dao • Richard de Neufville • Olivier L de Weck • Edward Francis DeLong • Erik Demaine • Martin Demaine • Laurent Demanet • Michael J. Demkowicz • John M. Deutch • Srini Devadas • Mircea Dinca • Patrick S Doyle • Elisabeth M. Drake • Mark Drela • Catherine L. Drennan • Michael J. Driscoll • Steven Dubowsky • Esther Duflo • Fredo Durand • Thomas Eagar • Richard S. -

Wearable Platform to Foster Learning of Natural Facial Expressions and Emotions in High-Functioning Autism and Asperger Syndrome

Wearable Platform to Foster Learning of Natural Facial Expressions and Emotions in High-Functioning Autism and Asperger Syndrome PRIMARY INVESTIGATORS: Matthew Goodwin, The Groden Center; Rosalind Picard, Massachusetts Institute of Technology Media Laboratory; Rana el Kaliouby, Massachusetts Institute of Technology Media Laboratory; Alea Teeters, Massachusetts Institute of Technology Media Laboratory; June Groden, The Groden Center. DESCRIPTION: Evaluate the scientific and clinical significance of using a wearable camera system (Self-Cam) to improve recognition of emotions from real-world faces in young adults with Asperger syndrome (AS) and high-functioning autism (HFA). The study tests a group of 10 young adults diagnosed with AS/HFA who are asked to wear Self-Cam three times a week for 10 weeks, and use a computerized, interactive facial expression analysis system to review and analyze their videos on a weekly basis. A second group of 10 age-, sex-, and IQ-matched young adults with AS/HFA will use Mind Reading, a systematic DVD guide for teaching emotion recognition. These two groups will be tested pre- and post-intervention on recognition of emotion from faces at three levels of generalization: videos of themselves, videos of their interaction partner, and videos of other participants (i.e., people they are less familiar with). A control group of 10 age- and sex-matched typically developing adults will also be tested to establish performance differences in emotion recognition abilities across groups. This study will use a combination of behavioral reports, direct observation, and pre- and post-intervention questionnaires to assess Self-Cam’s acceptance by persons with autism.
We hypothesize that the typically developing control group will perform better on emotion recognition than both groups with AS/HFA at baseline, and that the Self-Cam group, whose intervention teaches emotion recognition using real-life videos, will perform better than the Mind Reading group, who will learn emotion recognition from a standard stock of emotion videos. -

Middle East Prepares for AI Acceleration

Research Insights. Middle East prepares for AI acceleration: Exploring AI commitment, ambitions and strategies. By Ian Fletcher, Brian Goehring, Anthony Marshall, and Tarek Saeed. Connectivity, big data drive AI use: Modern AI has been around since the 1950s. Since that time, there have been several AI false starts known as “AI winters,” in which AI was not considered all that seriously. Now, however, AI is being driven by the proliferation of ubiquitous connectivity, dramatically increased computing capability, unprecedented amounts of data, and ever-more sophisticated systems of engagement. Today’s business leaders understand that AI is an increasingly important tool for future growth and prosperity and are investing accordingly. Forward-looking countries in the Middle East, such as the United Arab Emirates (UAE), Saudi Arabia, Egypt, and Qatar are taking bold steps, through increased investment across sectors and policy awareness and commitment, to prepare and position for dramatic progress using AI. Smaller countries that develop a significant edge in AI technology will punch above their weight class. AI investment is clearly on the rise. Talking points. AI is the new space race: While early investment in artificial intelligence (AI) was typically motivated by a desire to get ahead, the emphasis today is increasingly on achieving competitive parity, making sure an organization is not left behind. As a consequence, regional leaders, especially those in Middle East nations, are recognizing AI’s growing significance and are placing AI at the center of national economic strategies, organization, and culture. AI creates jobs: Despite intense media attention on AI’s possible impact on replacing workers, empirical evidence collected by the IBM -

Affectiva-MIT Facial Expression Dataset (AM-FED): Naturalistic and Spontaneous Facial Expressions Collected In-The-Wild

Affectiva-MIT Facial Expression Dataset (AM-FED): Naturalistic and Spontaneous Facial Expressions Collected In-the-Wild. Daniel McDuff†‡, Rana El Kaliouby†‡, Thibaud Senechal‡, May Amr‡, Jeffrey F. Cohn§, Rosalind Picard†‡. ‡ Affectiva Inc., Waltham, MA, USA; † MIT Media Lab, Cambridge, MA, USA; § Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, USA. [email protected], fkaliouby,thibaud.senechal,[email protected], [email protected], [email protected] Abstract: Computer classification of facial expressions requires large amounts of data and this data needs to reflect the diversity of conditions seen in real applications. Public datasets help accelerate the progress of research by providing researchers with a benchmark resource. We present a comprehensively labeled dataset of ecologically valid spontaneous facial responses recorded in natural settings over the Internet. To collect the data, online viewers watched one of three intentionally amusing Super Bowl commercials and were simultaneously filmed using their webcam. They ... Body text (excerpt): ...ing research and media testing [14] to understanding non-verbal communication [19]. With the ubiquity of cameras on computers and mobile devices, there is growing interest in bringing these applications to the real world. To do so, spontaneous data collected from real-world environments is needed. Public datasets truly help accelerate research in an area, not just because they provide a benchmark, or a common language, through which researchers can communicate and compare their different algorithms in an objective manner, but also because compiling such a corpus and getting it reliably labeled is tedious work, requiring a lot of effort which many researchers may not have the resources to do.