Apple M1 Foreshadows Rise of RISC-V | by Erik Engheim | Dec, 2020 | Medium

Recommended publications

-

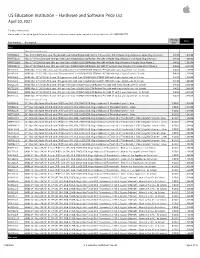

Apple US Education Price List

US Education Institution – Hardware and Software Price List April 30, 2021 For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775. Pricing Price Part Number Description Date iMac iMac with Intel processor MHK03LL/A iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 8/4/20 1,049.00 MXWT2LL/A iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 8/4/20 1,699.00 MXWU2LL/A iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 1,899.00 MXWV2LL/A iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 2,099.00 BR332LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools 8/4/20 1,168.00 BR342LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools 8/4/20 1,218.00 BR2P2LL/A BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 3-year AppleCare+ for Schools 8/4/20 1,818.00 BR2S2LL/A BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 4-year AppleCare+ for Schools 8/4/20 1,868.00 BR2Q2LL/A BNDL iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB -

Holiday Catalog

Brilliant for what’s next. With the power to achieve anything. AirPods Pro AppleCare+ Protection Plan†* $29 Key Features • Active Noise Cancellation for immersive sound • Transparency mode for hearing and connecting with the world around you • Three sizes of soft, tapered silicone tips for a customizable fit • Sweat and water resistant1 • Adaptive EQ automatically tunes music to the shape of your ear • Easy setup for all your Apple devices2 • Quick access to Siri by saying “Hey Siri”3 • The Wireless Charging Case delivers more than 24 hours of battery life4 AirPods Pro. Magic amplified. Noise nullified. Active Noise Cancellation for immersive sound. Transparency mode for hearing what’s happening around you. Sweat and water resistant.1 And a more customizable fit for all-day comfort. AirPods® AirPods AirPods Pro with Charging Case with Wireless Charging Case with Wireless Charging Case $159 $199 $249 1 AirPods Pro are sweat and water resistant for non-water sports and exercise and are IPX4 rated. Sweat and water resistance are not permanent conditions. The charging case is not sweat or water resistant. 2 Requires an iCloud account and macOS 10.14.4, iOS 12.2, iPadOS, watchOS 5.2, or tvOS 13.2 or later. 3Siri may not be available in all languages or in all areas, and features may vary by area. 4 Battery life varies by use and configuration. See apple.com/batteries for details. Our business is part of a select group of independent Apple® Resellers and Service Providers who have a strong commitment to Apple’s Mac® and iOS platforms and have met or exceeded Apple’s highest training and sales certifications. -

Apple Accessories & Prices Input Devices Apple Magic Mouse 2 (APPX333) £65.50 iMac, Mac Mini and Accessories Magic Mouse 2 - Space Grey (APPX015) £82.50

Apple Accessories & Prices Input Devices Apple Magic Mouse 2 (APPX333) £65.50 iMac, Mac Mini and Accessories Magic Mouse 2 - Space Grey (APPX015) £82.50 Magic Trackpad 2 (APPX335) £107.50 Magic Trackpad 2 - Space Grey (APPX016) £124.00 Magic Keyboard (APPK005) £79.95 Magic Keyboard - Numeric (APPK006) £105.00 Magic Keyboard - Numeric - Space Grey (APPK007) £124.00 Adapters and Network Mini DisplayPort to DVI Adapter (APPX117) £23.95 Mini DisplayPort to VGA Adapter (APPX142) £23.95 USB-C to USB-A (APPX281) £14.95 USB-C to Digital AV (APPX099) £62.50 Misc. Accessories USB Retina Superdrive (APPX228) £65.50 HomePod Mini Smart Speakers - Available in Space Grey and Silver (APPX137/138) £82.50 HomePod Smart Speakers - Available in Space Grey and Silver (APPX013/014) £232.50 Many other adapters and accessories also available! Guernsey Computers Ltd, 33 Commercial Arcade, St. Peter Port, Tel 01481-728738. E. & O. E. 18th August 2021 Mac Products & Specifications Mac Mini - i5 2.6GHz (APPC022) £915.00: 3.0GHz 6-Core i5 Processor w/ 9MB shared L3 cache - Turbo Boost up to 4.1GHz, 8GB DDR4 RAM, 256GB Solid State Drive, Intel UHD Graphics 630, 802.11ac Wi-Fi (802.11 a/b/g/n compatible) & Bluetooth 5.0. iMac 27” i5 3.1GHz - 5K Retina Display (APPC028) £1499.00: 3.1GHz 6-Core i5 Processor (Turbo Boost up to 4.5GHz), 8GB DDR4 RAM, 256GB Solid State Drive, Radeon Pro 5300 Graphics (4GB), 802.11ac Wi-Fi (802.11 a/b/g/n compatible) & Bluetooth 5.0. Mac Mini - M1 8-Core Processor (APPC031) £582.00 iMac 27” i5 3.3GHz - 5K Retina Display -

In-Datacenter Performance Analysis of a Tensor Processing Unit

In-Datacenter Performance Analysis of a Tensor Processing Unit Presented by Josh Fried Background: Machine Learning Neural Networks: ● Multi Layer Perceptrons ● Recurrent Neural Networks (mostly LSTMs) ● Convolutional Neural Networks Synapse - each edge, has a weight Neuron - each node, sums weights and uses non-linear activation function over sum Propagating inputs through a layer of the NN is a matrix multiplication followed by an activation Background: Machine Learning Two phases: ● Training (offline) ○ relaxed deadlines ○ large batches to amortize costs of loading weights from DRAM ○ well suited to GPUs ○ Usually uses floating points ● Inference (online) ○ strict deadlines: 7-10ms at Google for some workloads ■ limited possibility for batching because of deadlines ○ Facebook uses CPUs for inference (last class) ○ Can use lower precision integers (faster/smaller/more efficient) ML Workloads @ Google 90% of ML workload time at Google spent on MLPs and LSTMs, despite broader focus on CNNs RankBrain (search) Inception (image classification), Google Translate AlphaGo (and others) Background: Hardware Trends End of Moore’s Law & Dennard Scaling ● Moore - transistor density is doubling every two years ● Dennard - power stays proportional to chip area as transistors shrink Machine Learning causing a huge growth in demand for compute ● 2006: Excess CPU capacity in datacenters is enough ● 2013: Projected 3 minutes per-day per-user of speech recognition ○ will require doubling datacenter compute capacity! Google’s Answer: Custom ASIC Goal: Build a chip that improves cost-performance for NN inference What are the main costs? Capital Costs Operational Costs (power bill!) TPU (V1) Design Goals Short design-deployment cycle: ~15 months! Plugs in to PCIe slot on existing servers Accelerates matrix multiplication operations Uses 8-bit integer operations instead of floating point How does the TPU work? CISC instructions, issued by host. -

Apple Branded 20210331

Apple Inc. Please visit the Apple NASPO online store for current product pricing, availability and product information. Consumer MNWNC- Band Part Number Description (MSRP) 102 iMac iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 1 MHK03LL/A 2 1,099.00 1,049.00 1 MHK23LL/A iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/256GB SSD/Radeon Pro 555X w/Apple Magic Keyboard and Apple Magic Mouse 2 1,299.00 1,249.00 1 MHK33LL/A iMac 21.5" 4K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 560X w/Apple Magic Keyboard and Apple Magic Mouse 2 1,499.00 1,399.00 1 MXWT2LL/A iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 1,799.00 1,699.00 1 MXWU2LL/A iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 1,999.00 1,899.00 1 MXWV2LL/A iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 2,299.00 2,099.00 1 BR332LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools - 1,168.00 1 BR342LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools - 1,218.00 1 BR3G2LL/A BNDL iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/256GB SSD/Radeon Pro 555X with 3-year AppleCare+ for Schools - 1,368.00 -

The American Express® Card Membership Rewards® 2021 Catalogue WHAT'S INSIDE Select Any Icon to Go to Desired Section

INCENTIVO The American Express® Card Membership Rewards® 2021 Catalogue WHAT'S INSIDE Select any icon to go to desired section THE MEMBERSHIP REWARDS® PROGRAM FREQUENT TRAVELER OPTION FREQUENT GUEST FREQUENT FLYER PROGRAM PROGRAM NON-FREQUENT TRAVELER OPTION GADGETS & HOME & TRAVEL HEALTH & ENTERTAINMENT KITCHEN ESSENTIALS WELLNESS DINING HEALTH & WELLNESS LUXURY HOTEL SHOPPING VOUCHERS VOUCHERS VOUCHERS VOUCHERS FINANCIAL REWARDS TERMS & CONDITIONS THE MEMBERSHIP REWARDS PROGRAM THE MEMBERSHIP REWARDS PROGRAM TAKES YOU FURTHER NON-EXPIRING MEMBERSHIP REWARDS® POINTS Your Membership Rewards® Points do not expire, allowing you to save your Membership Rewards® Points for higher 1 value rewards. ACCELERATED REWARDS REDEMPTION Membership Rewards® Points earned from your Basic and Supplementary Cards are automatically pooled to enable 2 accelerated redemption. COMPREHENSIVE REWARDS PROGRAM The Membership Rewards® Points can be redeemed for a wide selection of shopping gift certificates, dining vouchers, 3 gadgets, travel essential items and more. The Membership Rewards® Points can also be transferred to other loyalty programs such as Airline Frequent Flyer and Hotel Frequent Guest Rewards programs which will allow you to enjoy complimentary flights or hotel stays. *Select any item below to go to the desired section. THE FREQUENT NON- MEMBERSHIP FREQUENT FINANCIAL TERMS & TRAVELER CONDITIONS REWARDS OPTION TRAVELER REWARDS PROGRAM OPTION THE MEMBERSHIP REWARDS PROGRAM PROGRAM OPTIONS AND STRUCTURE Earn one (1) Membership Rewards® Point for every -

Apple US Education Price List

US Education Institution – Hardware and Software Price List November 10, 2020 For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775. Pricing Price Part Number Description Date iMac MHK03LL/A iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 8/4/20 1,049.00 MHK23LL/A iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/256GB SSD/Radeon Pro 555X w/Apple Magic Keyboard and Apple Magic Mouse 2 8/4/20 1,249.00 MHK33LL/A iMac 21.5" 4K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 560X w/Apple Magic Keyboard and Apple Magic Mouse 2 8/4/20 1,399.00 MXWT2LL/A iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 8/4/20 1,699.00 MXWU2LL/A iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 1,899.00 MXWV2LL/A iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 2,099.00 BR332LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools 8/4/20 1,168.00 BR342LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools 8/4/20 1,218.00 BR3G2LL/A BNDL iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/256GB -

Embree High Performance Ray Tracing Kernels 3.13.1

Intel® Embree High Performance Ray Tracing Kernels 3.13.1 August 10, 2021 Contents 1 Embree Overview 2 1.1 Supported Platforms ......................... 3 1.2 Version History ............................ 3 2 Installation of Embree 22 2.1 Windows MSI Installer ........................ 22 2.2 Windows ZIP File .......................... 22 2.3 Linux tar.gz Files ........................... 22 2.4 macOS PKG Installer ........................ 23 2.5 macOS ZIP file ............................ 23 3 Compiling Embree 24 3.1 Linux and macOS .......................... 24 3.2 Windows ............................... 26 3.3 CMake Configuration ......................... 28 4 Using Embree 32 5 Embree API 33 5.1 Device Object ............................. 34 5.2 Scene Object ............................. 34 5.3 Geometry Object ........................... 35 5.4 Ray Queries .............................. 35 5.5 Point Queries ............................. 36 5.6 Collision Detection .......................... 36 5.7 Miscellaneous ............................. 36 6 Upgrading from Embree 2 to Embree 3 37 6.1 Device ................................. 38 6.2 Scene ................................. 38 6.3 Geometry ............................... 38 6.4 Buffers ................................. 40 6.5 Miscellaneous ............................. 40 1 7 Embree API Reference 43 7.1 rtcNewDevice ............................. 43 7.2 rtcRetainDevice ............................ 46 7.3 rtcReleaseDevice ........................... 47 7.4 rtcGetDeviceProperty ....................... -

Importance of New Apple Computers

Importance of New Apple Computers Lorrin R. Garson OPCUG & PATACS December 12, 2020 © 2020 Lorrin R. Garson Rapidly Changing Scene •Some information will have changed within the past few days and even hours •Expect new developments over the next several months 2 A Short Prologue: Computer Systems I’ve Worked On •Alpha Microsystems* (late 1970s ➜ 1990s) •Various Unix systems (1980s ➜ 2000s) Active hypertext links •Microsoft Windows (~1985 ➜ 2013) •Apple Computers (~1986 ➜ 2020) * Major similarities to DEC PDP/11 3 Not me in disguise! No emotional attachment to any computer system 4 Short History of Apple CPUs •1976 Apple I & II; MOS 6502 •1977 Apple III; Synertek 6502B •1985 Macintosh; Motorola 68000 ✓ 68020, 68030 and 68040 •1994 Macintosh; PowerPC 601 ✓ 603, 604, G3, G4 and G5 5 History of Apple Hardware (CPUs) (cont.) •2006 Macintosh; Intel x86 ✓ Yonah, Core Penryn, Nehalem, Westmere, Sandy Bridge, Ivy Bridge, Haswell, Broadwell, Skylake, Kaby Lake, Coffee Lake, Ice Lake, Tiger Lake ✓ 2009 Apple dropped support for PowerPC •2020 Mac Computers; Apple Silicon 6 Terminology •“Apple Silicon” refers to Apple’s proprietary ARM-based hardware •Apple Silicon aka “System* on a Chip” aka “SoC” •“M1” name of the chip implementing Apple Silicon** * Not silicon on a chip ** The M1 is a “superset” of the iPhone A14 chip 7 ARM vs. x86 •ARM uses RISC architecture (Reduced Instruction Set Computing) ✓ Fugaku supercomputer (world’s fastest computer) •x86 uses CISC architecture (Complex Instruction Set Computing) ✓ Intel-based computers •ARM focuses -

Faster Dilithium, Kyber, and Saber on Cortex-A72 and Apple M1

Neon NTT: Faster Dilithium, Kyber, and Saber on Cortex-A72 and Apple M1 Hanno Becker1, Vincent Hwang2,3, Matthias J. Kannwischer3, Bo-Yin Yang3 and Shang-Yi Yang4 1 Arm Research, Cambridge, UK 2 National Taiwan University, Taipei, Taiwan 3 Academia Sinica, Taipei, Taiwan 4 Chelpis Co. Ltd., Taipei, Taiwan Abstract. We present new speed records on the Armv8-A architecture for the lattice-based schemes Dilithium, Kyber, and Saber. The core novelty in this paper is the combination of Montgomery multiplication and Barrett reduction resulting in “Barrett multiplication” which allows particularly efficient modular one-known-factor multiplication using the Armv8-A Neon vector instructions. These novel techniques combined with fast two-unknown-factor Montgomery multiplication, Barrett reduction sequences, and interleaved multi-stage butterflies result in significantly faster code. We also introduce “asymmetric multiplication” which is an improved technique for caching the results of the incomplete NTT, used e.g. for matrix-to-vector polynomial multiplication. Our implementations target the Arm Cortex-A72 CPU, on which our speed is 1.7× that of the state-of-the-art matrix-to-vector polynomial multiplication in Kyber [Nguyen–Gaj 2021]. For Saber, NTTs are far superior to Toom–Cook multiplication on the Armv8-A architecture, outrunning the matrix-to-vector polynomial multiplication by 2.1×. On the Apple M1, our matrix-vector products run 2.1× and 1.9× faster for Kyber and Saber respectively. Keywords: NIST PQC · Armv8-A · Neon · Dilithium · Kyber · Saber 1 Introduction When large quantum computers arrive, Shor’s algorithm [Sho97] will break almost all currently deployed public-key cryptography by solving the integer factorization and the discrete logarithms problems. -

MacBook Air for Business Supercharged. Super Light. Super Productive

MacBook Air for Business Supercharged. Super light. Super productive. MacBook Air completely redefines what a notebook can do in a work-from-anywhere world. With the groundbreaking efficiency of the Apple M1 chip, the power of macOS Big Sur, and stunning performance in a fanless design, MacBook Air also offers incredible value. Starting at only $999, it’s a smarter investment for business than ever before. Powered by the Apple M1 chip Small chip. Giant leap for business. MacBook Air is powered by the revolutionary M1, Apple’s first chip designed specifically for the Mac. With its industry-leading performance per watt, M1 delivers up to 3.5x faster CPU, up to 5x faster GPU, up to 9x faster machine learning capabilities, and up to 18 hours of battery life.1,2 M1 transforms the Mac experience. Better everyday work experience. Apps for work with new possibilities. MacBook Air with the M1 chip and macOS runs common third-party business apps like Microsoft Word, PowerPoint and Excel, Slack and Zoom. So employees can do the work they need to do in the apps they already use. When upgrading to M1, Rosetta 2 ensures that existing Mac apps work out of the box. And for the first time, employees can run popular iOS and iPadOS apps directly on Mac. Works seamlessly with iPhone and iPad. MacBook Air is the perfect companion to iPhone and iPad. With Continuity, employees can move easily between devices throughout their workday. All-day battery life in a fanless design. MacBook Air has advanced energy-saving technologies that help extend battery life, with up to 15 hours of wireless web browsing and up to 18 hours of video -

Apple M1 Chip Small Chip, Huge Leap

Apple M1 Chip Small chip, huge leap Every once in a while the world of technology makes a giant leap forward. The first personal computers, wide adoption of the internet and the first iPhones were not only fascinating and useful technology in and of themselves, but they also transformed an entire industry, spurring competitors and collaborators to new heights. The Apple M1 chip heralds one of those leaps. Reviewers love the M1 Apple's ARM-based M1 chip will mean astounding efficiency, speed and performance for Mac and Mac-based programs and frameworks, and reviews were... gushing might not be too strong a word for it. One reviewer for engadget.com positively swooned over his experience with the new M1-based MacBook Air [1]: “Apple's new MacBook Air is stunningly fast. It's raring to go the instant you open its lid. Want to browse the web? Watch it load bloated sites faster than you've ever seen on a laptop. Want to play some games? Step back as it blows away every ultraportable, with no fan noise to get in the way. And if you need to take a break, don't worry. It's got enough battery life to last you all day. Using the new MacBook Air is like stepping into a new world where we can demand much more from ultraportables.” [1] “MacBook Air M1 review: Faster than most PCs, no fan required,” engadget.com, November 17, 2020. One gobsmacked developer pointed out on his Twitter feed that Xcode 12.3 beta unzips in five minutes on an M1 Apple Silicon-based machine versus 13 minutes and 22 seconds on a device using Intel i9. And Jamf’s own CEO Dean Hager, after ordering his own