How Pro-Government “Trolls” Influence Online Conversations In

Recommended publications

An Examination of the Impact of Astroturfing on Nationalism: A

Social Sciences. Article. An Examination of the Impact of Astroturfing on Nationalism: A Persuasion Knowledge Perspective. Kenneth M. Henrie 1,* and Christian Gilde 2. 1 Stiller School of Business, Champlain College, Burlington, VT 05401, USA. 2 Department of Business and Technology, University of Montana Western, Dillon, MT 59725, USA; [email protected] * Correspondence: [email protected]; Tel.: +1-802-865-8446. Received: 7 December 2018; Accepted: 23 January 2019; Published: 28 January 2019. Abstract: One communication approach that lately has become more common is astroturfing, which has been more prominent since the proliferation of social media platforms. In this context, astroturfing is a fake grass-roots political campaign that aims to manipulate a certain audience. This exploratory research examined how effective astroturfing is in mitigating citizens’ natural defenses against politically persuasive messages. An experimental method was used to examine the ability of social media messages related to coal energy to persuade citizens and increase their level of nationalism. The results suggest that citizens are more likely to be persuaded by an astroturfed message than people who are exposed to a non-astroturfed message, regardless of their political leanings. However, the messages were not successful in altering an individual’s nationalistic views at the moment of exposure. The authors discuss these findings and propose how, in a long-term context, astroturfing is a dangerous addition to persuasive communication. Keywords: astroturfing; persuasion knowledge; nationalism; coal energy; social media. 1. Introduction. Astroturfing is the simulation of a political campaign which is intended to manipulate the target audience. Often, the purpose of such a campaign is not clearly recognizable because a political effort is disguised as a grassroots operation.

Political Astroturfing Across the World

Political astroturfing across the world. Franziska B. Keller (Hong Kong University of Science and Technology), David Schoch (The University of Manchester), Sebastian Stier (GESIS – Leibniz Institute for the Social Sciences), JungHwan Yang (University of Illinois at Urbana-Champaign). Paper prepared for the Harvard Disinformation Workshop Update. 1 Introduction. At the very latest since the Russian Internet Research Agency’s (IRA) intervention in the U.S. presidential election, scholars and the broader public have become wary of coordinated disinformation campaigns. These hidden activities aim to deteriorate the public’s trust in electoral institutions or the government’s legitimacy, and can exacerbate political polarization. But unfortunately, academic and public debates on the topic are haunted by conceptual ambiguities, and rely on few memorable examples, epitomized by the often cited “social bots” who are accused of having tried to influence public opinion in various contemporary political events. In this paper, we examine “political astroturfing,” a particular form of centrally coordinated disinformation campaigns in which participants pretend to be ordinary citizens acting independently (Kovic, Rauchfleisch, Sele, & Caspar, 2018). While the accounts associated with a disinformation campaign may not necessarily spread incorrect information, they deceive the audience about the identity and motivation of the person manning the account. And even though social bots have been in the focus of most academic research (Ferrara, Varol, Davis, Menczer, & Flammini, 2016; Stella, Ferrara, & De Domenico, 2018), seemingly automated accounts make up only a small part – if any – of most astroturfing campaigns. For instigators of astroturfing, relying exclusively on social bots is not a promising strategy, as humans are good at detecting low-quality information (Pennycook & Rand, 2019). On the other hand, many bots detected by state-of-the-art social bot detection methods are not part of an astroturfing campaign, but unrelated non-political spam bots.

Disinformation, ‘Fake News’ and Influence Campaigns on Twitter

Disinformation, ‘Fake News’ and Influence Campaigns on Twitter. OCTOBER 2018. Matthew Hindman (George Washington University), Vlad Barash (Graphika). Contents: Executive Summary; Introduction; A Problem Both Old and New; Defining Fake News Outlets; Bots, Trolls and ‘Cyborgs’ on Twitter; Map Methodology; Election Data and Maps (Election Core Map, Election Periphery Map, Postelection Map); Fake Accounts From Russia’s Most Prominent Troll Farm; Disinformation Campaigns on Twitter: Chronotopes (#NoDAPL, #WikiLeaks, #SpiritCooking, #SyriaHoax, #SethRich); Conclusion; Bibliography; Notes. EXECUTIVE SUMMARY. This study is one of the largest analyses to date on how fake news spread on Twitter both during and after the 2016 election campaign. Using tools and mapping methods from Graphika, a social media intelligence firm, we study more than 10 million tweets from 700,000 Twitter accounts that linked to more than 600 fake and conspiracy news outlets. Crucially, we study fake and conspiracy news both before and after the election, allowing us to measure how the fake news ecosystem has evolved since November 2016. Much fake news and disinformation is still being spread on Twitter. Consistent with other research, we find more than 6.6 million tweets linking to fake and conspiracy news publishers in the month before the 2016 election. Yet disinformation continues to be a substantial problem postelection, with 4.0 million tweets linking to fake and conspiracy news publishers found in a 30-day period from mid-March to mid-April 2017. Contrary to claims that fake news is a game of “whack-a-mole,” more than 80 percent of the disinformation accounts in our election maps are still active as this report goes to press.

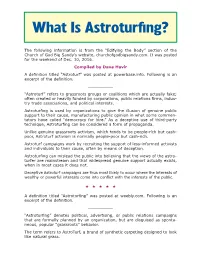

What Is Astroturfing? Churchofgodbigsandy.Com

What Is Astroturfing? The following information is from the “Edifying the Body” section of the Church of God Big Sandy’s website, churchofgodbigsandy.com. It was posted for the weekend of Dec. 10, 2016. Compiled by Dave Havir. A definition titled “Astroturf” was posted at powerbase.info. Following is an excerpt of the definition. “Astroturf” refers to grassroots groups or coalitions which are actually fake; often created or heavily funded by corporations, public relations firms, industry trade associations, and political interests. Astroturfing is used by organizations to give the illusion of genuine public support to their cause, manufacturing public opinion in what some commentators have called “democracy for hire.” As a deceptive use of third-party technique, Astroturfing can be considered a form of propaganda. Unlike genuine grassroots activism, which tends to be people-rich but cash-poor, Astroturf activism is normally people-poor but cash-rich. Astroturf campaigns work by recruiting the support of less-informed activists and individuals to their cause, often by means of deception. Astroturfing can mislead the public into believing that the views of the astroturfer are mainstream and that widespread genuine support actually exists, when in most cases it does not. Deceptive Astroturf campaigns are thus most likely to occur where the interests of wealthy or powerful interests come into conflict with the interests of the public. A definition titled “Astroturfing” was posted at weebly.com. Following is an excerpt of the definition. “Astroturfing” denotes political, advertising, or public relations campaigns that are formally planned by an organization, but are disguised as spontaneous, popular “grassroots” behavior.

S:\FULLCO~1\HEARIN~1\Committee Print 2018\Henry\Jan. 9 Report

Embargoed for Media Publication / Coverage until 6:00AM EST Wednesday, January 10. 115TH CONGRESS, 2d Session. COMMITTEE PRINT. S. PRT. 115–21. PUTIN’S ASYMMETRIC ASSAULT ON DEMOCRACY IN RUSSIA AND EUROPE: IMPLICATIONS FOR U.S. NATIONAL SECURITY. A MINORITY STAFF REPORT PREPARED FOR THE USE OF THE COMMITTEE ON FOREIGN RELATIONS, UNITED STATES SENATE, ONE HUNDRED FIFTEENTH CONGRESS, SECOND SESSION. JANUARY 10, 2018. Printed for the use of the Committee on Foreign Relations. Available via World Wide Web: http://www.gpoaccess.gov/congress/index.html U.S. GOVERNMENT PUBLISHING OFFICE, 28–110 PDF, WASHINGTON : 2018. For sale by the Superintendent of Documents, U.S. Government Publishing Office. Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800. Fax: (202) 512–2104. Mail: Stop IDCC, Washington, DC 20402–0001. COMMITTEE ON FOREIGN RELATIONS: BOB CORKER, Tennessee, Chairman; JAMES E. RISCH, Idaho; BENJAMIN L. CARDIN, Maryland; MARCO RUBIO, Florida; ROBERT MENENDEZ, New Jersey; RON JOHNSON, Wisconsin; JEANNE SHAHEEN, New Hampshire; JEFF FLAKE, Arizona; CHRISTOPHER A. COONS, Delaware; CORY GARDNER, Colorado; TOM UDALL, New Mexico; TODD YOUNG, Indiana; CHRISTOPHER MURPHY, Connecticut; JOHN BARRASSO, Wyoming; TIM KAINE, Virginia; JOHNNY ISAKSON, Georgia; EDWARD J. MARKEY, Massachusetts; ROB PORTMAN, Ohio; JEFF MERKLEY, Oregon; RAND PAUL, Kentucky; CORY A. BOOKER, New Jersey. TODD WOMACK, Staff Director; JESSICA LEWIS, Democratic Staff Director; JOHN DUTTON, Chief Clerk. (II)

Introduction: What Is Computational Propaganda?

1 Introduction: What Is Computational Propaganda? Digital technologies hold great promise for democracy. Social media tools and the wider resources of the Internet offer tremendous access to data, knowledge, social networks, and collective engagement opportunities, and can help us to build better democracies (Howard, 2015; Margetts et al., 2015). Unwelcome obstacles are, however, disrupting the creative democratic applications of information technologies (Woolley, 2016; Gallacher et al., 2017; Vosoughi, Roy, & Aral, 2018). Massive social platforms like Facebook and Twitter are struggling to come to grips with the ways their creations can be used for political control. Social media algorithms may be creating echo chambers in which public conversations get polluted and polarized. Surveillance capabilities are outstripping civil protections. Political “bots” (software agents used to generate simple messages and “conversations” on social media) are masquerading as genuine grassroots movements to manipulate public opinion. Online hate speech is gaining currency. Malicious actors and digital marketers run junk news factories that disseminate misinformation to harm opponents or earn click-through advertising revenue. It is no exaggeration to say that coordinated efforts are even now working to seed chaos in many political systems worldwide. Some militaries and intelligence agencies are making use of social media as conduits to undermine democratic processes and bring down democratic institutions altogether (Bradshaw & Howard, 2017). Most democratic governments are preparing their legal and regulatory responses. But unintended consequences from over-regulation, or regulation uninformed by systematic research, may be as damaging to democratic systems as the threats themselves. We live in a time of extraordinary political upheaval and change, with political movements and parties rising and declining rapidly (Kreiss, 2016; Anstead, 2017).

Complex Perceptions of Social Media Misinformation in China

The Government’s Dividend: Complex Perceptions of Social Media Misinformation in China. Zhicong Lu1, Yue Jiang2, Cheng Lu1, Mor Naaman3, Daniel Wigdor1. 1University of Toronto, 2University of Maryland, 3Cornell Tech. {luzhc, ericlu, daniel}@dgp.toronto.edu, [email protected], [email protected] ABSTRACT: The social media environment in China has become the dominant source of information and news over the past decade. This news environment has naturally suffered from challenges related to mis- and dis-information, encumbered by an increasingly complex landscape of factors and players including social media services, fact-checkers, censorship policies, and astroturfing. Interviews with 44 Chinese WeChat users were conducted to understand how individuals perceive misinformation and how it impacts their news consumption practices. Overall, this work exposes the diverse attitudes and coping strategies that Chinese users employ in complex social media environments. Due to the complex nature of censorship … [Introduction excerpt:] … involves a wide array of actors including traditional news outlets, professional or casual news-reporting individuals, user-generated content, and third-party fact-checkers. As a result, norms for disseminating information through social media and the networks through which information reaches audiences are more diverse and intricate. This changing environment makes it challenging for users to evaluate the trustworthiness of information on social media [14]. In China, the media landscape is even more complicated because government interventions in the ecosystem are becoming increasingly prevalent. Most people only use local social media platforms, such as WeChat, Weibo, and Toutiao, to socialize with others and consume news online.

Weaving the Dark Web: Legitimacy on Freenet, Tor, and I2P (Information

The Information Society Series Laura DeNardis and Michael Zimmer, Series Editors Interfaces on Trial 2.0, Jonathan Band and Masanobu Katoh Opening Standards: The Global Politics of Interoperability, Laura DeNardis, editor The Reputation Society: How Online Opinions Are Reshaping the Offline World, Hassan Masum and Mark Tovey, editors The Digital Rights Movement: The Role of Technology in Subverting Digital Copyright, Hector Postigo Technologies of Choice? ICTs, Development, and the Capabilities Approach, Dorothea Kleine Pirate Politics: The New Information Policy Contests, Patrick Burkart After Access: The Mobile Internet and Inclusion in the Developing World, Jonathan Donner The World Made Meme: Public Conversations and Participatory Media, Ryan Milner The End of Ownership: Personal Property in the Digital Economy, Aaron Perzanowski and Jason Schultz Digital Countercultures and the Struggle for Community, Jessica Lingel Protecting Children Online? Cyberbullying Policies of Social Media Companies, Tijana Milosevic Authors, Users, and Pirates: Copyright Law and Subjectivity, James Meese Weaving the Dark Web: Legitimacy on Freenet, Tor, and I2P, Robert W. Gehl Weaving the Dark Web Legitimacy on Freenet, Tor, and I2P Robert W. Gehl The MIT Press Cambridge, Massachusetts London, England © 2018 Robert W. Gehl All rights reserved. No part of this book may be reproduced in any form by any electronic or mechanical means (including photocopying, recording, or information storage and retrieval) without permission in writing from the publisher. This book was set in ITC Stone Serif Std by Toppan Best-set Premedia Limited. Printed and bound in the United States of America. Library of Congress Cataloging-in-Publication Data is available. ISBN: 978-0-262-03826-3 eISBN 9780262347570 ePub Version 1.0 I wrote parts of this while looking around for my father, who died while I wrote this book. -

What's a Patent Owner To

Winter 2018, Vol. 16, Issue 1. A review of developments in Intellectual Property Law. Motions to Amend at the PTAB after Aqua Products, Inc. v. Matal – What’s a Patent Owner to Do? By Andrew W. Williams, Ph.D. In 2011, Congress enacted the America Invents Act and created new mechanisms to challenge issued claims at the Patent Office. The goal was to expeditiously resolve issues of patent validity in response to the public outcry that validity challenges in the federal courts were too … conducted by the Patent Trial and Appeal Board (“PTAB” or “Board”) that analyzed statistics in all IPRs, CBMs, and PGRs. The third and most recent iteration included data through the Fiscal Year 2017 (which ended on September 30, 2017). The first thing this study revealed is that motions to amend were filed in only 275 out of 2,766 completed trials, or 10%. The Board counted a trial as “completed” when it was terminated due to settlement, when there was a request for adverse judgement, when it was … shifting to the patent owner, however, was not found in either the statute or the rules promulgated by the Patent Office. Instead, the PTAB interpreted its regulations in an early decision, Idle Free Sys., Inc. v. Bergstrom, Inc.¹ In Idle Free, the PTAB indicated that the burden is on the patent owner to show “a patentable distinction over the prior art of record and also prior art known to the patent owner.”² The Board subsequently relaxed the patent owner’s burden in MasterImage 3D, Inc. v.

From Anti-Vaxxer Moms to Militia Men: Influence Operations, Narrative Weaponization, and the Fracturing of American Identity

From Anti-Vaxxer Moms to Militia Men: Influence Operations, Narrative Weaponization, and the Fracturing of American Identity. Dana Weinberg, PhD (contact) & Jessica Dawson, PhD. ABSTRACT: How in 2020 were anti-vaxxer moms mobilized to attend reopen protests alongside armed militia men? This paper explores the power of weaponized narratives on social media both to create and polarize communities and to mobilize collective action and even violence. We propose that focusing on invocation of specific narratives and the patterns of narrative combination provides insight into the shared sense of identity and meaning different groups derive from these narratives. We then develop the WARP (Weaponize, Activate, Radicalize, Persuade) framework for understanding the strategic deployment and presentation of narratives in relation to group identity building and individual responses. The approach and framework provide powerful tools for investigating the way narratives may be used both to speak to a core audience of believers while also introducing and engaging new and even initially unreceptive audience segments to potent cultural messages, potentially inducting them into a process of radicalization and mobilization. (149 words) Keywords: influence operations, narrative, social media, radicalization, weaponized narratives. 1 Introduction. In the spring of 2020, several FB groups coalesced around protests of states’ coronavirus-related shutdowns. Many of these FB pages developed in a concerted action by gun-rights activists (Stanley-Becker and Romm 2020). The FB groups ostensibly started as a forum for people to express their discontent with their state’s shutdown policies, but the bulk of their membership hailed from outside the targeted local communities. Moreover, these online groups quickly devolved to hotbeds of conspiracy theories, malign information, and hate speech (Finkelstein et al.

FCJ-156 Hacking the Social: Internet Memes, Identity Antagonism, and the Logic of Lulz

The Fibreculture Journal, issn: 1449-1443. DIGITAL MEDIA + NETWORKS + TRANSDISCIPLINARY CRITIQUE. Issue 22, 2013: Trolls and the Negative Space of the Internet. FCJ-156 Hacking the Social: Internet Memes, Identity Antagonism, and the Logic of Lulz. Ryan M. Milner, College of Charleston. Abstract: 4chan and reddit are participatory media collectives undergirded by a “logic of lulz” that favours distanced irony and critique. It often works at the expense of core identity categories like race and gender. However, the logic need not be entirely counterproductive to public discourse. Provided that diverse identities find voice instead of exclusion, these sites may facilitate vibrant, agonistic discussion instead of disenfranchising antagonism. In order to assess this potential for productive agonism, I undertook a critical discourse analysis of these collectives. Emphasising the image memes they produce, I evaluated discourses on race and gender. Both race and gender representations were dominated by familiar stereotypes and partial representations. However, while dissenting perspectives on race were repressed or excluded, dissenting perspectives on gender were vocalised and contested. The ‘logic of lulz’ facilitated both dominance and counter, each articulated with heavy reliance on irony and critique. This logic ambiguously balanced agonism and antagonism, but contestation provided sharper engagement than repression. ‘A troll exploits social dynamics like computer hackers exploit security loopholes…’ (Adrian Chen, 2012 October 12) In October 2012, reddit – a popular link aggregation service and public discussion forum – was embroiled in a prominent controversy. Adrian Chen, a journalist for the news site Gawker, had just revealed the ‘offline’ identity of Violentacrez, one of reddit’s ‘most reviled characters but also one of its most beloved users’ (Chen, 2012 October 12).

The Weaponization of Twitter Bots in the Gulf Crisis

International Journal of Communication 13(2019), 1389–1415 1932–8036/20190005 Propaganda, Fake News, and Fake Trends: The Weaponization of Twitter Bots in the Gulf Crisis MARC OWEN JONES Hamad bin Khalifa University, Qatar1 To address the dual need to examine the weaponization of social media and the nature of non-Western propaganda, this article explores the use of Twitter bots in the Gulf crisis that began in 2017. Twitter account-creation dates within hashtag samples are used as a primary indicator for detecting Twitter bots. Following identification, the various modalities of their deployment in the crisis are analyzed. It is argued that bots were used during the crisis primarily to increase negative information and propaganda from the blockading countries toward Qatar. In terms of modalities, this study reveals how bots were used to manipulate Twitter trends, promote fake news, increase the ranking of anti-Qatar tweets from specific political figures, present the illusion of grassroots Qatari opposition to the Tamim regime, and pollute the information sphere around Qatar, thus amplifying propaganda discourses beyond regional and national news channels. Keywords: bots, Twitter, Qatar, computational propaganda, Gulf crisis, Middle East The Gulf Crisis: Propaganda, Twitter, and Pretexts The recent Gulf crisis, in which Qatar was isolated by Saudi Arabia, Egypt, Bahrain, and the United Arab Emirates (UAE), has been accompanied by a huge social media propaganda campaign. This isolation, termed by some as “the blockade,” is an important opportunity for scholars of global communications to address what Oliver Boyd-Barrett (2017) calls the “imperative” need to explore “characteristics of non-Western propaganda” (p.