Contrast Sensitivity and Vision-Related Quality of Life Assessment in the Pediatric Low Vision Setting

Recommended publications

Bass – Glaucomatous-Type Field Loss Not Due to Glaucoma

Glaucoma on the Brain! Yes, we see lots of glaucoma, but not every field that looks like glaucoma is due to glaucoma. If you misdiagnose glaucoma, you could miss other sight-threatening and life-threatening disorders. Glaucomatous-Type Field Loss Not Due to Glaucoma. Sherry J. Bass, OD, FAAO, SUNY College of Optometry, New York, NY. Types of glaucomatous visual field defects: paracentral defects; nasal step defects; arcuate and Bjerrum defects; altitudinal defects; peripheral field constriction to tunnel fields. Visual field defects in very early glaucoma: paracentral loss; early superior/inferior temporal RNFL and rim loss (short axons); arcuate defects above or below the papillomacular bundle; arcuate field loss in the nasal field close to fixation; superotemporal notch. Visual field defects in early glaucoma: nasal step; more widespread RNFL loss and rim loss in the inferior or superior temporal rim tissue (longer axons); loss stops abruptly at the horizontal raphe, giving a "step" pattern. Visual field defects in moderate glaucoma: arcuate (Bjerrum) scotoma; focal notches in the inferior and/or superior rim tissue that reach the edge of the disc; denser field defects that follow an arcuate pattern connected to the blind spot. Visual field defects in advanced glaucoma: dense altitudinal loss; progressive loss of superior or inferior rim tissue. End-stage glaucoma: peripheral constriction; loss of temporal rim tissue; temporal "islands". Non-glaucomatous etiology of paracentral field loss: hereditary macular diseases; Stargardt's macular…

Optic Nerve Hypoplasia Plus: a New Way of Looking at Septo-Optic Dysplasia

Optic Nerve Hypoplasia Plus: A New Way of Looking at Septo-Optic Dysplasia. Item type: text; electronic thesis. Author: Mohan, Prithvi Mrinalini. Publisher: The University of Arizona. Rights: Copyright © is held by the author. Digital access to this material is made possible by the University Libraries, University of Arizona. Further transmission, reproduction or presentation (such as public display or performance) of protected items is prohibited except with permission of the author. Download date: 29/09/2021 22:50:06. Item license: http://rightsstatements.org/vocab/InC/1.0/. Link to item: http://hdl.handle.net/10150/625105. A thesis submitted to The Honors College in partial fulfillment of the Bachelor's degree with Honors in Physiology, The University of Arizona, May 2017. Approved by Dr. Vinodh Narayanan, Center for Rare Childhood Disorders. Abstract: Septo-optic dysplasia (SOD) is a rare congenital disorder that affects 1 in 10,000 live births. At its core, SOD is a disorder resulting from improper embryological development of midline brain structures. To date, there is no comprehensive understanding of the etiology of SOD. Currently, SOD is diagnosed based on the presence of at least two of the following three factors: (i) optic nerve hypoplasia, (ii) improper pituitary gland development and endocrine dysfunction, and (iii) midline brain defects, including agenesis of the septum pellucidum and/or corpus callosum. A literature review of existing research on the disorder was conducted, and the medical histories and genetic data of 6 patients diagnosed with SOD were reviewed to find damaging variants.

TUBB3 M323V Syndrome Presents with Infantile Nystagmus

Genes, Case Report. TUBB3 M323V Syndrome Presents with Infantile Nystagmus. Soohwa Jin¹, Sung-Eun Park², Dongju Won³, Seung-Tae Lee³, Sueng-Han Han² and Jinu Han⁴,*. ¹Department of Ophthalmology, Yonsei University College of Medicine, Seoul 03722, Korea; ²Department of Ophthalmology, Institute of Vision Research, Severance Hospital, Yonsei University College of Medicine, Seoul 03722, Korea; ³Department of Laboratory Medicine, Severance Hospital, Yonsei University College of Medicine, Seoul 03722, Korea; ⁴Department of Ophthalmology, Institute of Vision Research, Gangnam Severance Hospital, Yonsei University College of Medicine, Seoul 06273, Korea. *Correspondence: Tel.: +82-2-2019-3445. Abstract: Variants in the TUBB3 gene, one of the tubulin-encoding genes, are known to cause congenital fibrosis of the extraocular muscles type 3 and/or malformations of cortical development. Herein, we report a case of a 6-month-old infant with the c.967A>G:p.(M323V) variant in the TUBB3 gene who had only infantile nystagmus without other ophthalmological abnormalities. Subsequent brain magnetic resonance imaging (MRI) revealed cortical dysplasia. Neurological examinations did not reveal gross or fine motor delay, which is inconsistent with the clinical characteristics of patients with M323V syndrome reported so far. Protein modeling showed that the M323V mutation in TUBB3 interferes with αβ-heterodimer formation with TUBA1A.

Multiple Ocular Developmental Defects in Four Closely Related Alpacas

Veterinary Ophthalmology (2018) 1–8. DOI: 10.1111/vop.12540. Case Report. Multiple ocular developmental defects in four closely related alpacas. Kelly E. Knickelbein,* David J. Maggs,§ Christopher M. Reilly,†,1 Kathryn L. Good*,2 and Juliet R. Gionfriddo‡,3. *The Veterinary Medical Teaching Hospital, University of California, Davis, CA 95616, USA; §Department of Surgical and Radiological Sciences, University of California, Davis, CA 95616, USA; †Department of Pathology, Microbiology, and Immunology, University of California, Davis, CA 95616, USA; and ‡The College of Veterinary Medicine and Biomedical Sciences, Colorado State University, Fort Collins, CO 80528, USA. Address communications to: D. J. Maggs, Tel.: (530) 752-3937, Fax: (530) 752-6042. Present addresses: 1Insight Veterinary Specialty Pathology, Austin, TX 78752, USA; 2Department of Surgical and Radiological Sciences, University of California, Davis, CA 95616, USA; 3Red Feather Lakes, CO 80545, USA.

Abstract. Objective: To describe the clinical, gross pathologic, and histopathologic findings for a visually impaired 5.8-year-old female alpaca with multiple ocular abnormalities, as well as the clinical findings for three closely related alpacas. Animals studied: Four alpacas. Procedures: Ophthalmic examination was performed on a 16-month-old female alpaca following observation of visual impairment while hospitalized for an unrelated illness. Following acute systemic decline and death 4.5 years later, the alpaca's brain, optic nerves, and eyes were examined grossly and histologically. Ophthalmic examination of three closely related alpacas was subsequently performed. Results: The 16-month-old female alpaca (Alpaca 1) had ophthalmoscopic findings suggestive of a coloboma or hypoplasia of the retinal pigment epithelium (RPE) and choroid, and suspected optic nerve hypoplasia OU.

Follow-up Study on Premature Infants with and without Retinopathy of Prematurity

British Journal of Ophthalmology 1993; 77: 91-94. Follow-up study on premature infants with and without retinopathy of prematurity. Rosemary Robinson, Michael O'Keefe. First published as 10.1136/bjo.77.2.91 on 1 February 1993.

Abstract: The ocular complications in a population of 131 premature infants, with and without retinopathy of prematurity (ROP), are reported. An increased incidence of strabismus (20% with ROP and 25% without ROP) and myopia (27.5% with ROP and 8.8% without ROP) was shown. Significant visual loss occurred in 10.7% overall, increasing to 35% with stage 3 disease and 100% with stage 4. With the increased survival rate of premature infants, the relevance to future management of this expanding group of young people is considered.

… first screened at 6 weeks. When discharged from the neonatal unit, they continue to attend for follow-up at the Children's Hospital. Follow-up is determined by the degree of vascularisation of the retina. If the retina is fully vascularised, the child is seen again at 3 months and then yearly until age 5 years, unless strabismus or amblyopia develops. If ROP is diagnosed, assessment is every 3-4 weeks if stage 1-2 is present and weekly if stage 3. If stage 3 threshold disease is noted, cryopexy is applied. After treatment, all infants are seen at 3-monthly intervals for the first year and every 6 months for 5 years, then annually. We classified ROP according to the international classification [7] and defined significant ROP as stage 3 or 4 disease.
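The review rules in this excerpt amount to a small decision procedure. The Python sketch that follows encodes only the cases the excerpt spells out; the function name and parameters are hypothetical, the "unless strabismus or amblyopia develops" exception is not modelled, and the sketch illustrates the text rather than serving as clinical guidance.

```python
def rop_review_schedule(fully_vascularised: bool, stage: int = 0,
                        threshold: bool = False, treated: bool = False) -> str:
    """Return the review plan described in the 1993 excerpt (illustrative only).

    stage: ROP stage, with 0 meaning no ROP diagnosed (hypothetical encoding).
    Cases the excerpt does not describe return a 'not specified' message.
    """
    if treated:
        # Post-treatment schedule applies regardless of the original stage.
        return "every 3 months for the first year, every 6 months for 5 years, then annually"
    if stage == 3 and threshold:
        return "cryopexy"
    if stage == 3:
        return "weekly"
    if stage in (1, 2):
        return "every 3-4 weeks"
    if stage == 0 and fully_vascularised:
        return "at 3 months, then yearly until age 5"
    # e.g. stage 4, or incomplete vascularisation without ROP
    return "not specified in the excerpt"


if __name__ == "__main__":
    print(rop_review_schedule(fully_vascularised=False, stage=2))
```

Keeping the post-treatment branch first mirrors the excerpt, where treated infants move to a fixed schedule whatever their earlier stage.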

I. Infants with Poor Vision

AAPOS Genetic Eye Disease Workshop 2019. I. Infants with poor vision. 1. Infant with poor vision without nystagmus (Debbie Costakos).

Case #1: A baby girl born at 27 weeks CGA (corrected gestational age), birth weight (BW) 1050 g, was referred at age 30 weeks for ROP. The ROP resolved spontaneously, but the patient was noted to have poor eye contact and no fix and follow at 6 months of age. PMHx was significant for prematurity, intraventricular hemorrhage (grade 3) and periventricular leukomalacia. FHx was negative for poor vision. Alignment was orthotropic. CRx was +3.00 sphere in both eyes. DFE revealed normal optic nerves, macula and vessels.

Case #2: A 6-month-old baby girl was referred at age 6 months for lack of eye contact and no fix and follow. PMHx was not significant. FHx was negative for poor vision, strabismus or developmental delay. Visual acuity was blinks to light in both eyes. Alignment was variable intermittent exotropia up to 40 PD X(T). CRx was +5.00 +1.00 x 90 and +4.50 +2.00 x 90. DFE revealed a poor foveal reflex in both eyes with a blond fundus.

Use clinical findings, history and family history to guide the workup. Differential diagnosis for an infant with poor vision and no nystagmus may include: delayed visual maturation (DVM); cortical visual impairment (CVI); albinism; seizure disorder; metabolic disorders; prematurity with PVL or other CNS involvement (with or without ROP). Note: strabismus alone is not a diagnosis for bilateral poor vision. Imaging tools are available. Workup to consider: OCT ("normal" appearance varies by age); ERG; referral to other specialists; brain MRI; genetic testing, or not, depending on the differential diagnosis and probable yield.

Negative Sinus Pressure and Normal Predisease Imaging in Silent Sinus

Case Reports and Small Case Series. Increase of Intraocular Pressure After Topical Administration of Prostaglandin Analogs. Several prostaglandins have been demonstrated to reduce intraocular pressure (IOP) in normal, hypertensive, and glaucomatous eyes [1-3]. Two different prostaglandin analogs are commercially available: unoprostone (Rescula; Ciba Vision Ophthalmics, Duluth, Ga) and latanoprost (Xalatan; Pharmacia Inc, Columbus, Ohio). We observed an inverse reaction after topical administration of both analogs.

Report of a Case. A 29-year-old woman had retinitis pigmentosa with typical ophthalmoscopic findings, a ring scotoma, and a flat electroretinogram. Juvenile glaucoma was diagnosed at the age of 12 years. Because of the characteristic malformation of the anterior segment, it was classified as Rieger syndrome. The initial IOP at the time of glaucoma detection was 50 mm Hg. … of treatment with unoprostone, the IOP returned to 15 mm Hg. During the following weeks the IOP again ranged between 1 and 35 mm Hg. Five months after this trial with unoprostone, another prostaglandin analog, latanoprost, became available. At this time, the IOP again was about 30 mm Hg despite maximum tolerated … and visual acuity increased to 6/20 (Figure). There were no signs of acute anterior segment inflammation after the prostaglandin applications. A marked atrophy of the ciliary body was observed with high-resolution ultrasound biomicroscopy.

[Figure: Time course of intraocular pressure (IOP, mm Hg) for both eyes (OD, OS), October 1996 to October 1997, with unoprostone, latanoprost, and cyclophotocoagulation marked. Arrows indicate application of prostaglandin derivatives or cyclophotocoagulation, only of the left eye.]

Common Childhood Eye Conditions

September 2020. Common childhood eye conditions. There is a huge variety of different eye conditions, and each one affects vision and individuals in different ways. We've listed information on some of the more common visual impairments in children in this ebook.

Albinism is a group of genetic disorders in which the affected individual has reduced or absent pigmentation in the eyes, skin and hair. Children with albinism find that their greatest problems arise on sunny days and in brightly lit environments (photophobia). Virtually everyone with albinism has nystagmus (fast 'to and fro' movements of the eyes). There are two main types of albinism: Ocular Albinism mainly affects the eyes of the child, while skin and hair may only be a little lighter than those of other family members; Oculo-Cutaneous Albinism affects the eyes, hair and skin to the extent that the child may have a very fair, almost white appearance. Children with albinism have very short sight that cannot be fully corrected by wearing glasses. Find out more at www.albinism.org.uk

Amblyopia: this is sometimes called a 'lazy' eye. It means that an eye has a decrease in vision which cannot be corrected with spectacles. It is usually caused by an eye turn (strabismus/squint), so it's more likely that one eye is affected. It is important that a young child's squint is treated…

Aniridia is a rare congenital condition causing incomplete formation of the iris and loss of vision, usually affecting both eyes. Although the iris is not entirely absent, all that remains of it (the coloured part of the eye) is a thick collar of tissue around its outer edge.

What Is Optic Nerve Hypoplasia?

What is the optic nerve? The optic nerve is a collection of more than a million nerve fibers that transmit visual signals from the eye to the brain [See Figure 1]. The optic nerve develops during the first trimester of intrauterine life. Fig. 1: Normal optic nerve.

What is optic nerve hypoplasia? Optic nerve hypoplasia (ONH) is a congenital condition in which the optic nerve is underdeveloped (small) [See Figure 2]. Fig. 2: Optic nerve hypoplasia.

How is optic nerve hypoplasia diagnosed? The diagnosis of ONH is typically made by the appearance of a small/pale optic nerve during a dilated eye exam. It is difficult to predict visual acuity potential based on the optic nerve appearance.

What causes optic nerve hypoplasia? Most cases of ONH have no clearly identifiable cause. There are no known racial or socioeconomic factors in the development of ONH, nor is there a known association with exposure to pesticides. ONH has been associated with maternal ingestion of phenytoin, quinine, and LSD, as well as with fetal alcohol syndrome.

What visual problems are associated with optic nerve hypoplasia? Vision impairment from ONH ranges from mild to severe and may affect one or both eyes. Nystagmus (shaking of the eyes) may be seen in both unilateral and bilateral cases. The incidence of strabismus is increased with ONH.

Is optic nerve hypoplasia associated with non-visual problems? Optic nerve hypoplasia can be associated with central nervous system (CNS) malformations, which put the patient at risk for other problems, including seizure disorder and developmental delay. Hormone deficiencies occur in most children, regardless of associated midline brain abnormalities or pituitary gland abnormalities on MRI.

Local Coverage Determination for Visual Electrophysiology Testing

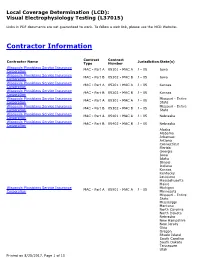

Local Coverage Determination (LCD): Visual Electrophysiology Testing (L37015). Links in PDF documents are not guaranteed to work. To follow a web link, please use the MCD Website.

Contractor Information

| Contractor Name | Contract Type | Contract Number | Jurisdiction | State(s) |
|---|---|---|---|---|
| Wisconsin Physicians Service Insurance Corporation | MAC - Part A | 05101 - MAC A | J - 05 | Iowa |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part B | 05102 - MAC B | J - 05 | Iowa |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part A | 05201 - MAC A | J - 05 | Kansas |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part B | 05202 - MAC B | J - 05 | Kansas |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part A | 05301 - MAC A | J - 05 | Missouri - Entire State |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part B | 05302 - MAC B | J - 05 | Missouri - Entire State |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part A | 05401 - MAC A | J - 05 | Nebraska |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part B | 05402 - MAC B | J - 05 | Nebraska |
| Wisconsin Physicians Service Insurance Corporation | MAC - Part A | 05901 - MAC A | J - 05 | Alaska, Alabama, Arkansas, Arizona, Connecticut, Florida, Georgia, Iowa, Idaho, Illinois, Indiana, Kansas, Kentucky, Louisiana, Massachusetts, Maine, Michigan, Minnesota, Missouri - Entire State, Mississippi, Montana, North Carolina, North Dakota, Nebraska, New Hampshire, New Jersey, Ohio, Oregon, Rhode Island, South Carolina, South Dakota, Tennessee, Utah, Virginia, … |

Printed on 8/25/2017.

Glaucoma and Frequency of Ocular and General Diseases in 30 Patients with Aniridia: a Clinical Study

Eur J Ophthalmol 2012; 22(1): 104-110. DOI: 10.5301/EJO.2011.8318. Original Article. Glaucoma and frequency of ocular and general diseases in 30 patients with aniridia: a clinical study. Eugen Gramer¹*, Constantin Reiter¹*, Gwendolyn Gramer². ¹Department of Ophthalmology, University Würzburg, Würzburg, Germany; ²University Hospital for Pediatric and Adolescent Medicine, University Heidelberg, Heidelberg, Germany.

PURPOSE. To evaluate the following in patients with aniridia: age at first examination at the University Eye Hospital and age at diagnosis of glaucoma; visual acuity; frequency of family history of aniridia; and frequency of ocular and general diseases associated with aniridia. METHODS. This was a consecutive examination of 30 unrelated patients with aniridia and retrospective evaluation of ophthalmologic, pediatric, and internal findings. The relative frequency of age at glaucoma diagnosis within decades was evaluated for the 20 patients with aniridia and glaucoma. Statistical analysis was performed using the Mann-Whitney test. RESULTS. The relative frequency of age at glaucoma diagnosis, by decade, was: birth to 9 years, 15%; 10-19 years, 15%; 20-29 years, 15%; 30-39 years, 15%; 40-49 years, 35%; and 50-59 years, 5%. Visual acuity in the better eye of 20/100 or less was found in 60%. A family history of aniridia was found in 33.3% of patients, with 1-4 relatives with aniridia. A total of 76.7% of patients had congenital cataract, and 66.7% had glaucoma. Mean maximum intraocular pressure of the 20 patients with glaucoma was 35.9 mmHg in the right eye and 32.6 mmHg in the left eye.
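Because the percentages in this abstract are rounded, it can help to back-calculate the whole-number counts they imply. The Python sketch that follows assumes the decade percentages refer to the 20 patients with glaucoma (as the Methods state) and that the family-history, cataract, and glaucoma percentages refer to all 30 patients; the variable and function names are illustrative, not from the paper.

```python
# Percentages as printed in the abstract; names are illustrative.
AGE_AT_GLAUCOMA_DIAGNOSIS_PCT = {
    "0-9": 15, "10-19": 15, "20-29": 15, "30-39": 15, "40-49": 35, "50-59": 5,
}
N_GLAUCOMA = 20  # patients with aniridia and glaucoma
N_TOTAL = 30     # unrelated patients with aniridia (assumed denominator below)


def implied_count(percent: float, n: int) -> int:
    """Back-calculate the whole-number count behind a rounded percentage."""
    return round(percent * n / 100)


decade_counts = {
    decade: implied_count(pct, N_GLAUCOMA)
    for decade, pct in AGE_AT_GLAUCOMA_DIAGNOSIS_PCT.items()
}
cohort_counts = {
    "family history of aniridia": implied_count(33.3, N_TOTAL),
    "congenital cataract": implied_count(76.7, N_TOTAL),
    "glaucoma": implied_count(66.7, N_TOTAL),
}

if __name__ == "__main__":
    print(decade_counts)
    print(cohort_counts)
    # The decade bins should account for every patient with glaucoma,
    # and 66.7% of 30 should match the 20 glaucoma patients in the Methods.
    assert sum(decade_counts.values()) == N_GLAUCOMA
    assert cohort_counts["glaucoma"] == N_GLAUCOMA
```

Under these assumptions the decade bins correspond to 3, 3, 3, 3, 7 and 1 patients (20 in total), and the cohort percentages to 10 patients with a family history, 23 with congenital cataract, and 20 with glaucoma.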

Congenital Disorders of the Optic Nerve: Excavations and Hypoplasia

Eye (2004) 18, 1038–1048. © 2004 Nature Publishing Group. www.nature.com/eye. Cambridge Ophthalmological Symposium. Congenital disorders of the optic nerve: excavations and hypoplasia. GN Dutton.

Abstract: The principal congenital abnormalities of the optic disc that can significantly impair visual function are excavation of the optic disc and optic nerve hypoplasia. The excavated optic disc abnormalities comprise optic disc coloboma, morning glory syndrome, and peripapillary staphyloma. Optic nerve hypoplasia manifests as a small optic nerve, which may or may not be accompanied by a peripapillary ring (the double ring sign). In addition, the optic disc cupping, which occurs as a sequel to some cases of periventricular leucomalacia, can arguably be classified as a type of optic nerve hypoplasia. All of these conditions can be unilateral or bilateral and can impair visual function mildly or severely. It is essential…

Excavations of the optic disc. Optic disc coloboma. Definition: Optic disc coloboma (Figure 1) comprises a clearly demarcated bowl-shaped excavation of the optic disc, which is typically decentred and deeper inferiorly. Aetiology: Coloboma of the optic disc is thought to result from abnormal fusion of the two sides of the proximal end of the optic cup [1]. The condition can occur in association with multiple congenital abnormalities indicative of an insult to the developing foetus during the sixth week of gestation [2]. Optic nerve coloboma may occur sporadically or be inherited with an autosomal dominant…